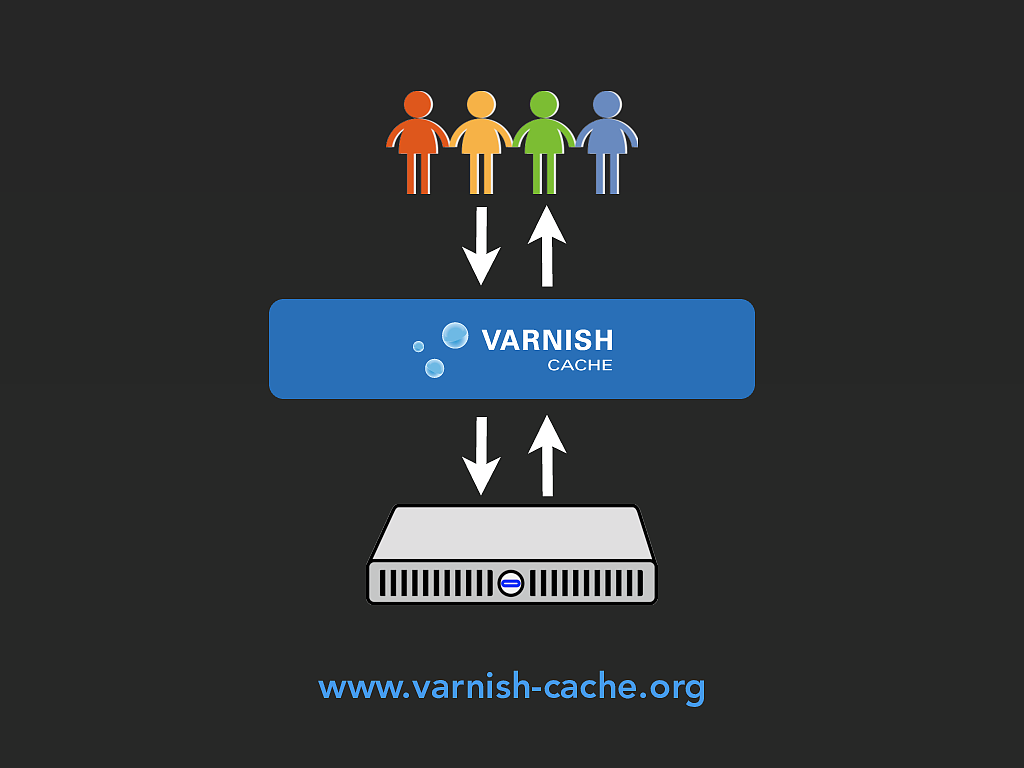

www.varnish-cache.org

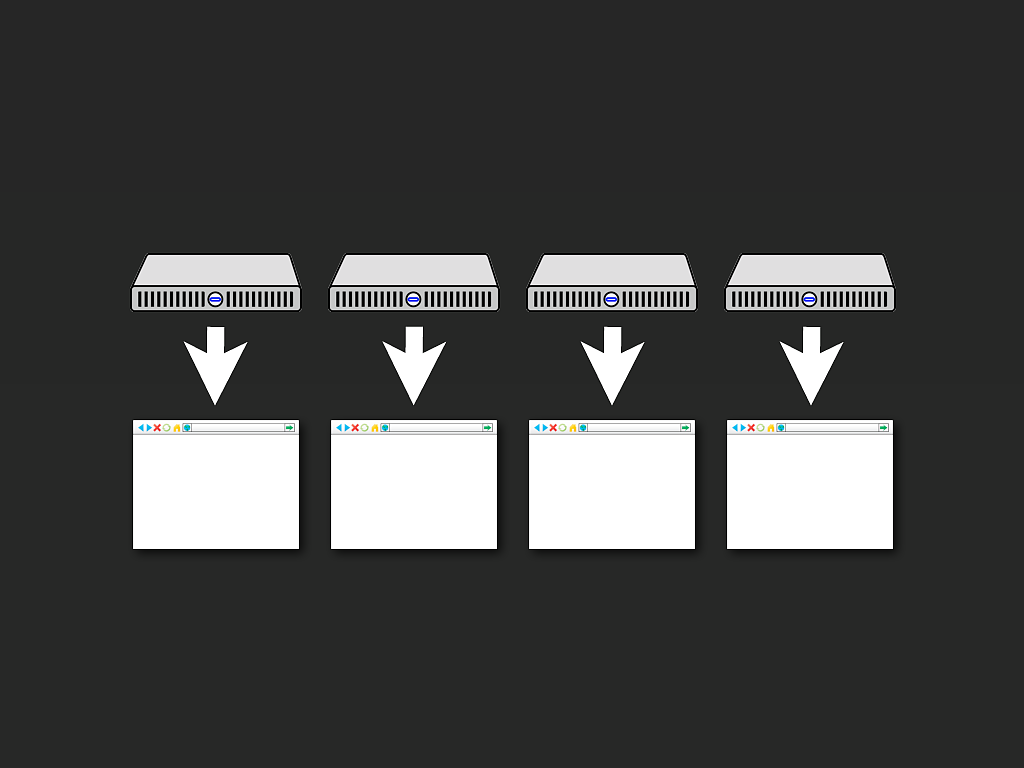

I’ve become a massive fan of Varnish of late. It’s an HTTP cache (or reverse proxy) that sits on port 80 in front of your web server. If the web server’s response is cacheable, it keeps a copy in memory (by default) and serves it up the next time that same page is requested. Done right, it can dramatically reduce the number of requests hitting your backend web server, whilst serving precompiled pages super-fast from memory.
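To make the idea concrete, here is a toy sketch of what a front-end cache does. It is not how Varnish is implemented; `TinyCache` and `demo_backend` are made-up names for illustration, and the "cacheable" flag stands in for whatever the real response headers would say.

```python
from typing import Callable, Dict, Tuple

# A backend returns (cacheable, body). Hypothetical shape, for illustration only.
Response = Tuple[bool, str]


class TinyCache:
    """Toy in-memory cache sitting in front of a backend callable."""

    def __init__(self, backend: Callable[[str], Response]) -> None:
        self.backend = backend
        self.store: Dict[str, str] = {}
        self.backend_hits = 0  # how many requests reached the backend

    def get(self, url: str) -> str:
        # Serve from memory if we already hold a copy of this page.
        if url in self.store:
            return self.store[url]
        # Otherwise ask the backend, and keep a copy only if allowed.
        self.backend_hits += 1
        cacheable, body = self.backend(url)
        if cacheable:
            self.store[url] = body
        return body


def demo_backend(url: str) -> Response:
    # Assumption: pages under /account are per-user and not cacheable.
    return (not url.startswith("/account"), f"<html>{url}</html>")
```

Requesting `/` twice through `TinyCache(demo_backend)` reaches the backend once, while every request for `/account` goes all the way through.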

Good use of Varnish can make your site much faster, but it is no silver bullet. The caveat “if the web server’s response is cacheable” turns out to be a very important one. You really need to design your site from the ground up to use a front-end cache in order to make the best use of it.

As soon as you’ve identified the user with a cookie (including something like a PHP session, which of course uses cookies), the request will hit your backend web server. Unless configured otherwise, that includes things like Google Analytics cookies, which of course means every request from any JavaScript-enabled browser. If you serve static assets (images, CSS, JavaScript) from the same domain, by default the cache will be bypassed for those too, as soon as a cookie is set. So you have to design for that.
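The cookie rule can be modelled roughly like this. It is a simplified Python sketch of the decision, not Varnish configuration (in a real setup this logic lives in VCL), and the function names and the list of analytics cookie prefixes are my own assumptions for illustration.

```python
import re
from typing import Dict

# Assumption: cookie names with these prefixes are analytics-only
# and never affect what the backend renders.
TRACKING_COOKIE = re.compile(r"^(__utm|_ga|_gid)")


def strip_tracking_cookies(cookie_header: str) -> str:
    """Return the Cookie header with analytics cookies removed."""
    kept = []
    for part in cookie_header.split(";"):
        part = part.strip()
        if not part:
            continue
        name = part.split("=", 1)[0]
        if not TRACKING_COOKIE.match(name):
            kept.append(part)
    return "; ".join(kept)


def can_serve_from_cache(method: str, headers: Dict[str, str]) -> bool:
    """Rough model: only anonymous GET/HEAD requests are cache candidates."""
    if method not in ("GET", "HEAD"):
        return False
    if "Authorization" in headers:
        return False
    # Any cookie left after stripping identifies the user, so go to the backend.
    return strip_tracking_cookies(headers.get("Cookie", "")) == ""
```

With this model, a request carrying only `_ga` and `_gid` cookies can still be answered from the cache, whereas one carrying `PHPSESSID` always goes to the backend. Serving static assets from a separate cookie-free domain sidesteps the question for those files entirely.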

So while Varnish will help to take the load and shorten response times on common pages like your site’s front page, you can’t rely on it as an end-all solution for speeding up a slow site. If your backend app is slow, your site will still be slow for a lot of requests.

It’s a bit like putting WP Super Cache on a WordPress site. It will mask the underlying issue to an extent, but it won’t solve the underlying problem.