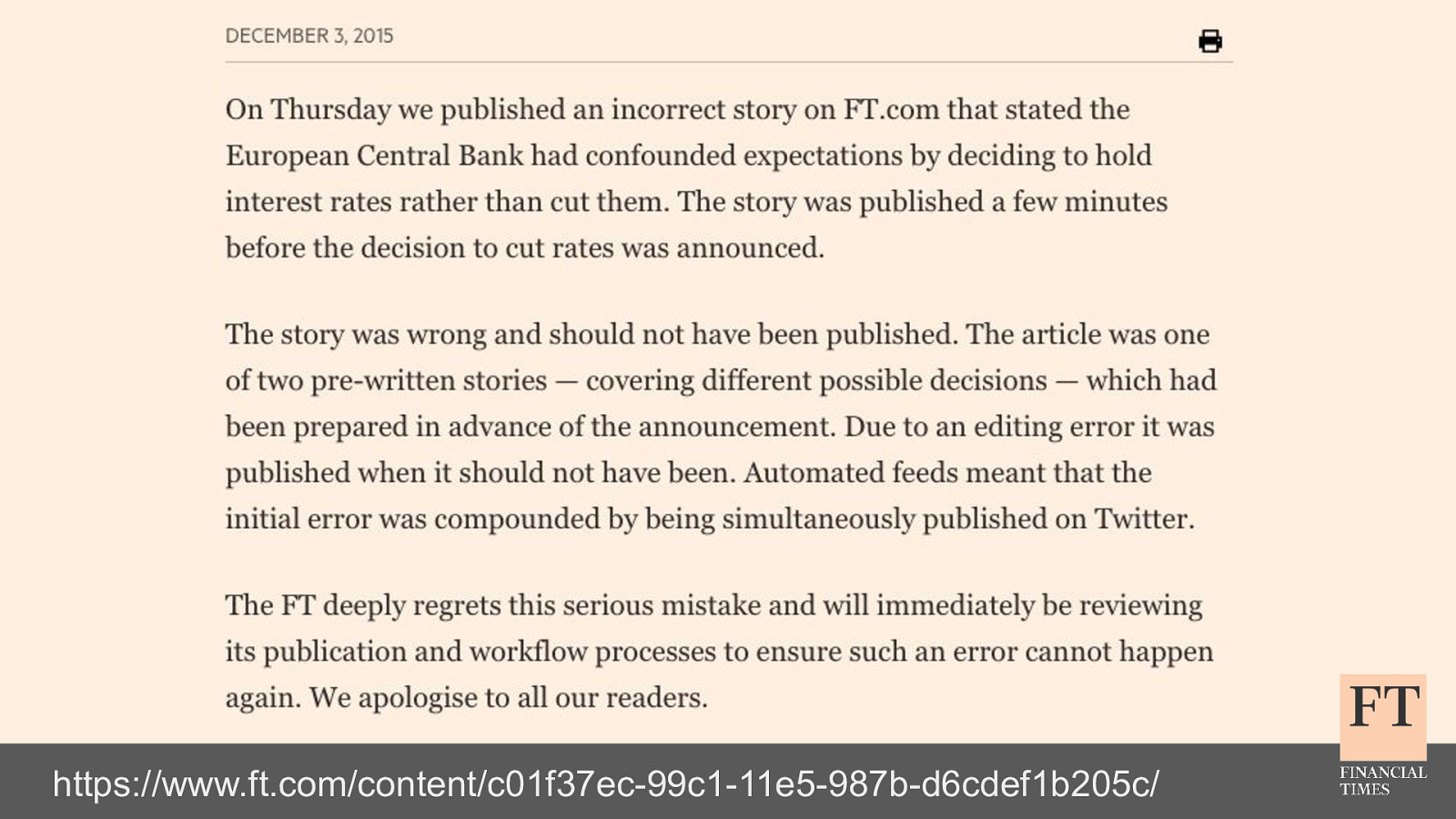

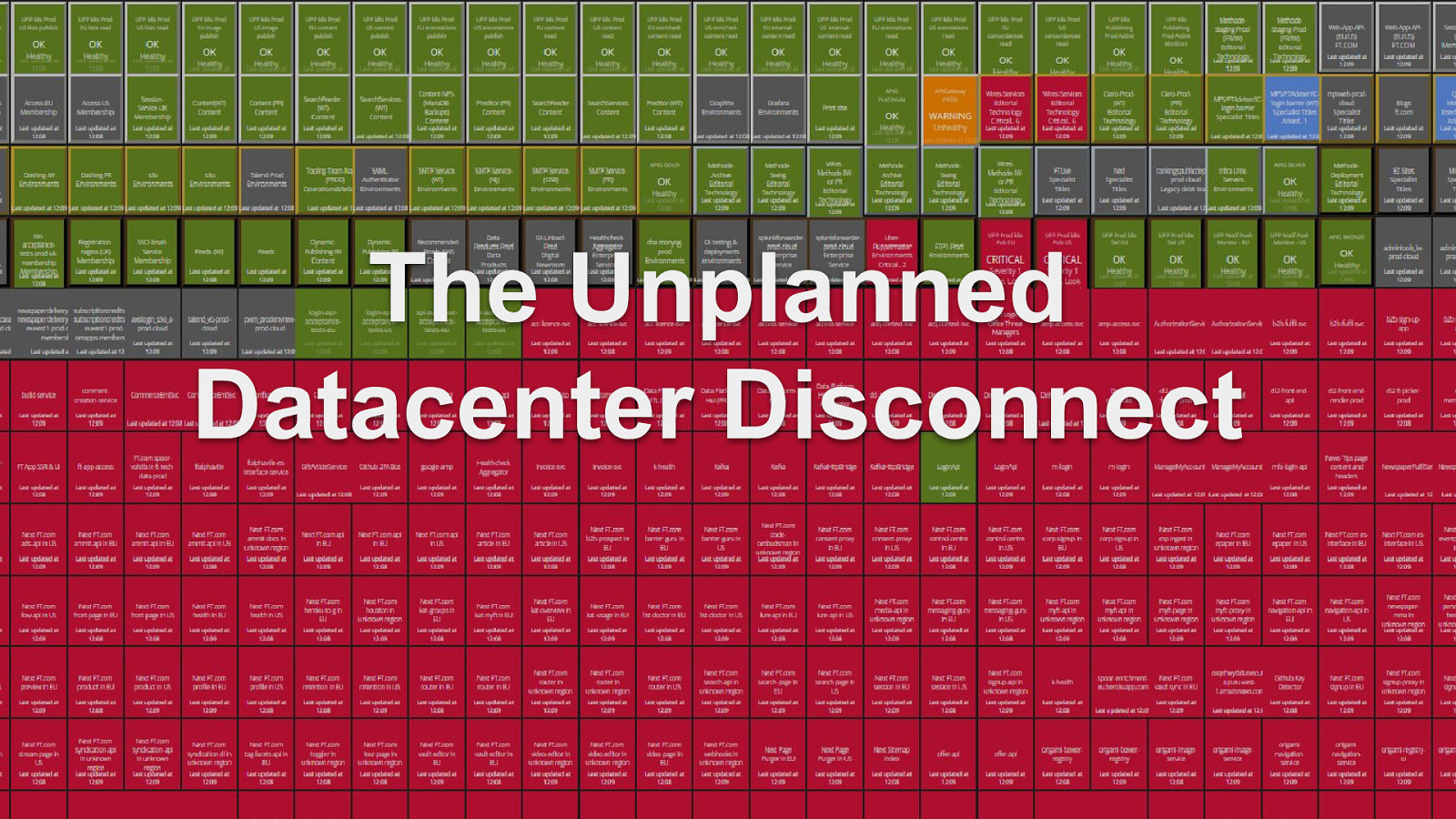

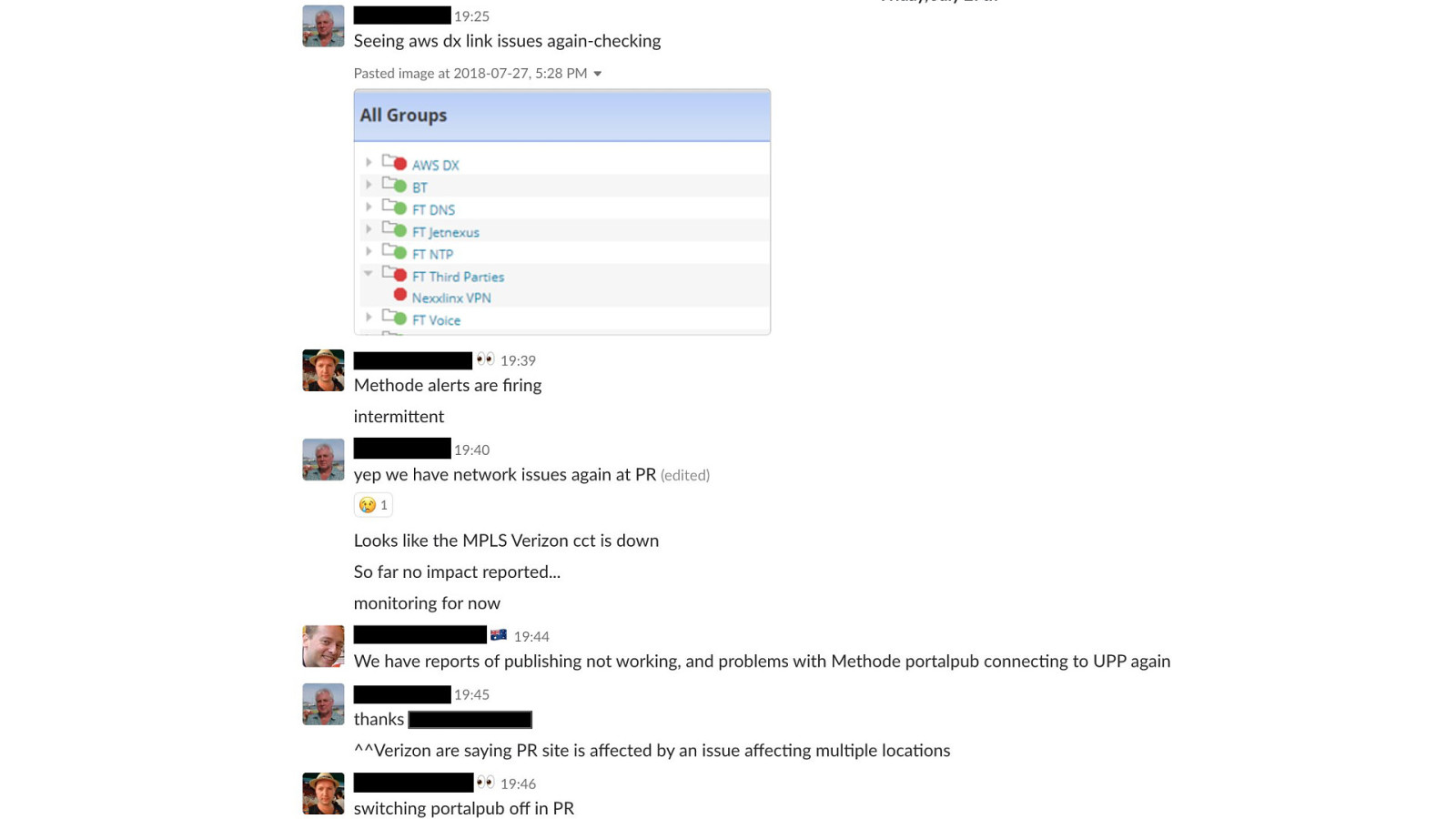

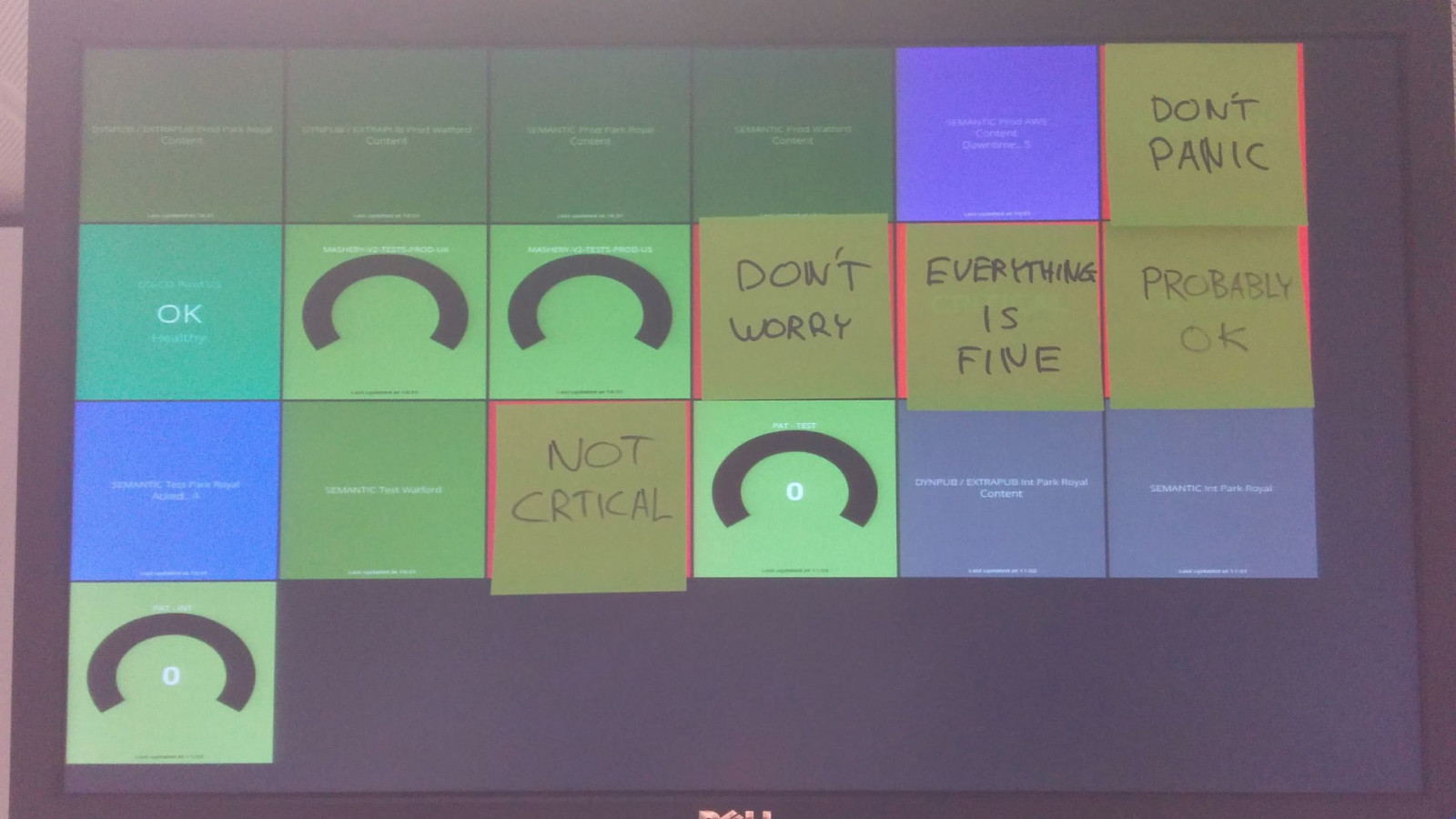

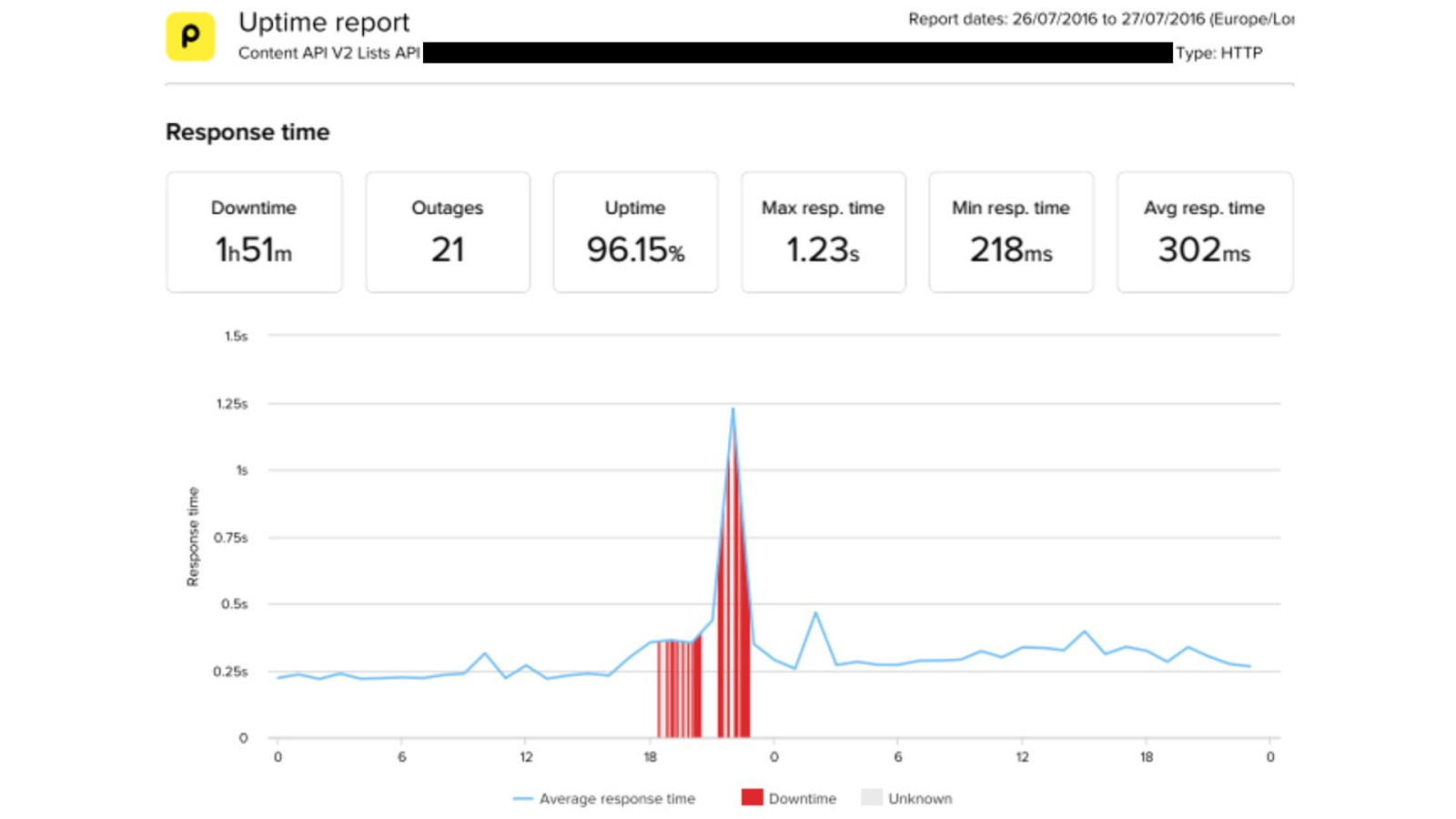

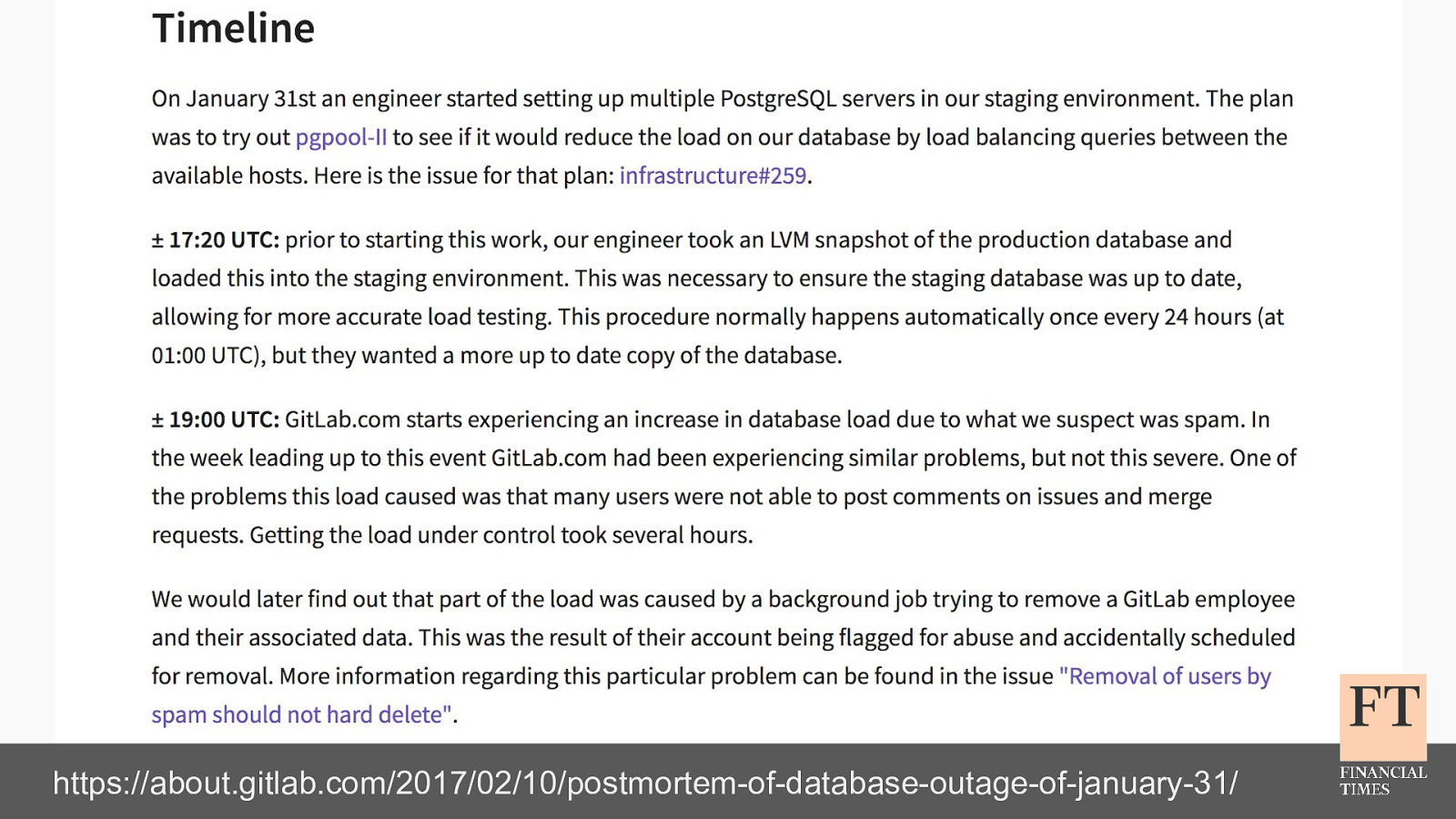

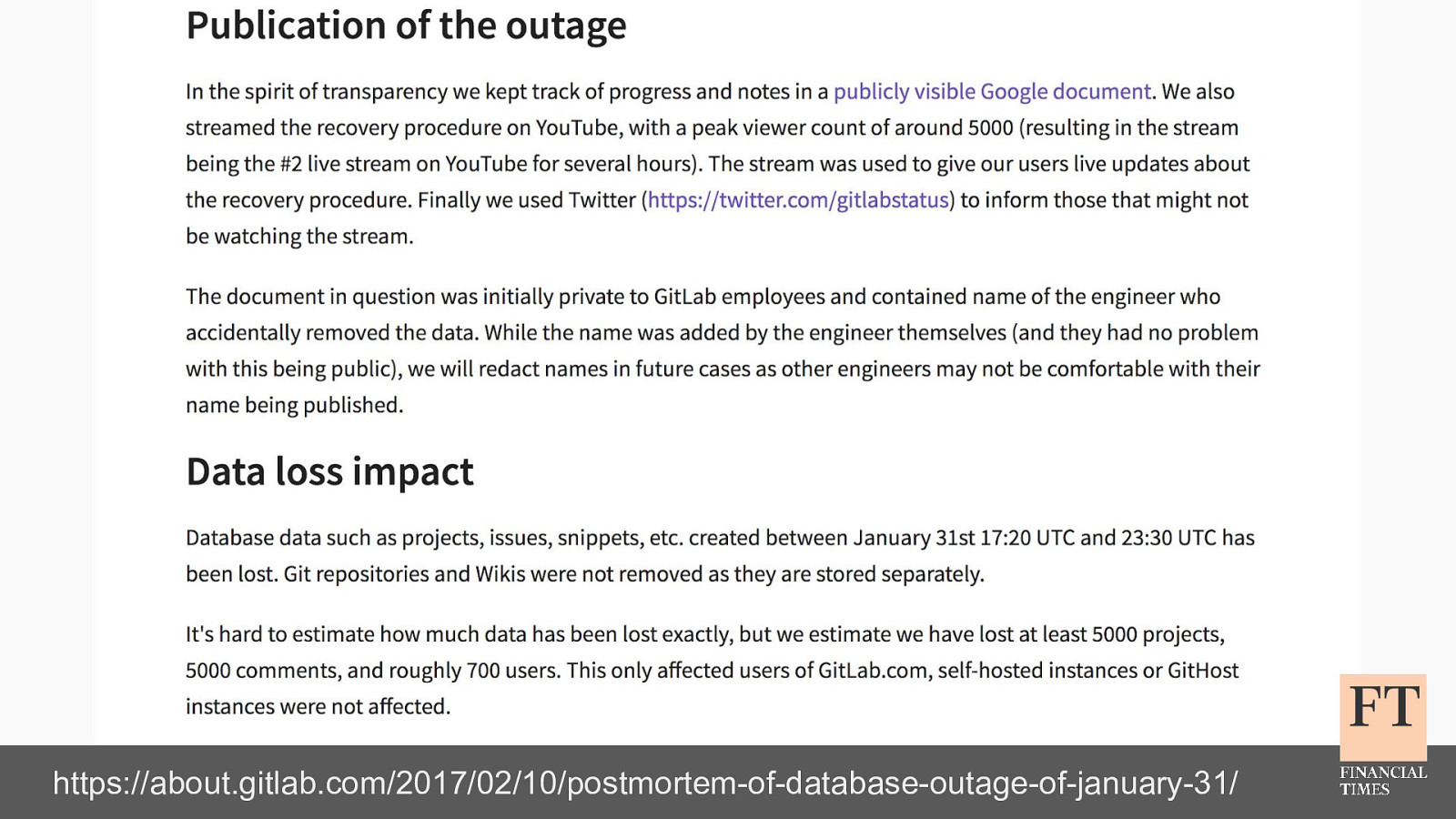

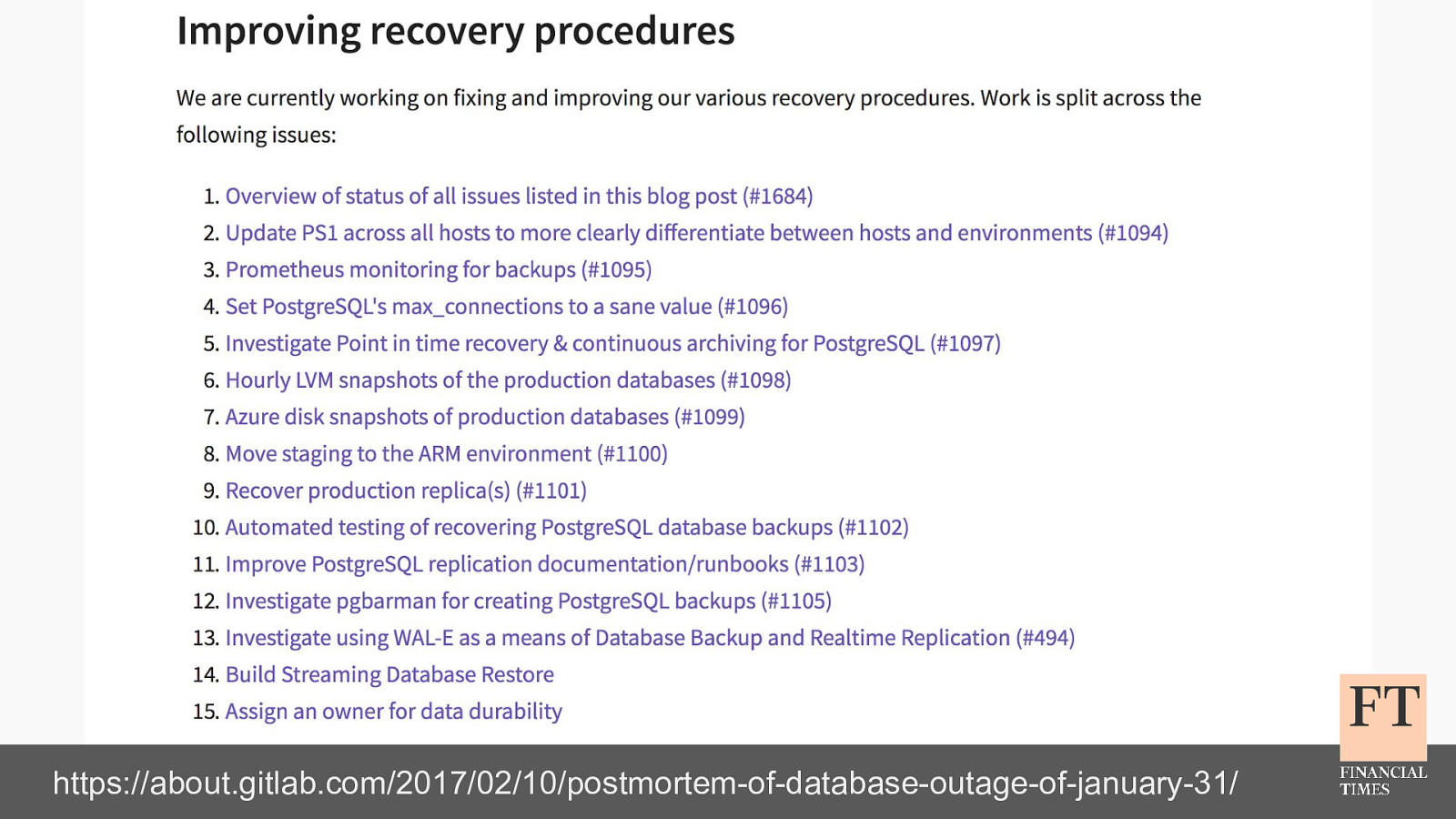

Don’t Panic! How to Cope Now You’re Responsible for Production

Euan Finlay @efinlay24

Hi! Thanks for the introduction. Despite this being a technical talk, the scariest production incident that I’ve been part of in my four years at the Financial Times wasn’t actually caused by anything in our technology stack