Small Technology

Laura Kalbag and Aral Balkan, Ind.ie @laurakalbag @aral

(These slides cover the first half of the talk. Small Technology: The Problem)

A presentation at Think About! in May 2019 in Cologne, Germany by Laura Kalbag

We feel like we know Facebook’s game. They show us adverts, we get to socialise for free. I don’t give Facebook much information, my public profile is barely filled out, I rarely Like or Share anything.

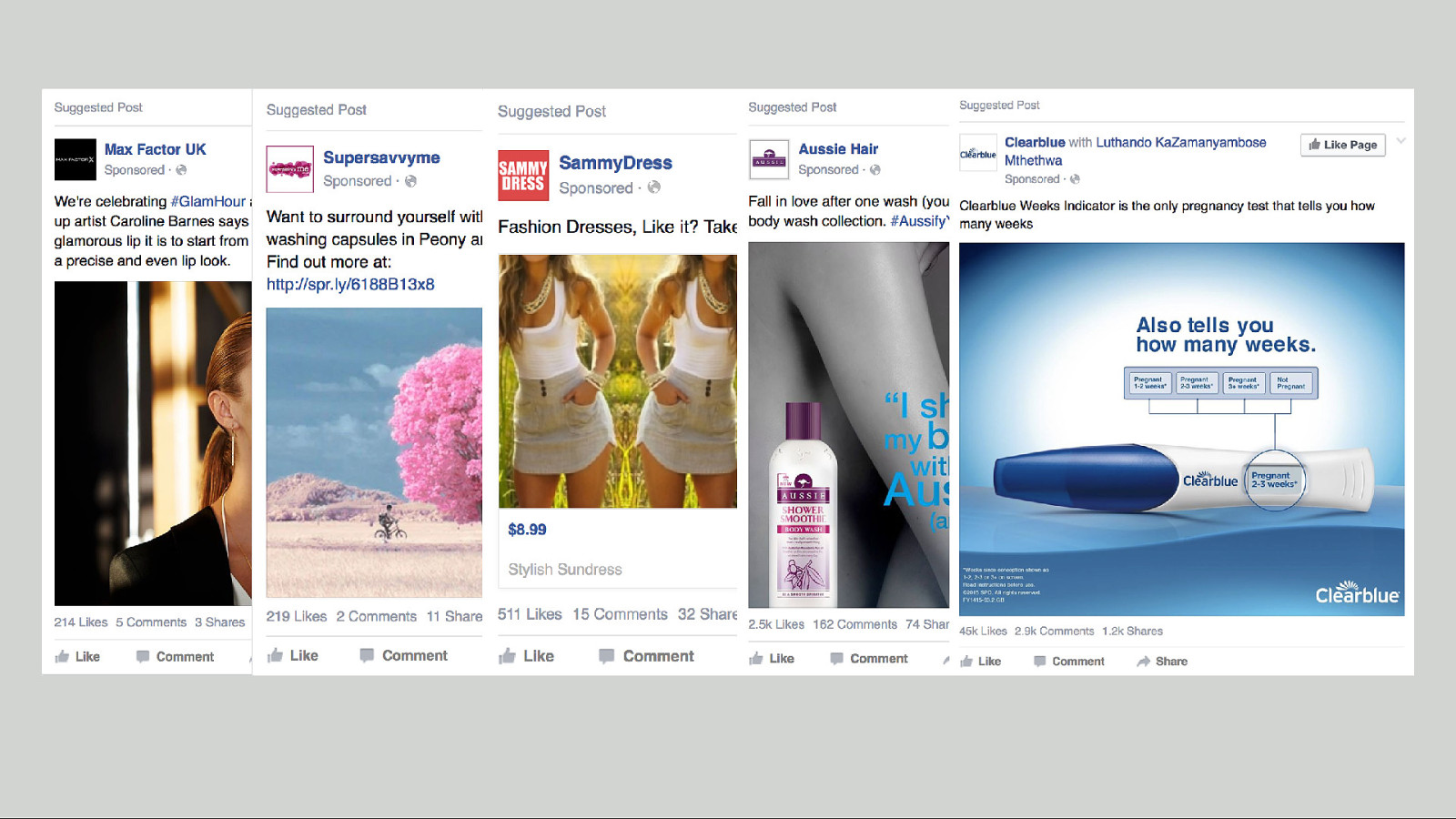

So I get ads aimed at a stereotypical woman in her early thirties. (Makeup, laundry capsules, shampoo, dresses, pregnancy tests…)

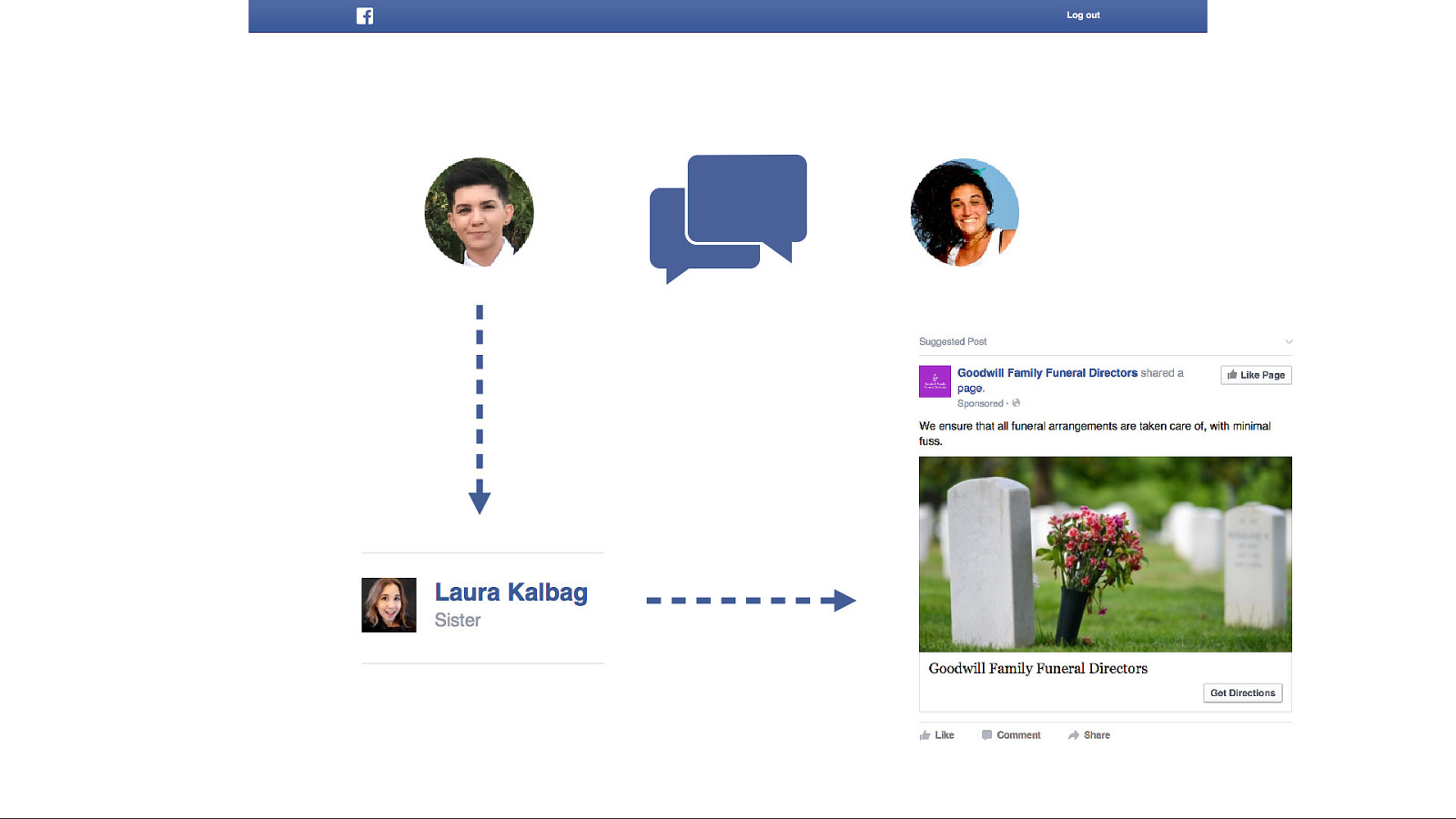

Then four years ago, my mum died. And then Facebook suggested I might be interested in this… Goodwill Family Funeral Directors. It’s quite specific but surely a coincidence?

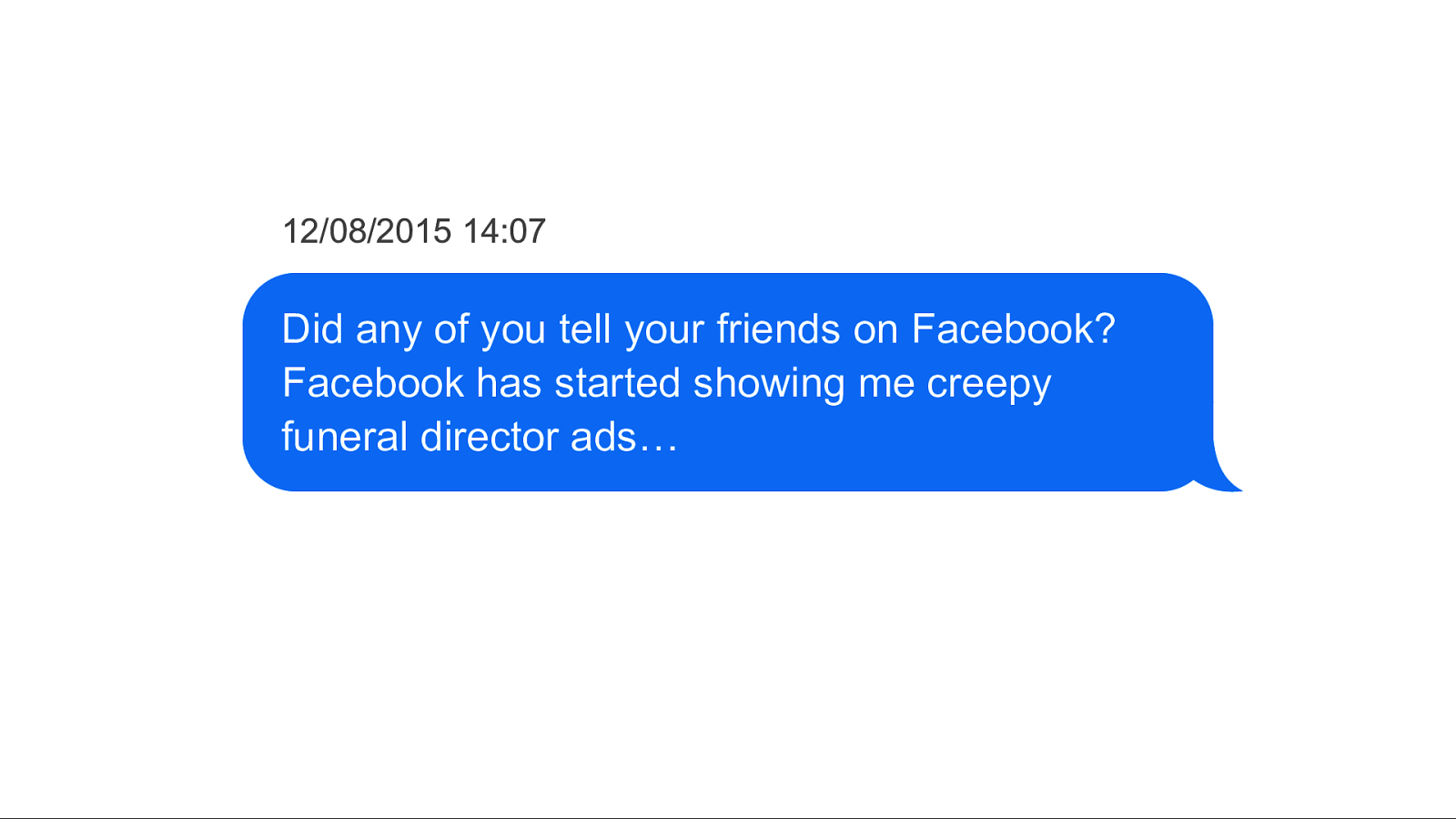

So I asked my siblings if any of them had posted something on Facebook… “Did any of you tell your friends on Facebook? Facebook has started showing me creepy funeral director ads…”

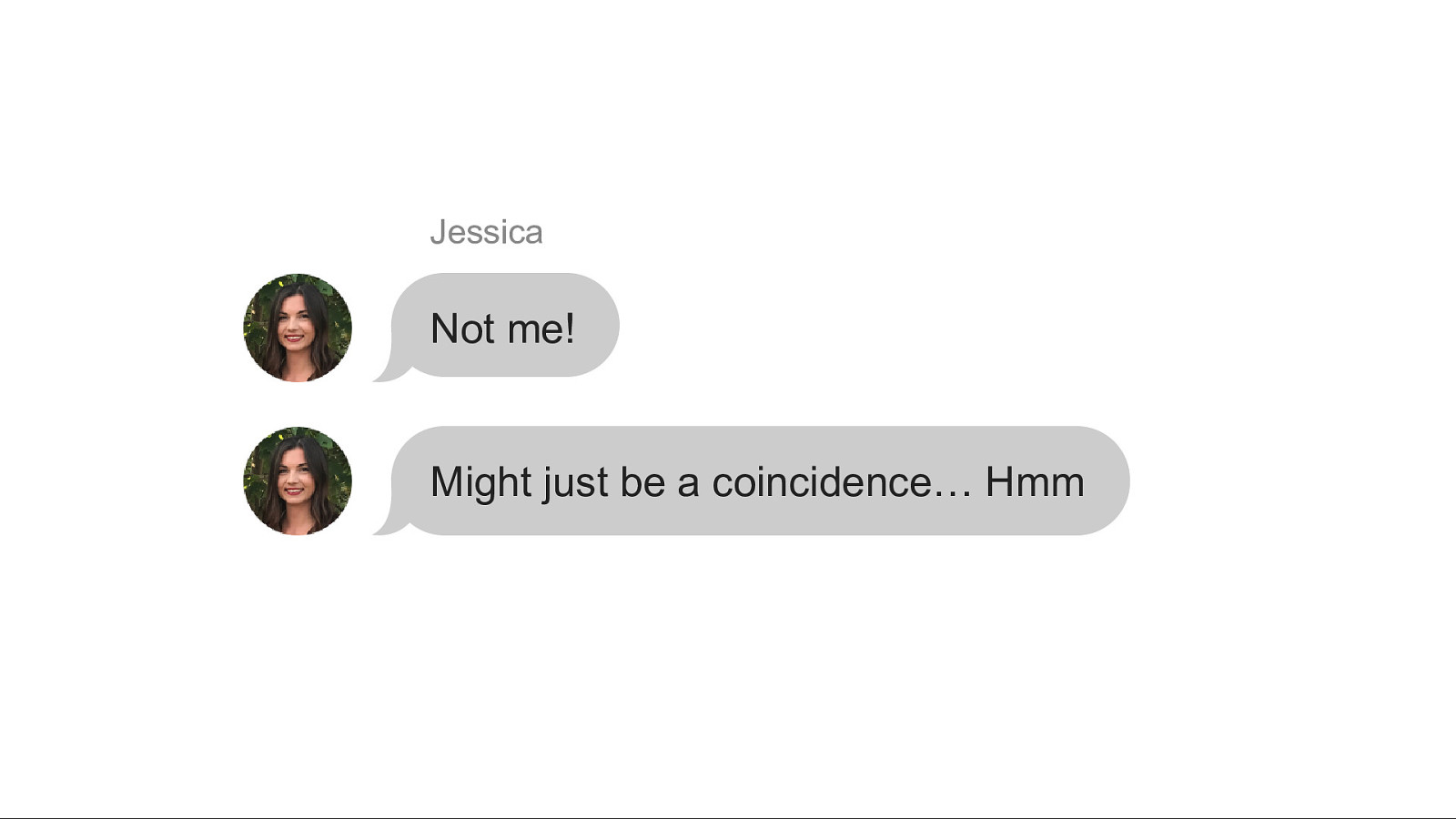

“Not me!” said Jess. Yeah, it might just be a strange coincidence…

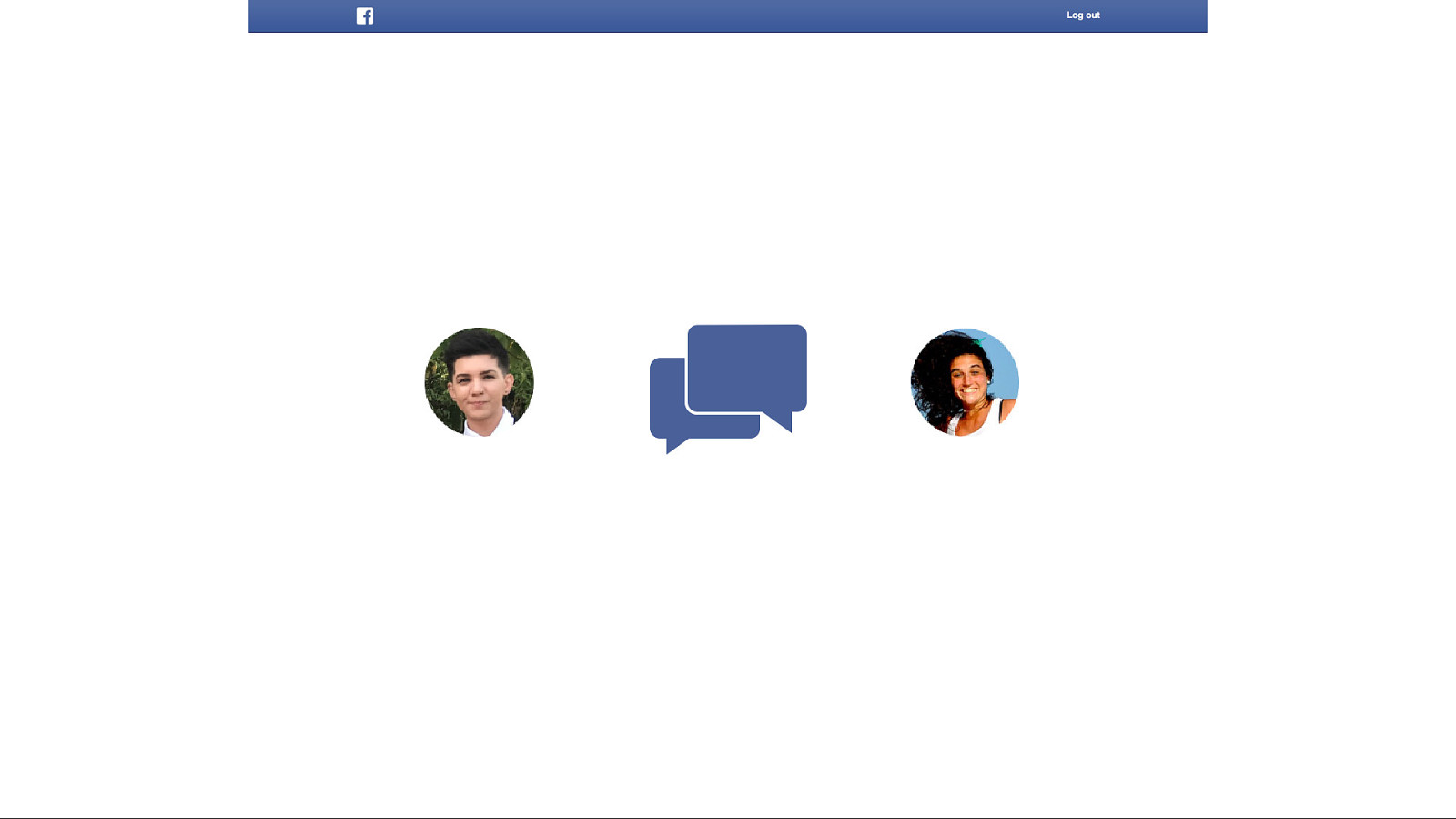

Nini: “sorry, that was me I think I face booked Madds cos she’s in Australia now xxx”

Well it might just be a strange coincidence, but maybe too close to be a coincidence.

My sister had told her friend via private message.

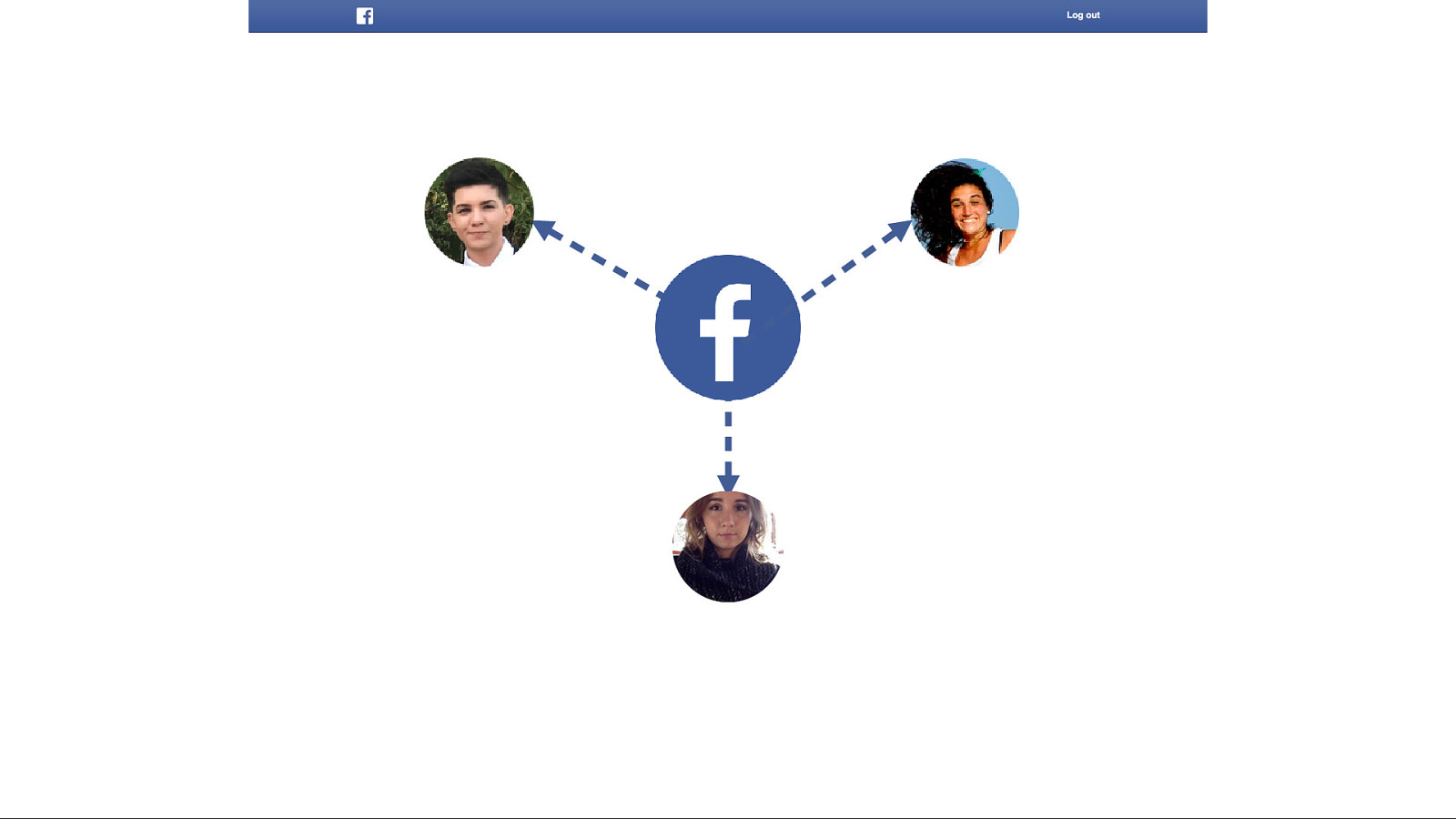

She’d used some key words in a Facebook message to her friend Maddy. Facebook may have made the connection that I’m her sister, figured out that I might want a funeral director, and stuck that ad in my feed.

It just goes to show how much Facebook knows, and how much complexity it can grasp, despite my not telling it anything.

We hear stories like this all the time, particularly from people who think their devices must be listening to them through their microphones. They opened their phone and started seeing ads for a product they were just talking about in person.

A group of scientists at Northeastern University in the US studied 17,000 apps on Android devices to see if apps were secretly recording people with the microphone. While they did find that a lot of apps used third-party services that recorded videos and screenshots of the screen, they found no evidence of an app unexpectedly activating the microphone or sending audio out when not prompted to do so. It turned out that (for now at least!) apps are unlikely to be listening and recording everything we do.

But the truth is actually more insidious. “What people don’t seem to understand is that there’s a lot of other tracking in daily life that doesn’t involve your phone’s camera or microphone that give a third party just as comprehensive a view of you.” said David Choffnes, one of the authors of the paper.

If I was browsing funeral-related sites, maybe Facebook would have that information if those sites had Facebook Share Buttons or Facebook login. As this article in SBS News points out, those Facebook buttons track you across the web, violating your privacy.

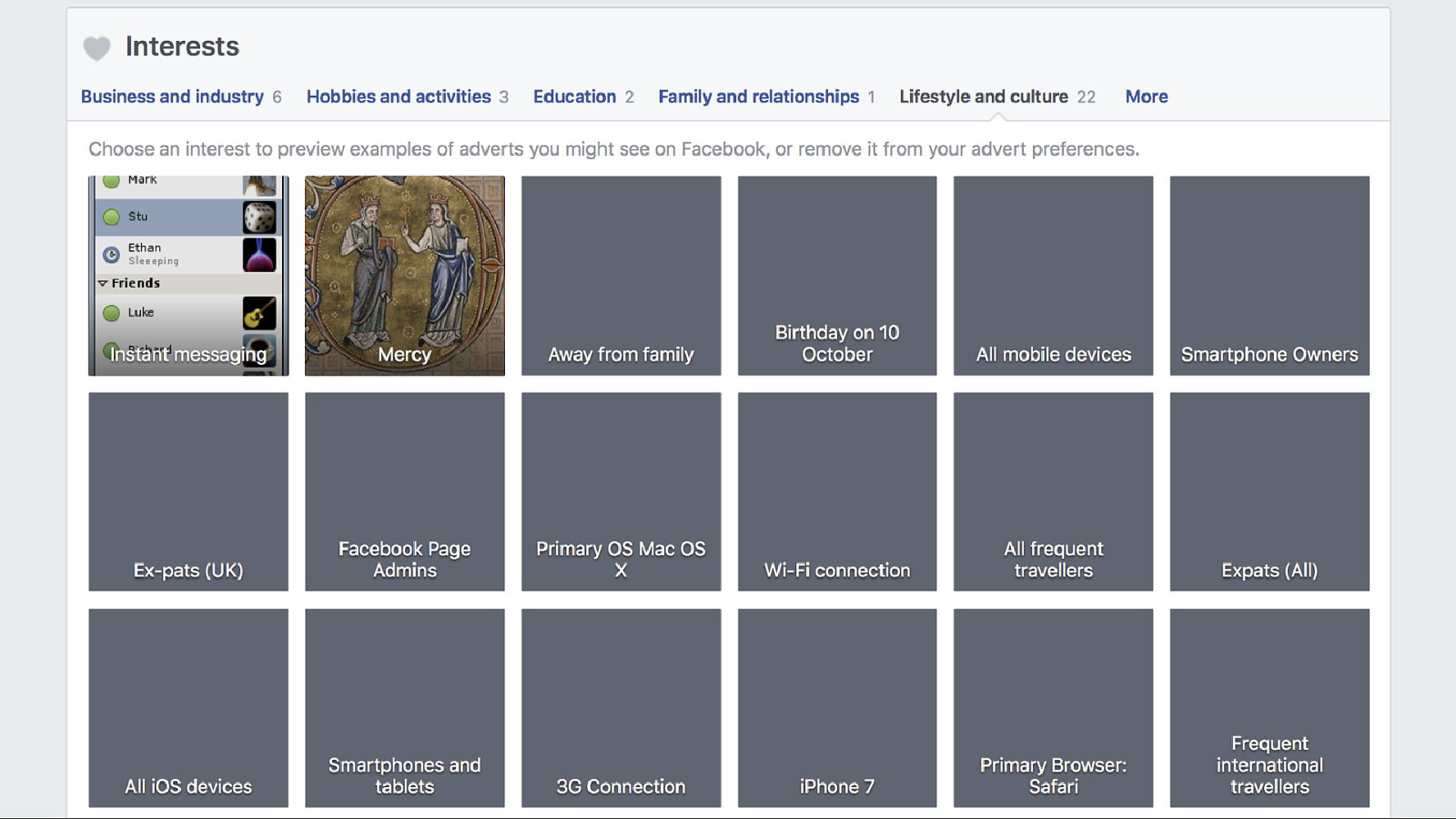

You can find out some of the things Facebook knows about you in your Ad Preferences. This is a screenshot of how Ad Preferences used to look; now you don’t get such a specific insight into the categories it has put you in. You can see it has noted I live “away from family”, I’m an “ex-pat (UK)”, I’m a “frequent international traveller”, as well as detailed categories on the devices, platforms and connections I use to access Facebook.

This is why facebook.com will only let you sign up as Male or Female, not non-binary or the self-declared gender option you get after you’ve signed up. It makes it easier to put you in a box.

As Eva Blum-Dumontet wrote in the New Statesman, “When profiling us, companies are not interested in our names or who we really are: they are interested in patterns of our behaviour that they believe match an audience type… So to target us more efficiently, the advertisement industry relies on a very binary vision of the world.”—’Why we need to talk about the sexism of online ad profiling’

And through this, advertising continues to perpetuate stereotypes and existing prejudices: “We need to be clear that a data driven world – where artificial intelligence makes decision based on simplistic profiles about us – isn’t going to solve prejudices: it’s going to perpetuate them.” –Eva Blum-Dumontet, ‘Why we need to talk about the sexism of online ad profiling’

This isn’t just about ads that tell women they should be focused on domestic tasks, looking pretty and getting pregnant…

In 2016, ProPublica discovered that Facebook was allowing advertisers to exclude users by race in ads for housing: “The ubiquitous social network not only allows advertisers to target users by their interests or background, it also gives advertisers the ability to exclude specific groups it calls ‘Ethnic Affinities.’ Ads that exclude people based on race, gender and other sensitive factors are prohibited by federal law in housing and employment.” –Julia Angwin and Terry Parris Jr., ‘Facebook Lets Advertisers Exclude Users by Race’

And even after Facebook argued it was not liable for advertisers’ discrimination (but still settled these cases), Facebook still perpetuates inequality in its profiling. A study into who Facebook picked to show ads to from very broad categories showed “given a large group of people who might be eligible to see an advertisement, Facebook will pick among them based on its own profit-maximizing calculations, sometimes serving ads to audiences that are skewed heavily by race and gender.” –Aaron Rieke and Corinne Yu, ‘Discrimination’s Digital Frontier’

“In these experiments, Facebook delivered ads for jobs in the lumber industry to an audience that was approximately 70 percent white and 90 percent men, and supermarket-cashier positions to an audience of approximately 85 percent women. Home-sale ads, meanwhile, were delivered to approximately 75 percent white users, while ads for rentals were shown to a more racially balanced group.”–Aaron Rieke and Corinne Yu, ‘Discrimination’s Digital Frontier’

Concluding: “An ad system that is designed to maximize clicks, and to maximize profits for Facebook, will naturally reinforce these social inequities and so serve as a barrier to equal opportunity.”–Aaron Rieke and Corinne Yu, ‘Discrimination’s Digital Frontier’

This profiling, and these algorithms, are used in the majority of technology today. It’s not just Facebook showing you ads, and determining whose posts you see based on what it thinks is most important to you.

(Most popular homepage in the world.) Search results are not just based on relevancy to the term but are personalised based on Google’s determination of user interests (profiles).

(2nd most popular site in the world.) Recommends videos based on newness and popularity, but also based on user interests and viewing history.

(9th most popular in the world.) Yahoo’s search results are personalised based on user’s search history, and a home page feed of news and other content is recommended based on popularity and the user’s predicted interests.

(10th most popular site in the world.) Products and product categories are recommended on the home page, including ‘featured’ recommendations, based on user purchases and views.

(14th most popular site in the world.) Posts displayed in an order Instagram determines to be most interesting and relevant to the user.

All of these sites use the profiles they’ve gathered to target users with advertising and content.

And these categories can be combined to have an incredibly complex view of you and how you might be manipulated.

This brings us to Cambridge Analytica.

According to whistleblower Christopher Wylie, Cambridge Analytica is a “propaganda machine” and a “psychological warfare weapon” hired by political campaigns to create advertising to influence voters’ decisions: the kind of advertising we might consider “fake news”. How did they do this?

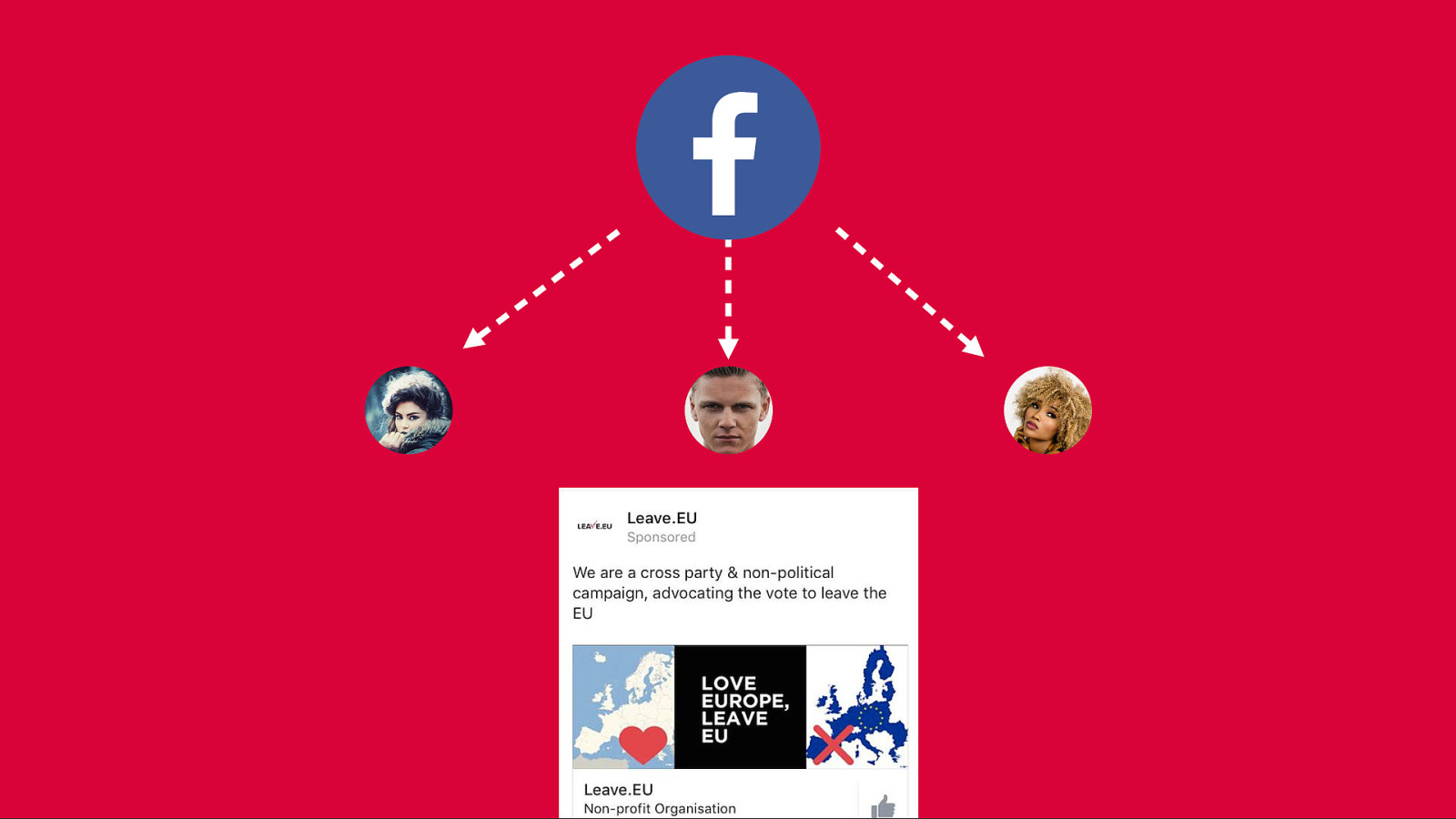

They built algorithms to process profile data from Facebook that could determine how a person is likely to vote, and how their decision could be changed or reinforced through targeted advertising.

Cambridge Analytica harvested profile data through Facebook personality test apps, which had special permission to harvest particular data…

… not just from the person who used the app or joined the app, but also it would then go into their entire friend network, and pull out all the friends’ data as well.

“we’d only need to touch a couple hundred thousand people to expand into their entire social network, which would scale to most of America”—Christopher Wylie, ex-employee of Cambridge Analytica
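To make that scaling concrete, here is a toy sketch of the one-hop expansion described above: start from the people who used the app, then add everyone on their friend lists. The names, the friend graph and the `harvested_profiles` function are all made up for illustration; this is not Cambridge Analytica’s or Facebook’s actual code.

```python
# Toy illustration only: the people and friendships below are hypothetical.
def harvested_profiles(app_users, friends_of):
    """Return everyone reachable in one hop: app users plus their friends."""
    reached = set(app_users)
    for user in app_users:
        # People missing from the graph simply contribute no friends.
        reached.update(friends_of.get(user, ()))
    return reached


# Hypothetical friend lists (each friendship is listed in both directions).
friends_of = {
    "ana": {"ben", "cho", "dev"},
    "ben": {"ana", "eli"},
    "cho": {"ana"},
    "dev": {"ana", "fay"},
    "eli": {"ben"},
    "fay": {"dev"},
}

app_users = {"ana"}  # one person takes the personality test
reached = harvested_profiles(app_users, friends_of)
print(len(app_users), "app user ->", len(reached), "profiles reached")
```

In this made-up graph, a single app user exposes three friends who never touched the app. With real friend lists running into the hundreds, the same one-hop step is how a couple of hundred thousand app users could plausibly reach a very large share of a country.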

Using data such as “status updates, likes, in some cases private messages…”

When all this information came out, Facebook blamed Cambridge Analytica and the researcher who created the personality test app, claiming the use was against Facebook’s terms. However, Facebook doesn’t have a problem with Facebook using data in the same way.

A data scientist who previously worked at Facebook said: “The fundamental purpose of most people at Facebook working on data is to influence and alter people’s moods and behaviour. They are doing it all the time to make you like stories more, to click on more ads, to spend more time on the site.”

“Facebook knows you better than your members of your own family”—Sarah Knapton, Science Editor, Daily Telegraph.

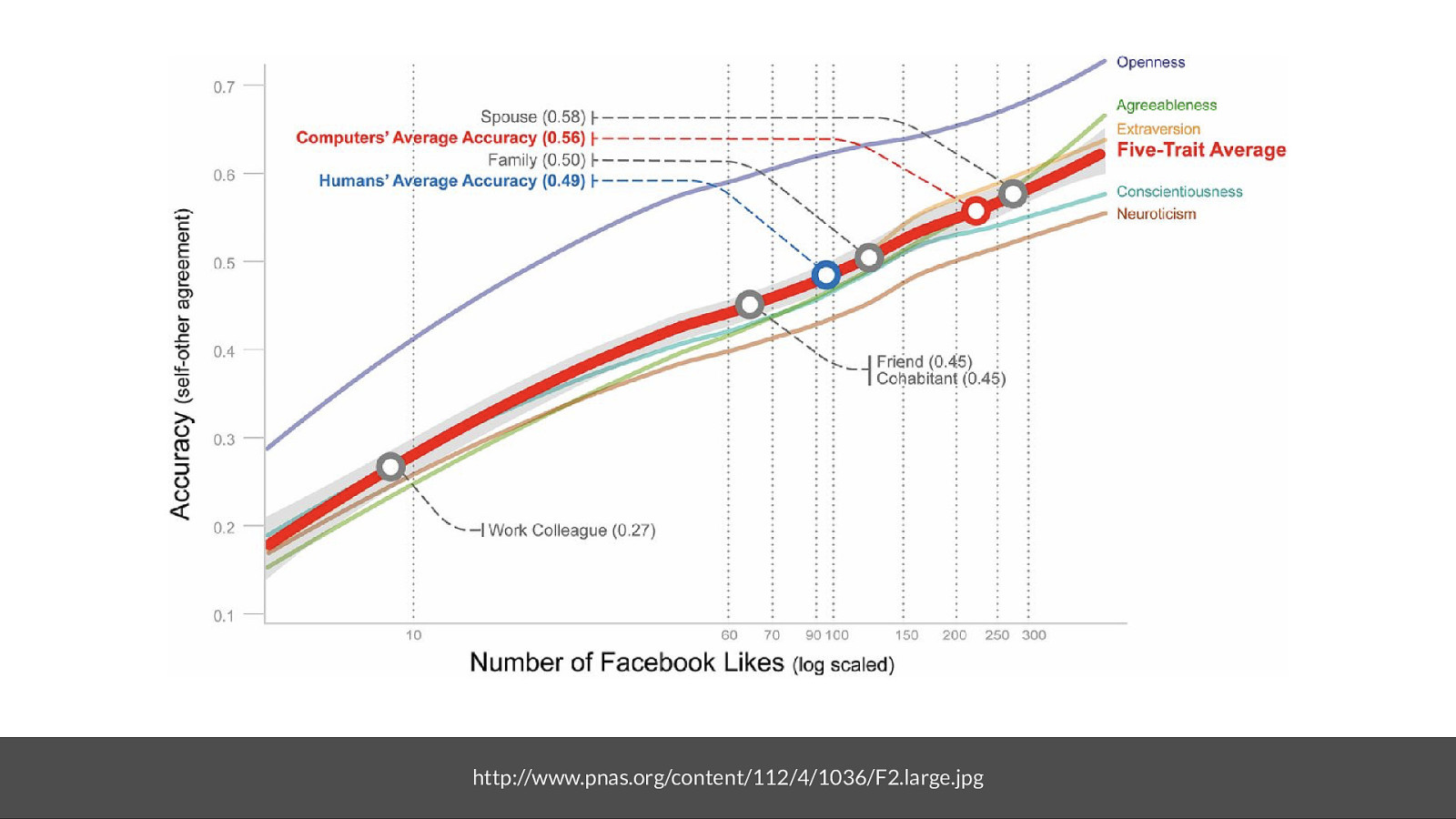

Psychologists and a Computer Scientist from the Universities of Cambridge and Stanford discovered that computer-based personality judgments are more accurate than those made by humans.

“The team found that their software was able to predict a study participant’s personality more accurately than a work colleague by analysing just 10 ‘Likes’.”

“Inputting 70 ‘Likes’ allowed it to obtain a truer picture of someone’s character than a friend or room-mate, while 150 ‘Likes’ outperformed a parent, sibling or partners.”

“It took 300 ‘Likes’ before the programme was able to judge character better than a spouse.”

With the introduction of Facebook reactions, there’s even more Facebook can know about you because they have more specific data.

“Belgian police now says that the site [Facebook] is using them as a way of collecting information about people and deciding how best to advertise to them. As such, it has warned people that they should avoid using the buttons if they want to preserve their privacy.”—Andrew Griffin, The Independent

“By limiting the number of icons to six, Facebook is counting on you to express your thoughts more easily so that the algorithms that run in the background are more effective,” the post continues. “By mouse clicks you can let them know what makes you happy.”—Andrew Griffin, The Independent

And as Julia Angwin, Terry Parris Jr and Surya Mattu found: “What the [Facebook ads] page doesn’t say is that those sources include detailed dossiers obtained from commercial data brokers about users’ offline lives. Nor does Facebook show users any of the often remarkably detailed information it gets from those brokers.”—Julia Angwin, Terry Parris Jr. and Surya Mattu. ‘Facebook Doesn’t Tell Users Everything It Really Knows About Them’

One of the reasons this is so scary is that platforms like Facebook have become a vital part of our social infrastructure. Leaving has big consequences if you find it harder to keep in touch with your family across the world, if your kids’ school uses Facebook for important school updates, if you have to use Facebook for work.

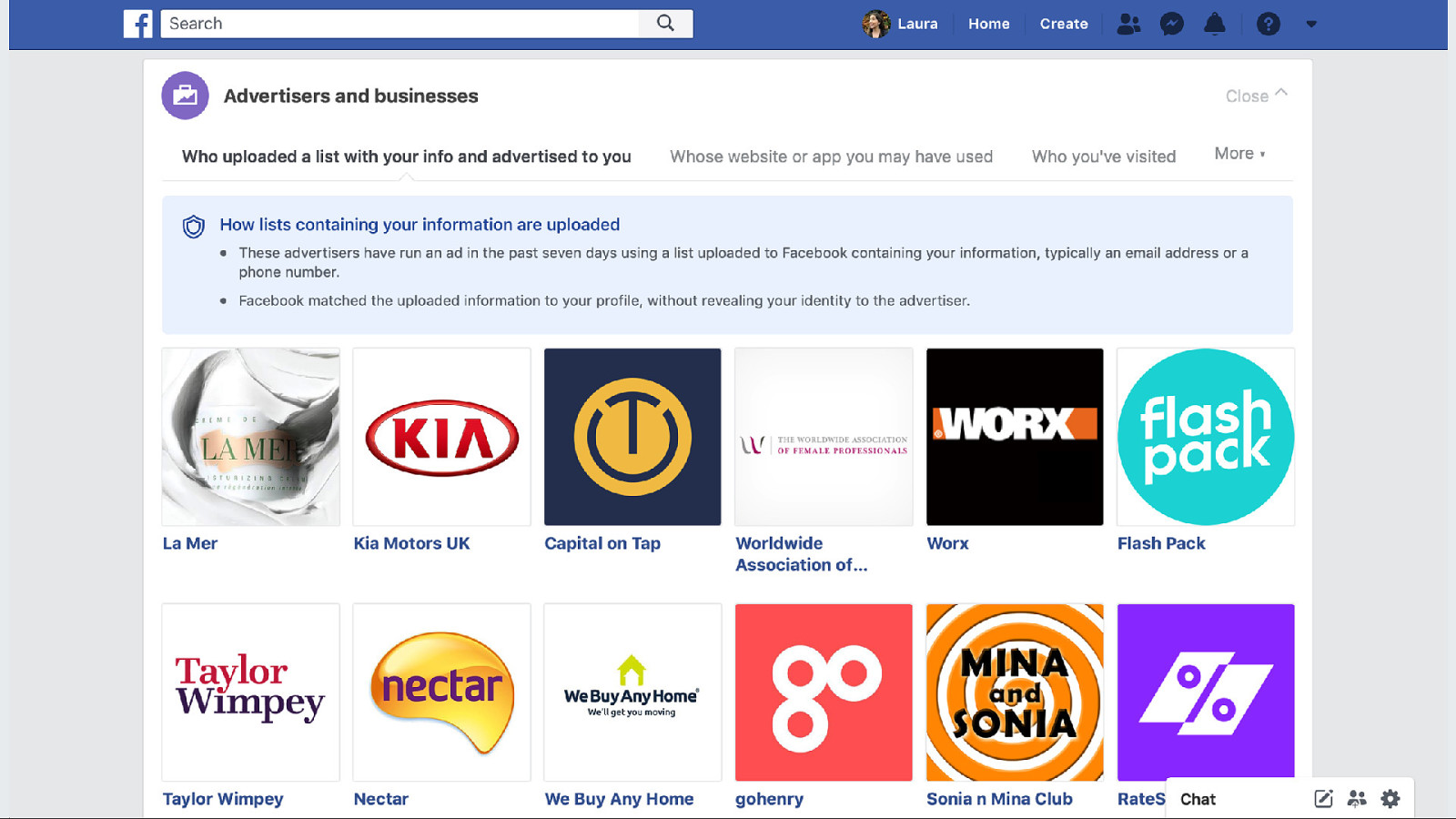

And even if you left or you never joined in the first place, that doesn’t mean you escape Facebook. Facebook has a shadow profile on you.

If a friend or acquaintance has used the Find Friends functionality, or used Facebook Messenger on their phone, they gave Facebook access to their Contacts. Facebook uses those names, email addresses, and phone numbers to build their shadow profiles. And companies who you buy things from upload the details they have on you to Facebook in order to better target their own ads.

“Right now commenters across the Internet will be saying, Don’t join Facebook or Delete your account. But it appears that we’re subject to Facebook’s shadow profiles whether or not we choose to participate.” —Violet Blue, ‘Firm: Facebook’s shadow profiles are ‘frightening’ dossiers on everyone’

“I feel like we’re only beginning to understand why Facebook’s data is so very valuable to advertisers, governments, app makers and malicious entities.”

And it’s not just the web and apps that are tracking you and your behaviour. Nearly all “smart” and “cloud” internet-connected technology needs that internet connectivity to send your information back to its businesses’ servers.

Like the “smart” speakers that are now so ubiquitous in our homes.

Google Nest, having a good look around your home.

Hello Barbie which your kids can talk to, with all that information recorded and sent to a multinational corporation…

Smart pacifier. Put a chip in your baby.

Looncup - smart menstrual cup! One of many smart things that women can put inside themselves. (Most of the internet of things companies in this genre are run by men…)

We Connect (the smart dildo makers) were even sued for tracking users’ habits. (Though many companies will get away with tracking like this as they’ll hide it in the terms and conditions…)

Have you ever wondered how many calories you’re burning during intercourse? How many thrusts? Speed of your thrusts? The duration of your sessions? Frequency? How many different positions you use in the period of a week, month or year? You want the iCondom.

And have you ever wanted to share all that information with advertisers, insurers, your government, and who knows else?

And it’s not just tech for individuals that is affected. Our governments are sharing our health data with tech corporations.

Our cities are being made into “smart” cities which know where we are and what we do at all times.

And it was not an accident, it was very much on purpose.

This is surveillance capitalism, a term coined by Shoshana Zuboff, a professor at Harvard Business School, who has recently authored a whole book on this topic.

What is surveillance capitalism? “Surveillance capitalism unilaterally claims human experience as free raw material for translation into behavioral data. Although some of these data are applied to product or service improvement, the rest are…fabricated into prediction products that anticipate what you will do now, soon, and later.”—Shoshana Zuboff, The Age of Surveillance Capitalism

Surveillance capitalism is defined by surveilling our behaviour, and monetising it. If it sounds familiar, it’s because it forms the dominant business model of technology today.

As the people who work in and around technology, we have to understand that surveillance capitalism is not a feature we can replace, or phase out when we’ve found another way to make money. The monetisation of our data is the business model. The investment has been made on the premise that our behaviour will be shaped and pushed towards making the businesses more money in the future.

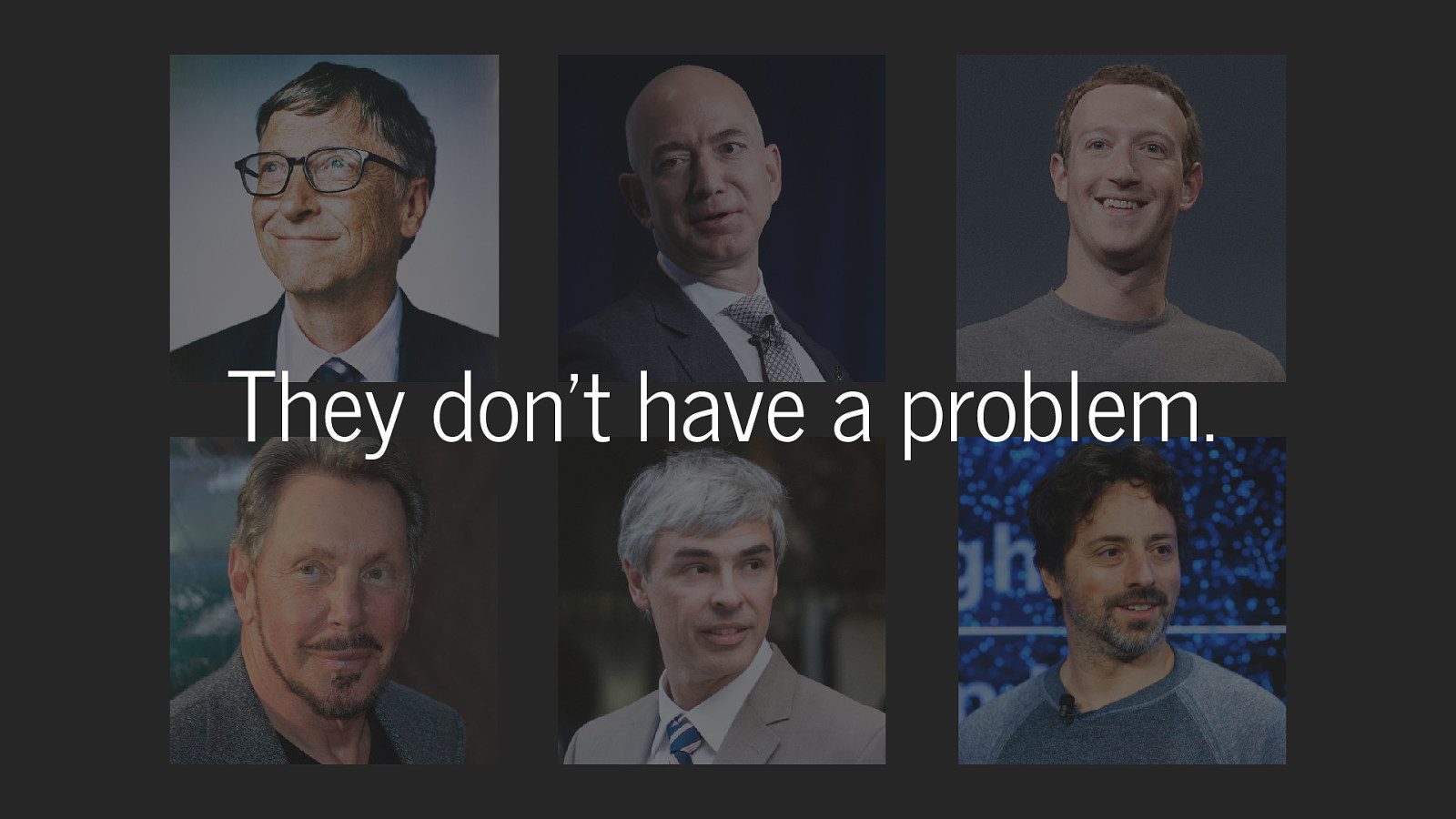

Not least because these guys don’t want to reform big tech and they’re its wealthiest CEOs. Not just that, they are six of the twelve richest people on the planet. They have more people and power than many countries.

They don’t have a problem. They’re doing fine.

Venture capital loves surveillance capitalism. And that is why businesses with venture capital can’t change their models. The business has been sold to the investors, and investors have been promised year-on-year exponential growth. More users. More data. More money.

The thing is, exponential growth is not sustainable. We live on a planet made up of finite resources. This is why we are living in a climate catastrophe.

And much like in the climate catastrophe, the most vulnerable and marginalised people are affected by surveillance capitalism first.

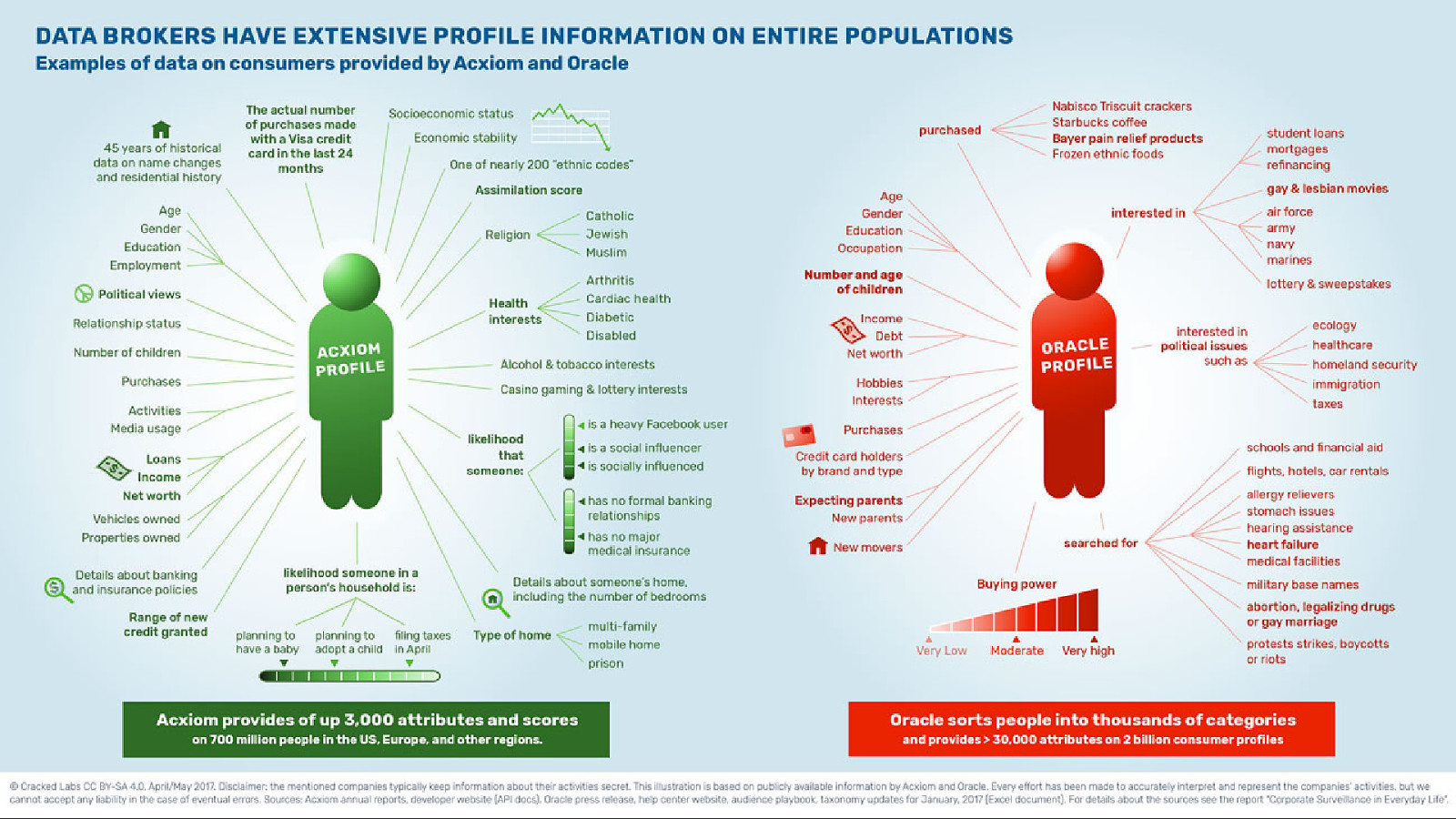

Look at the information that data brokers have about you. This is data that Acxiom and Oracle have obtained. They get information in the same way Facebook does. And they’ve profiled you as disabled, or poor, or LGBTQ+. They know that you take an interest in protests and what your political interests are. And any government can ask for that information. They’ve profiled you as prone to gambling, drinking alcohol, or smoking. They know what your health purchases are, what symptoms and ailments you think you might have. And any corporation can buy that information.

We can’t continue in this way. We need to do something.

And Aral will now explain some of the things we can do about it…