Application Delivery to Kubernetes A 101 to a fast-evolving ecosystem Max Körbächer

A presentation at Developer Week in June 2021 by Max Körbächer

Introduction

Max Körbächer
Co-Founder & Manager Cloud Native Engineer, focusing on:
• Platform Engineering
• Application Delivery
• Cloud Native Advisory

Part of the Kubernetes Release Team & Release Engineering Team

CLOUD NATIVE

You are not cloud native just because you run on a CSP
• Scalable applications
• Running in dynamic environments
• Based on containers or functions
• Utilizing declarative APIs
• Structured in (micro) services

“The cloud isn’t a place, it’s a way of doing IT” – Michael Dell

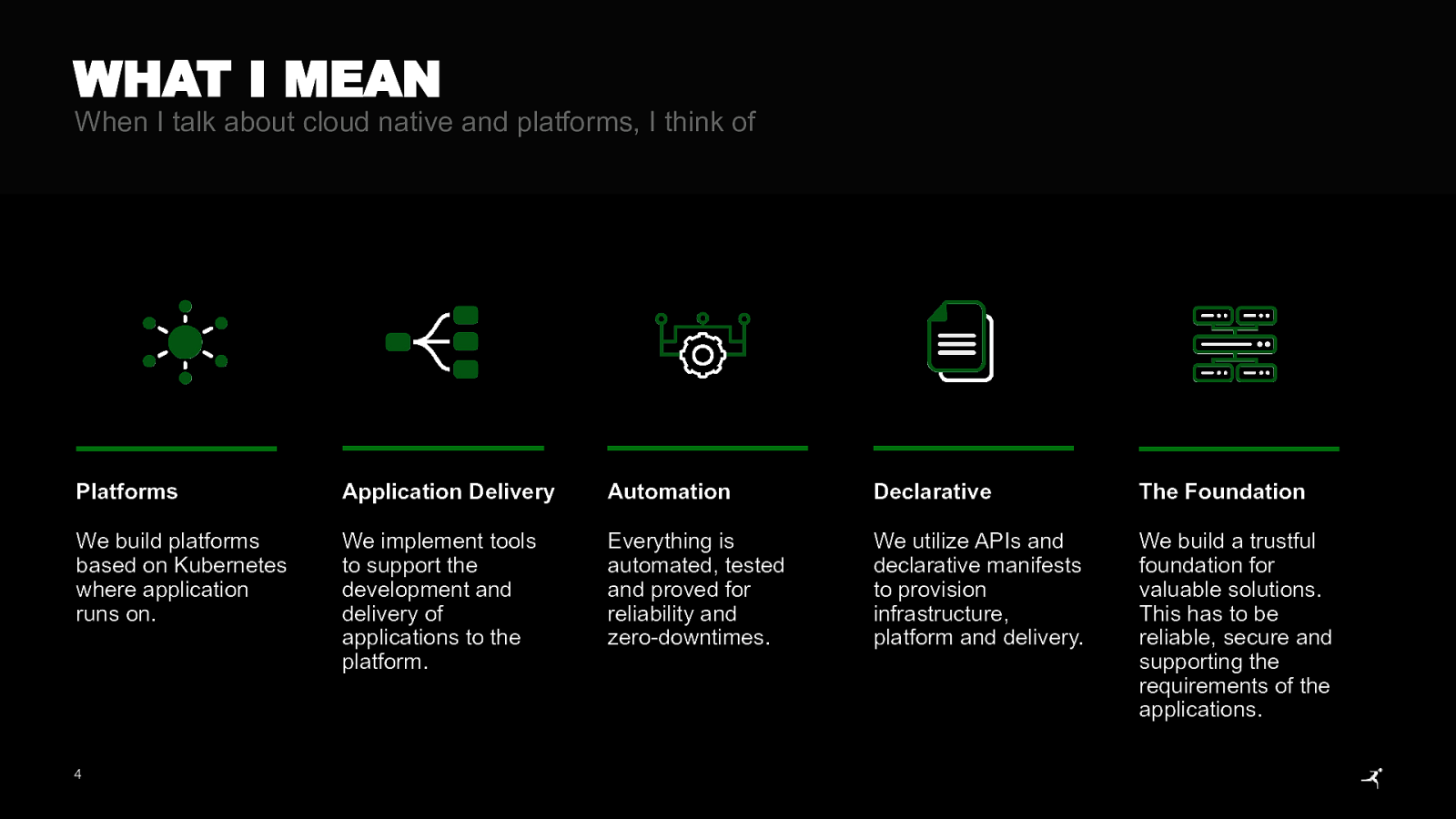

WHAT I MEAN

When I talk about cloud native and platforms, I think of:
• Platforms: We build platforms based on Kubernetes for applications to run on.
• Application Delivery: We implement tools to support the development and delivery of applications to the platform.
• Automation: Everything is automated, tested and proven for reliability and zero downtime.
• Declarative: We utilize APIs and declarative manifests to provision infrastructure, platform and delivery.
• The Foundation: We build a trustworthy foundation for valuable solutions. It has to be reliable, secure and supportive of the applications' requirements.

Common patterns & problems with Platforms

Platforms abstract infrastructure complexities away. BUT they create new, unknown, custom complexity:
● New responsibilities
● 100 options for one problem
● Single vs. multi clusters
● CICD, GitOps or better something else
● How to build the application?
● How to ensure security, compliance and governance?

How to deliver software for K8s

The fairy tale of CI/CD
● Special, custom-built pipelines
● “Hand Made”
● Yet another script

That’s not cloud native!

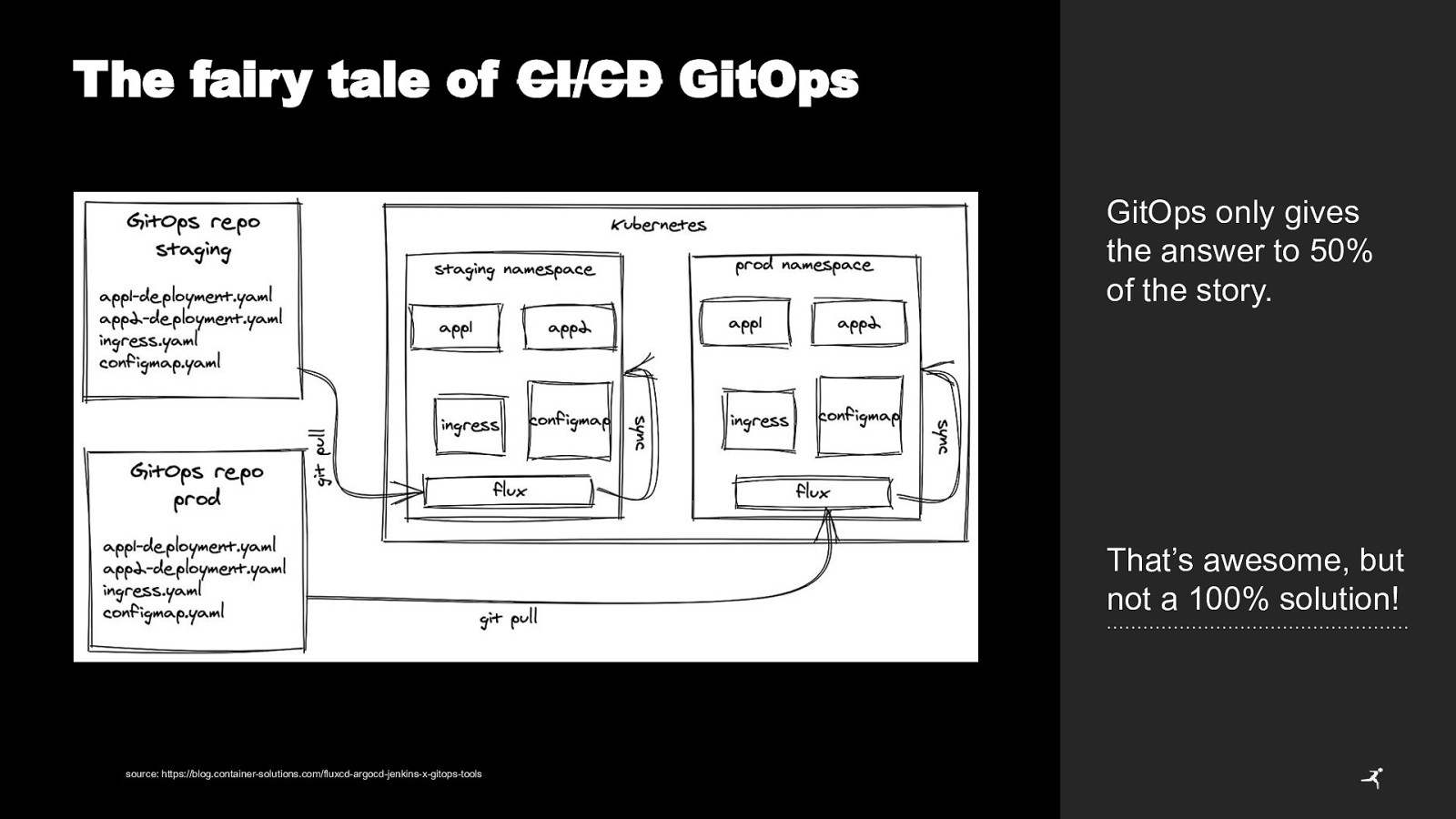

The fairy tale of CI/CD: GitOps

GitOps only answers 50% of the story. That’s awesome, but not a 100% solution!

Source: https://blog.container-solutions.com/fluxcd-argocd-jenkins-x-gitops-tools
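The part of the story that GitOps does answer can be sketched as a reconcile step: compare the desired state declared in Git with the live state in the cluster and derive what has to change. The following is only a minimal illustration in Python, not how Flux or Argo CD are implemented; the `plan_sync` function and the name-to-version dictionaries are hypothetical simplifications of real manifests.

```python
def plan_sync(desired: dict[str, str], live: dict[str, str]) -> dict[str, list[str]]:
    """Derive the actions needed to converge the live state onto the desired state.

    Both arguments map a resource name to a version string
    (a hypothetical stand-in for full Kubernetes manifests).
    """
    return {
        # Declared in Git but missing in the cluster
        "create": sorted(name for name in desired if name not in live),
        # Present in both, but the cluster has drifted from Git
        "update": sorted(
            name for name in desired if name in live and desired[name] != live[name]
        ),
        # Running in the cluster but no longer declared in Git
        "delete": sorted(name for name in live if name not in desired),
    }


desired_state = {"web": "v2", "api": "v1", "worker": "v1"}
live_state = {"web": "v1", "api": "v1", "cron": "v1"}
print(plan_sync(desired_state, live_state))
```

What this sketch leaves out is exactly the other half of the story: how the image gets built and how the manifests in Git get written in the first place.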

None of it is a perfect solution

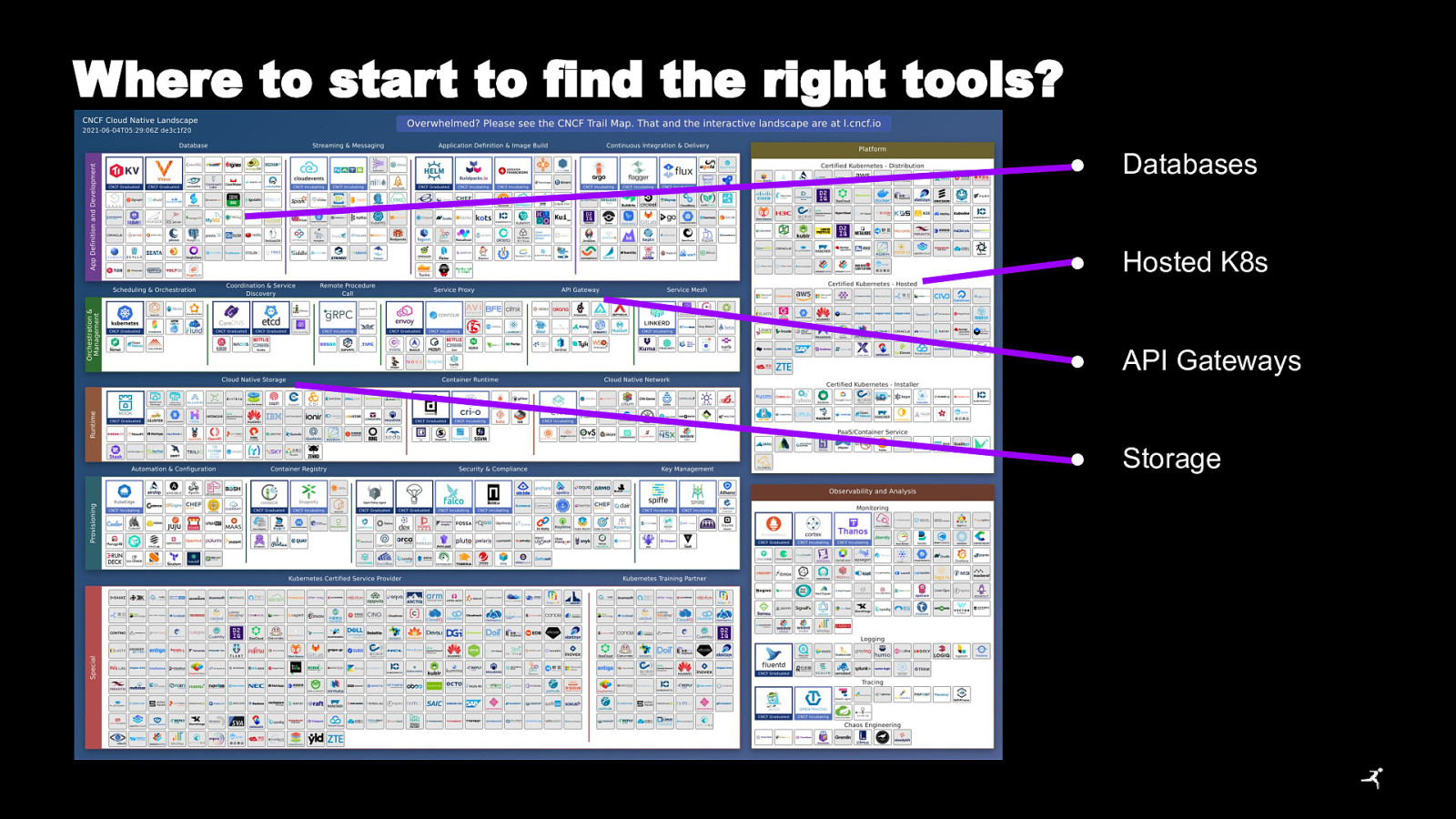

Where to find the right tools?

Where to start to find the right tools?
● Databases
● Hosted K8s
● API Gateways
● Storage

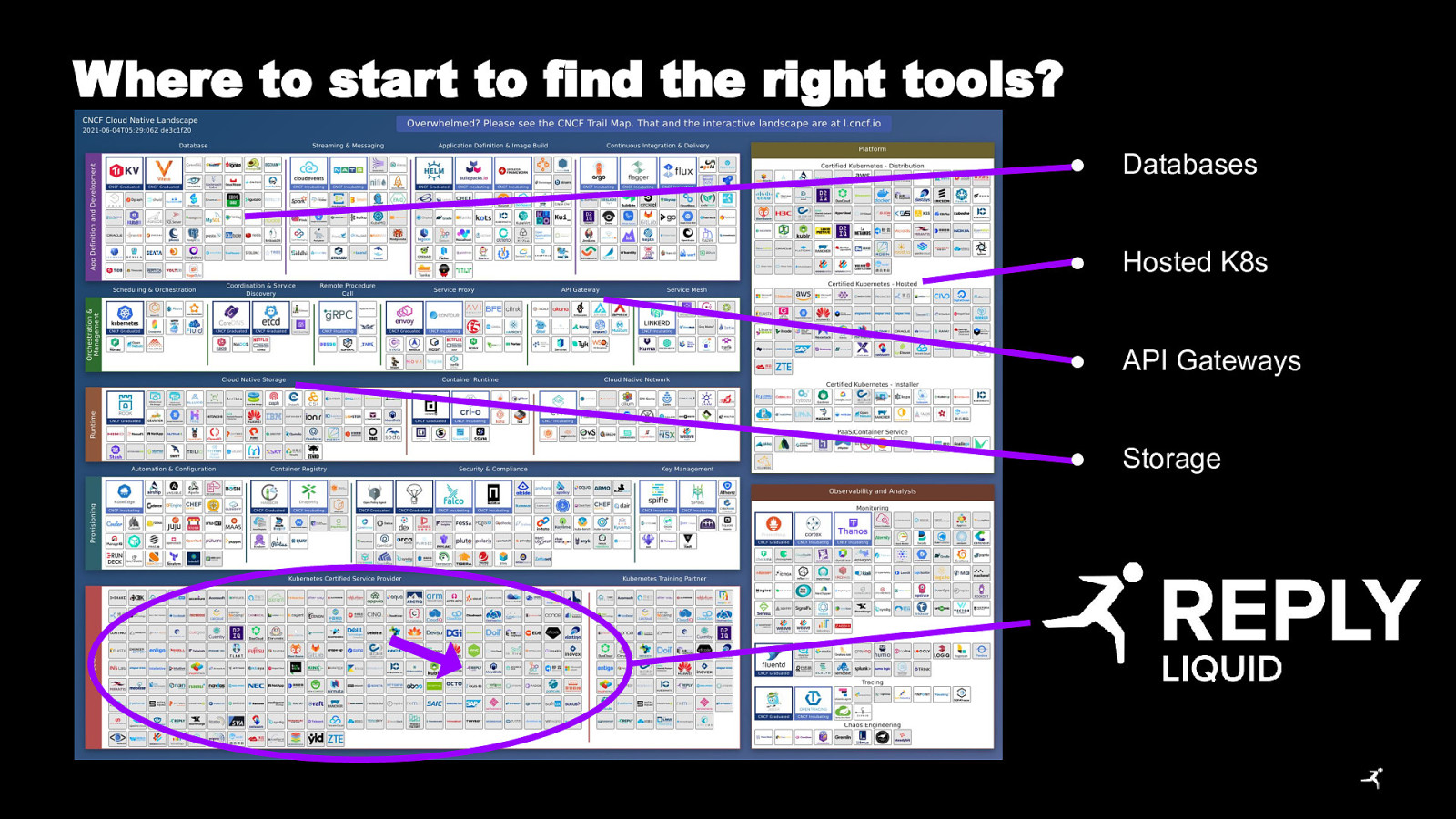

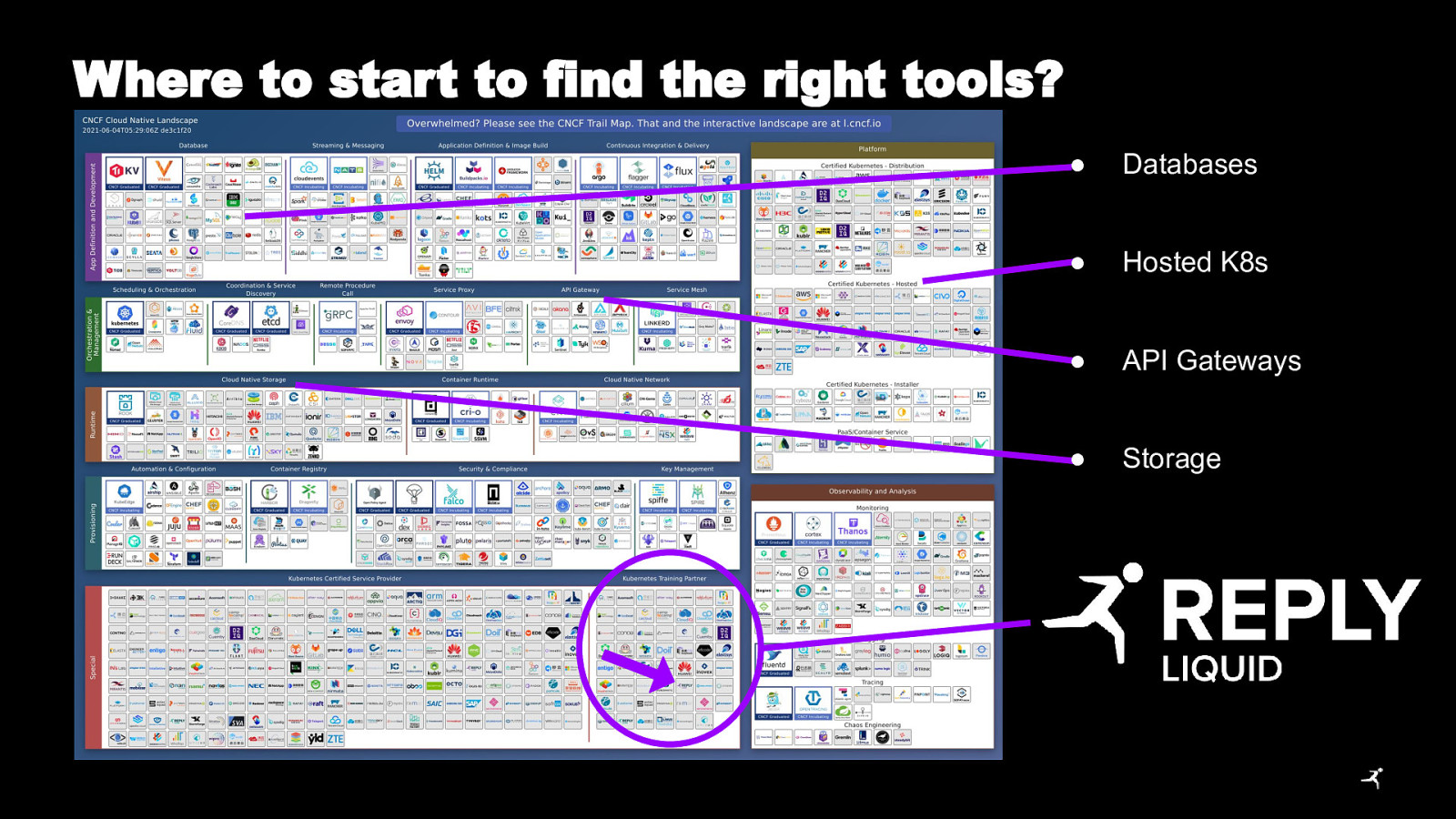

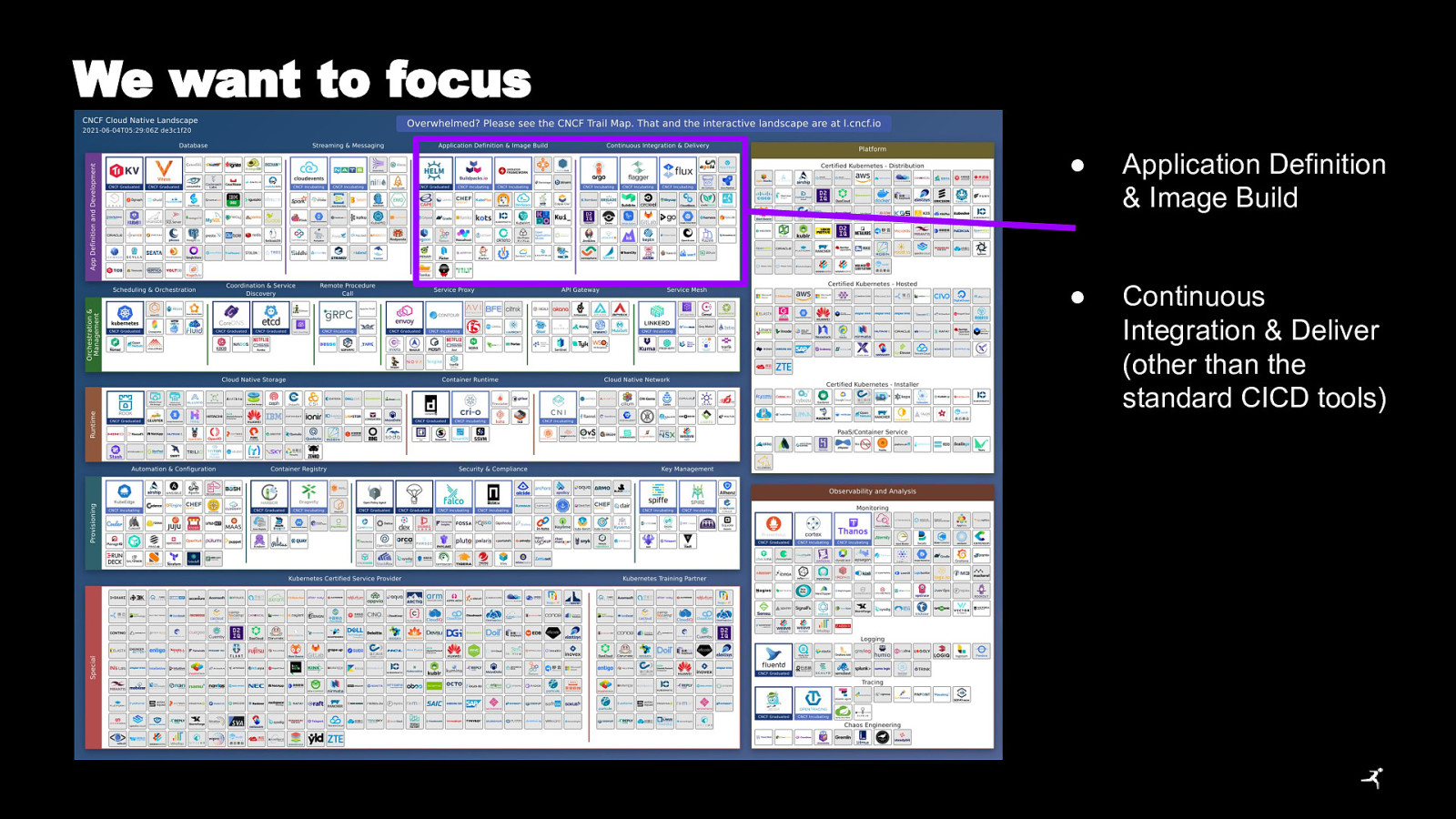

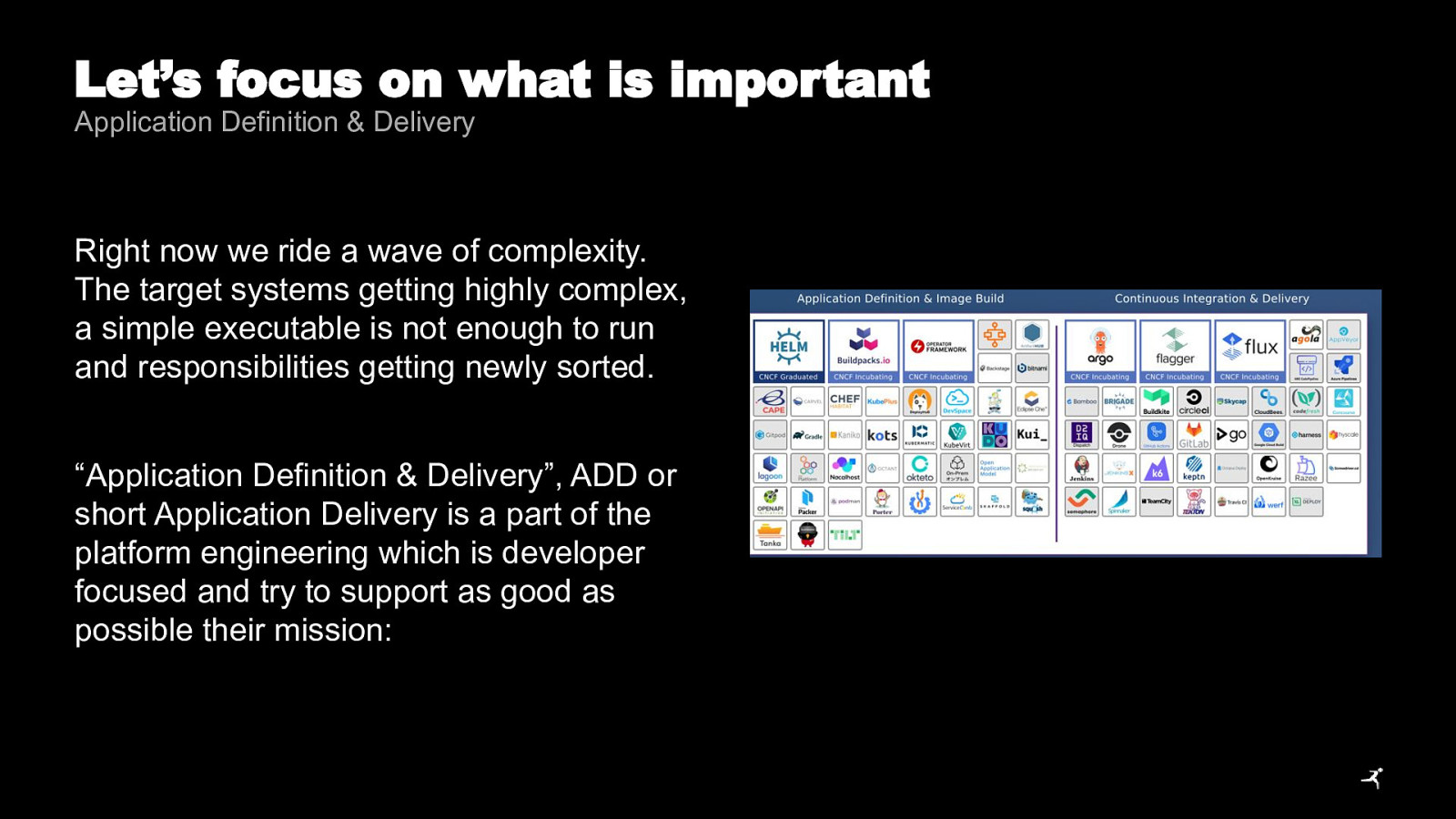

We want to focus on:
● Application Definition & Image Build
● Continuous Integration & Delivery (other than the standard CICD tools)

Let’s focus on what is important

Application Definition & Delivery

Right now we are riding a wave of complexity. Target systems are getting highly complex, a simple executable is no longer enough to run an application, and responsibilities are being reshuffled. “Application Definition & Delivery” (ADD), or Application Delivery for short, is the developer-focused part of platform engineering. It tries to support the developers' mission as well as possible:

NOT to learn 3x cloud providers, K8s, Helm and min. 5 possible sidecar injections, and NOT to fix your CICD every 2 days

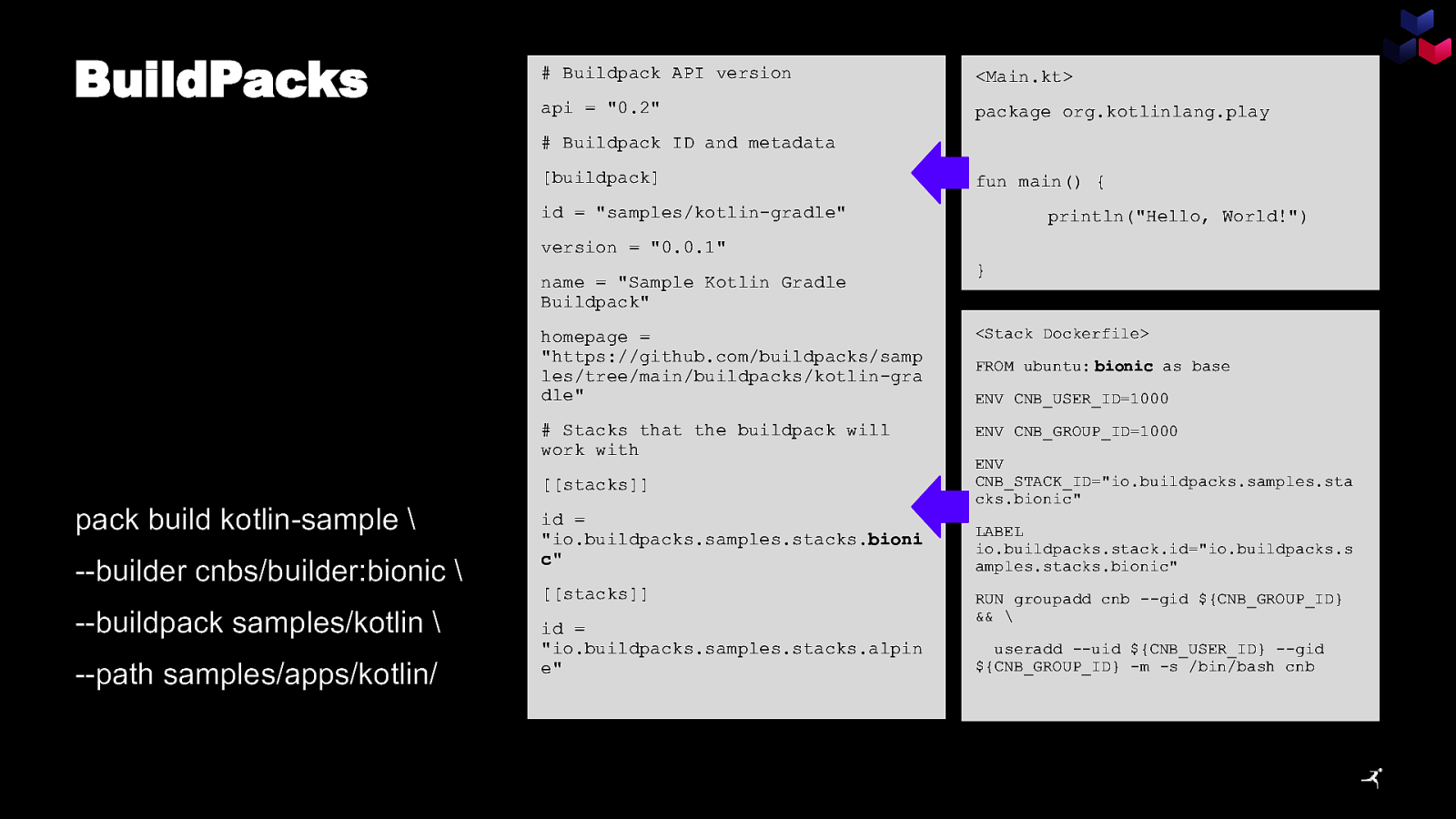

BuildPacks

<Main.kt>

    package org.kotlinlang.play

    fun main() {
        println("Hello, World!")
    }

<buildpack.toml>

    api = "0.2"

    [buildpack]
    id = "samples/kotlin-gradle"
    version = "0.0.1"
    name = "Sample Kotlin Gradle Buildpack"
    homepage = "https://github.com/buildpacks/samples/tree/main/buildpacks/kotlin-gradle"

    [[stacks]]
    id = "io.buildpacks.samples.stacks.bionic"

    [[stacks]]
    id = "io.buildpacks.samples.stacks.alpine"

<Stack Dockerfile>

    FROM ubuntu:bionic as base

    ENV CNB_USER_ID=1000
    ENV CNB_GROUP_ID=1000
    ENV CNB_STACK_ID="io.buildpacks.samples.stacks.bionic"
    LABEL io.buildpacks.stack.id="io.buildpacks.samples.stacks.bionic"

    RUN groupadd cnb --gid ${CNB_GROUP_ID} && \
        useradd --uid ${CNB_USER_ID} --gid ${CNB_GROUP_ID} -m -s /bin/bash cnb

<Build command>

    pack build kotlin-sample \
        --builder cnbs/builder:bionic \
        --buildpack samples/kotlin \
        --path samples/apps/kotlin/

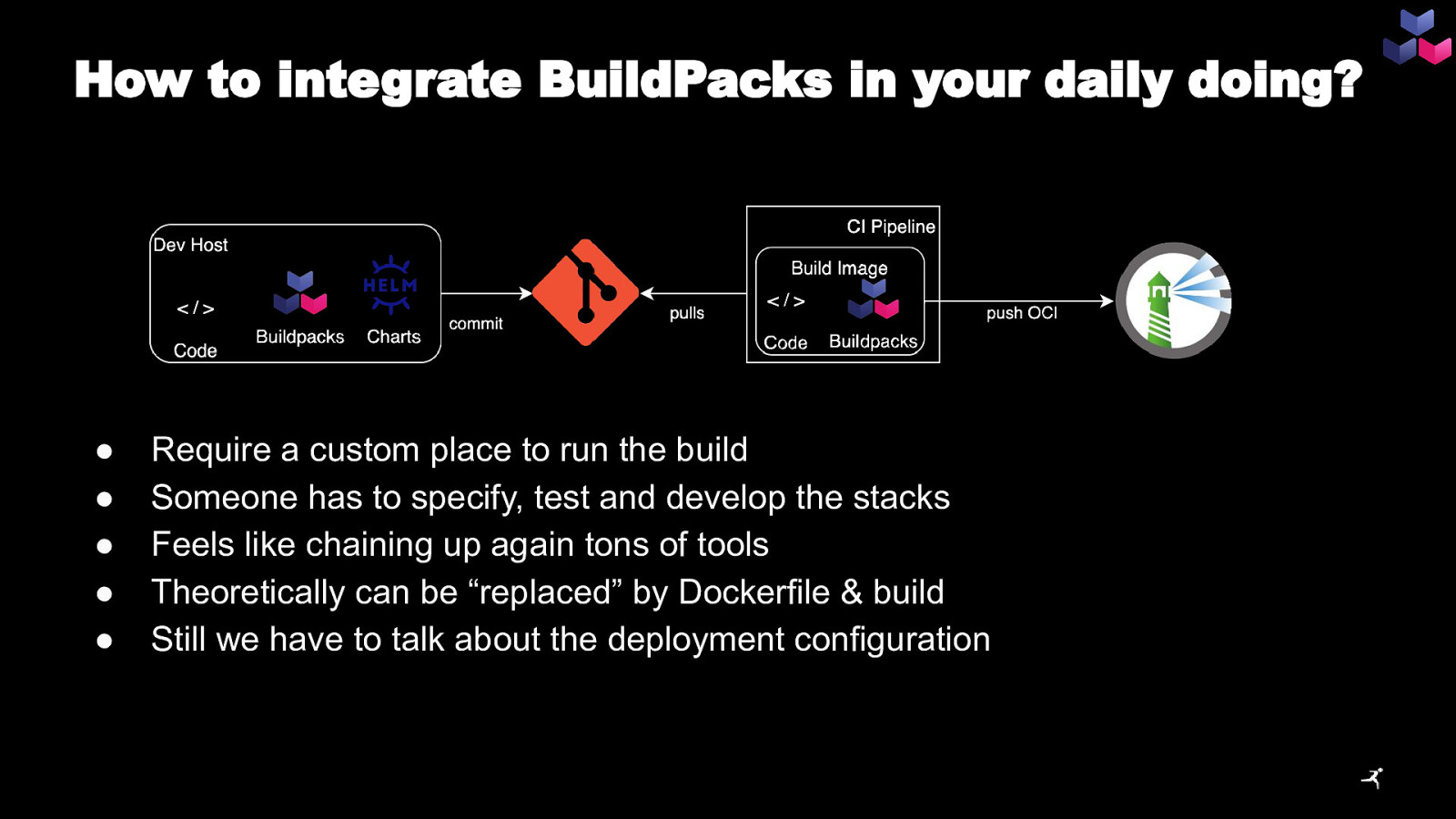

How to integrate BuildPacks into your daily work?
● Requires a custom place to run the build
● Someone has to specify, test and develop the stacks
● Feels like chaining up tons of tools again
● Theoretically can be “replaced” by a Dockerfile & build
● We still have to talk about the deployment configuration

Are CNBs better than the rest?

At the very least, you should have a look at them.

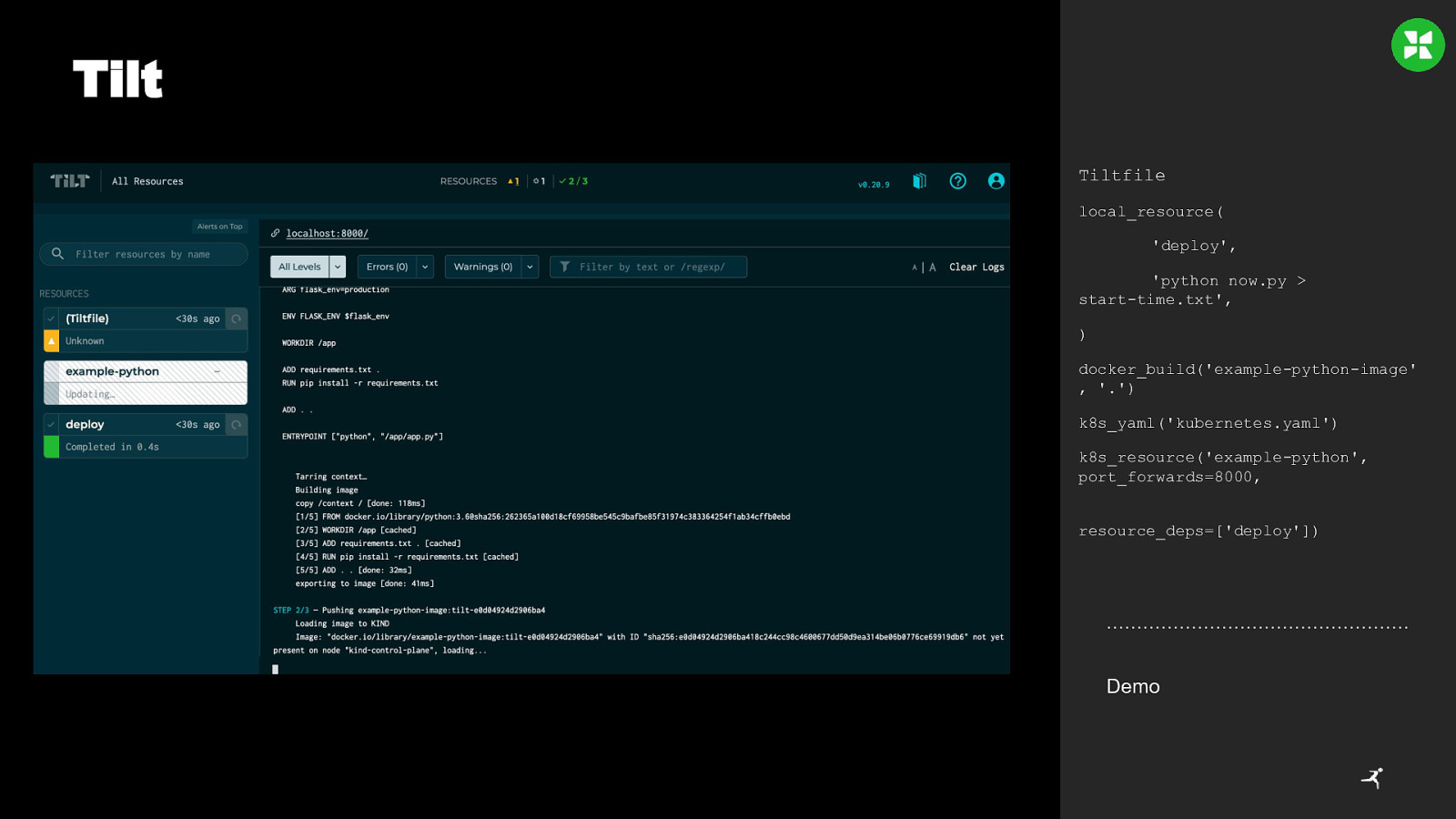

Tilt

Tiltfile

    local_resource(
        'deploy',
        'python now.py > start-time.txt',
    )
    docker_build('example-python-image', '.')
    k8s_yaml('kubernetes.yaml')
    k8s_resource('example-python', port_forwards=8000,
                 resource_deps=['deploy'])

Demo
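The `resource_deps` argument in the Tiltfile declares that `example-python` should only start once `deploy` is ready. The ordering this implies can be sketched with a topological sort. This is an illustration of the dependency idea only, not Tilt's actual engine; the `start_order` function is hypothetical, and the resource names are taken from the Tiltfile above.

```python
from graphlib import TopologicalSorter


def start_order(resource_deps: dict[str, list[str]]) -> list[str]:
    """Return an order in which every resource comes after its dependencies.

    `resource_deps` maps a resource name to the resources it depends on,
    mirroring the `resource_deps=[...]` argument of `k8s_resource`.
    """
    return list(TopologicalSorter(resource_deps).static_order())


# 'deploy' has no dependencies; 'example-python' waits for 'deploy'.
deps = {"deploy": [], "example-python": ["deploy"]}
print(start_order(deps))
```

A cyclic declaration would raise `graphlib.CycleError` in this sketch, which is a reasonable way to think about why dependency graphs between resources have to stay acyclic.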

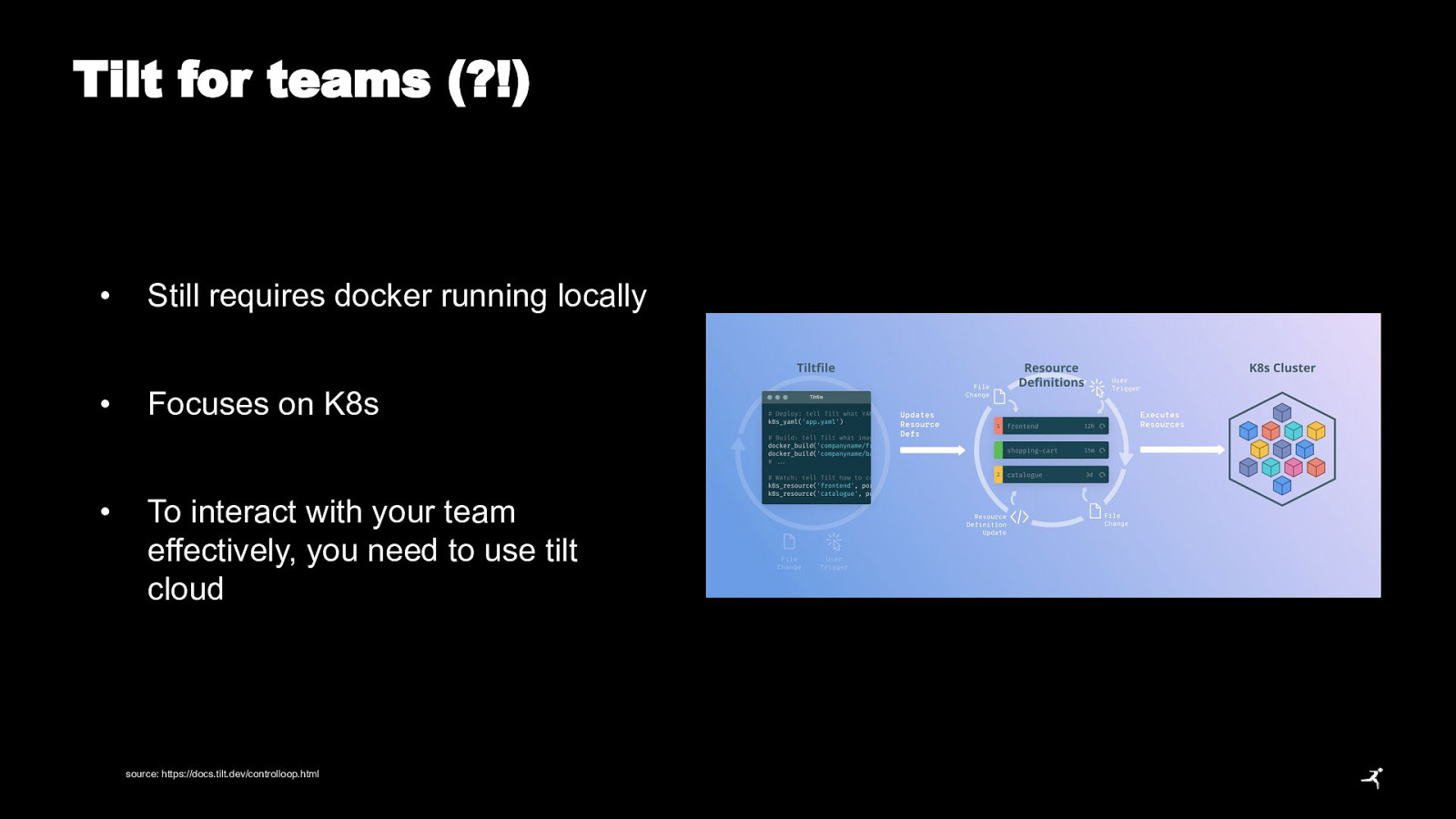

Tilt for teams (?!)
• Still requires Docker running locally
• Focuses on K8s
• To interact with your team effectively, you need to use Tilt Cloud

Source: https://docs.tilt.dev/controlloop.html

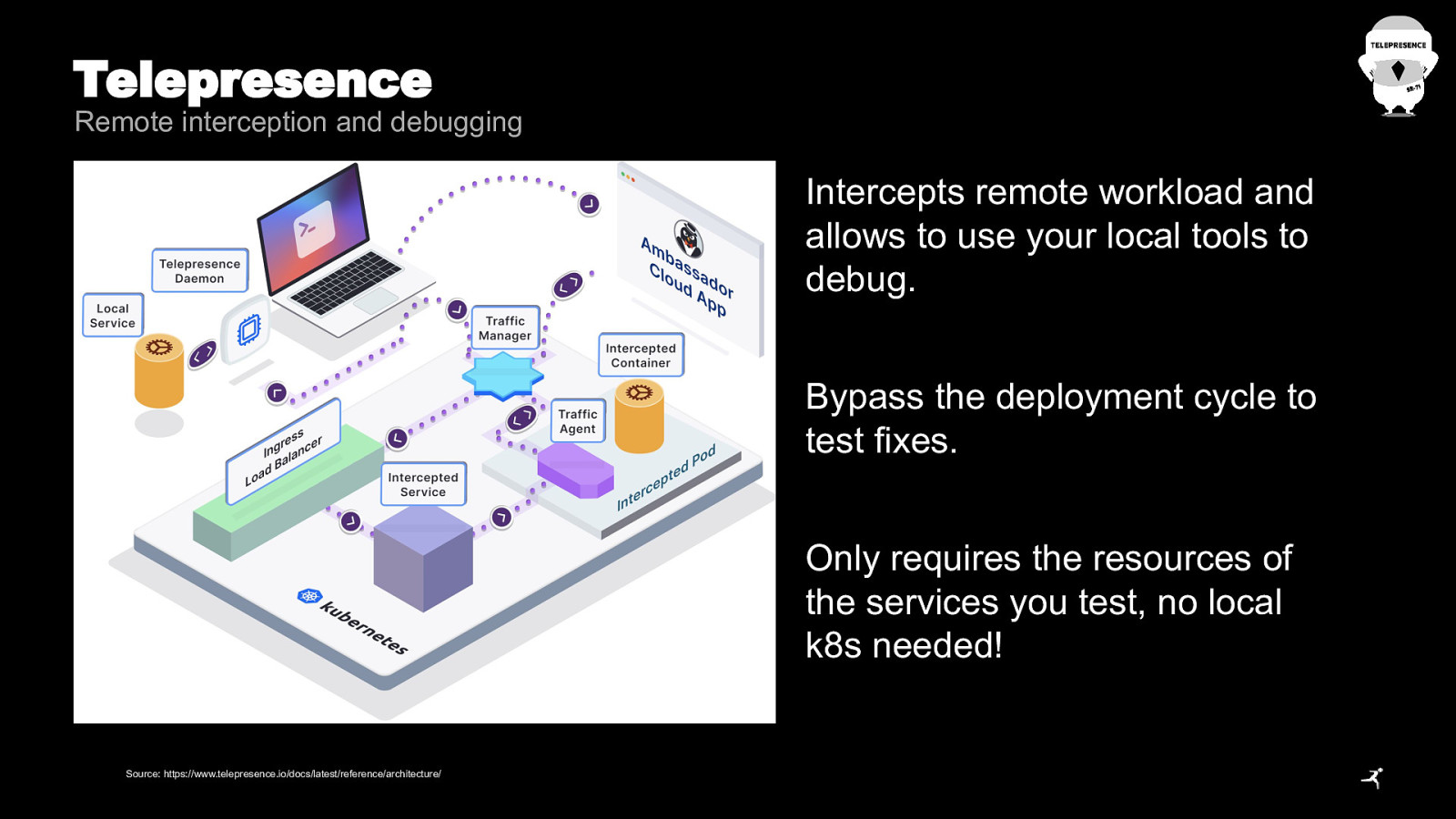

Telepresence

Remote interception and debugging

Intercepts a remote workload and lets you use your local tools to debug it. Bypass the deployment cycle to test fixes. It only requires the resources of the services you test; no local K8s needed!

Source: https://www.telepresence.io/docs/latest/reference/architecture/

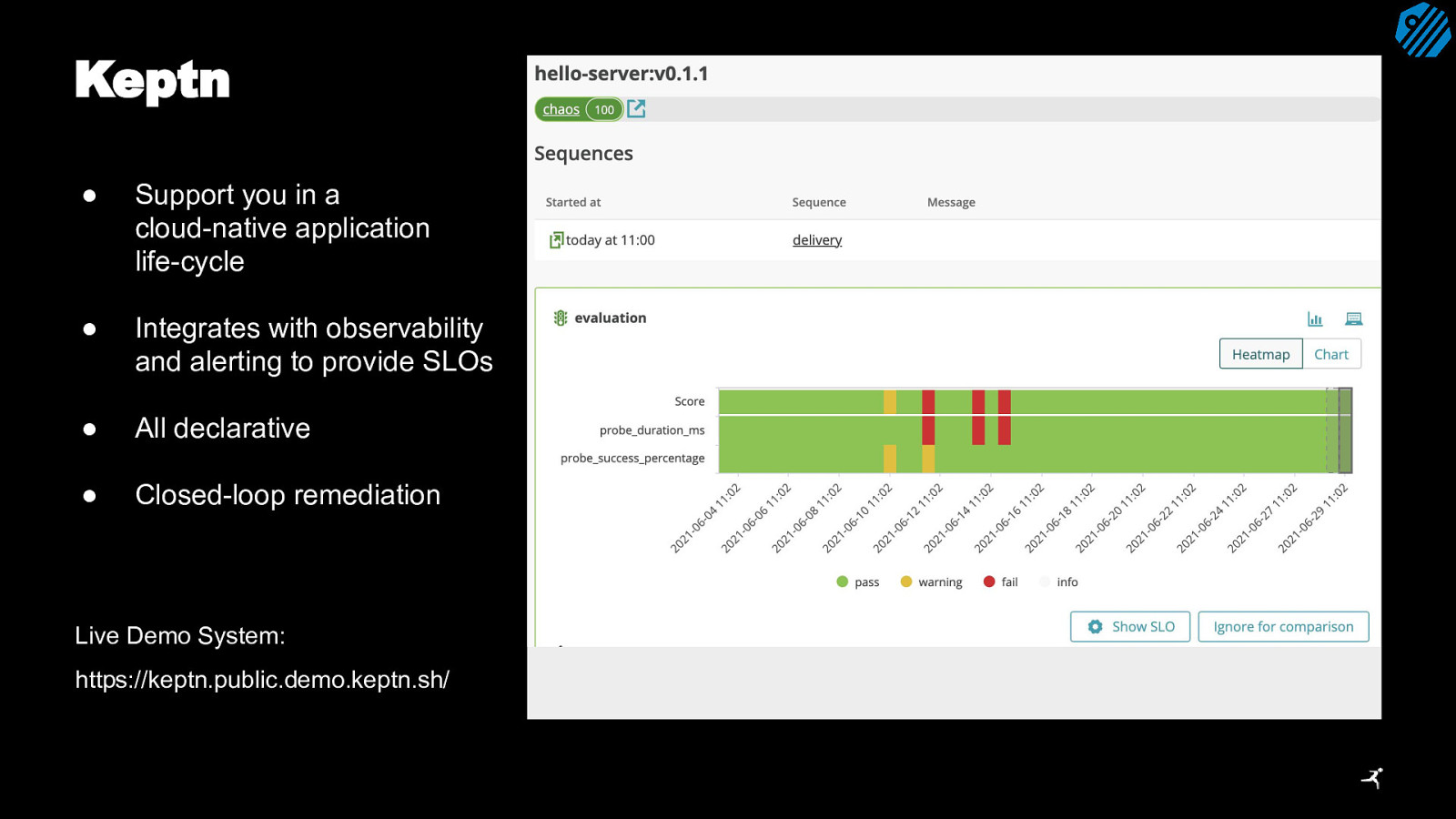

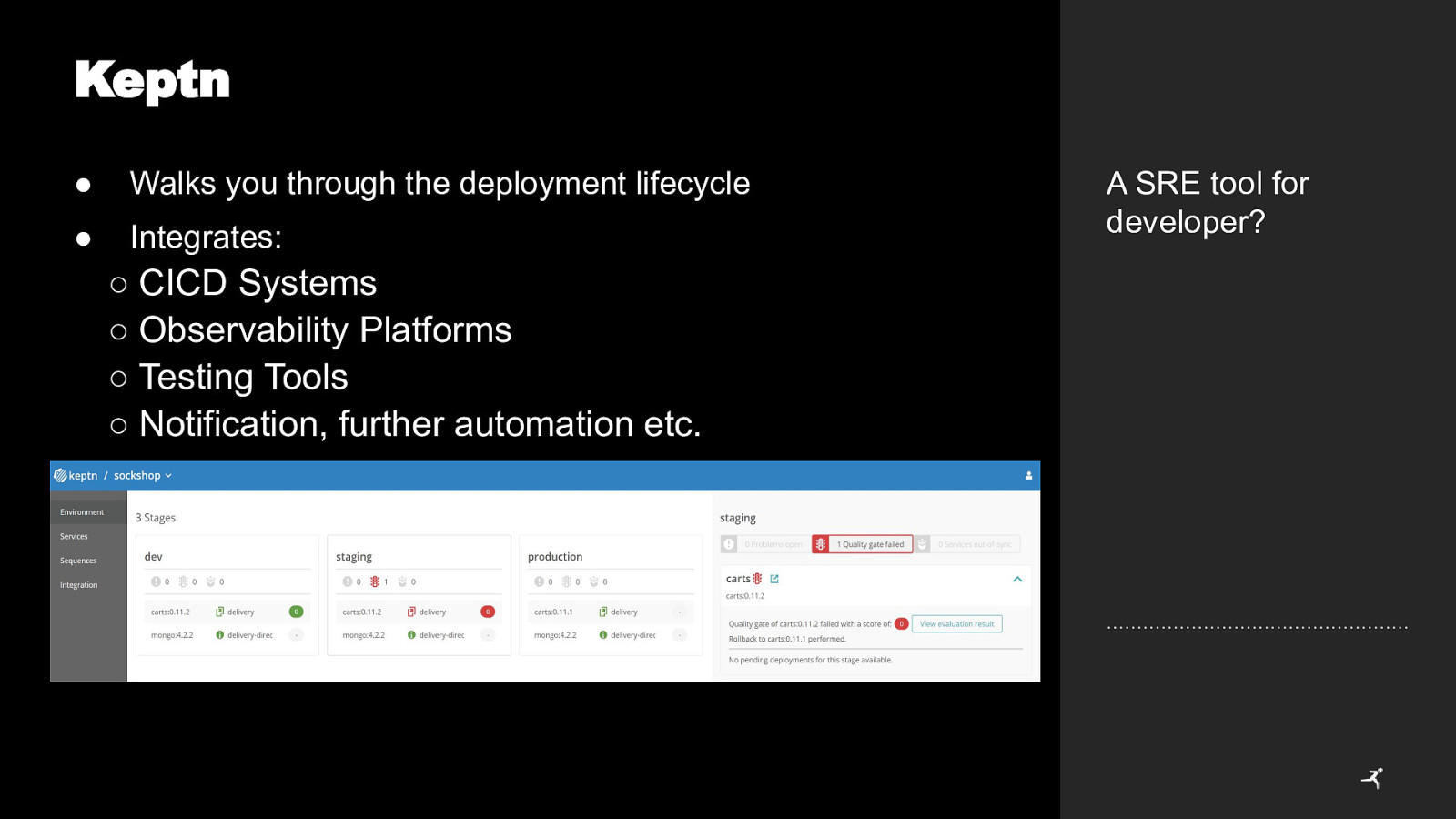

Keptn
● Supports you in a cloud-native application life-cycle
● Integrates with observability and alerting to provide SLOs

    stages:
      - name: "dev"
        deployment_strategy: "direct"
        test_strategy: "functional"
      - name: "hardening"
        deployment_strategy: "blue_green_service"
        test_strategy: "performance"
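The stage definition above can be read as a promotion pipeline: an artifact moves to the next stage only if the tests of the current stage pass. The sketch below models that reading in Python. It is not Keptn's implementation; the `Stage` class, the `promote` function and the `tests_pass` callback are hypothetical, and only the stage names and strategies come from the slide.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Stage:
    name: str
    deployment_strategy: str
    test_strategy: str


# The two stages from the slide, in promotion order.
STAGES = [
    Stage("dev", "direct", "functional"),
    Stage("hardening", "blue_green_service", "performance"),
]


def promote(stages: list[Stage], tests_pass: Callable[[Stage], bool]) -> list[str]:
    """Walk the stages in order and stop at the first stage whose tests fail.

    Returns the names of the stages the artifact passed.
    """
    passed = []
    for stage in stages:
        if not tests_pass(stage):
            break
        passed.append(stage.name)
    return passed


# Example: functional tests pass, performance tests fail.
print(promote(STAGES, lambda stage: stage.test_strategy == "functional"))
```

In a real setup the `tests_pass` decision would come from the testing and observability tools that Keptn integrates with, for example an SLO evaluation.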

Keptn
● Walks you through the deployment lifecycle
● Integrates:
  ○ CICD systems
  ○ Observability platforms
  ○ Testing tools
  ○ Notification, further automation etc.

An SRE tool for developers?

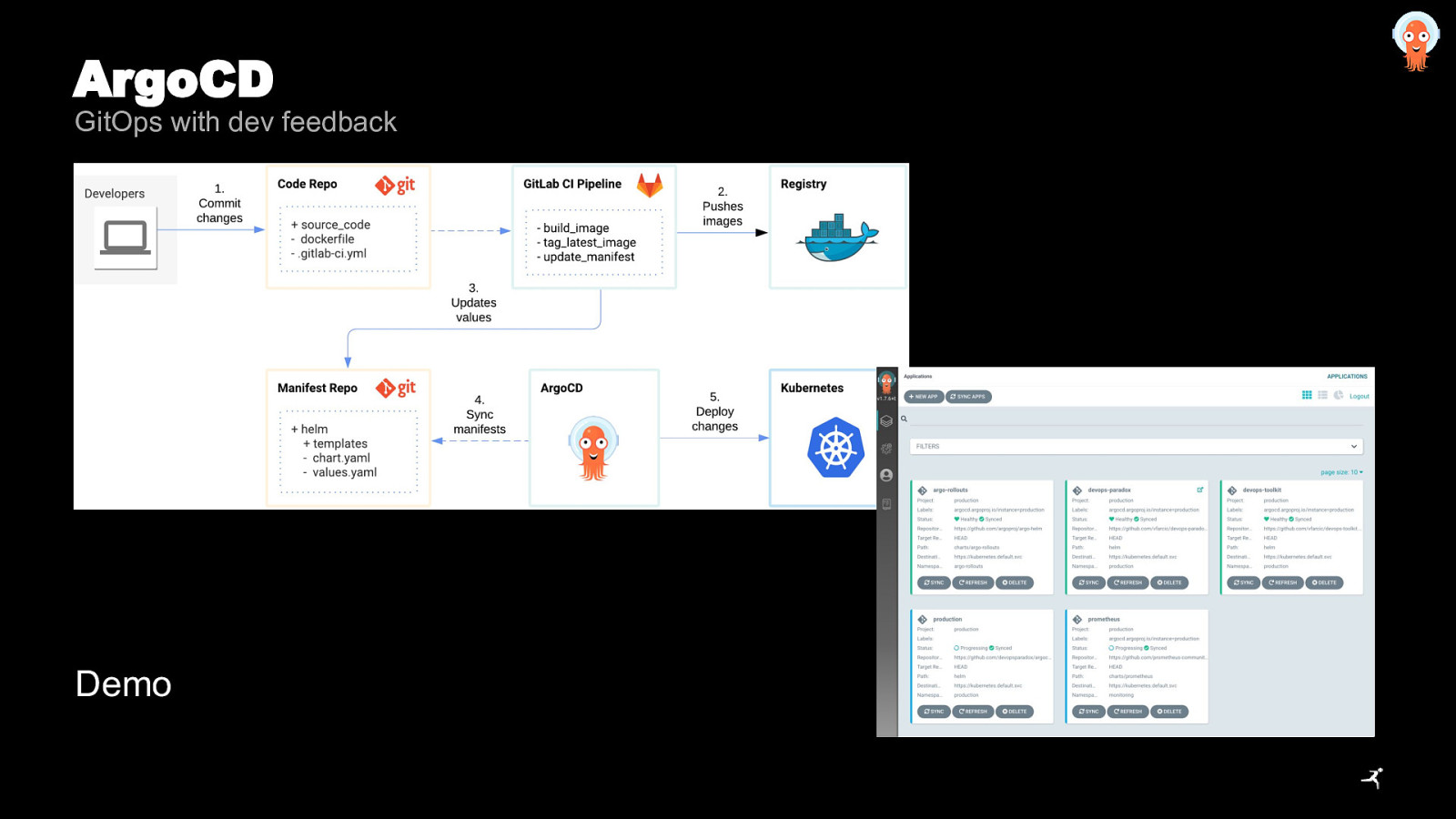

ArgoCD

GitOps with dev feedback

Demo

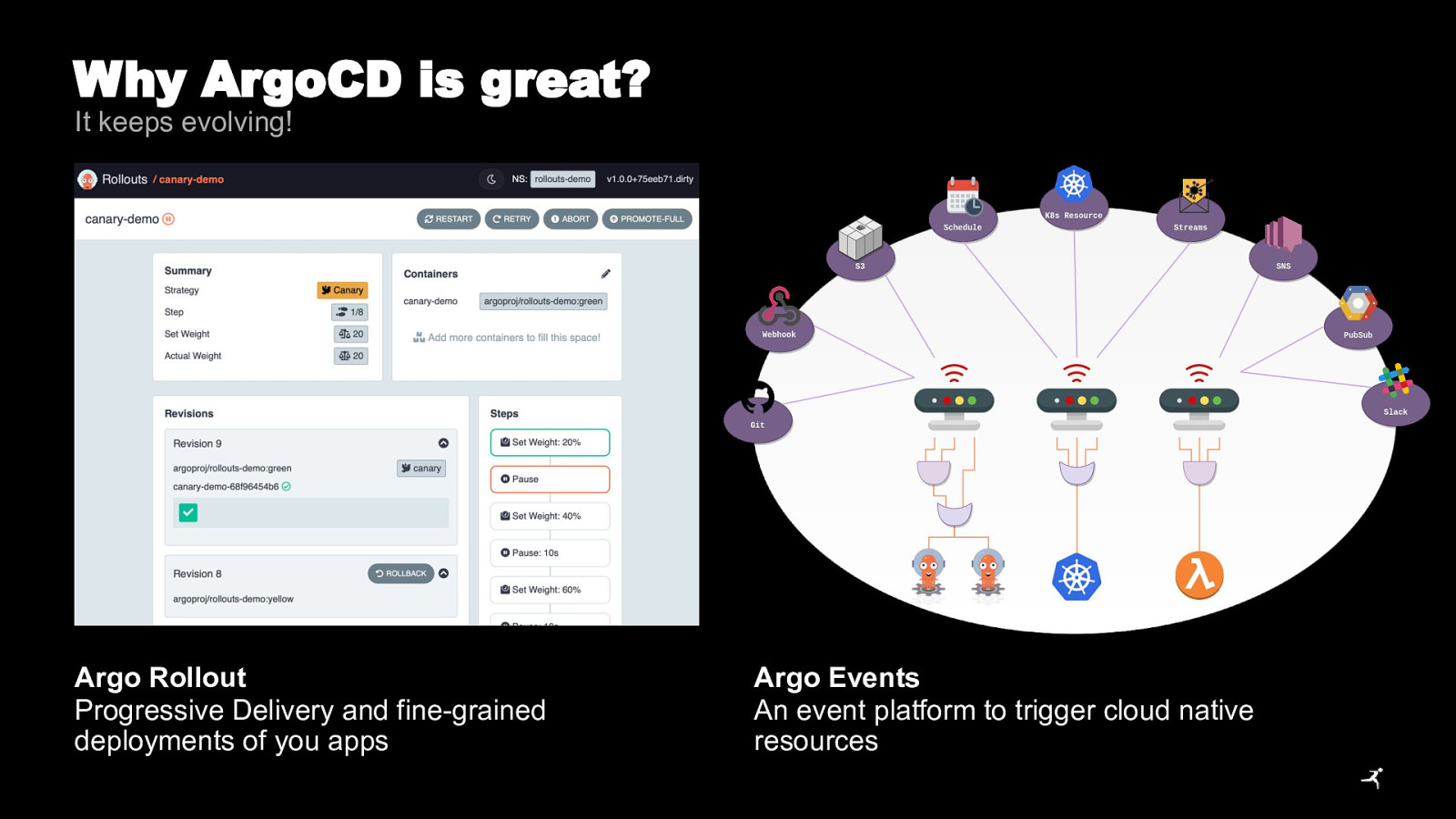

Why is ArgoCD great?

It keeps evolving!
• Argo Rollouts: Progressive delivery and fine-grained deployments of your apps
• Argo Events: An event platform to trigger cloud native resources
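Progressive delivery, in its simplest canary form, means shifting traffic to the new version step by step and rolling back as soon as a health check fails. The sketch below illustrates that idea in Python. It is not the Argo Rollouts API; the `canary_rollout` function, the step weights and the `healthy` callback are hypothetical.

```python
from typing import Callable


def canary_rollout(steps: list[int], healthy: Callable[[int], bool]) -> int:
    """Return the final share of traffic (in percent) on the new version.

    Traffic is shifted through each weight in `steps`. If the health check
    fails at any step, the rollout is aborted and traffic returns to 0.
    If every step is healthy, the new version is fully promoted.
    """
    for weight in steps:
        if not healthy(weight):
            return 0  # roll back: all traffic stays on the old version
    return 100  # fully promoted


# Example: the new version becomes unhealthy once it receives 50% of traffic.
print(canary_rollout([20, 50, 80], lambda weight: weight < 50))
```

In practice the health decision would be driven by metrics from an observability platform rather than by a fixed threshold on the weight.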

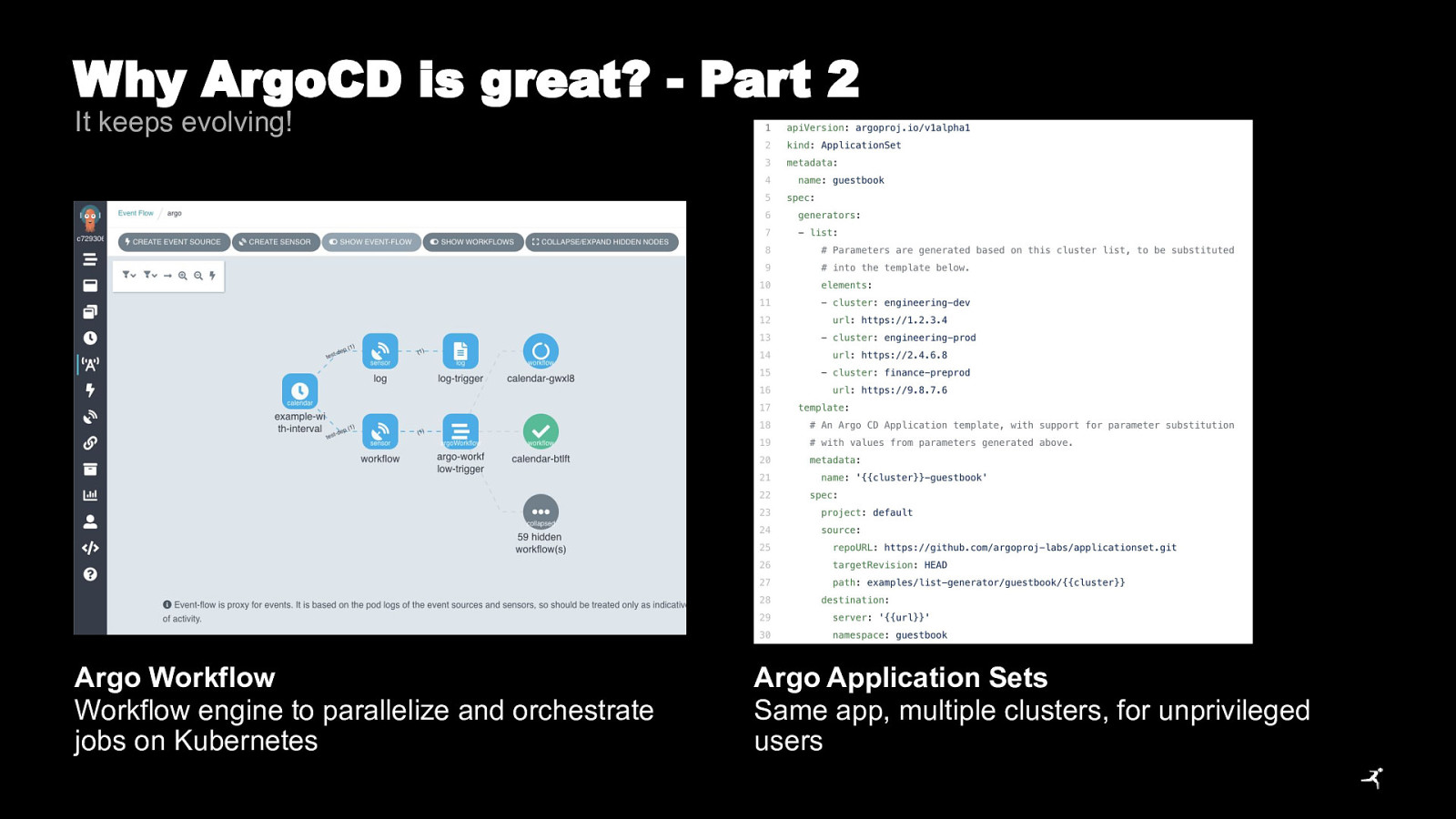

Why is ArgoCD great? - Part 2

It keeps evolving!
• Argo Workflows: Workflow engine to parallelize and orchestrate jobs on Kubernetes
• Argo Application Sets: Same app, multiple clusters, for unprivileged users
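The "same app, multiple clusters" idea can be sketched as rendering one application template once per target cluster. The Python below only illustrates the templating concept and does not use the real ApplicationSet resource format; the `render_applications` function, the template keys and the cluster names are hypothetical.

```python
def render_applications(
    template: dict[str, str], clusters: list[str]
) -> list[dict[str, str]]:
    """Render one application definition per cluster from a shared template.

    Every template value may contain the placeholder `{cluster}`.
    """
    return [
        {key: value.format(cluster=cluster) for key, value in template.items()}
        for cluster in clusters
    ]


# One template, reused for every cluster.
app_template = {
    "name": "guestbook-{cluster}",
    "destination": "{cluster}",
    "path": "apps/guestbook",
}

for app in render_applications(app_template, ["staging", "prod"]):
    print(app)
```

The design benefit is that adding a cluster means adding one entry to the list, not copying and editing another application definition.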

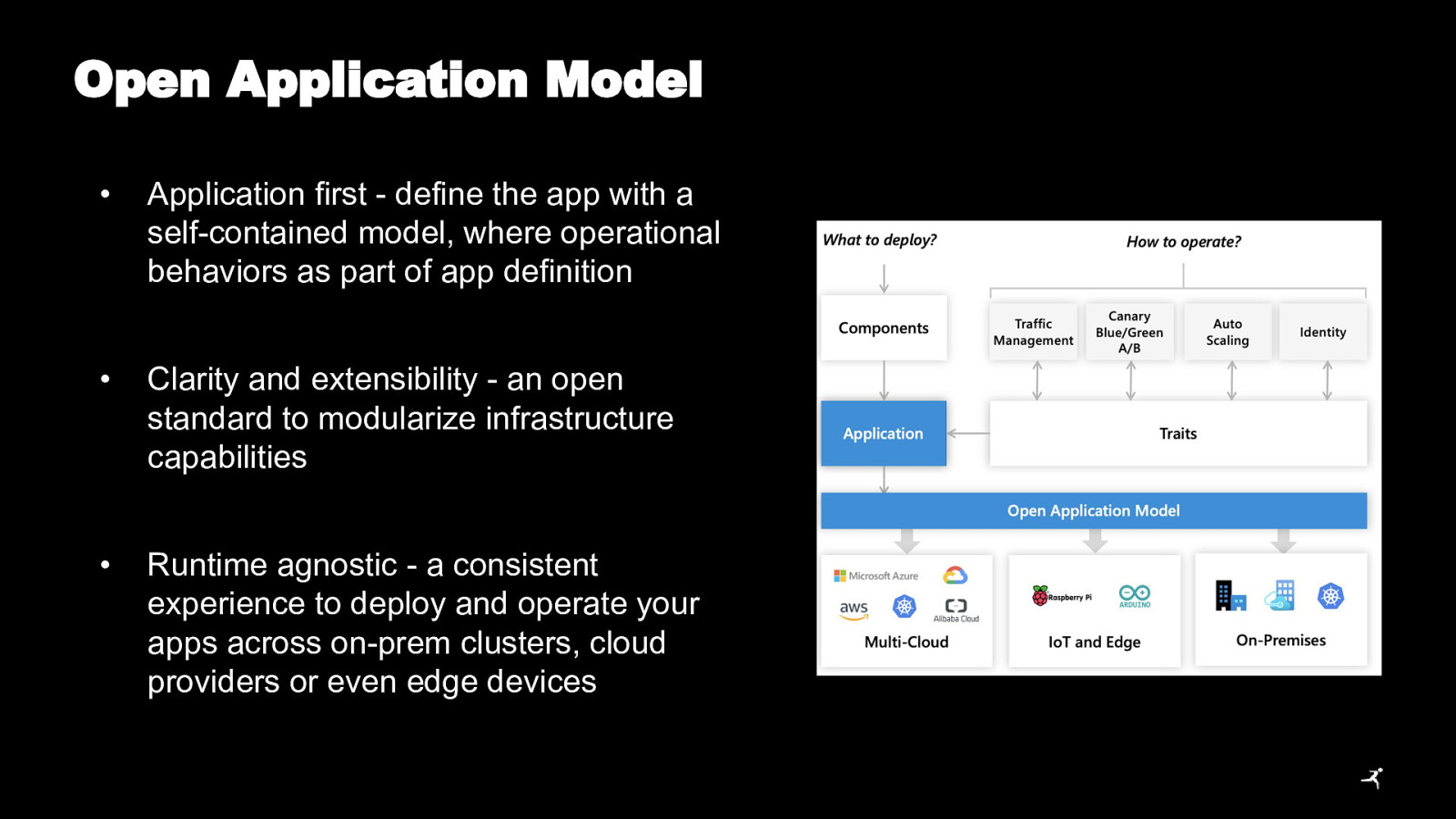

Open Application Model
• Application first - define the app with a self-contained model, where operational behaviors are part of the app definition
• Clarity and extensibility - an open standard to modularize infrastructure capabilities
• Runtime agnostic - a consistent experience to deploy and operate your apps across on-prem clusters, cloud providers or even edge devices
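"Application first" can be pictured as a data model in which operational behaviors sit inside the application definition instead of in separate, infrastructure-owned files. The sketch below expresses that in Python dataclasses. It is not the OAM specification; the class names, the trait names (`scaler`, `ingress`) and the image reference are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    name: str
    image: str
    # Operational behaviors attached directly to the component,
    # keyed by a (hypothetical) trait name.
    traits: dict[str, dict] = field(default_factory=dict)


@dataclass
class Application:
    name: str
    components: list[Component]

    def operational_view(self) -> dict[str, list[str]]:
        """List which operational behaviors each component declares."""
        return {c.name: sorted(c.traits) for c in self.components}


app = Application(
    name="shop",
    components=[
        Component(
            name="frontend",
            image="example/frontend:1.0",
            traits={
                "scaler": {"replicas": 3},
                "ingress": {"host": "shop.example.com"},
            },
        )
    ],
)
print(app.operational_view())
```

Because the model says nothing about where it runs, the same definition could in principle be handed to different runtimes, which is the "runtime agnostic" point above.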

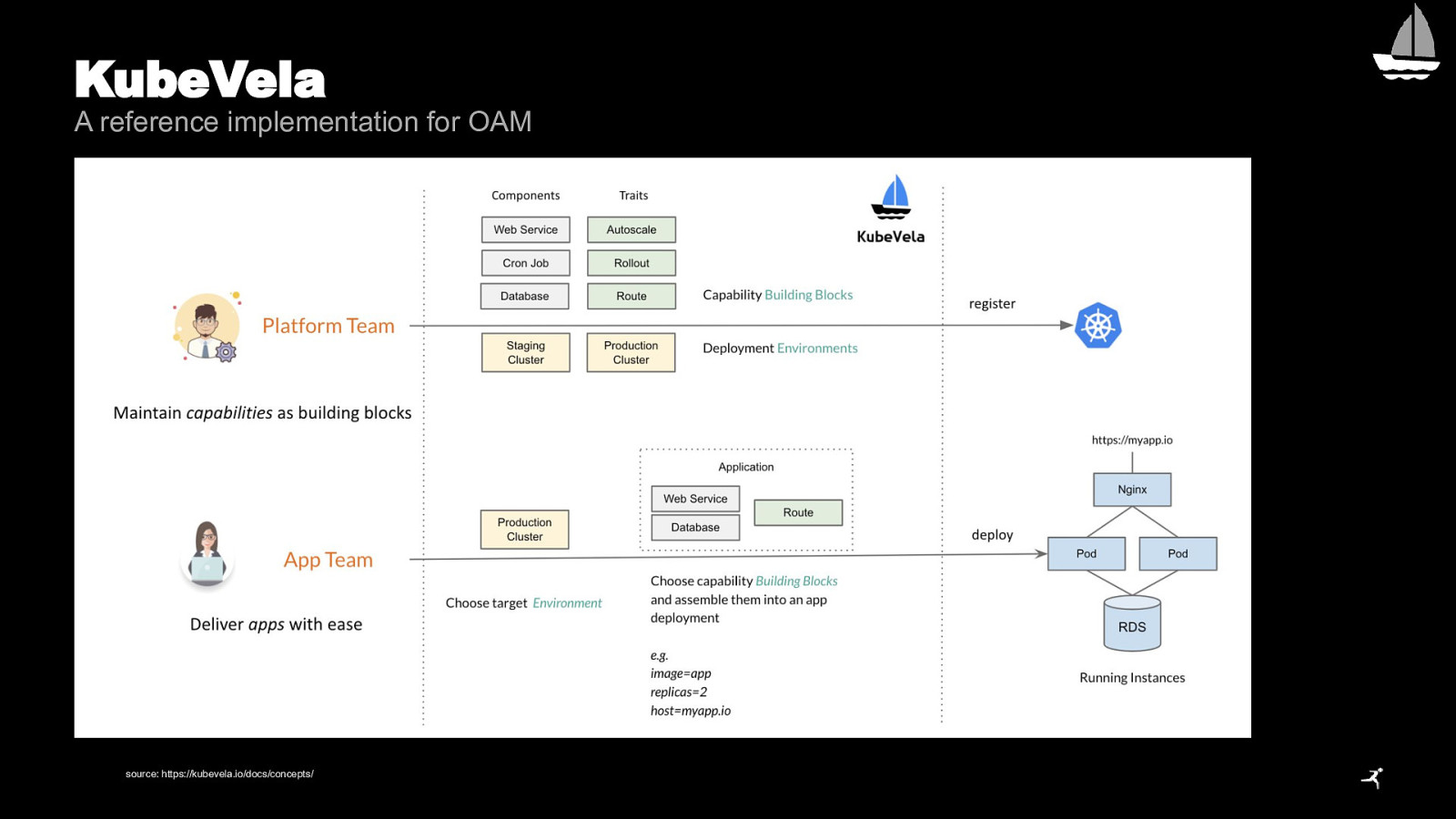

KubeVela

A reference implementation for OAM

Source: https://kubevela.io/docs/concepts/

Summary

Some things we will not get rid of:
• Deployment Manifests: How does the app need to run? How can the app run? What are the limits? When is it problematic? You have to tell!
• Infrastructure “Stacks”: Not the servers, but the container images, CSP integrations and supporting services. That’s why you have platform teams ;)
• New Roles, new Responsibilities: A 100% clear role for Dev, Ops and DevOps will not be possible. I believe Platform Teams can mediate between the roles.

Q&A Let’s connect!