Seamless Multi-Cluster Communication and Observability with Linkerd Max Körbächer - Liquid Reply

A presentation at KubeCon + CloudNativeCon Europe in May 2021 by Max Körbächer

Let’s connect! maxkoerbaecher · mkoerbi · mkorbi
Co-Founder & Manager Cloud Native Engineering @ Liquid Reply
Kubernetes SIG Release since v1.17
When I’m not bringing K8s to customers, I’m playing with drones and crafting the nativecloud.dev newsletter.

How do you like your clusters?
➔ One for all: packing everything onto a single cluster
➔ One per domain: following my organization’s structure
➔ One per cohesion/subject: placing systems/applications together that communicate heavily with each other
➔ One per application: keeping things isolated
Photo by Cameron Venti on Unsplash

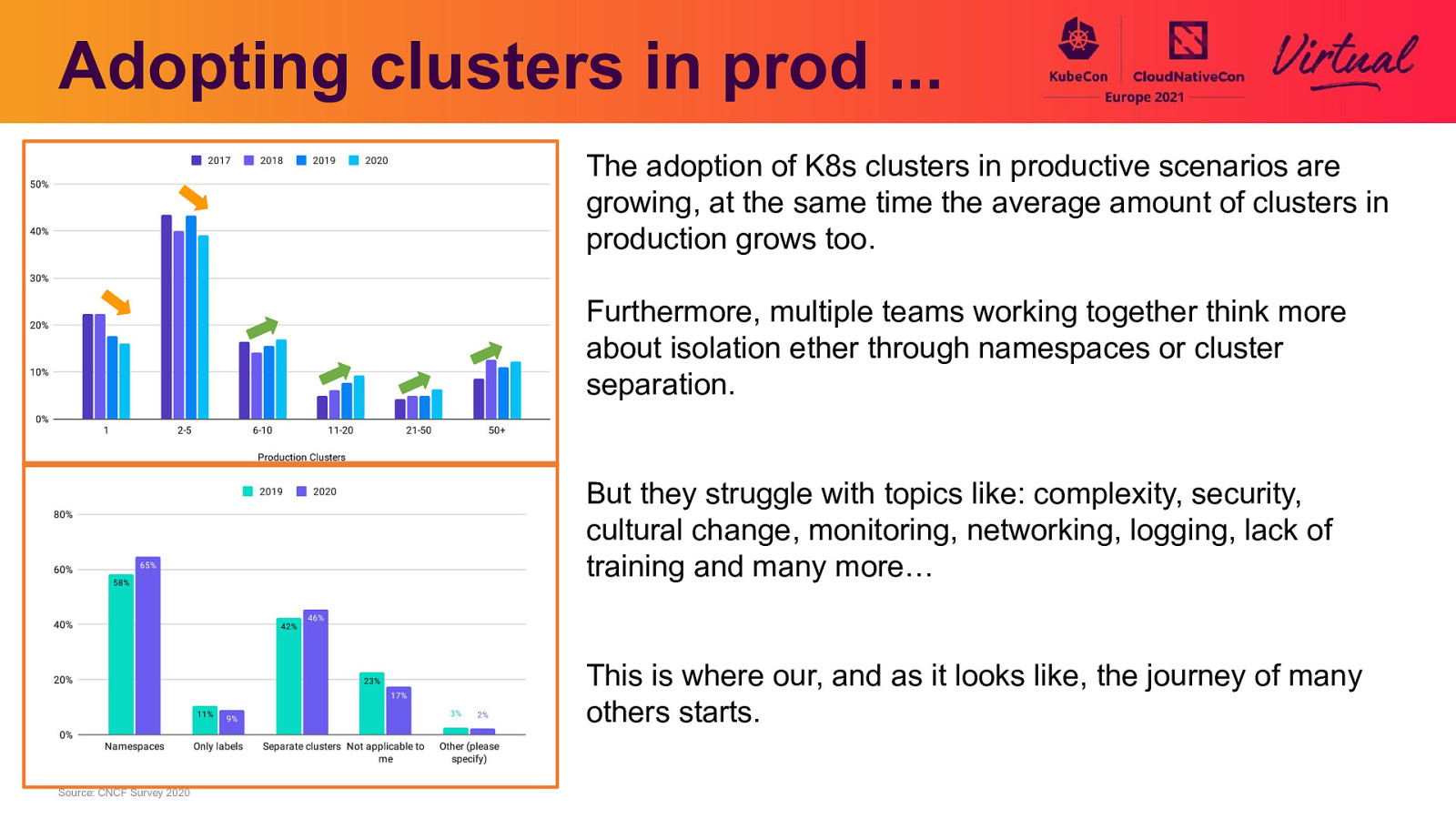

Adopting clusters in prod …
The adoption of K8s clusters in production scenarios is growing, and at the same time the average number of clusters in production is growing too. Furthermore, multiple teams working together think more about isolation, either through namespaces or through cluster separation. But they struggle with topics like complexity, security, cultural change, monitoring, networking, logging, lack of training and many more… This is where our journey starts, and, as it seems, the journey of many others too.
Source: CNCF Survey 2020

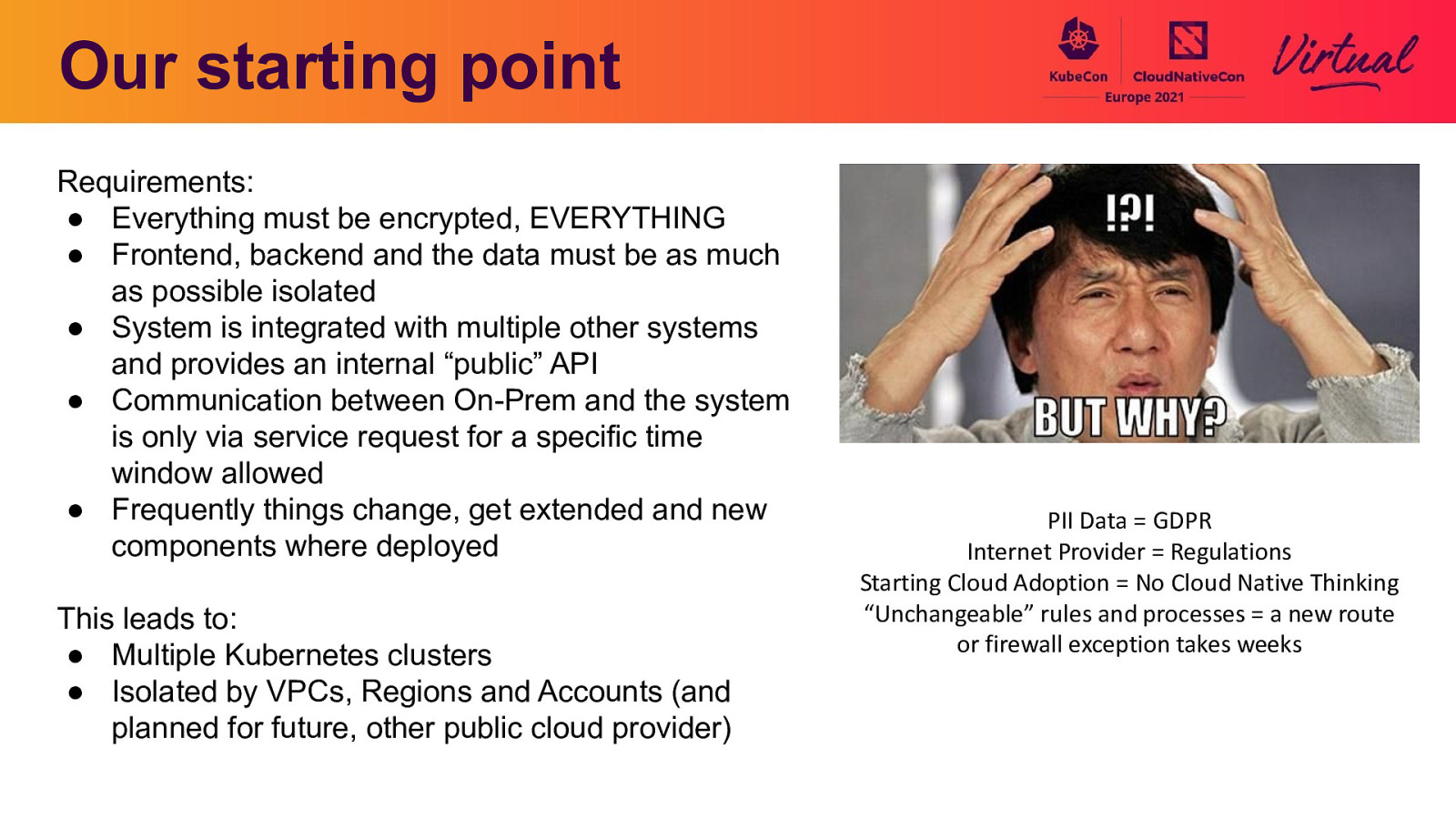

Our starting point
Requirements:
● Everything must be encrypted, EVERYTHING
● Frontend, backend and data must be isolated as much as possible
● The system is integrated with multiple other systems and provides an internal “public” API
● Communication between on-prem and the system is only allowed via a service request, for a specific time window
● Things change frequently and get extended, and new components are deployed
This leads to:
● Multiple Kubernetes clusters
● Isolated by VPCs, regions and accounts (and, planned for the future, other public cloud providers)
PII data = GDPR
Internet provider = regulations
Starting cloud adoption = no cloud native thinking
“Unchangeable” rules and processes = a new route or firewall exception takes weeks

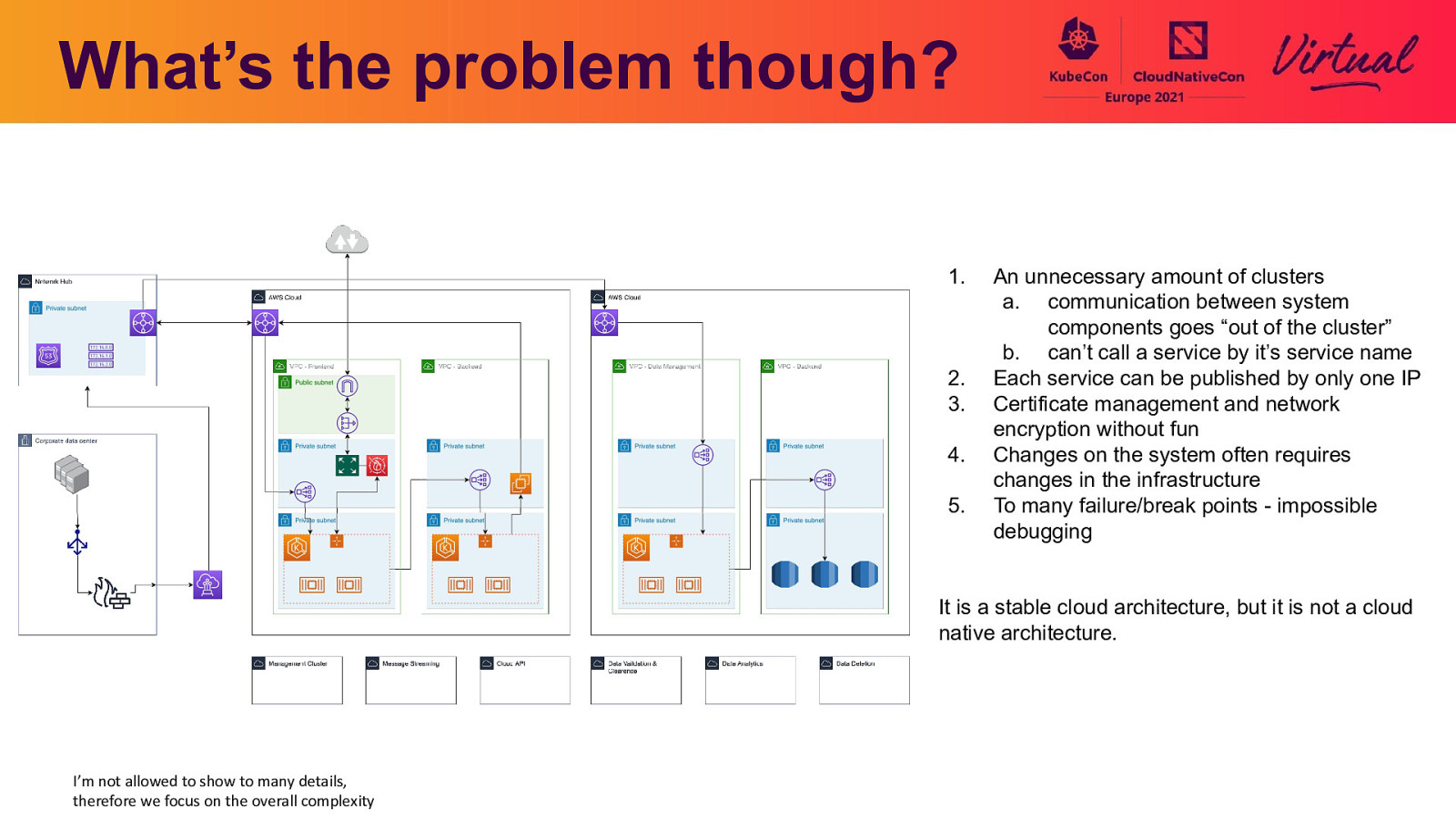

What’s the problem though?
1. An unnecessary number of clusters
   a. communication between system components goes “out of the cluster”
   b. a service can’t be called by its service name
2. Each service can be published by only one IP
3. Certificate management and network encryption are no fun
4. Changes to the system often require changes to the infrastructure
5. Too many failure/break points, which make debugging impossible
It is a stable cloud architecture, but it is not a cloud native architecture.
I’m not allowed to show too many details, so we focus on the overall complexity.

So we proposed Linkerd
Our hopes:
● Network encryption for lazy people
   ○ everything just gets encrypted
● Communication visibility
   ○ Where do my things break?
● With service mirroring we can talk natively to other clusters
   ○ fewer actions needed on the infrastructure

Demo
○ For the demo I recreated a similar setup:
   ■ Local: kind cluster - frontend
   ■ West: EKS cluster - eu-west-1 - backend
   ■ East: EKS cluster - eu-west-3 - data management/DB

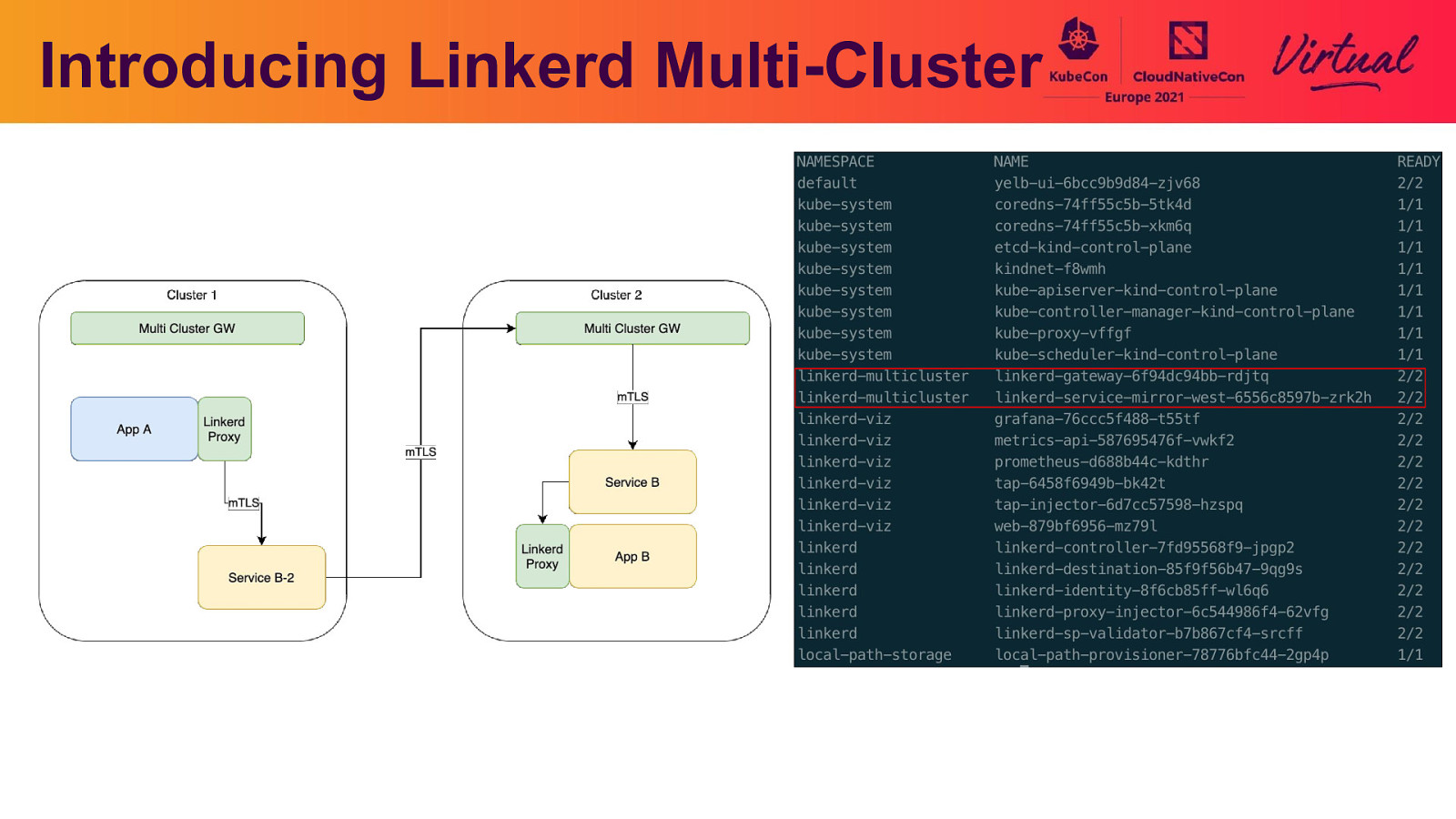

Introducing Linkerd Multi-Cluster

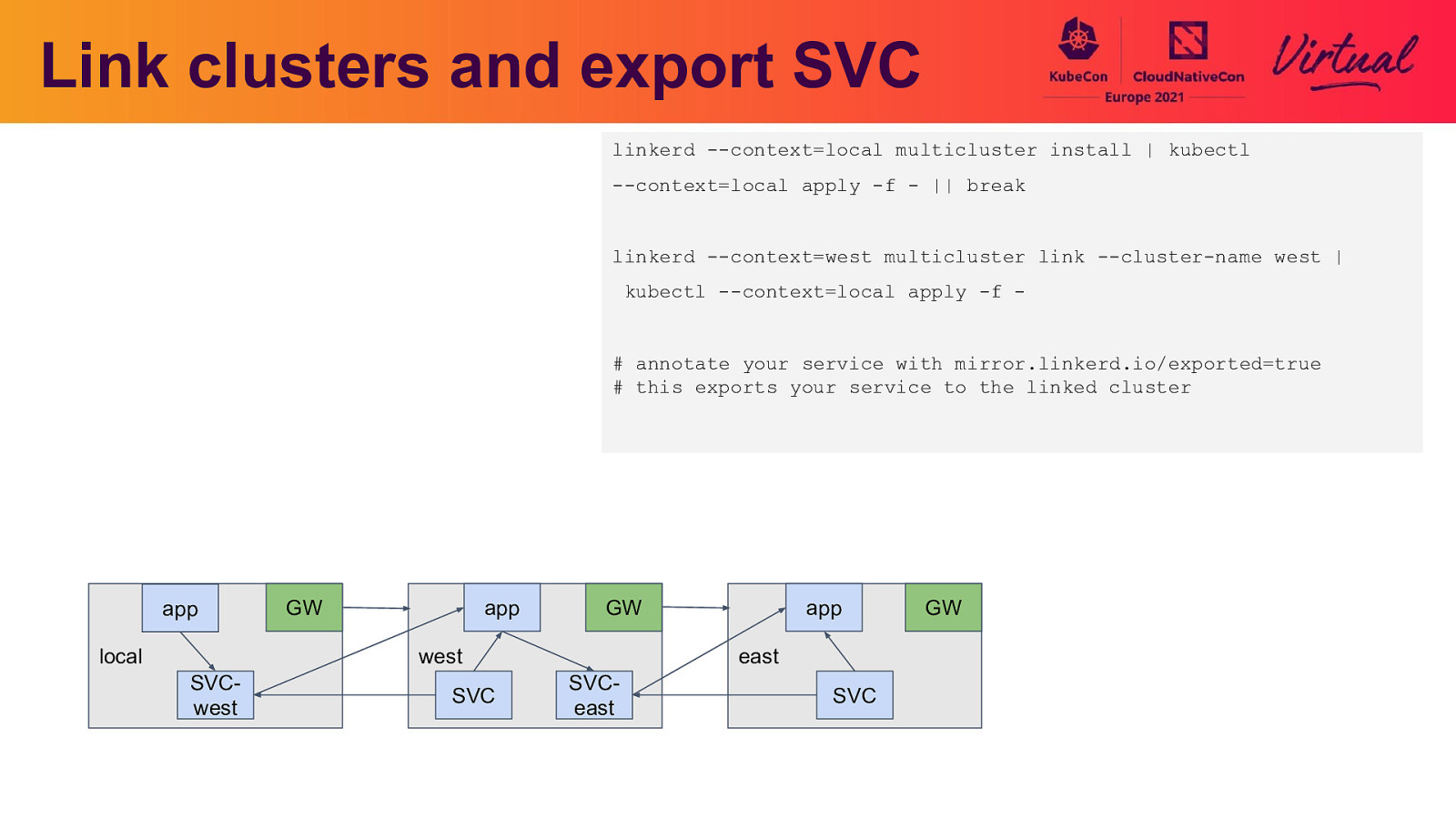

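Link clusters and export SVC

```shell
# Install the multicluster components into the local cluster
linkerd --context=local multicluster install | kubectl --context=local apply -f -
# Link west into local: local then mirrors the services exported from west
linkerd --context=west multicluster link --cluster-name west | kubectl --context=local apply -f -
```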
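The demo uses three clusters chained local -> west -> east, so the two pipelines above have to be repeated per cluster and per link. A small Python sketch can generate them for a whole chain, assuming (this is our assumption, not shown on the slide) that `multicluster install` runs on every cluster and that each cluster links only the next one in the chain. The helper names are hypothetical; the sketch only builds command strings and never calls the CLI.

```python
def install_command(ctx: str) -> str:
    """Pipeline that installs the Linkerd multicluster components into one cluster."""
    return f"linkerd --context={ctx} multicluster install | kubectl --context={ctx} apply -f -"


def link_command(source: str, target: str) -> str:
    """Pipeline that links `target` into `source`, so `source` mirrors target's exported services."""
    return (
        f"linkerd --context={target} multicluster link --cluster-name {target}"
        f" | kubectl --context={source} apply -f -"
    )


def chain_commands(chain: list[str]) -> list[str]:
    """All pipelines for a one-directional chain such as local -> west -> east.

    Assumption: every cluster gets the multicluster components, and each
    cluster links only its direct successor in the chain.
    """
    commands = [install_command(ctx) for ctx in chain]
    commands += [link_command(src, dst) for src, dst in zip(chain, chain[1:])]
    return commands


if __name__ == "__main__":
    for cmd in chain_commands(["local", "west", "east"]):
        print(cmd)
```

Keeping the link direction explicit in `link_command` helps avoid the most common mistake: the `link` output is generated against the target cluster but applied to the source cluster.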

Diagram: local, west and east clusters, each with an app and a gateway (GW), plus services (SVC) and their mirrors (SVCwest, SVCeast).

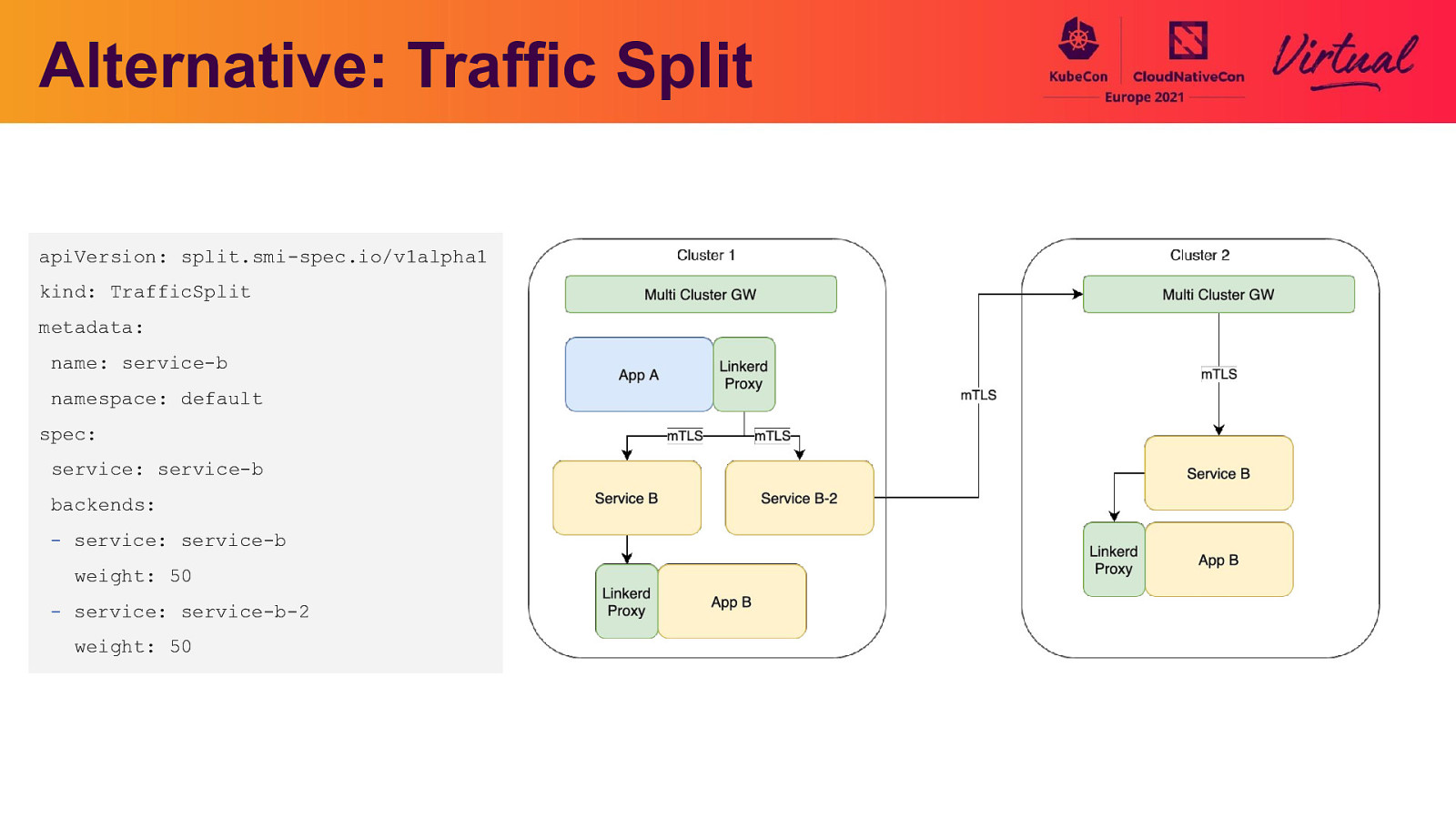

Alternative: Traffic Split

```yaml
apiVersion: split.smi-spec.io/v1alpha1
kind: TrafficSplit
metadata:
  name: service-b
  namespace: default
spec:
  service: service-b
  backends:
  - service: service-b
    weight: 50
  - service: service-b-2
    weight: 50
```
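To make the weights concrete: each request goes to one backend with a probability proportional to its weight, so the 50/50 split above sends roughly half of the traffic for `service-b` to `service-b-2`. The Python sketch below illustrates weighted random selection in general; it is not Linkerd's proxy implementation, and `pick_backend` and `simulate` are hypothetical helpers.

```python
import random
from collections import Counter

# Backends and weights taken from the TrafficSplit above (50/50).
BACKENDS = {"service-b": 50, "service-b-2": 50}


def pick_backend(backends: dict[str, int], rng: random.Random) -> str:
    """Pick one backend with probability proportional to its weight."""
    names = list(backends)
    weights = [backends[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


def simulate(backends: dict[str, int], n_requests: int, seed: int = 0) -> Counter:
    """Count how many of n_requests each backend would receive (seeded for repeatability)."""
    rng = random.Random(seed)
    return Counter(pick_backend(backends, rng) for _ in range(n_requests))


if __name__ == "__main__":
    n = 10_000
    counts = simulate(BACKENDS, n)
    for name, count in sorted(counts.items()):
        print(f"{name}: {count / n:.1%}")
```

Changing the weights (for example to 100/0) shifts traffic between the backends without touching the callers, which is why a TrafficSplit can be used to move traffic onto a mirrored service.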

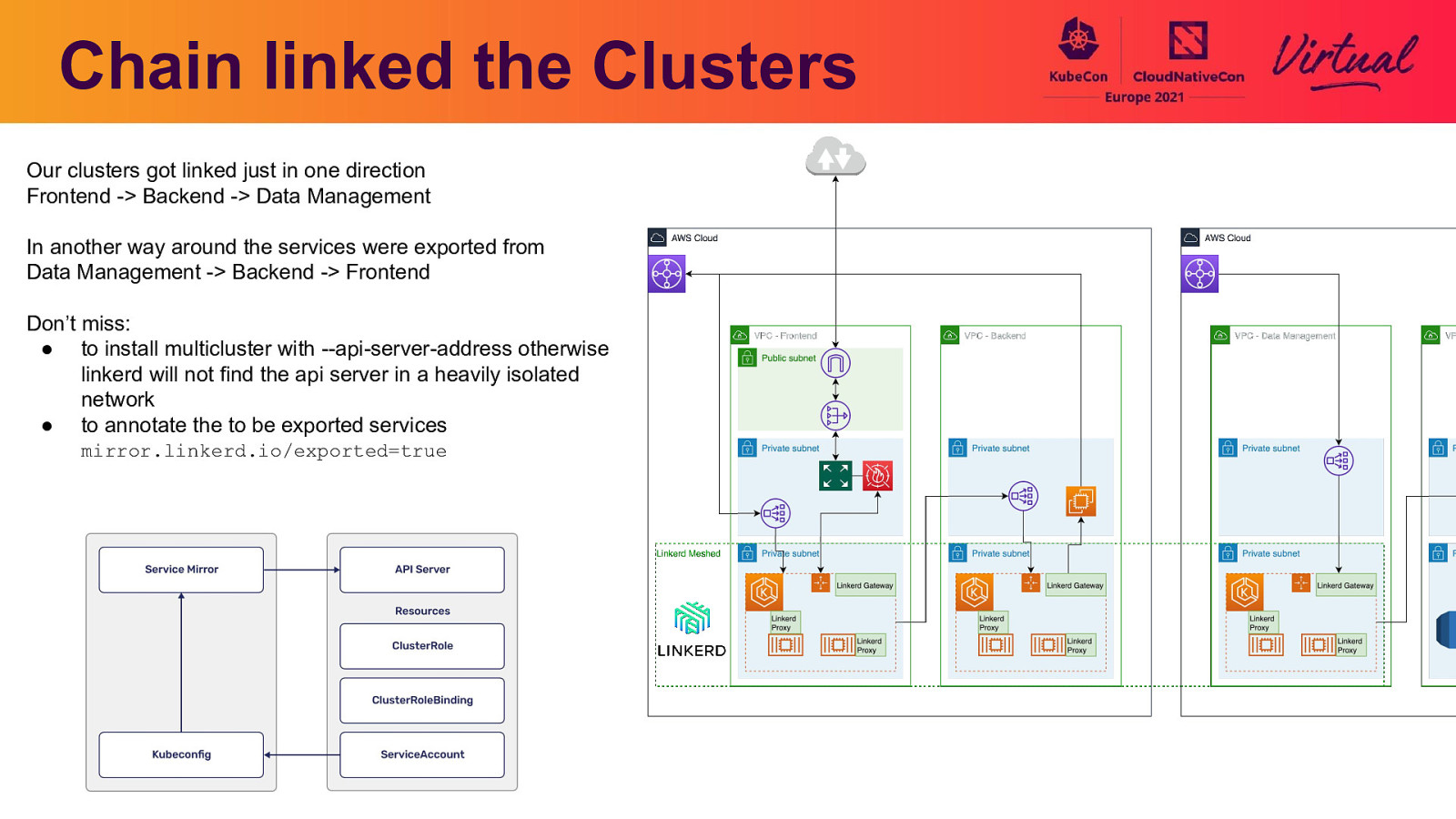

Chain-linked the clusters
Our clusters were linked in one direction only: Frontend -> Backend -> Data Management.
In the other direction, the services were exported: Data Management -> Backend -> Frontend.
Don’t miss:
● passing `--api-server-address` when linking the clusters (`linkerd multicluster link`); otherwise Linkerd will not find the API server in a heavily isolated network
● labeling the services to be exported with `mirror.linkerd.io/exported=true`
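The export label and the naming of mirrored services can be modelled in a few lines. This Python sketch assumes the Linkerd convention that a service `foo` exported from a cluster linked as `east` shows up in the linking cluster as `foo-east`, in the same namespace. The `Service` class, the `mirror` function and the service names `db-api`/`db-internal` are hypothetical stand-ins, not Kubernetes client objects.

```python
from dataclasses import dataclass, field

# Label that marks a Service for export to linked clusters.
EXPORT_LABEL = "mirror.linkerd.io/exported"


@dataclass
class Service:
    name: str
    namespace: str
    labels: dict[str, str] = field(default_factory=dict)


def mirror(remote_services: list[Service], cluster_name: str) -> list[Service]:
    """Services a linking cluster would see, mirrored from the cluster `cluster_name`.

    Only services labeled mirror.linkerd.io/exported=true are mirrored; the
    mirror keeps the namespace and appends -<cluster_name> to the name.
    """
    return [
        Service(name=f"{svc.name}-{cluster_name}", namespace=svc.namespace)
        for svc in remote_services
        if svc.labels.get(EXPORT_LABEL) == "true"
    ]


if __name__ == "__main__":
    east = [
        Service("db-api", "data", {EXPORT_LABEL: "true"}),
        Service("db-internal", "data"),  # not labeled, so it stays private to east
    ]
    for svc in mirror(east, "east"):
        print(f"{svc.namespace}/{svc.name}")
```

Forgetting the label is silent: nothing fails, the service simply never appears on the other side, which is why it is on the "don't miss" list.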

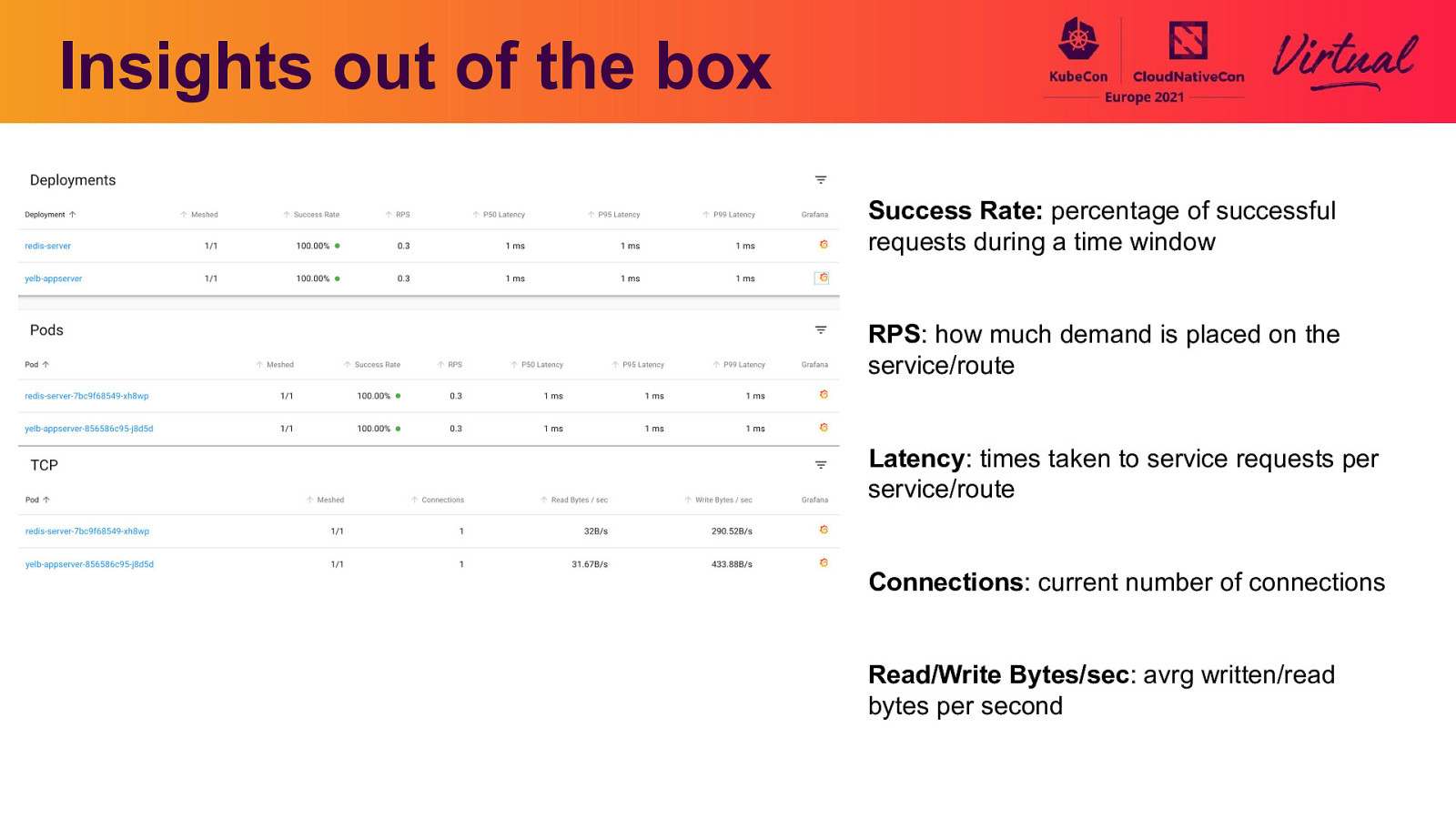

Insights out of the box
● Success Rate: percentage of successful requests during a time window
● RPS: how much demand is placed on the service/route (requests per second)
● Latency: time taken to serve requests, per service/route
● Connections: current number of connections
● Read/Write Bytes/sec: average bytes written/read per second
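These numbers are derived from the requests the proxies observe. A rough Python sketch of how success rate, RPS and p50/p95/p99 latency follow from per-request records in one time window; the `Request` record and `golden_metrics` helper are hypothetical, and the nearest-rank percentile is just one percentile definition (Linkerd computes its latency percentiles from Prometheus histograms, which this sketch does not reproduce).

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    latency_ms: float
    success: bool


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of the values <= it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def golden_metrics(requests: list[Request], window_s: float) -> dict[str, float]:
    """Success rate, requests per second and p50/p95/p99 latency for one window."""
    if not requests:
        # Success rate and latency are undefined without any requests.
        return {"rps": 0.0}
    latencies = [r.latency_ms for r in requests]
    return {
        "success_rate": sum(r.success for r in requests) / len(requests),
        "rps": len(requests) / window_s,
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "p99_ms": percentile(latencies, 99),
    }


if __name__ == "__main__":
    # Ten requests in a 10 s window, 10..100 ms, the slowest one failing.
    reqs = [Request(latency_ms=10.0 * i, success=(i != 10)) for i in range(1, 11)]
    print(golden_metrics(reqs, window_s=10.0))
```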

The road to tracing… is very long, and stony…

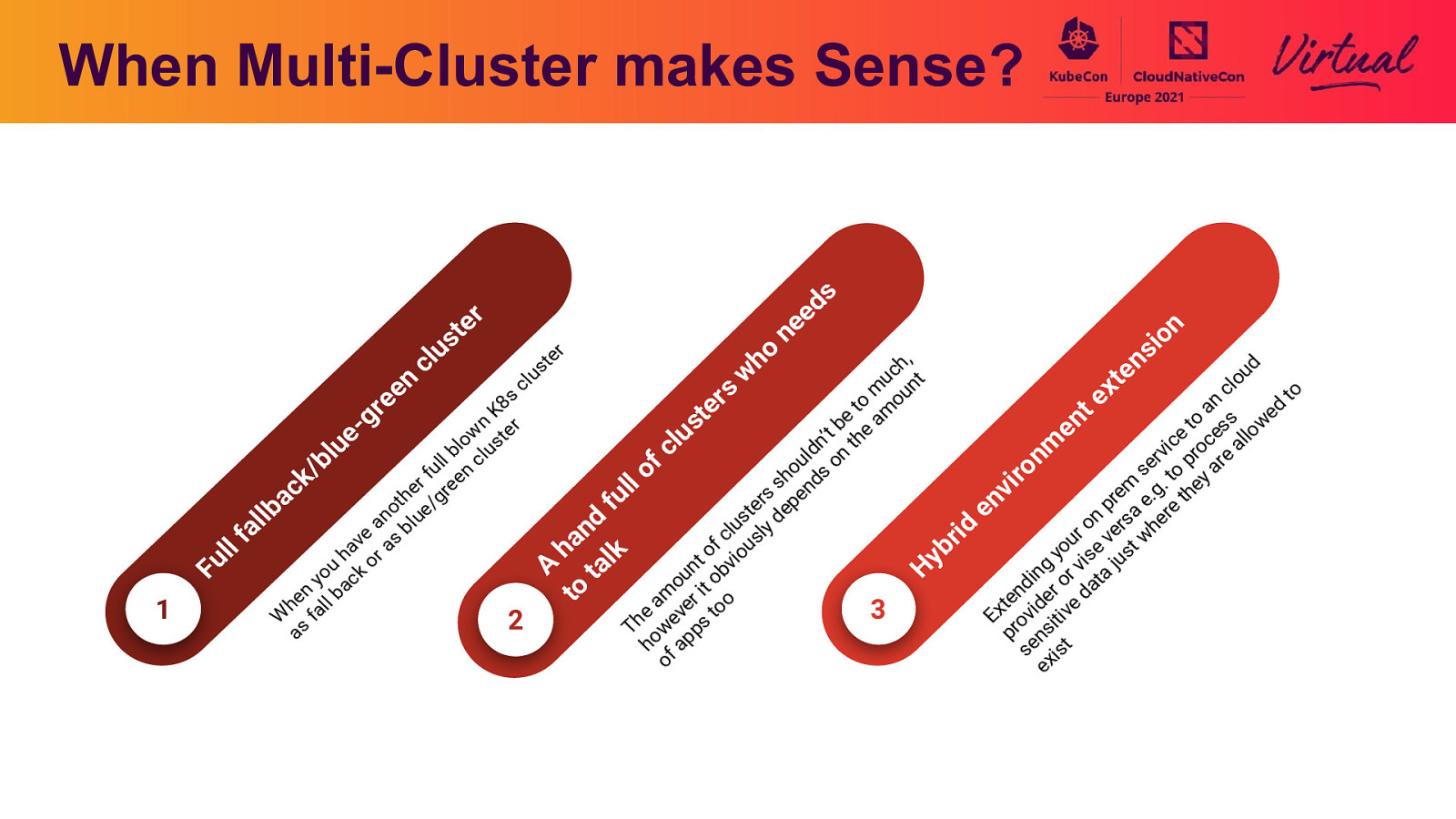

When does Multi-Cluster make sense?

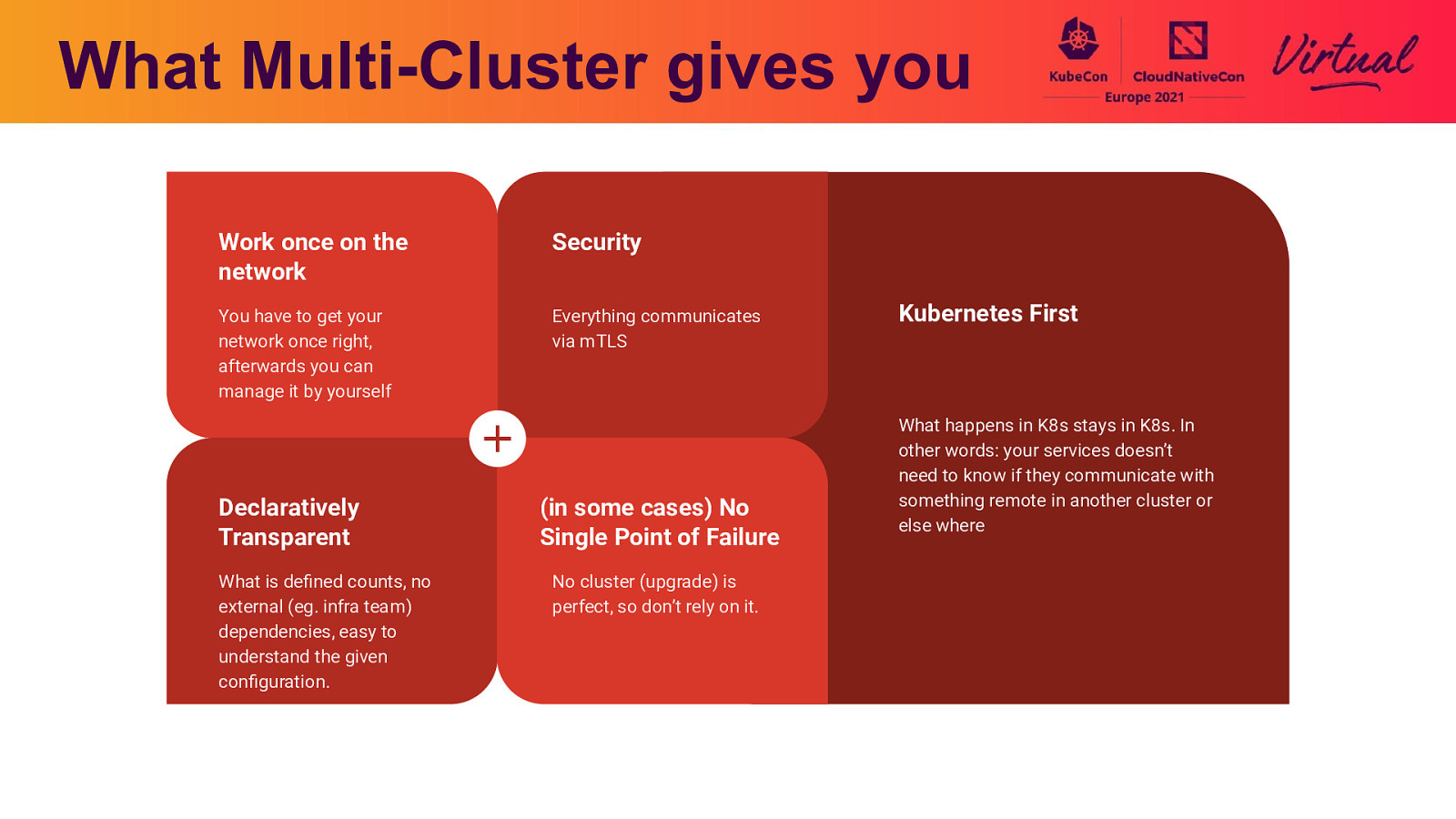

What Multi-Cluster gives you
● Work once on the network: you have to get your network right once; afterwards you can manage it yourself
● Security: everything communicates via mTLS
● Declaratively transparent: what is defined counts, there are no external (e.g. infra team) dependencies, and the given configuration is easy to understand
● No single point of failure (in some cases): no cluster (upgrade) is perfect, so don’t rely on a single one
● Kubernetes first: what happens in K8s stays in K8s. In other words, your services don’t need to know whether they communicate with something remote in another cluster or elsewhere

Lessons we learned
1. Existing apps need to adjust the service endpoint they call, e.g. from kubecon-svc to kubecon-svc-theOtherCluster
2. Linkerd upgrades can be challenging: just have a look at the last two versions, which each have their own upgrade guide
3. Sometimes a SVC didn’t get updated after rolling out a new version; however, we couldn’t reproduce this
4. Service meshes are a rabbit hole, even when they are easy to deploy: think twice
5. Don’t let your service names get too long…
   a. a-longer-service-name-eu-west-1-domain-department-squad-cost-center-prod is still ok …
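Lessons 1 and 5 are connected: a mirrored name is the original service name plus `-<cluster-name>`, and Kubernetes Service names must be valid DNS-1035 labels (lowercase alphanumerics and `-`, starting with a letter, ending with an alphanumeric character, at most 63 characters). A small Python check, assuming that naming pattern, can flag names that only become invalid once mirrored. `is_dns1035_label`, `mirrored_name` and the example name `payments-…` are hypothetical, not part of Linkerd.

```python
import re

# Kubernetes Service names must be DNS-1035 labels.
DNS1035_LABEL = re.compile(r"[a-z]([-a-z0-9]*[a-z0-9])?")
MAX_LABEL_LENGTH = 63


def is_dns1035_label(name: str) -> bool:
    """True if `name` is a valid DNS-1035 label, and therefore a valid Service name."""
    return len(name) <= MAX_LABEL_LENGTH and DNS1035_LABEL.fullmatch(name) is not None


def mirrored_name(service: str, cluster_name: str) -> str:
    """Name the mirrored service gets in the linking cluster (assumed <service>-<cluster> pattern)."""
    return f"{service}-{cluster_name}"


if __name__ == "__main__":
    # A 59-character name is fine locally but exceeds 63 characters once "-west" is appended.
    for svc in ["kubecon-svc", "payments-" + "x" * 50]:
        name = mirrored_name(svc, "west")
        print(f"{len(svc):>2} -> {len(name):>2} chars, valid mirror: {is_dns1035_label(name)}")
```

Running such a check in CI against the cluster names you plan to link catches the problem before a mirror silently fails to appear.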

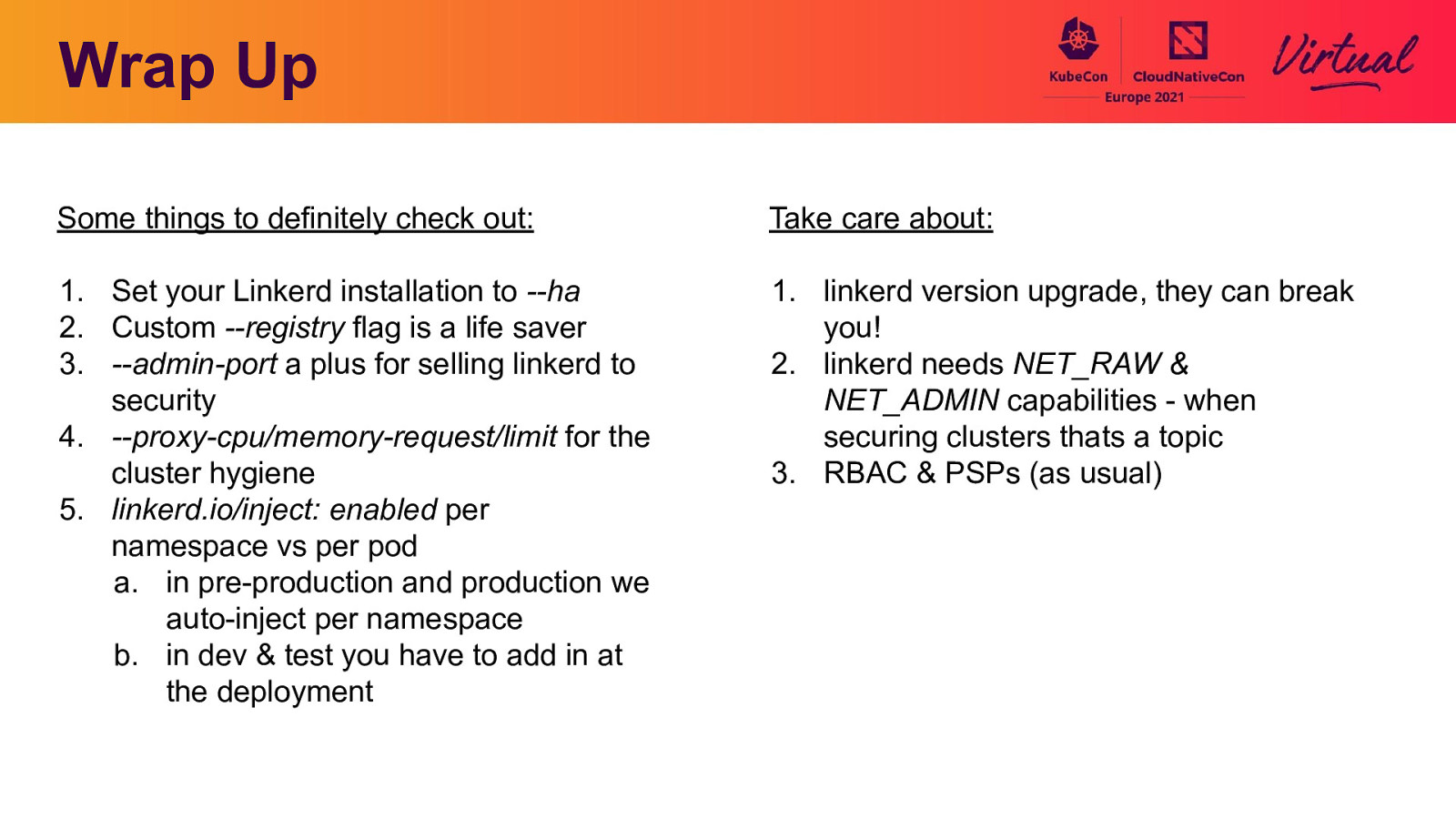

Wrap Up
Some things you should definitely check out:
Things to take care of:

Thank you!