Building an E2E Analytics Pipeline with PyFlink

Marta Paes (@morsapaes), Developer Advocate

A presentation at Data Science UA in November 2020 by Marta Paes

About Ververica

● Original creators of Apache Flink®
● Enterprise stream processing with Ververica Platform
● Part of Alibaba Group

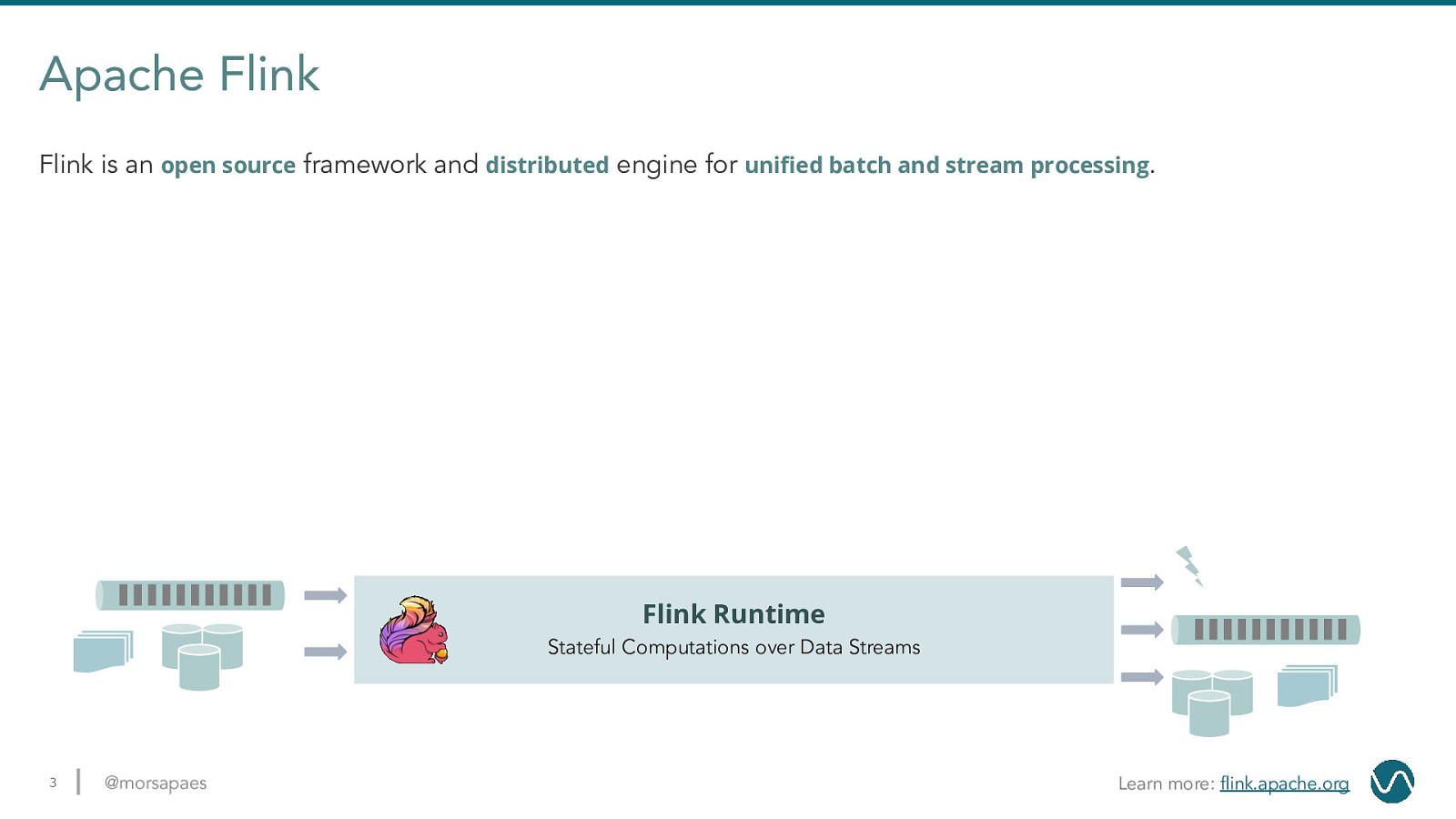

Apache Flink

Flink is an open source framework and distributed engine for unified batch and stream processing.

Flink Runtime: Stateful Computations over Data Streams

Learn more: flink.apache.org

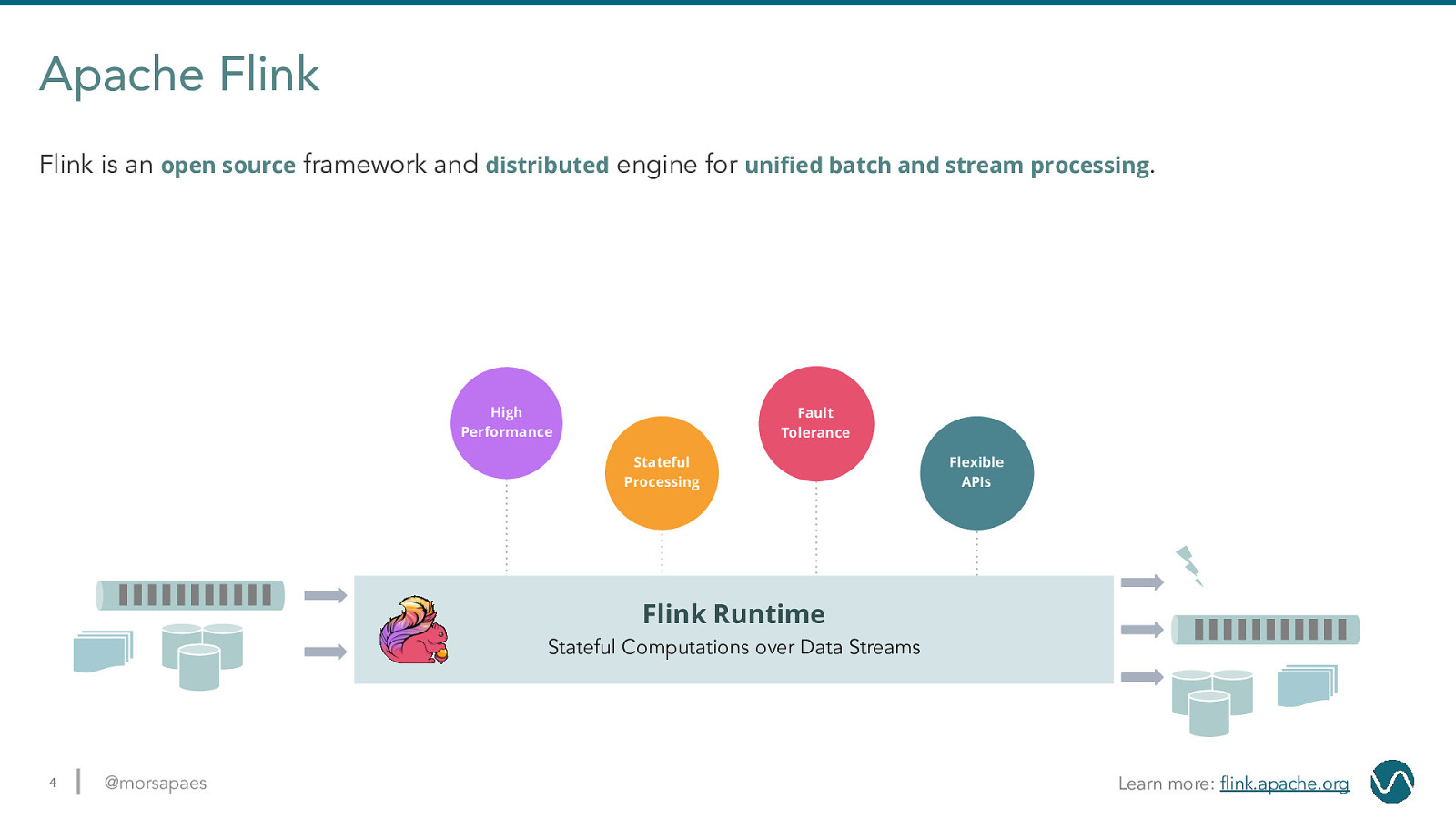

Apache Flink

Flink is an open source framework and distributed engine for unified batch and stream processing.

● High Performance
● Fault Tolerance
● Stateful Processing
● Flexible APIs

Flink Runtime: Stateful Computations over Data Streams

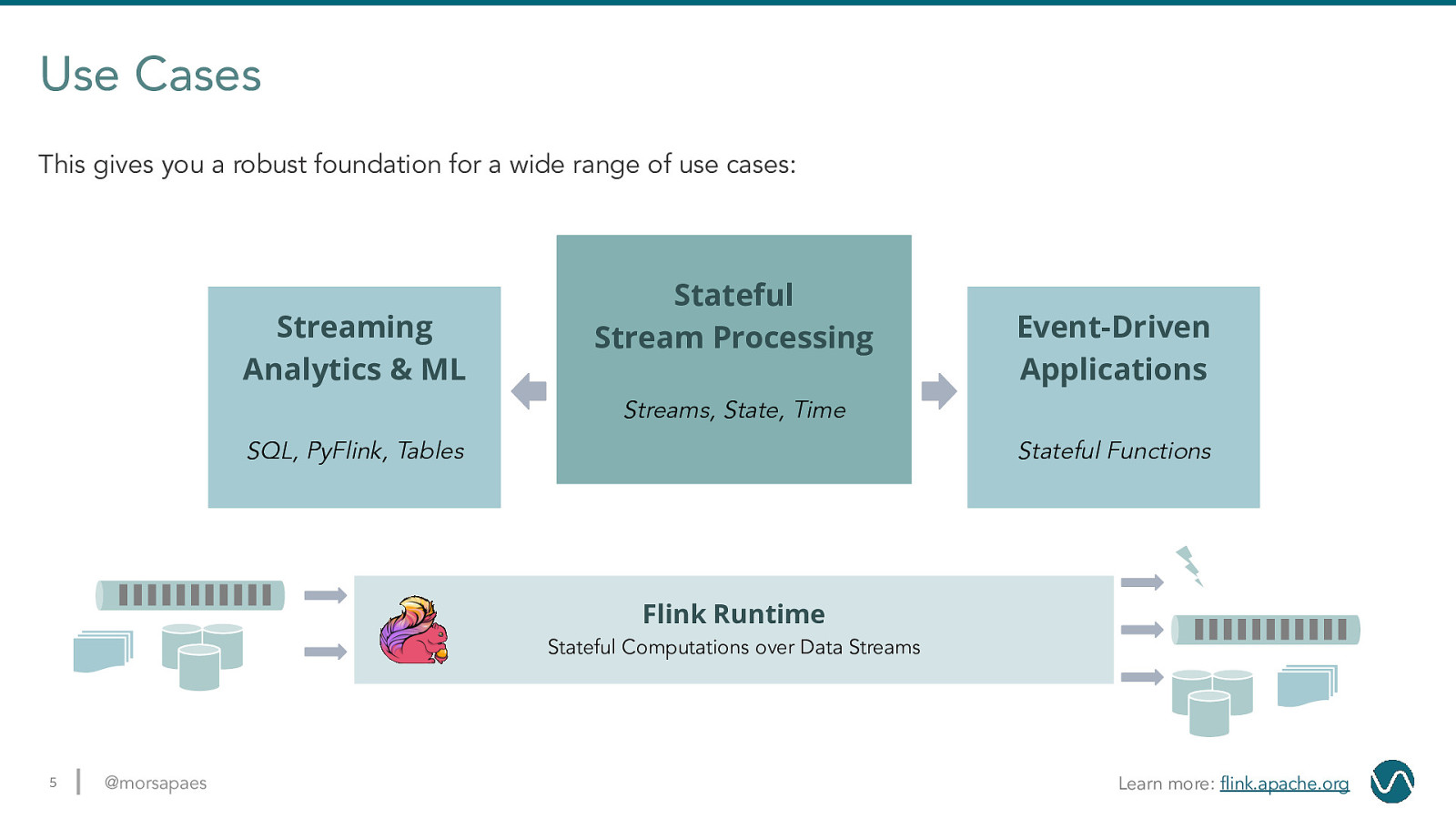

Use Cases

This gives you a robust foundation for a wide range of use cases:

● Stateful Stream Processing: Streams, State, Time
● Streaming Analytics & ML: SQL, PyFlink, Tables
● Event-Driven Applications: Stateful Functions

Flink Runtime: Stateful Computations over Data Streams

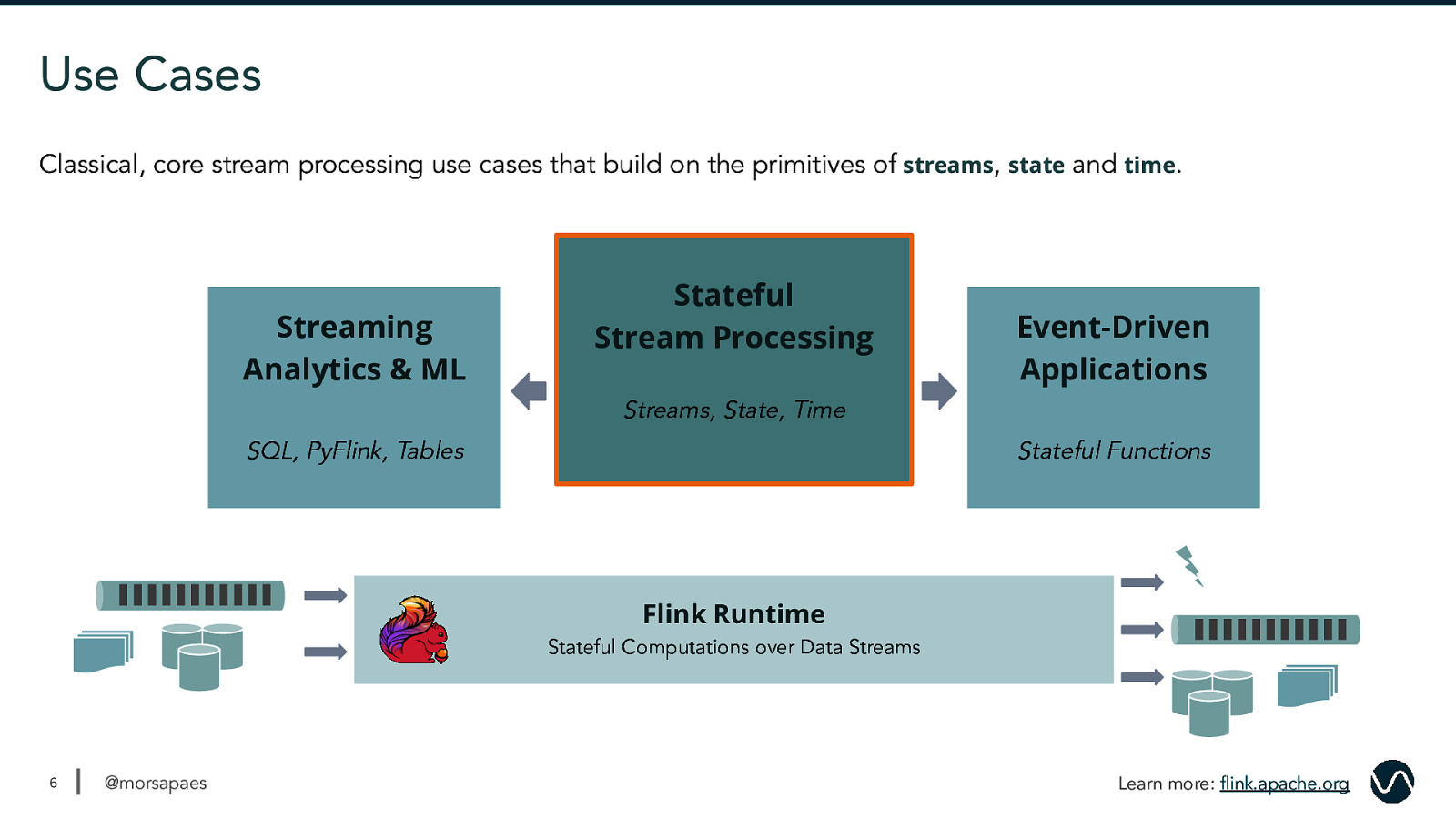

Use Cases

Classical, core stream processing use cases that build on the primitives of streams, state and time.

● Stateful Stream Processing: Streams, State, Time
● Streaming Analytics & ML: SQL, PyFlink, Tables
● Event-Driven Applications: Stateful Functions

Flink Runtime: Stateful Computations over Data Streams

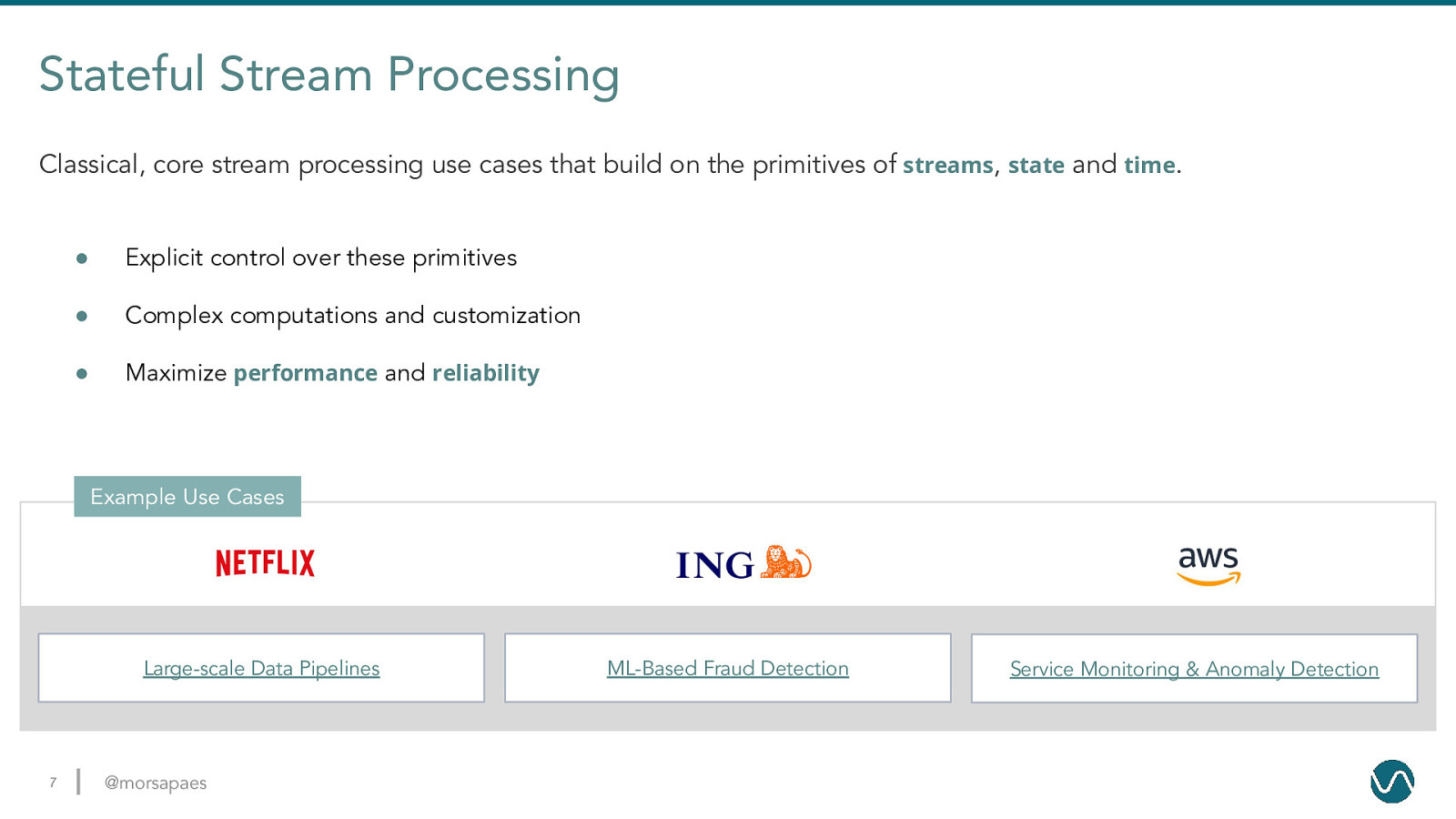

Stateful Stream Processing

Classical, core stream processing use cases that build on the primitives of streams, state and time.

● Explicit control over these primitives
● Complex computations and customization
● Maximize performance and reliability

Example Use Cases: Large-scale Data Pipelines, ML-Based Fraud Detection, Service Monitoring & Anomaly Detection

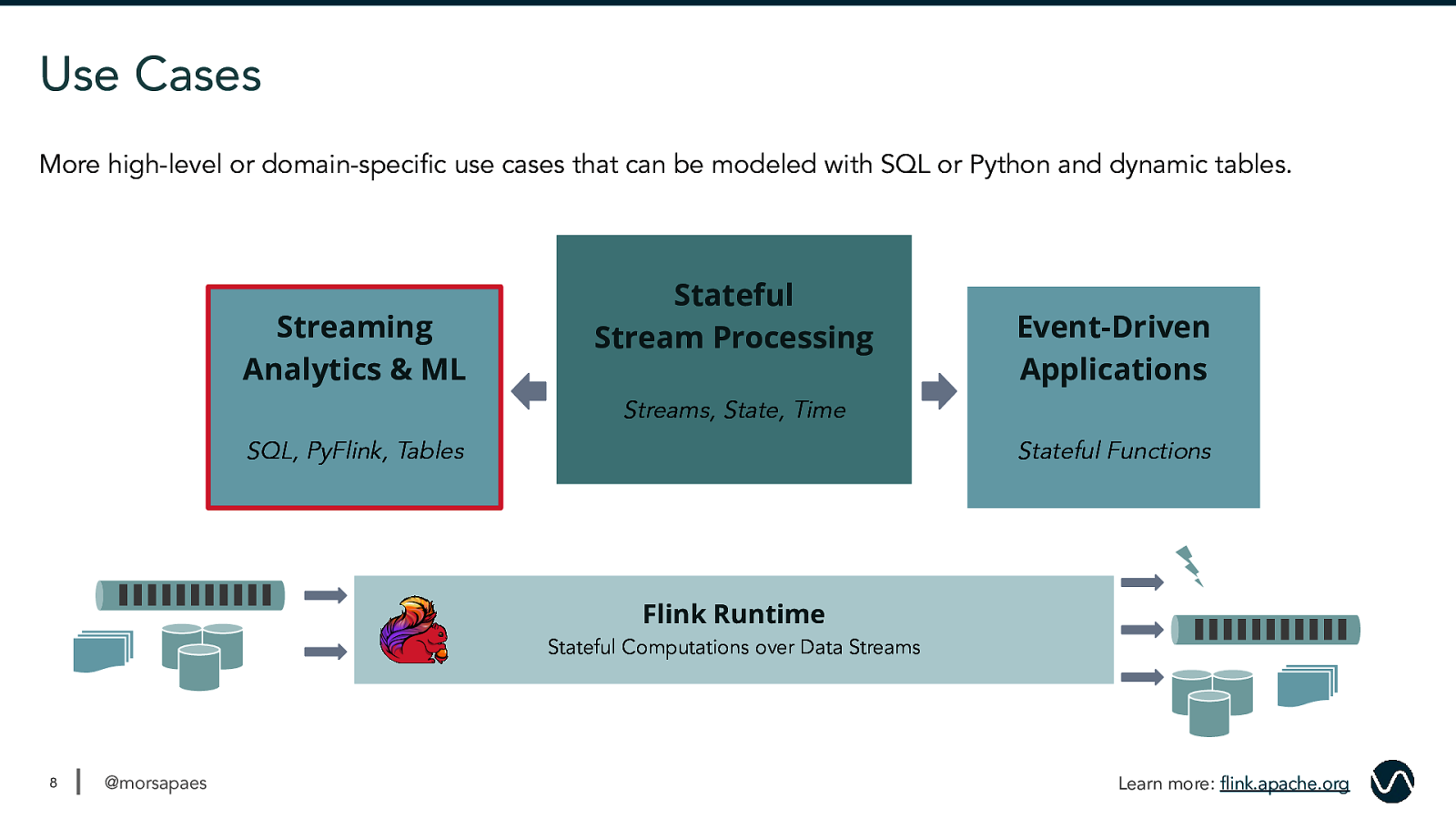

Use Cases

More high-level or domain-specific use cases that can be modeled with SQL or Python and dynamic tables.

● Stateful Stream Processing: Streams, State, Time
● Streaming Analytics & ML: SQL, PyFlink, Tables
● Event-Driven Applications: Stateful Functions

Flink Runtime: Stateful Computations over Data Streams

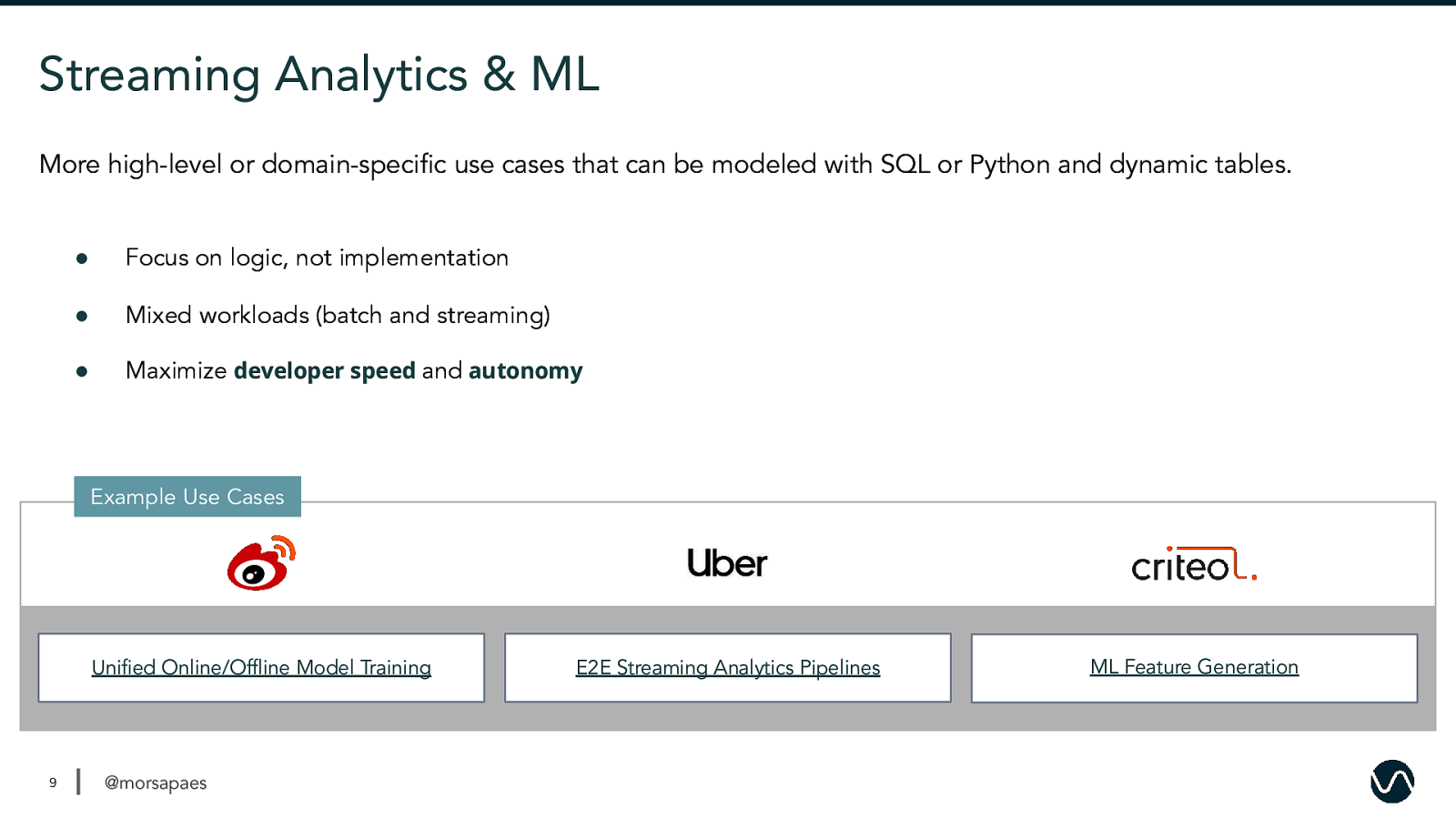

Streaming Analytics & ML

More high-level or domain-specific use cases that can be modeled with SQL or Python and dynamic tables.

● Focus on logic, not implementation
● Mixed workloads (batch and streaming)
● Maximize developer speed and autonomy

Example Use Cases: Unified Online/Offline Model Training, E2E Streaming Analytics Pipelines, ML Feature Generation

More Flink Users

Learn more: Powered by Flink, Speakers – Flink Forward San Francisco 2019, Speakers – Flink Forward Europe 2019

Why PyFlink?

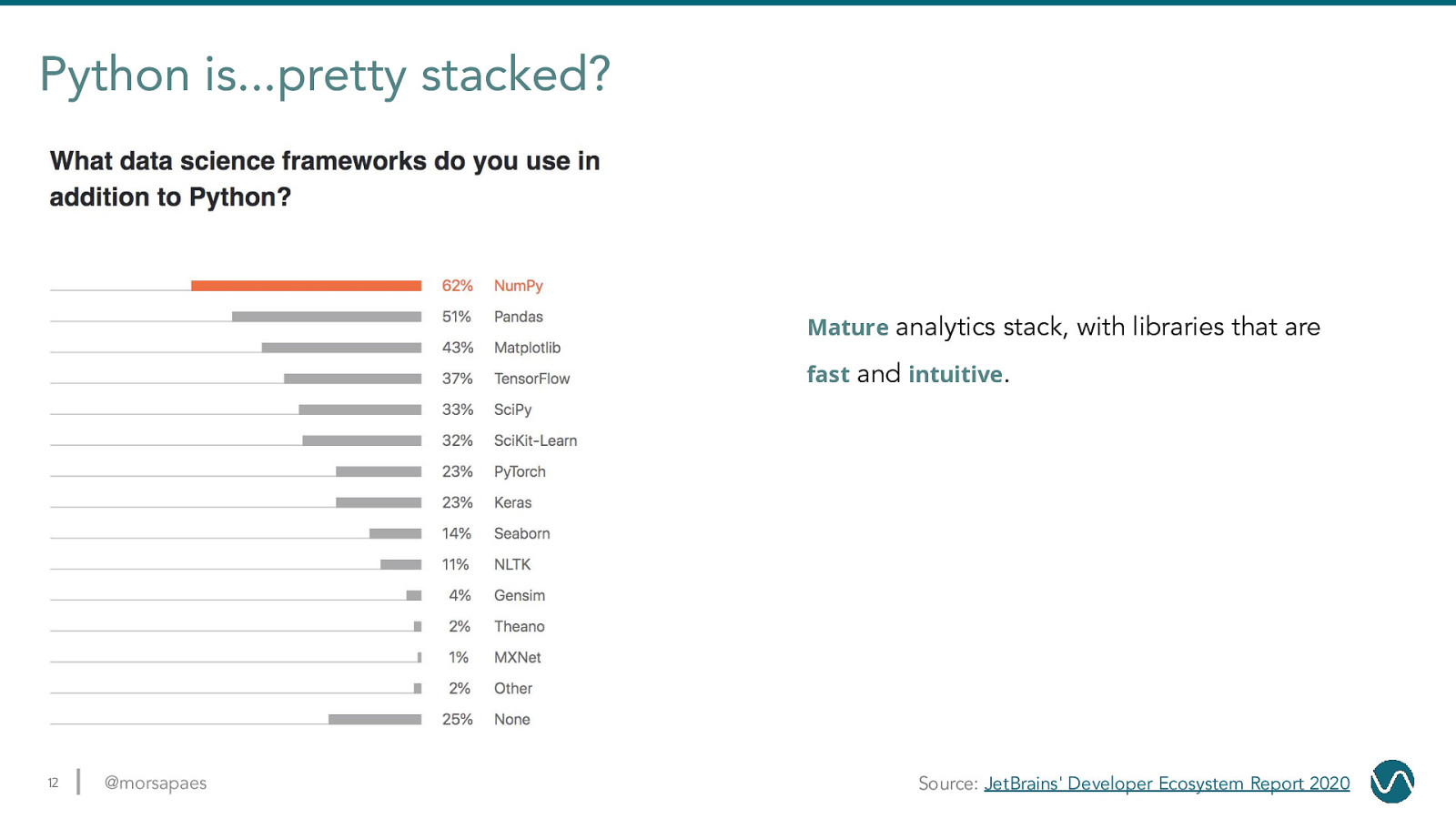

Python is…pretty stacked?

Mature analytics stack, with libraries that are fast and intuitive.

Source: JetBrains’ Developer Ecosystem Report 2020

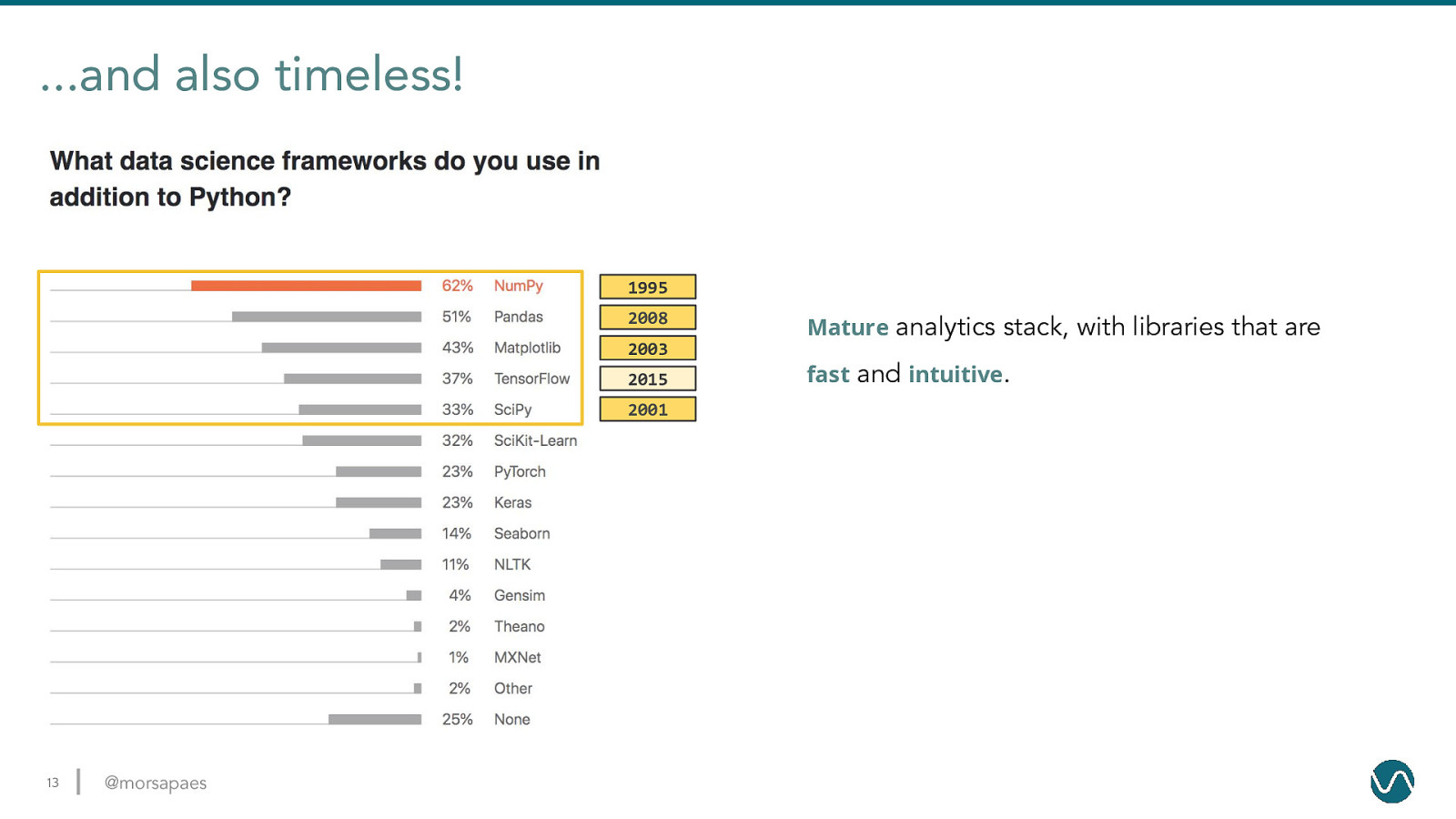

…and also timeless!

Mature analytics stack, with libraries that are fast and intuitive.

Years shown on the slide: 1995, 2001, 2003, 2008, 2015

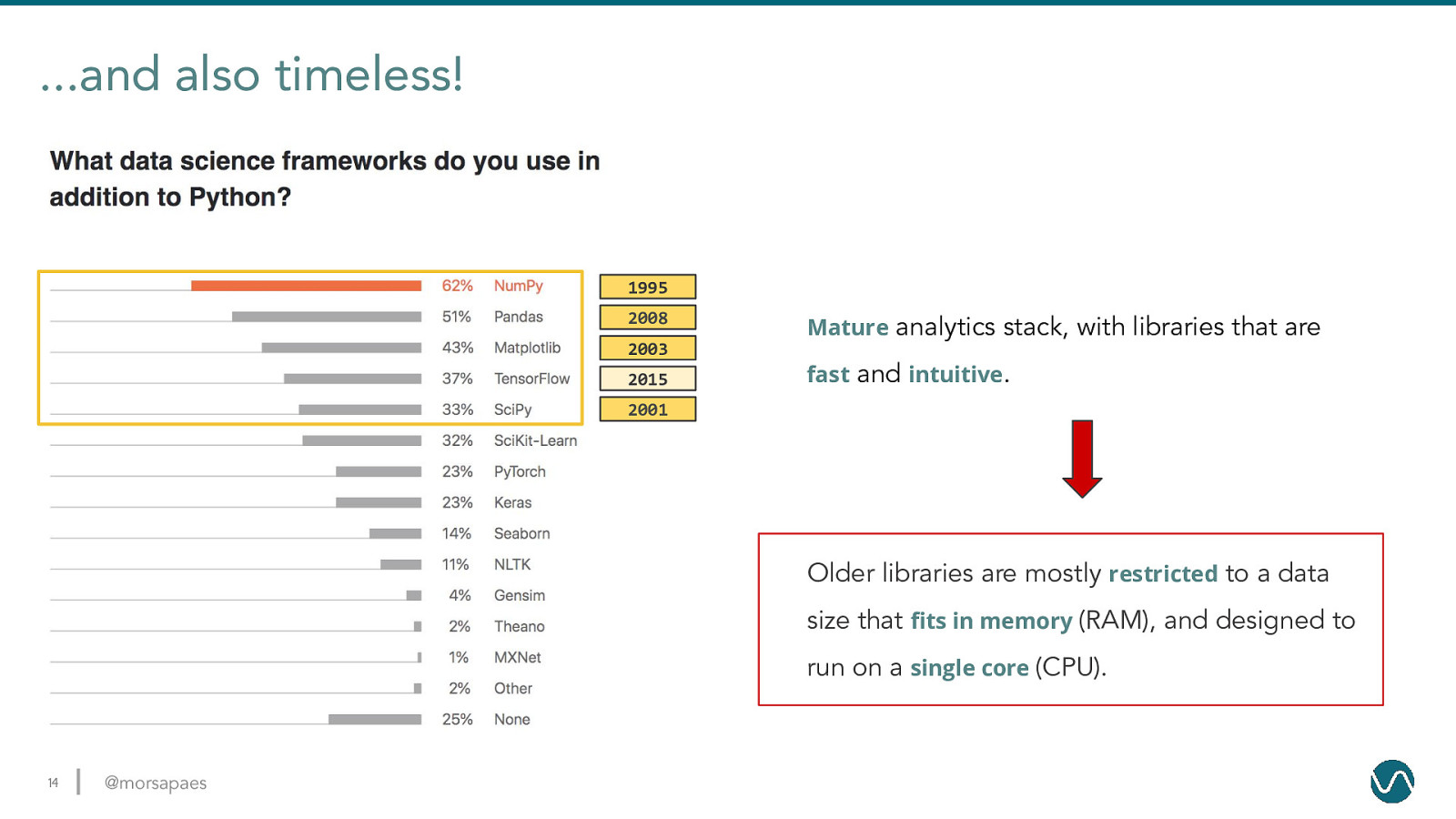

…and also timeless!

Mature analytics stack, with libraries that are fast and intuitive.

Years shown on the slide: 1995, 2001, 2003, 2008, 2015

Older libraries are mostly restricted to a data size that fits in memory (RAM), and designed to run on a single core (CPU).

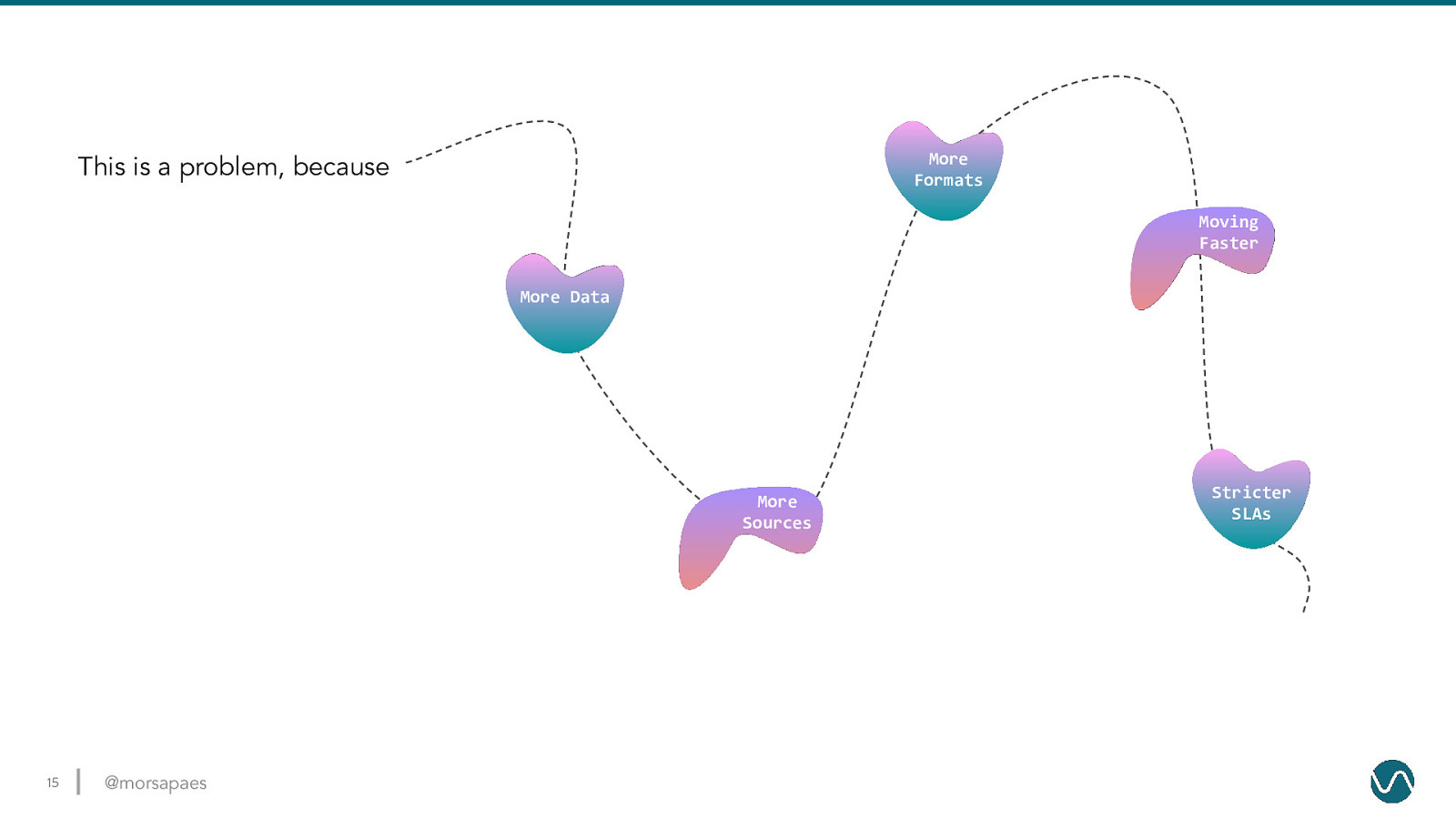

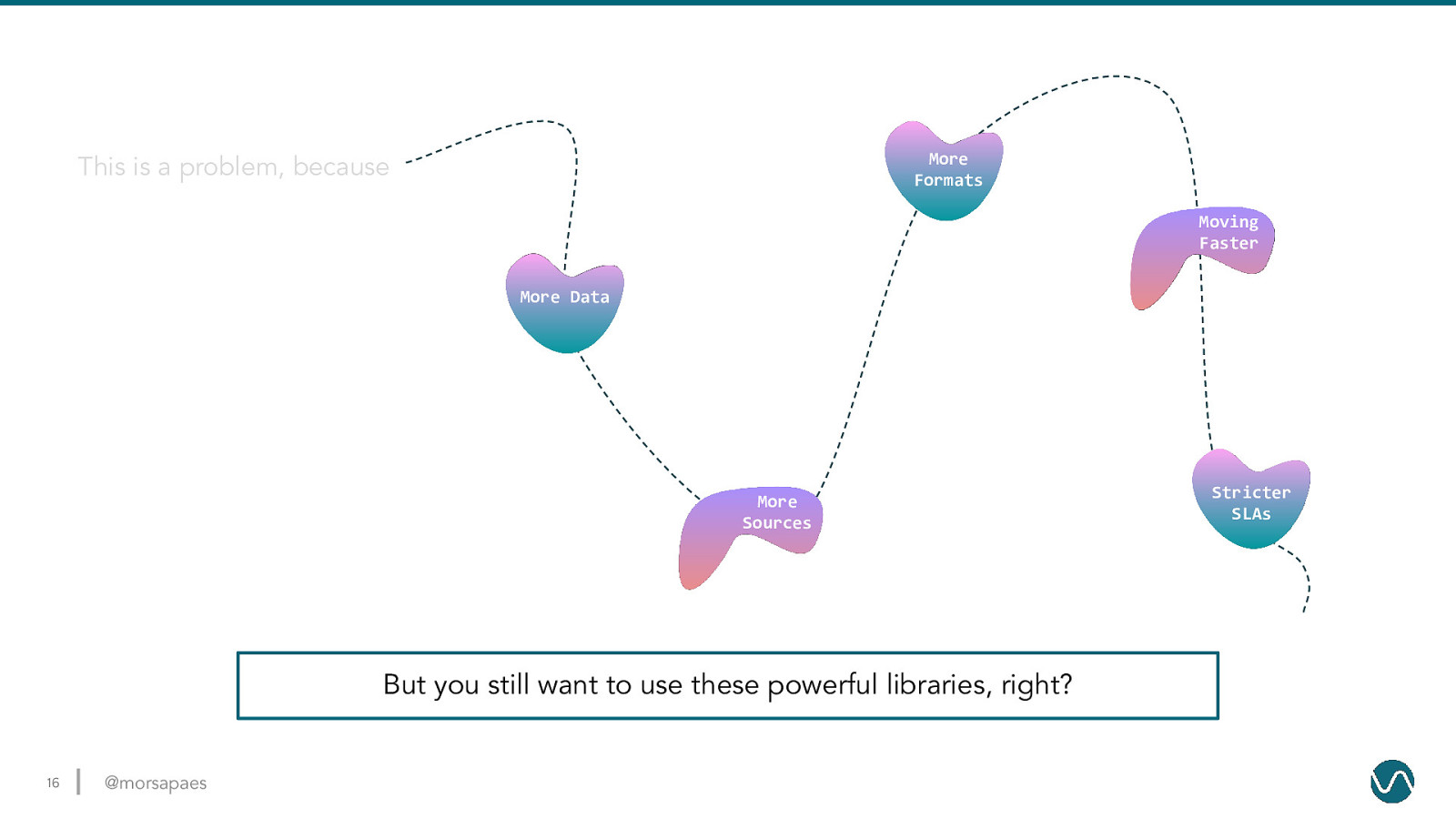

This is a problem, because:

● More Data
● More Sources
● More Formats
● Moving Faster
● Stricter SLAs

This is a problem, because:

● More Data
● More Sources
● More Formats
● Moving Faster
● Stricter SLAs

But you still want to use these powerful libraries, right?

Why PyFlink?

Java, Scala

Why PyFlink?

Java, Scala, Python

Expose the functionality of Flink beyond the JVM

Why PyFlink?

Why PyFlink?

Distribute and scale the functionality of Python through Flink

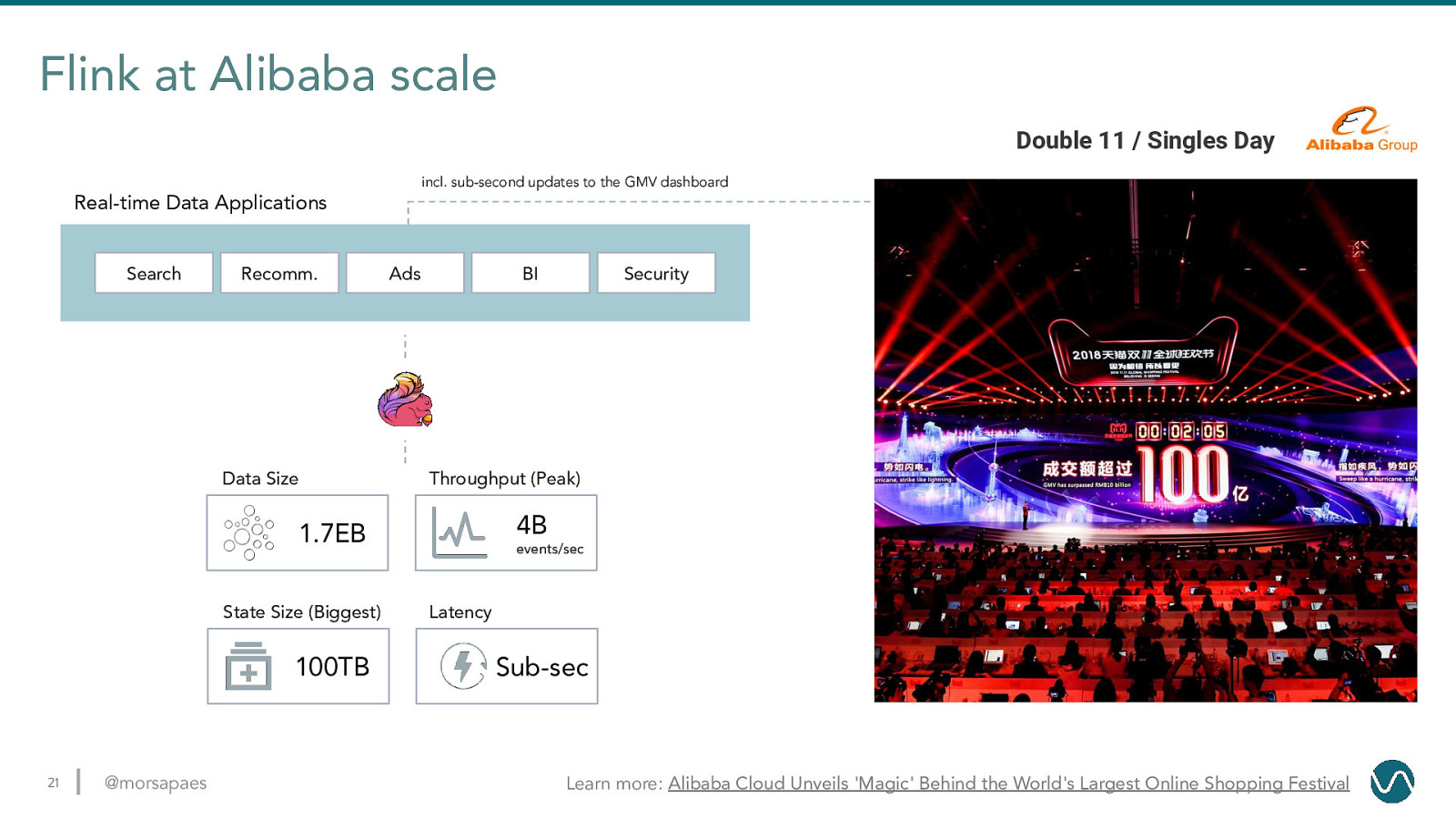

Flink at Alibaba scale

Double 11 / Singles Day, incl. sub-second updates to the GMV dashboard

Real-time Data Applications: Search, Recomm., Ads, BI, Security

● Data Size: 1.7EB
● Throughput (Peak): 4B events/sec
● State Size (Biggest): 100TB
● Latency: Sub-sec

Learn more: Alibaba Cloud Unveils ‘Magic’ Behind the World’s Largest Online Shopping Festival

Demo

Can we use PyFlink to identify the most frequent topics in the Flink User Mailing List?

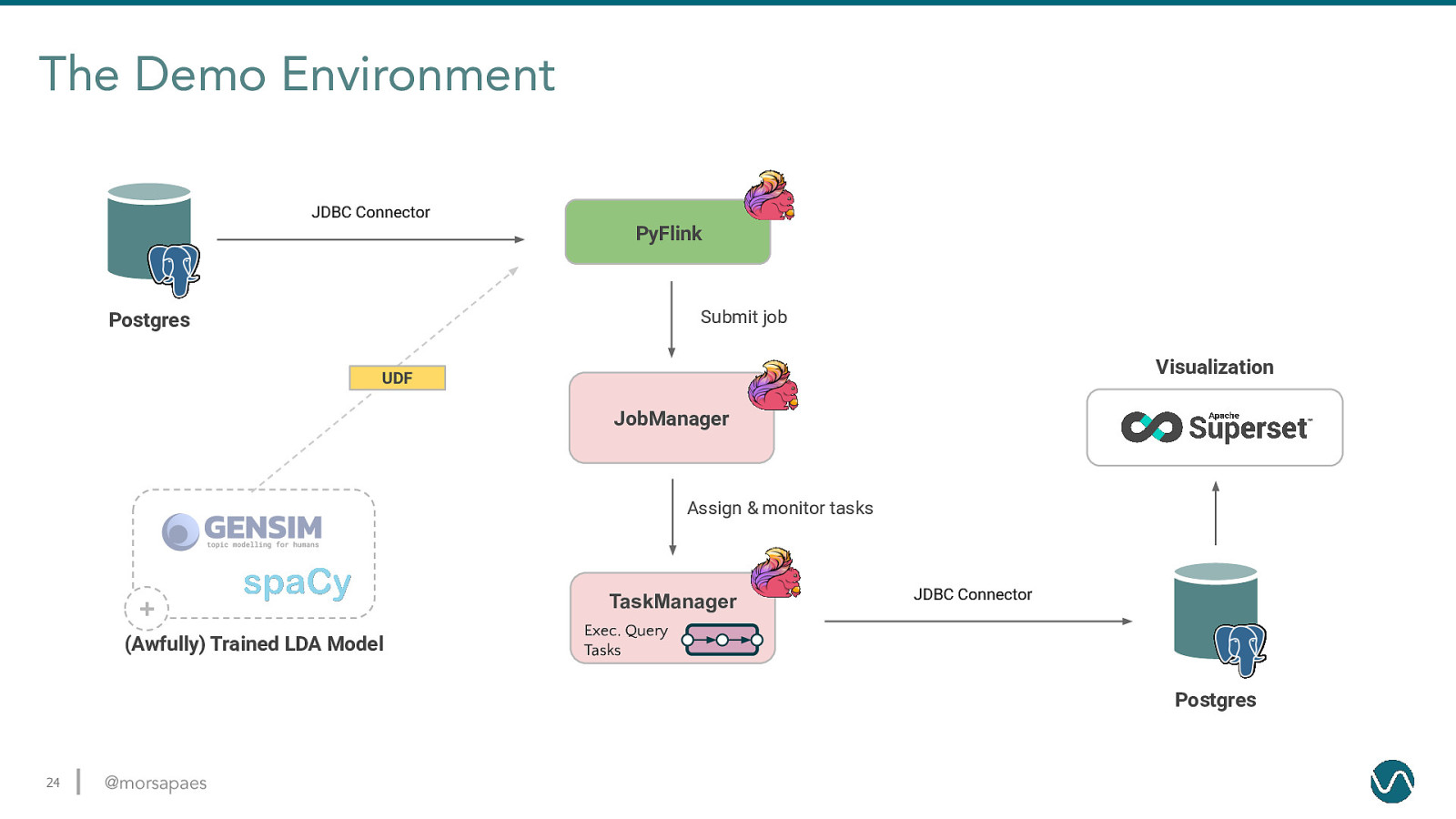

The Demo Environment

Diagram labels: PyFlink, UDF, Submit job, JobManager, Assign & monitor tasks, JDBC Connector, Postgres, Visualization

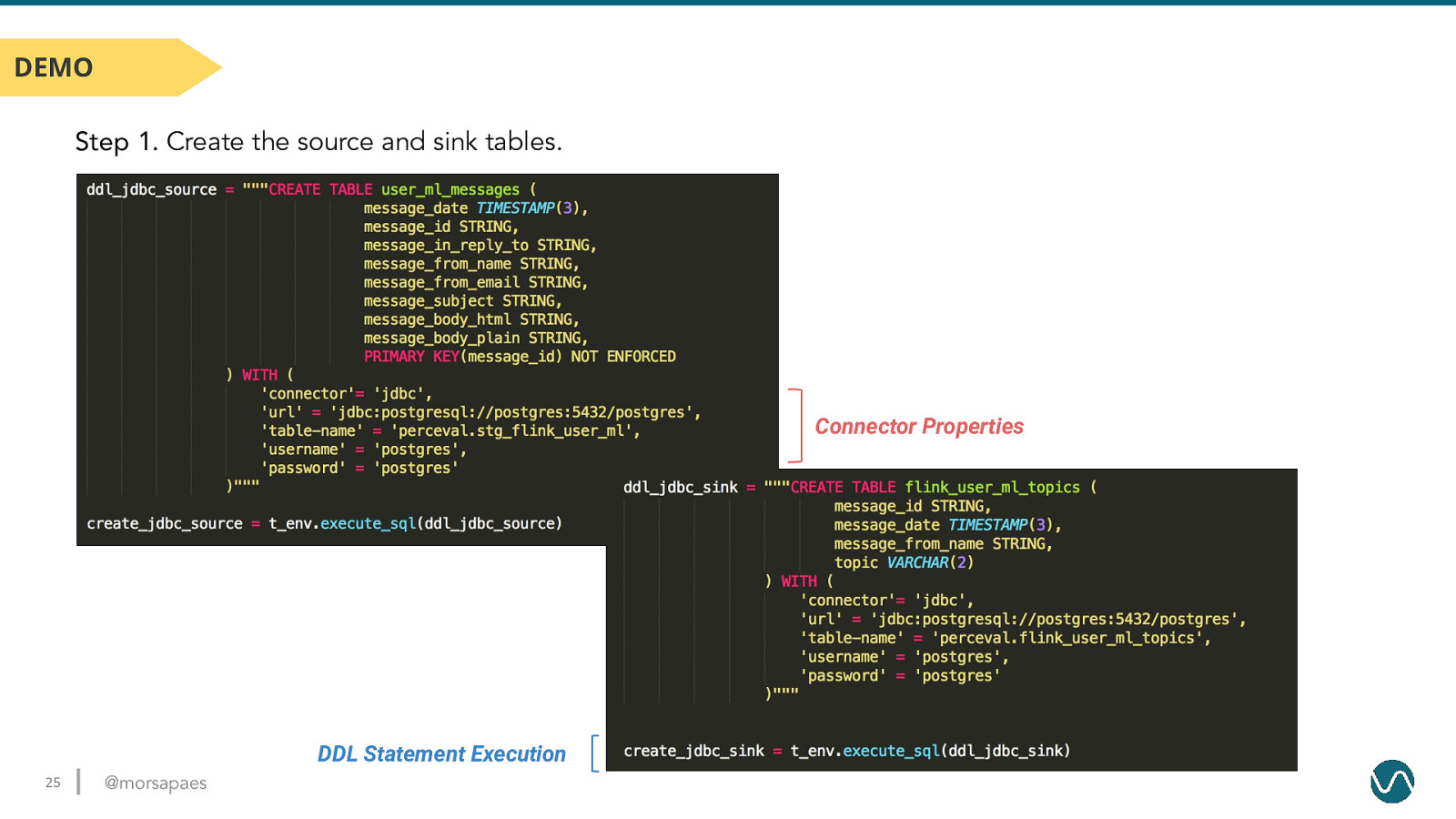

DEMO Step 1. Create the source and sink tables.

Connector Properties DDL Statement Execution
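Step 1 could look roughly like the sketch below. It is not the code from the slide: the table names (`mail_archive`, `classified_mail`), columns, JDBC URL and credentials are hypothetical placeholders. The `WITH` options are those of Flink's JDBC SQL connector, and `execute_sql` is the `TableEnvironment` method for running DDL statements (Flink 1.11 or later assumed). The JDBC connector and PostgreSQL driver JARs would also need to be available to Flink.

```python
# Hypothetical names, columns, URL and credentials; adjust to your own setup.
JDBC_URL = "jdbc:postgresql://postgres:5432/postgres"


def jdbc_table_ddl(name, columns, db_table):
    """Return a CREATE TABLE statement backed by Flink's JDBC connector."""
    cols = ",\n    ".join(f"{col} {sql_type}" for col, sql_type in columns)
    return (
        f"CREATE TABLE {name} (\n    {cols}\n) WITH (\n"
        f"    'connector' = 'jdbc',\n"
        f"    'url' = '{JDBC_URL}',\n"
        f"    'table-name' = '{db_table}',\n"
        f"    'username' = 'postgres',\n"
        f"    'password' = 'postgres'\n)"
    )


# Source: raw mailing list messages. Sink: one topic label per message.
SOURCE_DDL = jdbc_table_ddl(
    "mail_archive", [("subject", "STRING"), ("body", "STRING")], "flink_mail")
SINK_DDL = jdbc_table_ddl(
    "classified_mail", [("subject", "STRING"), ("topic", "STRING")],
    "classified_mail")


def create_tables(t_env):
    """Run both DDL statements on a PyFlink TableEnvironment."""
    for ddl in (SOURCE_DDL, SINK_DDL):
        t_env.execute_sql(ddl)
```

Keeping the DDL in plain strings means the connector properties can be reviewed (or printed) before anything is sent to Flink; `create_tables` only needs an object that exposes `execute_sql`.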

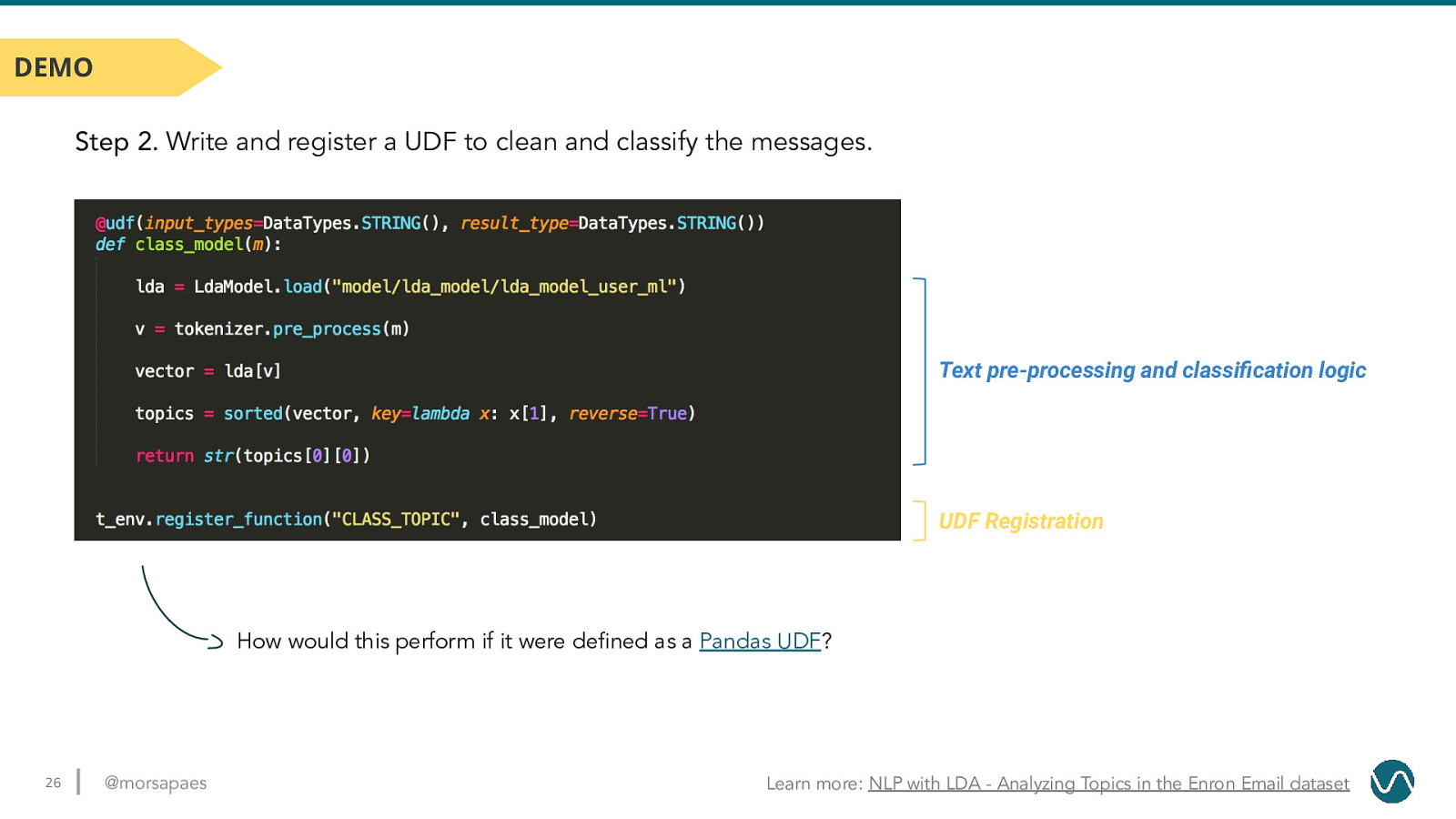

DEMO Step 2. Write and register a UDF to clean and classify the messages.

● Text pre-processing and classification logic
● UDF Registration

How would this perform if it were defined as a Pandas UDF?

Learn more: NLP with LDA - Analyzing Topics in the Enron Email dataset
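A minimal sketch of what Step 2 might look like, under stated assumptions. The talk classifies messages with a pre-trained LDA model; here a simple keyword lookup stands in for it so the example stays self-contained. The function names, the keyword table and the UDF name `classify_topic` are all hypothetical. Registration assumes a PyFlink release that provides `create_temporary_function` (1.12 or later); earlier releases used `register_function` instead.

```python
import re

# Placeholder classifier: maps keywords to topic labels. The talk used a
# pre-trained LDA model instead; this lookup only stands in for it.
TOPIC_KEYWORDS = {
    "checkpoint": "state & fault tolerance",
    "savepoint": "state & fault tolerance",
    "kafka": "connectors",
    "jdbc": "connectors",
    "sql": "table api & sql",
}


def clean_text(message):
    """Lowercase, drop quoted reply lines and URLs, return alphabetic tokens."""
    lines = [ln for ln in message.splitlines()
             if not ln.lstrip().startswith(">")]
    text = re.sub(r"https?://\S+", " ", " ".join(lines).lower())
    return re.findall(r"[a-z]+", text)


def classify_message(message):
    """Return the topic with the most keyword hits, or 'other' if none match."""
    hits = {}
    for token in clean_text(message or ""):
        topic = TOPIC_KEYWORDS.get(token)
        if topic:
            hits[topic] = hits.get(topic, 0) + 1
    return max(hits, key=hits.get) if hits else "other"


def register_classifier(t_env, name="classify_topic"):
    """Wrap classify_message as a scalar Python UDF and register it."""
    # Imported lazily so the plain-Python logic is testable without PyFlink.
    from pyflink.table import DataTypes
    from pyflink.table.udf import udf

    classify_udf = udf(classify_message, result_type=DataTypes.STRING())
    t_env.create_temporary_function(name, classify_udf)
```

Separating the plain-Python logic from the registration step makes the classifier easy to unit test outside a Flink cluster. A scalar UDF like this is invoked once per row; a Pandas UDF would receive batches of rows as `pandas.Series`, which is the trade-off the slide's question points at.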

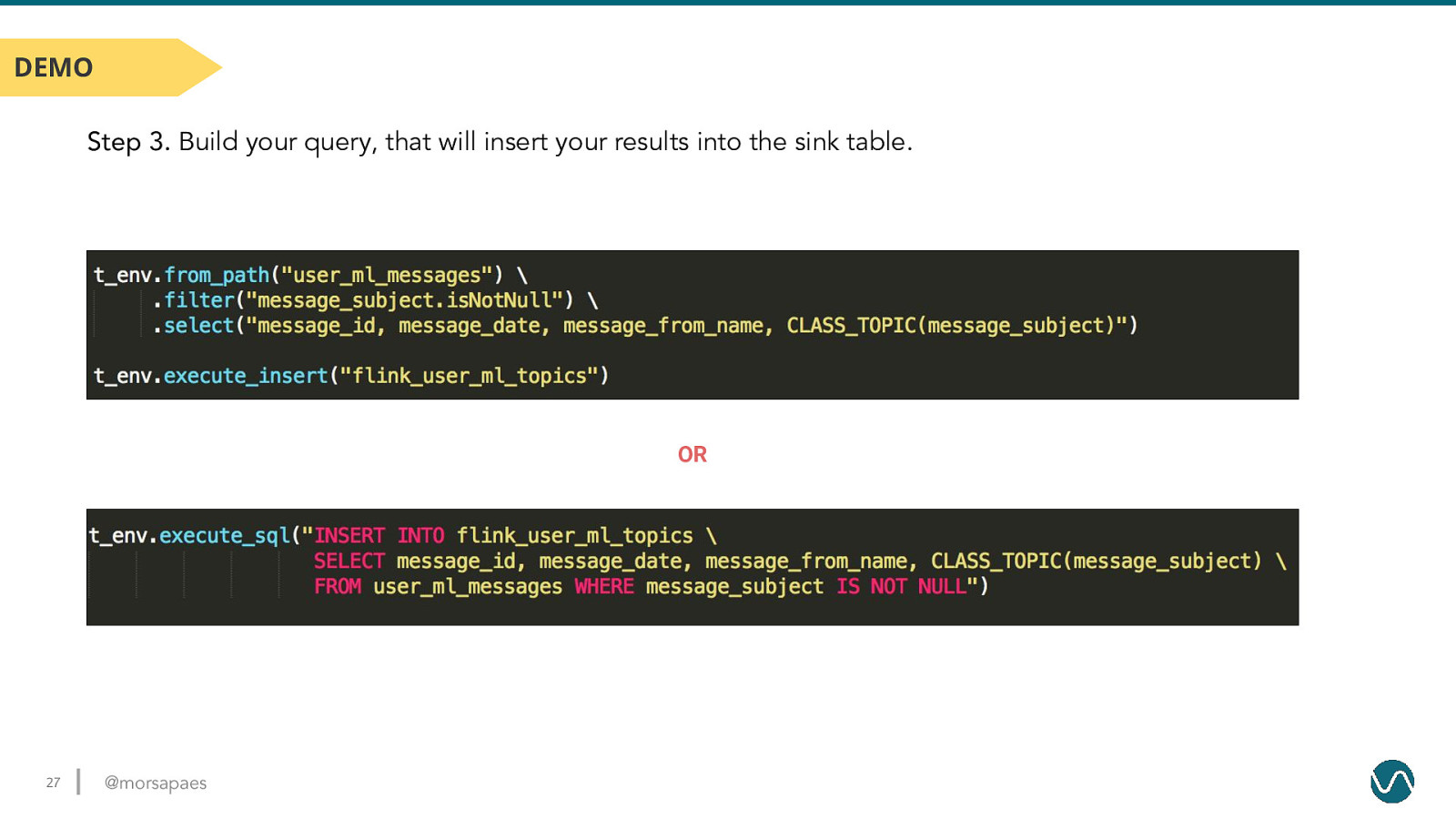

DEMO Step 3. Build the query that inserts your results into the sink table. The slide shows two alternative ways to write it.
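The two alternatives might be sketched as follows. This assumes a source table `mail_archive`, a sink table `classified_mail` and a registered UDF `classify_topic` already exist in the table environment; all three names are hypothetical and not taken from the slide. `execute_sql`, `sql_query` and `Table.execute_insert` are PyFlink Table API methods available since Flink 1.11.

```python
# Hypothetical table and function names; they must already be registered.
SELECT_SQL = "SELECT subject, classify_topic(body) AS topic FROM mail_archive"
INSERT_SQL = "INSERT INTO classified_mail " + SELECT_SQL


def run_with_sql(t_env):
    """Variant 1: submit a single INSERT INTO statement."""
    return t_env.execute_sql(INSERT_SQL)


def run_with_table_api(t_env):
    """Variant 2: build a Table from the query, then write it to the sink."""
    result = t_env.sql_query(SELECT_SQL)
    return result.execute_insert("classified_mail")
```

Both variants submit the same job. The second keeps the intermediate `Table` object around, which is convenient when further Table API transformations are applied before writing to the sink.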

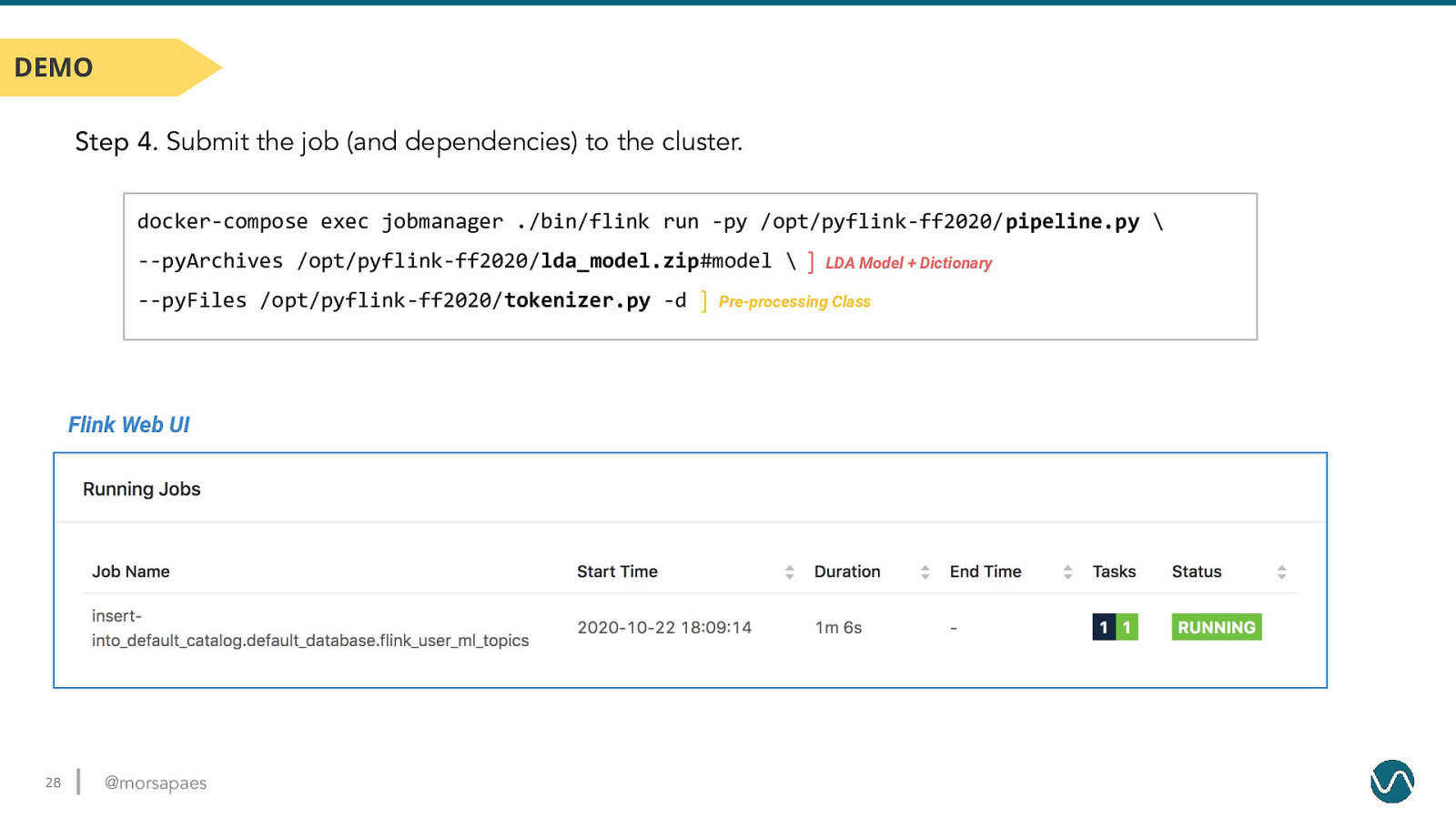

DEMO Step 4. Submit the job (and dependencies) to the cluster.

`docker-compose exec jobmanager ./bin/flink run -py /opt/pyflink-ff2020/pipeline.py --pyArchives /opt/pyflink-ff2020/lda_model.zip#model --pyFiles /opt/pyflink-ff2020/tokenizer.py -d`

● `--pyArchives`: LDA Model + Dictionary
● `--pyFiles`: Pre-processing Class

The submitted job can then be followed in the Flink Web UI.
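If the submission is scripted, the argument list can be assembled in Python, as in the sketch below. The helper `flink_run_command` is hypothetical; the flags and paths are the ones from the command on the slide. In `--pyArchives`, the part after `#` is the directory name the archive is extracted to in the Python worker's working directory, so the UDF can load the model from a relative `model/` path.

```python
import shlex


def flink_run_command(script, archives=(), py_files=(), detached=True):
    """Build the argument list for submitting a PyFlink job with `flink run`."""
    cmd = ["./bin/flink", "run", "-py", script]
    if archives:
        # "path#name" extracts the archive into a directory called "name".
        cmd += ["--pyArchives", ",".join(archives)]
    if py_files:
        # Extra Python files the job imports, comma-separated.
        cmd += ["--pyFiles", ",".join(py_files)]
    if detached:
        # Return right after submission instead of waiting for the job.
        cmd.append("-d")
    return cmd


cmd = flink_run_command(
    "/opt/pyflink-ff2020/pipeline.py",
    archives=["/opt/pyflink-ff2020/lda_model.zip#model"],
    py_files=["/opt/pyflink-ff2020/tokenizer.py"],
)
# Print a shell-safe version of the command for inspection.
print(" ".join(shlex.quote(part) for part in cmd))
```

In the demo setup the resulting list would be prefixed with `docker-compose exec jobmanager` and passed to something like `subprocess.run`, so that the command executes inside the JobManager container.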

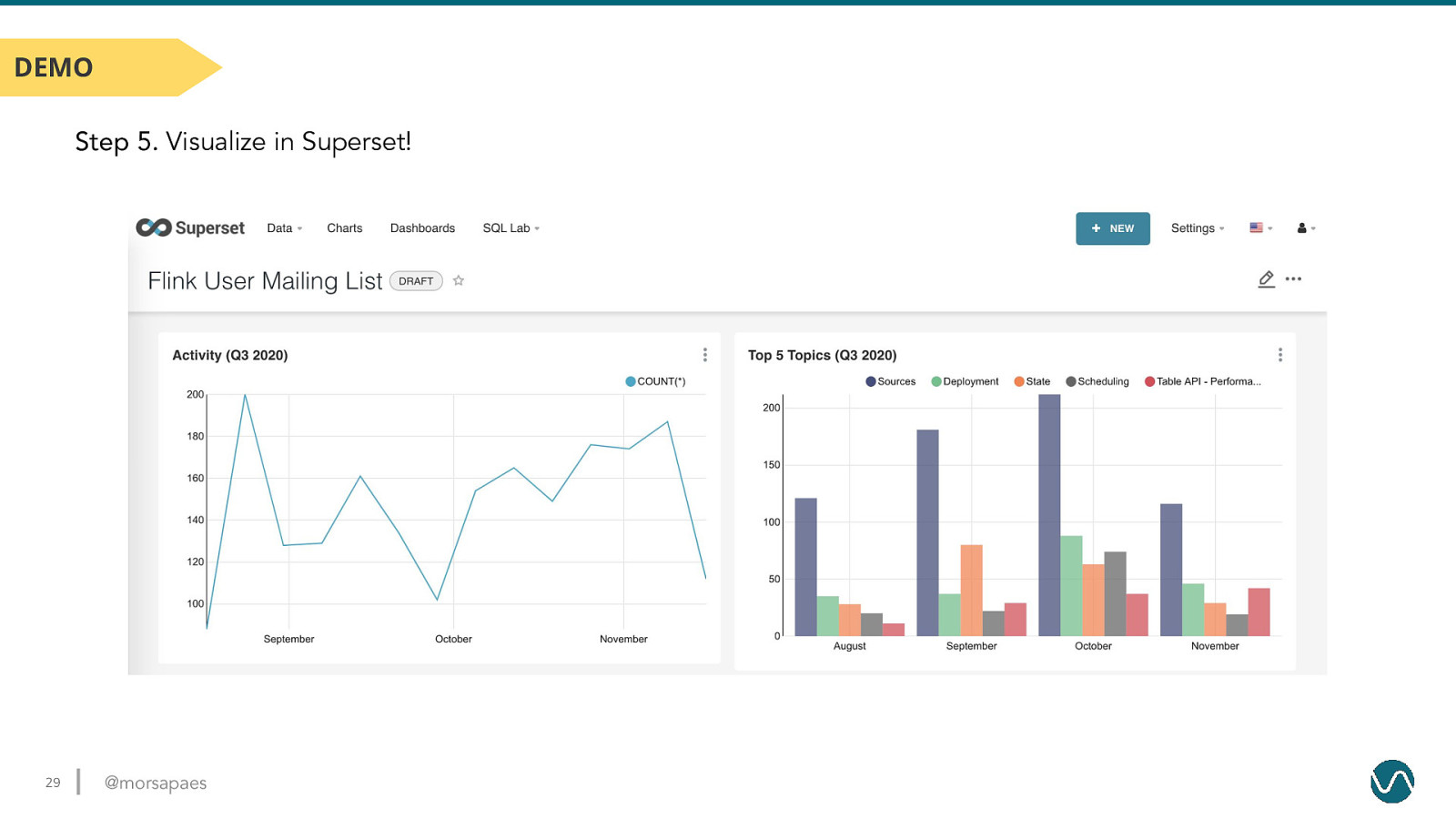

DEMO Step 5. Visualize in Superset!

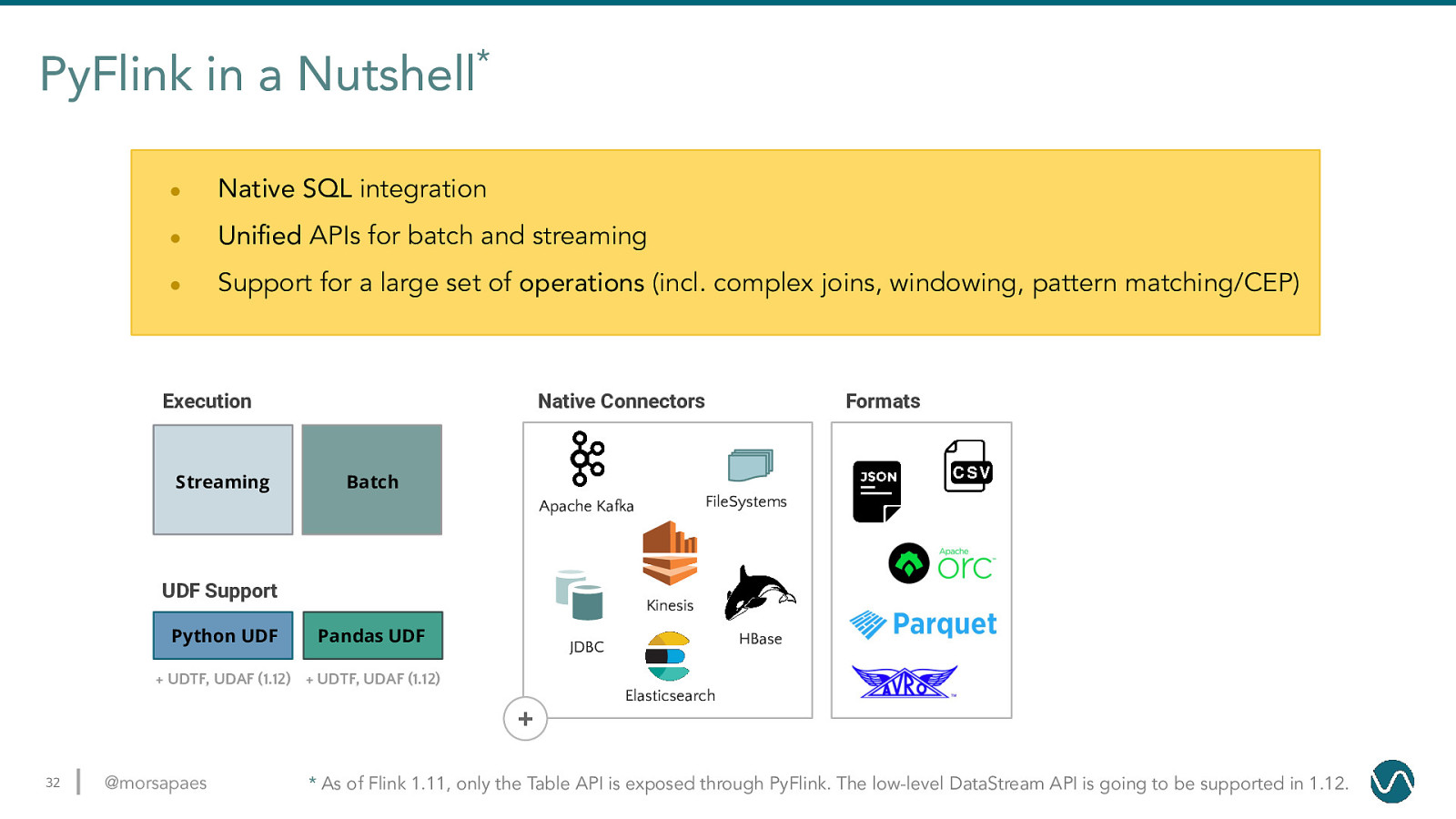

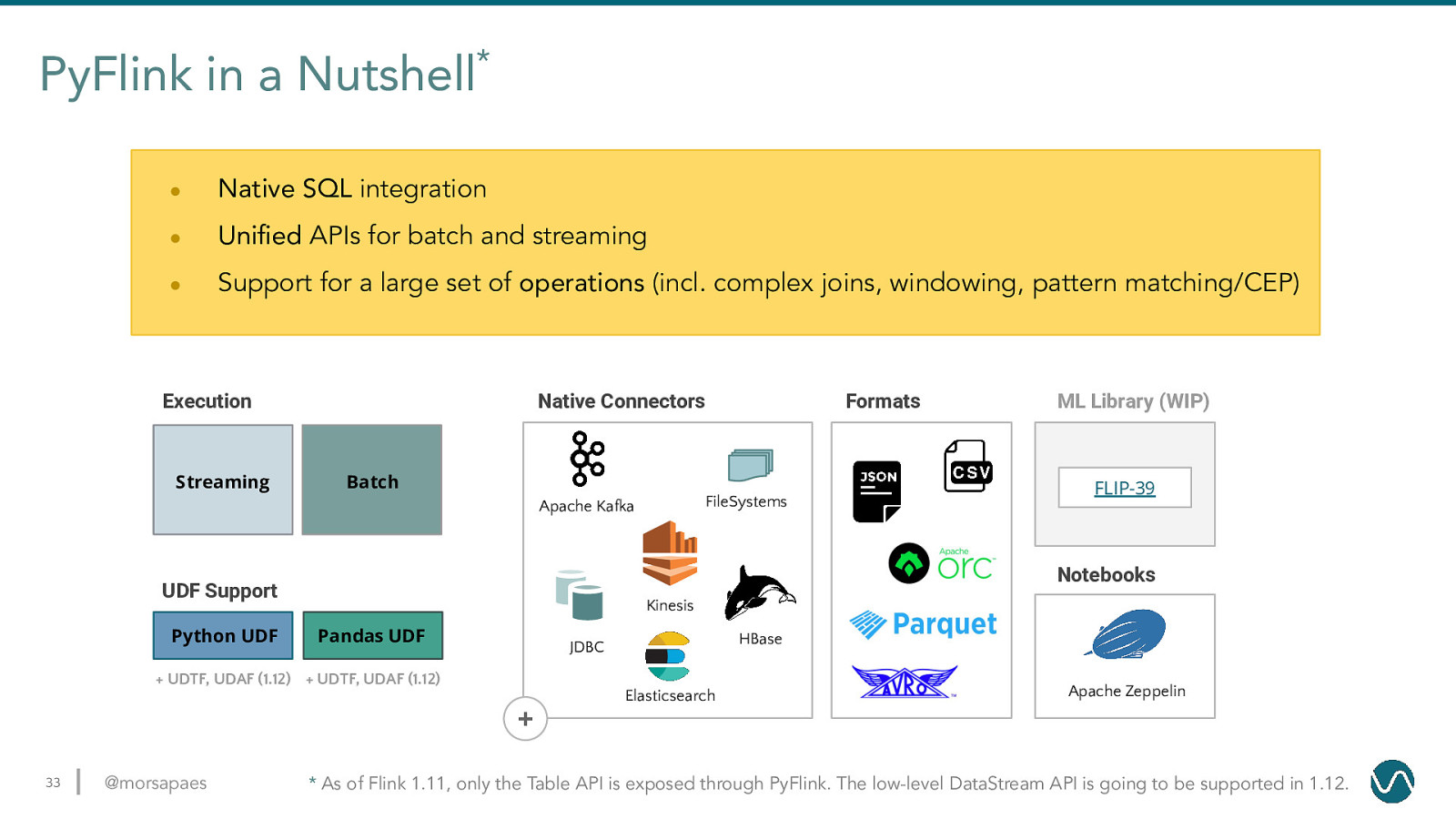

PyFlink in a Nutshell*

● Native SQL integration
● Unified APIs for batch and streaming
● Support for a large set of operations (incl. complex joins, windowing, pattern matching/CEP)

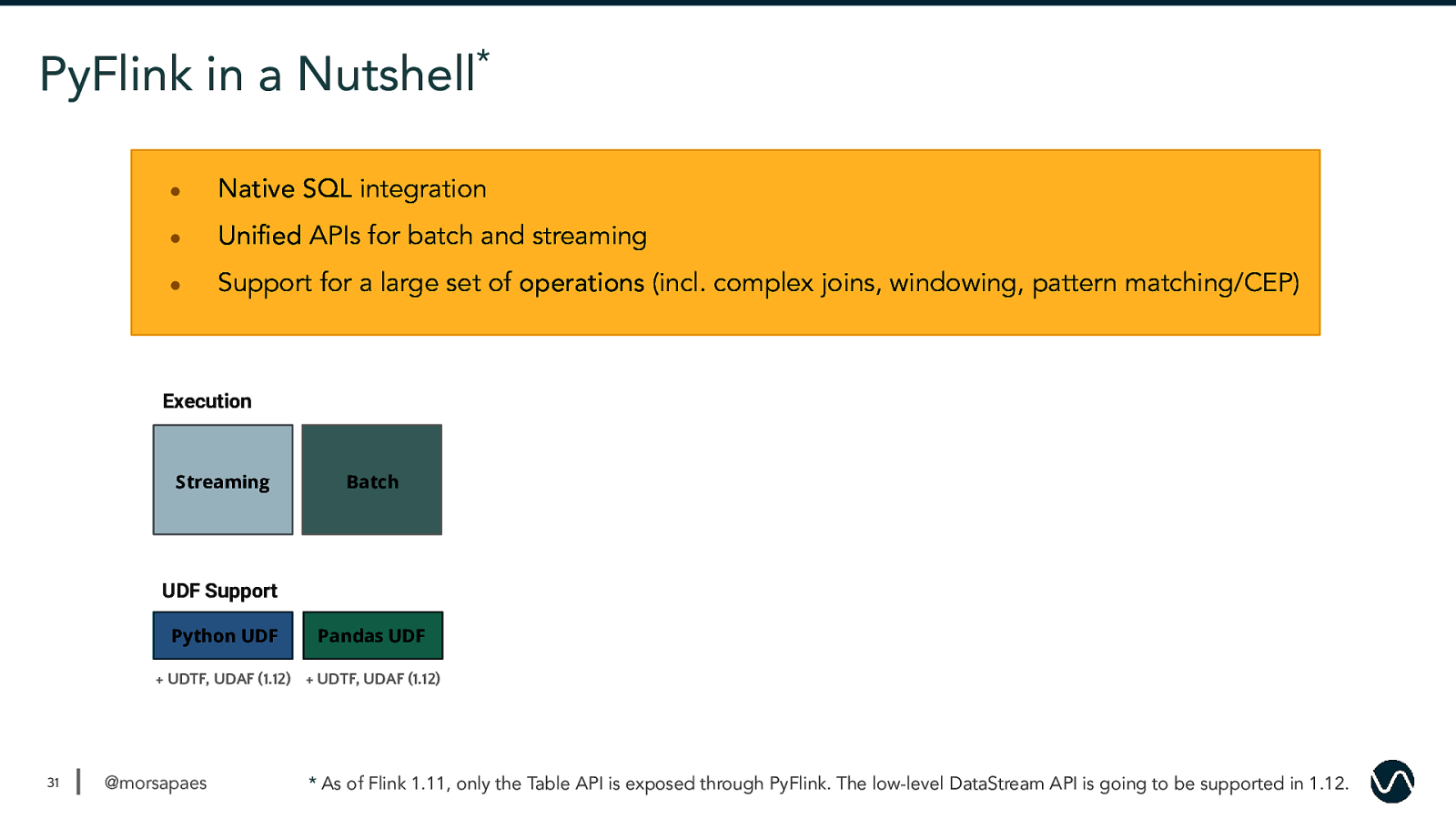

PyFlink in a Nutshell*

● Native SQL integration
● Unified APIs for batch and streaming
● Support for a large set of operations (incl. complex joins, windowing, pattern matching/CEP)

Execution: Streaming, Batch
UDF Support: Python UDF, Pandas UDF

PyFlink in a Nutshell*

● Native SQL integration
● Unified APIs for batch and streaming
● Support for a large set of operations (incl. complex joins, windowing, pattern matching/CEP)

Execution: Streaming, Batch
UDF Support: Python UDF, Pandas UDF
Native Connectors: FileSystems, Apache Kafka, Kinesis, HBase, JDBC
Formats

PyFlink in a Nutshell*

● Native SQL integration
● Unified APIs for batch and streaming
● Support for a large set of operations (incl. complex joins, windowing, pattern matching/CEP)

Execution: Streaming, Batch
UDF Support: Python UDF, Pandas UDF
Native Connectors: FileSystems, Apache Kafka, Kinesis, HBase, JDBC
Formats
ML Library (WIP): FLIP-39
Notebooks

Thank you, Data Science UA!

Follow me on Twitter: @morsapaes

Learn more about Flink: https://flink.apache.org/

© 2020 Ververica