
A presentation at Smashing Conference, NYC 2024 in October 2024 in New York, NY, USA by Patrick Brosset


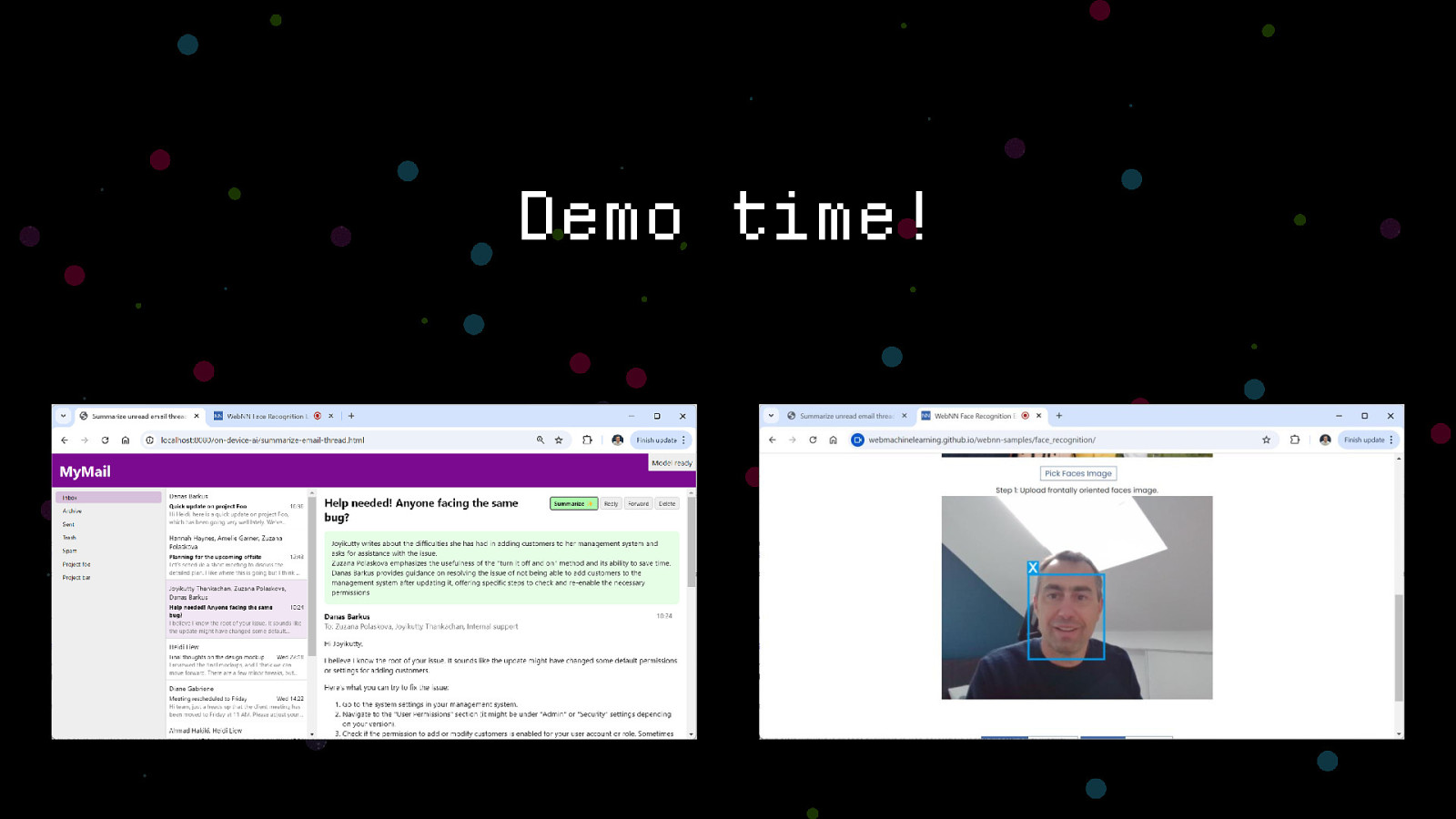

Start with the demos, without saying anything yet about how they work. Show one SLM (small language model) Prompt API demo and one WebNN image-processing demo:
http://localhost:8080/on-device-ai/summarize-email-thread.html
https://webmachinelearning.github.io/webnn-samples/face_recognition/

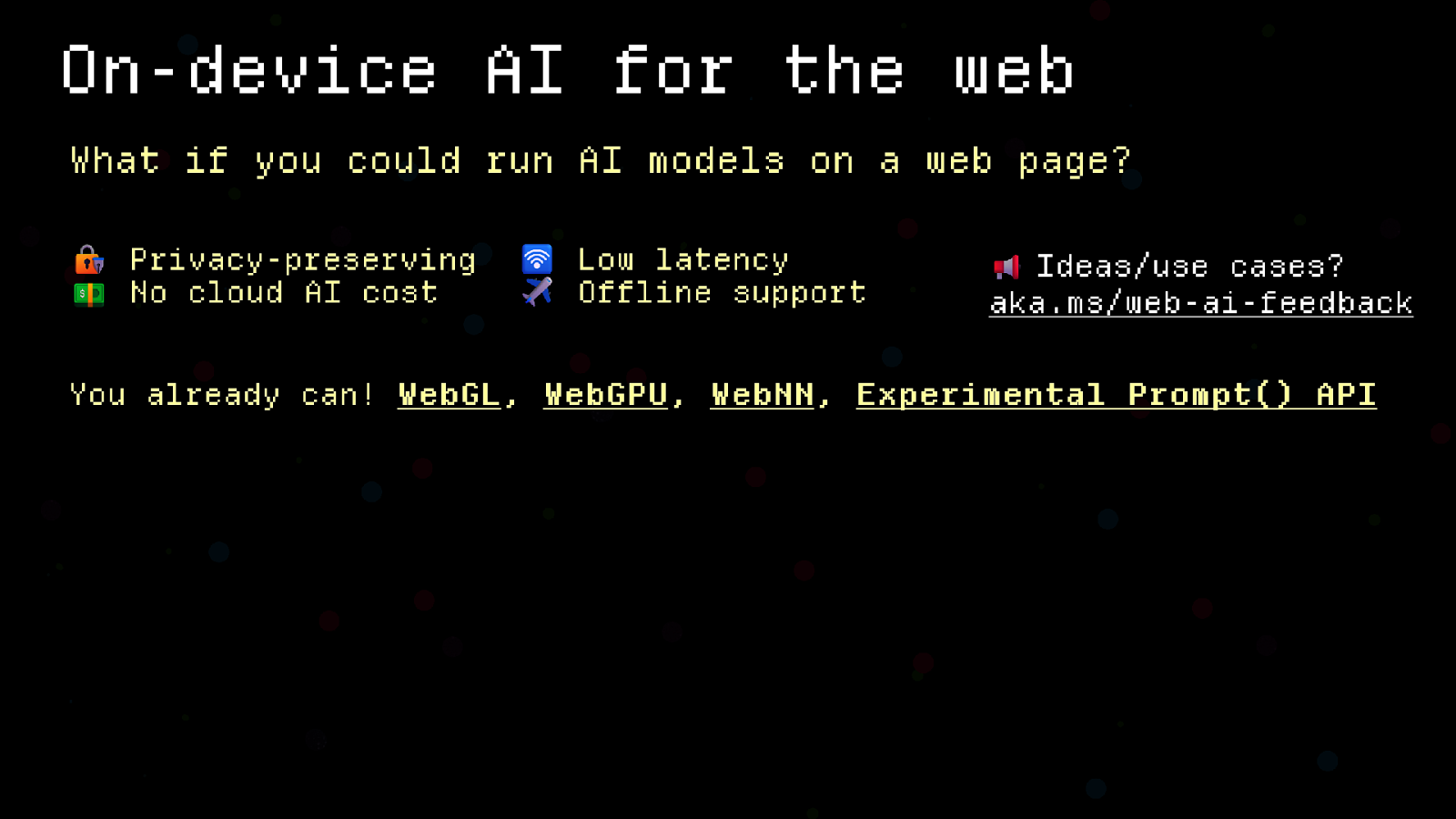

What if this were running on your device, without relying on a cloud service? Your data stays on the device, so there are no privacy concerns; latency is lower, there's no cloud AI cost, and it works offline.

Does it make sense to have this available on the web platform?

The web and AI are the two most important technologies of our time. The web has revolutionized the way we share information and has permeated every aspect of our lives. AI is the new frontier: it has grown in complexity and capability by about 10x every year for the past four years or so. There are many ways the web and AI overlap:
- AI consumes web content for training.
- Web browsers provide AI-powered features, like compose, translate, correct, etc.
- The web is often where you experience AI, through chatbots and other features.

But what about the overlap between the web platform and AI? The web platform is the collection of languages and technologies that web developers use to create and distribute apps to their users. Compared to other app platforms, like Windows, iOS, or Android, it has its own specific traits:
- Multiple implementations. There's no single vendor, which means anyone should be able to build a web client based on the standards.
- A fundamental law of repeatability, which makes it possible for resources to endure through time. You can still run a web page from 1995.
What does it mean for the web platform to offer built-in AI capabilities? Generative AI, and in particular language models, which are at the forefront, couldn't be further from repeatable. They aren't governed by an algorithm that can be re-implemented in multiple browsers and tested. So how do you specify them? How do you provide AI-based user features consistently across browsers, devices, and over time?

Well, it's already happening. There are two approaches I want to talk about today. The first one is BYOM: bring your own model. Machine learning models are neural networks, and their computations are particularly well suited to GPU chips. That's why graphics card prices are so high. The web platform has developed several APIs that let you run models from JavaScript. WebGL, and more recently WebGPU, let you run your models on GPU chips. Even more recently, WebNN abstracts the hardware and makes it easy to run models on the CPU, the GPU, or the new NPU chips. Frameworks like TF Lite and ONNX Runtime Web make this easy to do. Once you have your model, likely from an open source hub like huggingface.co, and you have the framework in place, you can use the model fairly easily. Things to keep in mind:
- You get to choose your own model.
- But the model is per origin: each site has to download and store its own copy.
- There is some code complexity, although frameworks do the heavy lifting.
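Because WebNN, WebGPU, and WebAssembly are not available everywhere, a BYOM page typically picks a backend at runtime. The sketch below is a minimal, hypothetical helper, assuming that WebNN is detected through `navigator.ml` and WebGPU through `navigator.gpu`, and that the result is passed to ONNX Runtime Web as its `executionProviders` list ("webnn", "webgpu", and "wasm" are provider names that library uses). The function name and the `NavigatorLike` type are illustrative, not part of any library.

```typescript
// Execution-provider names used by ONNX Runtime Web.
type ExecutionProvider = "webnn" | "webgpu" | "wasm";

// Hypothetical minimal shape of the parts of `navigator` we inspect.
// Browsers that support WebNN expose `navigator.ml`; WebGPU exposes `navigator.gpu`.
interface NavigatorLike {
  ml?: unknown;
  gpu?: unknown;
}

// Returns providers in order of preference: WebNN (which can target the CPU,
// GPU, or NPU), then WebGPU, then the WebAssembly CPU fallback, which is
// always included last.
function pickExecutionProviders(nav: NavigatorLike): ExecutionProvider[] {
  const providers: ExecutionProvider[] = [];
  if (nav.ml !== undefined) providers.push("webnn");
  if (nav.gpu !== undefined) providers.push("webgpu");
  providers.push("wasm");
  return providers;
}

// Assumed usage in a page with onnxruntime-web loaded as `ort`
// (the model URL is hypothetical, and depending on the library version the
// WebNN/WebGPU providers may require importing a specific bundle):
// const session = await ort.InferenceSession.create("/models/model.onnx", {
//   executionProviders: pickExecutionProviders(navigator as NavigatorLike),
// });
```

Keeping detection separate from model loading makes the fallback order easy to test and to change, while the framework still does the heavy lifting of actually running the model.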

But Google also recently announced that they're working on what they call built-in AI for the web, with an experimental Prompt API. This is actually what the demos I showed earlier are based on. It's called "built-in" because the model is provided by the browser, so web pages don't need to download it themselves. There's no need for framework code, just a simple prompt() API, and the model can be reused by all tabs. For now, a download still needs to happen: the model doesn't come with the Chrome installation, so the first site that uses the API pays for the download. Over time, though, the cost is amortized: once more apps start using the model, there's no need to re-download it. Various devices might also start to come with their own pre-installed models. For example, newer Windows PCs already ship with Phi, which you can use from the Windows Copilot Runtime when building native apps.

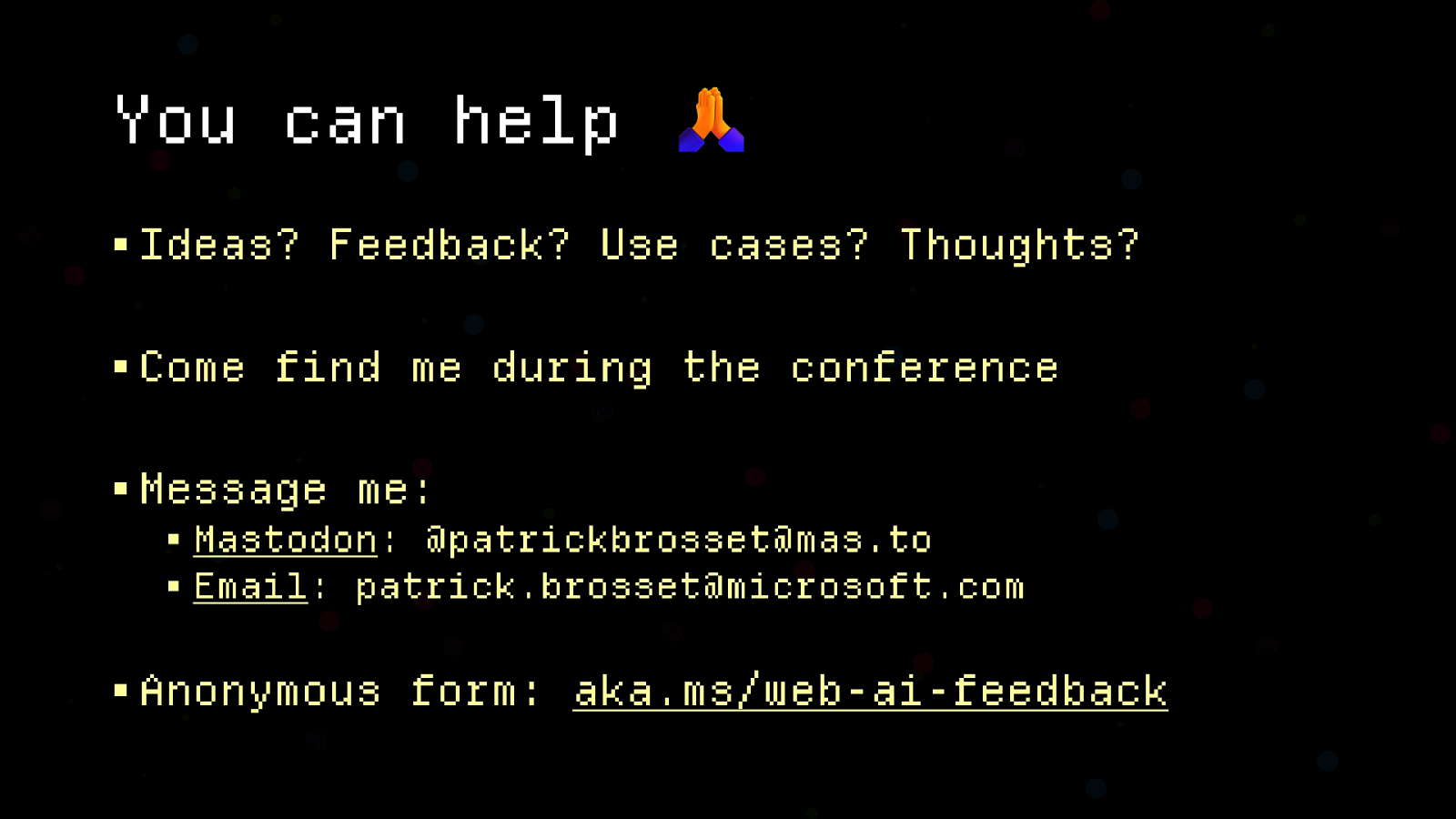

So, it's already happening, but it's not shipping yet. This is very early exploration. Google is mostly using it to engage the community and figure out whether developers need such APIs, and which ones.
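To illustrate how little code the built-in approach needs compared to BYOM, here is a hedged sketch of the email-thread summary demo. It assumes the shape of Chrome's experimental Prompt API as exposed behind a flag in late 2024 (a `window.ai.languageModel` object with `capabilities()` and `create()`, and sessions with `prompt()`). Those names changed between Chrome versions, so the interfaces are declared locally as assumptions; `buildSummaryPrompt` and `summarizeThread` are hypothetical helpers.

```typescript
// ASSUMED shapes of Chrome's experimental Prompt API; not a stable standard.
type Availability = "readily" | "after-download" | "no";

interface LanguageModelSession {
  prompt(input: string): Promise<string>;
}

interface LanguageModelFactory {
  capabilities(): Promise<{ available: Availability }>;
  create(): Promise<LanguageModelSession>;
}

// Hypothetical helper: turns an email thread into a single summarization prompt.
function buildSummaryPrompt(emails: string[]): string {
  const thread = emails
    .map((body, i) => `Email ${i + 1}:\n${body}`)
    .join("\n\n");
  return `Summarize the following email thread in three sentences.\n\n${thread}`;
}

// Returns a summary, or null when no built-in model can be used.
async function summarizeThread(
  factory: LanguageModelFactory | undefined,
  emails: string[],
): Promise<string | null> {
  if (!factory) return null; // the API isn't exposed in this browser
  const { available } = await factory.capabilities();
  if (available === "no") return null; // device can't run the model
  // Assumed: with "after-download", create() starts the one-time model
  // download that the first site using the API has to wait for.
  const session = await factory.create();
  return session.prompt(buildSummaryPrompt(emails));
}

// Assumed usage in Chrome with the experimental flag enabled:
// const summary = await summarizeThread((window as any).ai?.languageModel, emails);
```

Note that there is no model URL and no framework import here: the browser owns the model, which is what makes it shareable across sites, and also what makes its behavior hard to specify and keep consistent across browsers.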

So, why am I talking about this? I'm a PM on the Microsoft Edge team, and this is an area we're looking into as well. We want the web to succeed. Microsoft has invested in the web platform for years. Many of our apps run on the web platform, and we contribute a lot of code to the Chromium project, including, quite heavily, to WebNN and WebGPU. Surveys confirm that developers need the web to be on par with native, and other app platforms already let native apps use pre-installed models. So why not the web? Our partners also tell us they need this capability. Devices are increasingly capable of running complex language models, and with more and more apps built on the web platform, it makes sense to make these models available to web apps.