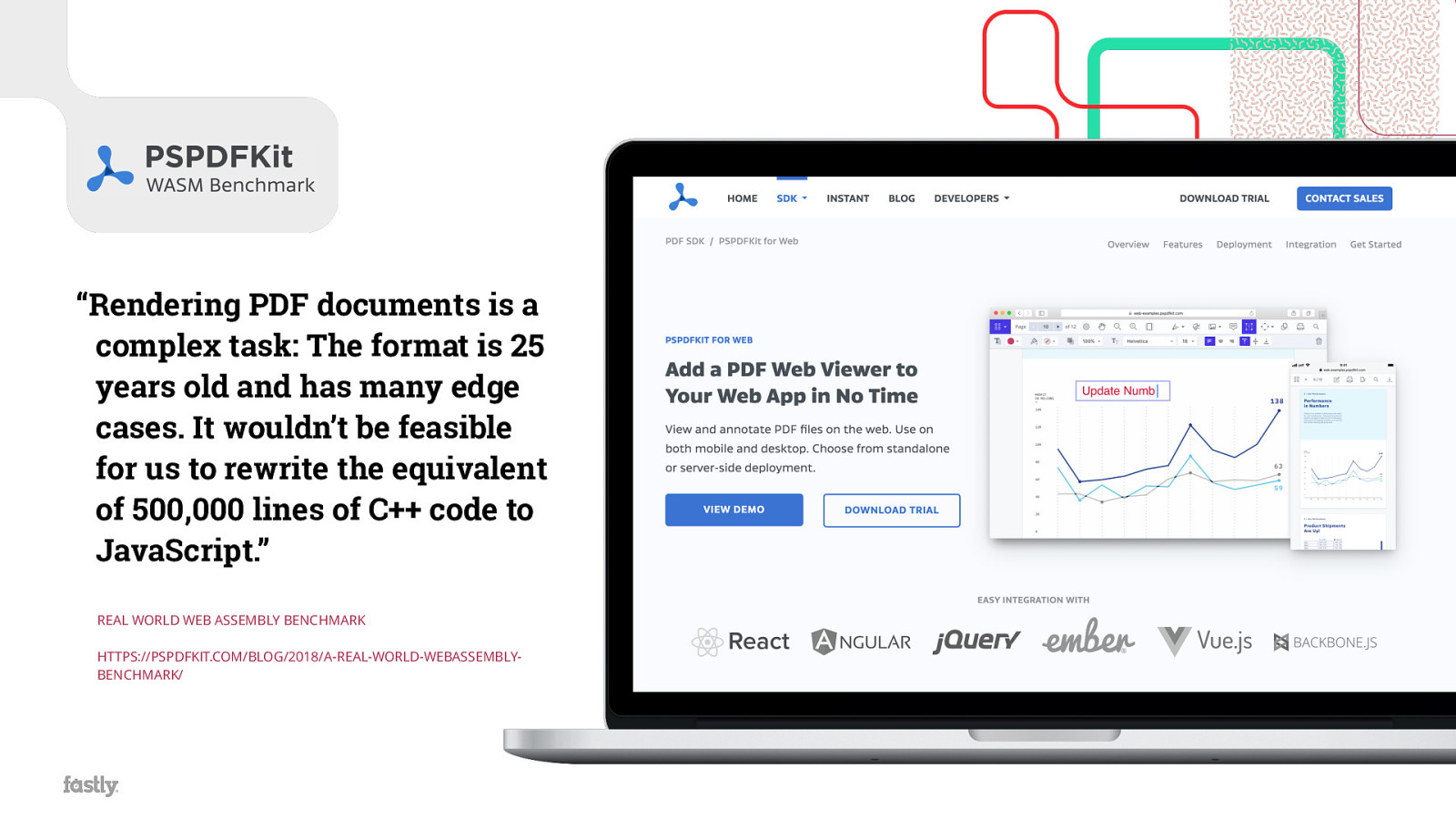

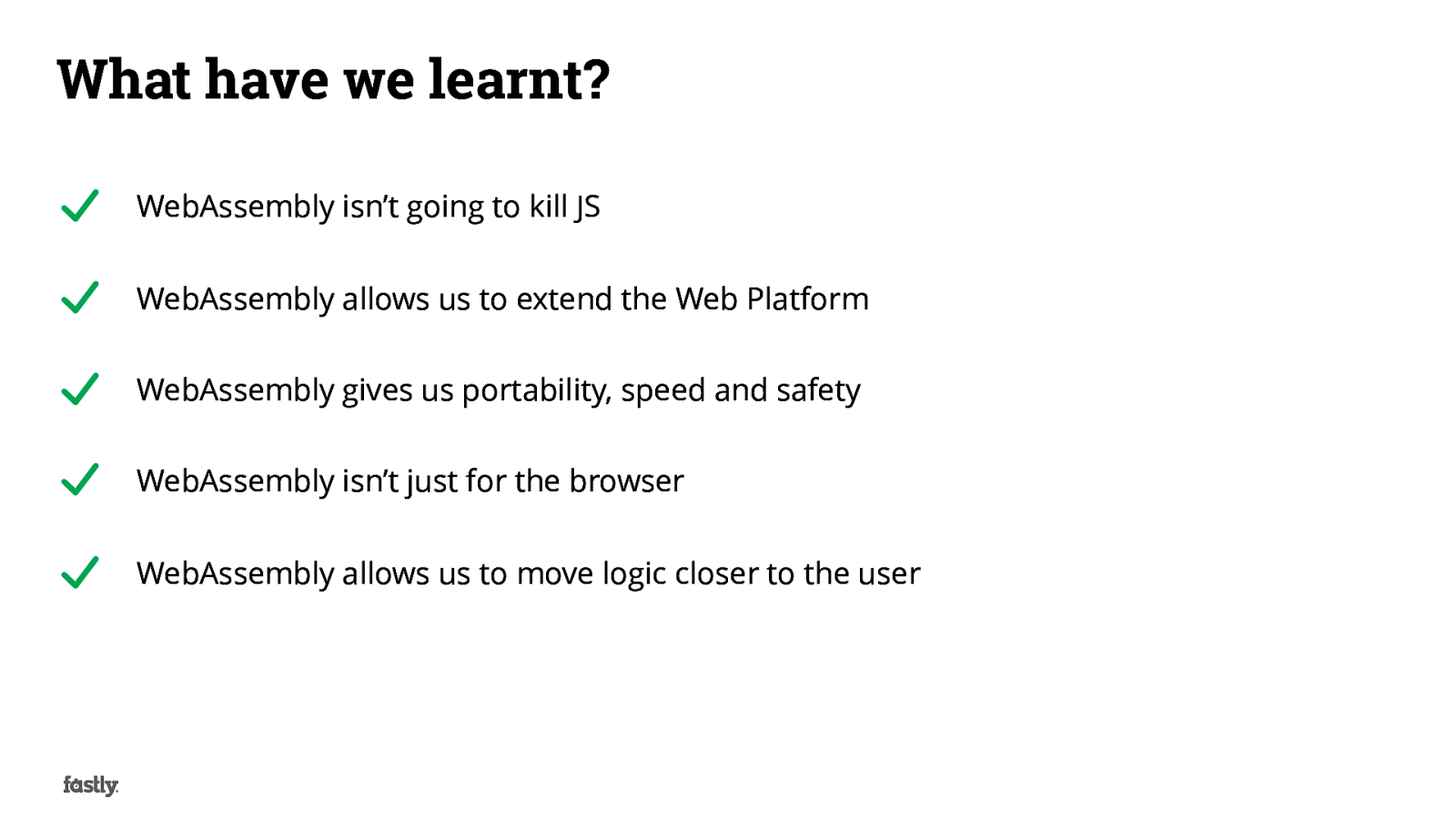

For the last 20 years, the Web Platform has largely consisted of three technologies: HTML, CSS and JavaScript. Initially we had HTML to describe documents and CSS to style them. Then, as the web grew and the demand for interactivity increased, we realised we needed a “glue language” that was easy for programmers to use, could be embedded within web browsers, and could be written directly in the web page markup. So the high-level language JavaScript was created. It’s a true testament to the design of these technologies that they’ve stood the test of time. Yes, we’ve had the occasional fling with the likes of Adobe Flash or Java Applets, which attempted to change how we program for the web, but none of them have survived.

Later, with the birth of AJAX, we were able to request fragments of the document, or even just data payloads, from our servers, then stitch these responses together and inject them into the DOM dynamically. This caused a paradigm shift from Thick Servers, Thin Clients to Thick Clients, Thin Servers. With the likes of React and other JavaScript frameworks, we now live in a world of single-page web applications where most of the heavy lifting and compute happens on the client.
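To make the AJAX idea concrete, here is a minimal sketch of requesting a data payload and stitching it into the page. It is an illustration only: the `CommentPayload` shape, the `renderComments` and `loadComments` helpers, and the `HtmlTarget` interface are all hypothetical names invented for this example, and the fetching function is passed in so the sketch does not assume any particular server or browser API.

```typescript
// Hypothetical payload shape; a real API and its fields would differ.
interface CommentPayload {
  author: string;
  text: string;
}

// Minimal stand-in for a DOM element: anything with an innerHTML property.
interface HtmlTarget {
  innerHTML: string;
}

// Escape text so that payload data cannot inject markup into the page.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Stitch a data payload into an HTML fragment.
function renderComments(comments: CommentPayload[]): string {
  const items = comments.map(
    (c) => `<li><strong>${escapeHtml(c.author)}</strong>: ${escapeHtml(c.text)}</li>`
  );
  return `<ul>${items.join("")}</ul>`;
}

// Request the payload, then inject the rendered fragment into the target
// without reloading the page. `fetchJson` is supplied by the caller.
async function loadComments(
  target: HtmlTarget,
  fetchJson: (url: string) => Promise<CommentPayload[]>,
  url: string
): Promise<void> {
  const comments = await fetchJson(url);
  target.innerHTML = renderComments(comments);
}
```

In a browser, `target` could be a real element and `fetchJson` a thin wrapper such as `(u) => fetch(u).then((r) => r.json())`, pointed at whatever endpoint your server exposes. The server only returns data; the client decides how it becomes markup, which is the "thick client, thin server" split described above.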