🐲 Here be Dragons Stacktraces
Flink SQL for Non-Java Developers
Robin Moffatt, Principal DevEx Engineer @ Decodable
@rmoff / 19 Mar 2024 / #kafkasummit

A presentation at Kafka Summit 2024 in March 2024 in London, UK by Robin Moffatt


Actual footage of a SQL Developer looking at Apache Flink for the first time

What Is Apache Flink?

A Brief History of Flink

or

Back in the time of dinosaurs Hadoop
• Started life as a research project in 2011, called Stratosphere.
• This was the time of MapReduce. Java and Scala were the only way to do this.

Flink is a big project
• Flink
• Stateful Functions
• ML
• Kubernetes Operator
• CDC Connector
• Paimon (incubating)

@decodableco @rmoff / #kafkasum i m Capabilities t

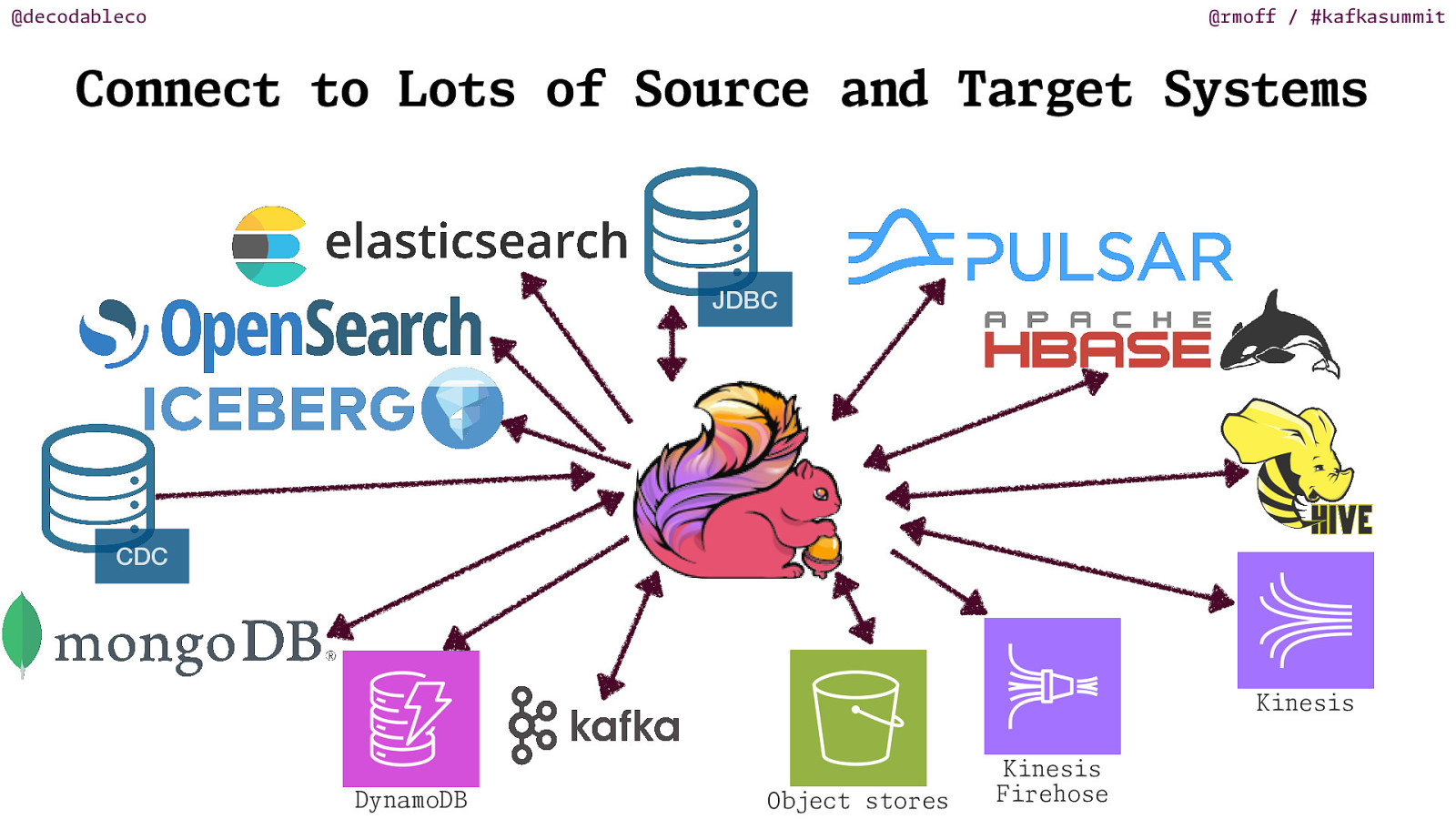

@decodableco @rmoff / #kafkasum Connect to Lots of Source and Target Systems JDBC CDC Kinesis i m DynamoDB Object stores Kinesis Firehose t

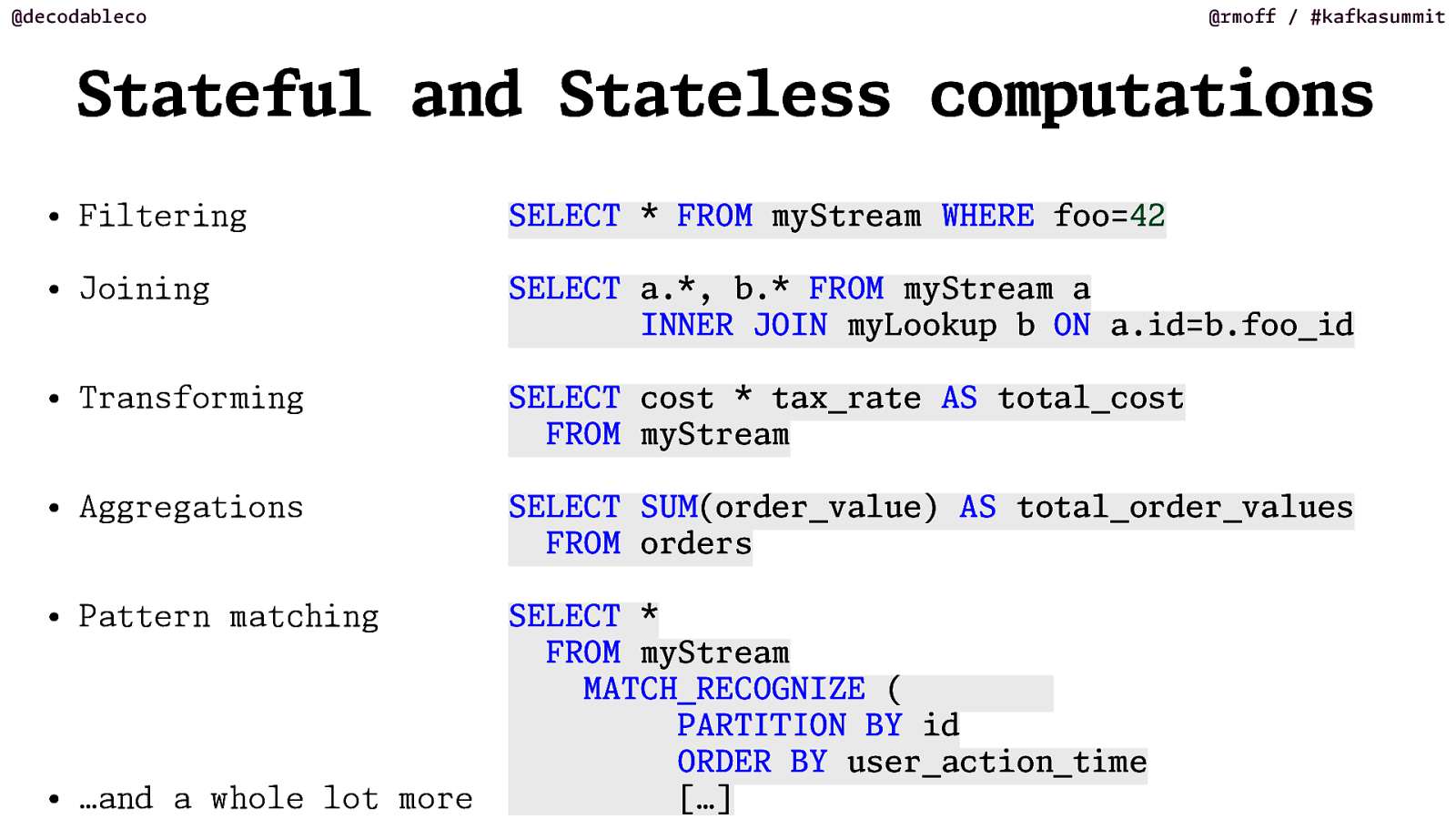

Stateful and Stateless computations
• Filtering: SELECT * FROM myStream WHERE foo=42
• Joining: SELECT a.*, b.* FROM myStream a INNER JOIN myLookup b ON a.id=b.foo_id
• Transforming: SELECT cost * tax_rate AS total_cost FROM myStream
• Aggregations: SELECT SUM(order_value) AS total_order_values FROM orders
• Pattern matching: SELECT * FROM myStream MATCH_RECOGNIZE ( PARTITION BY id ORDER BY user_action_time […]
• …and a whole lot more

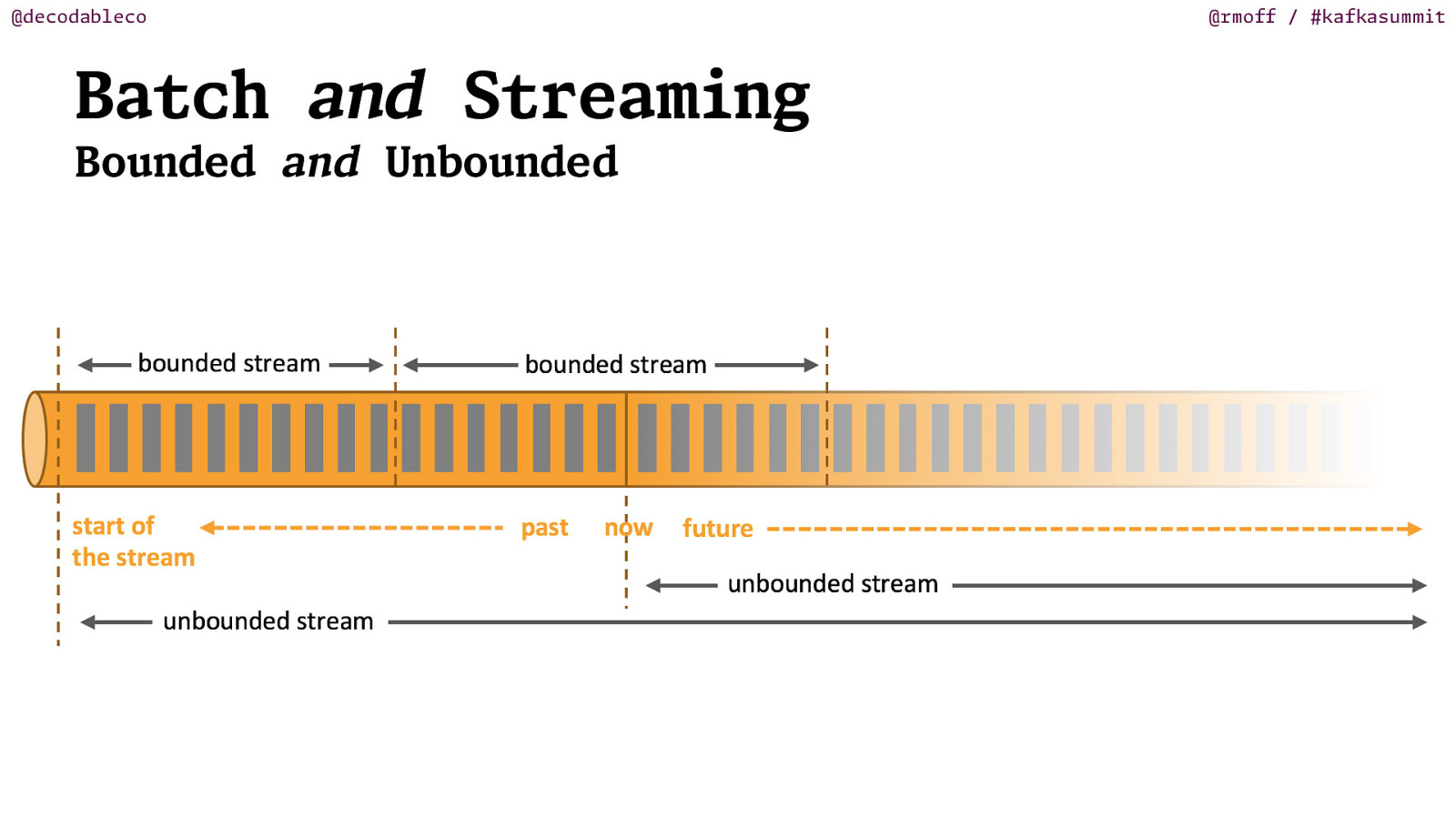

Batch and Streaming
Bounded and Unbounded

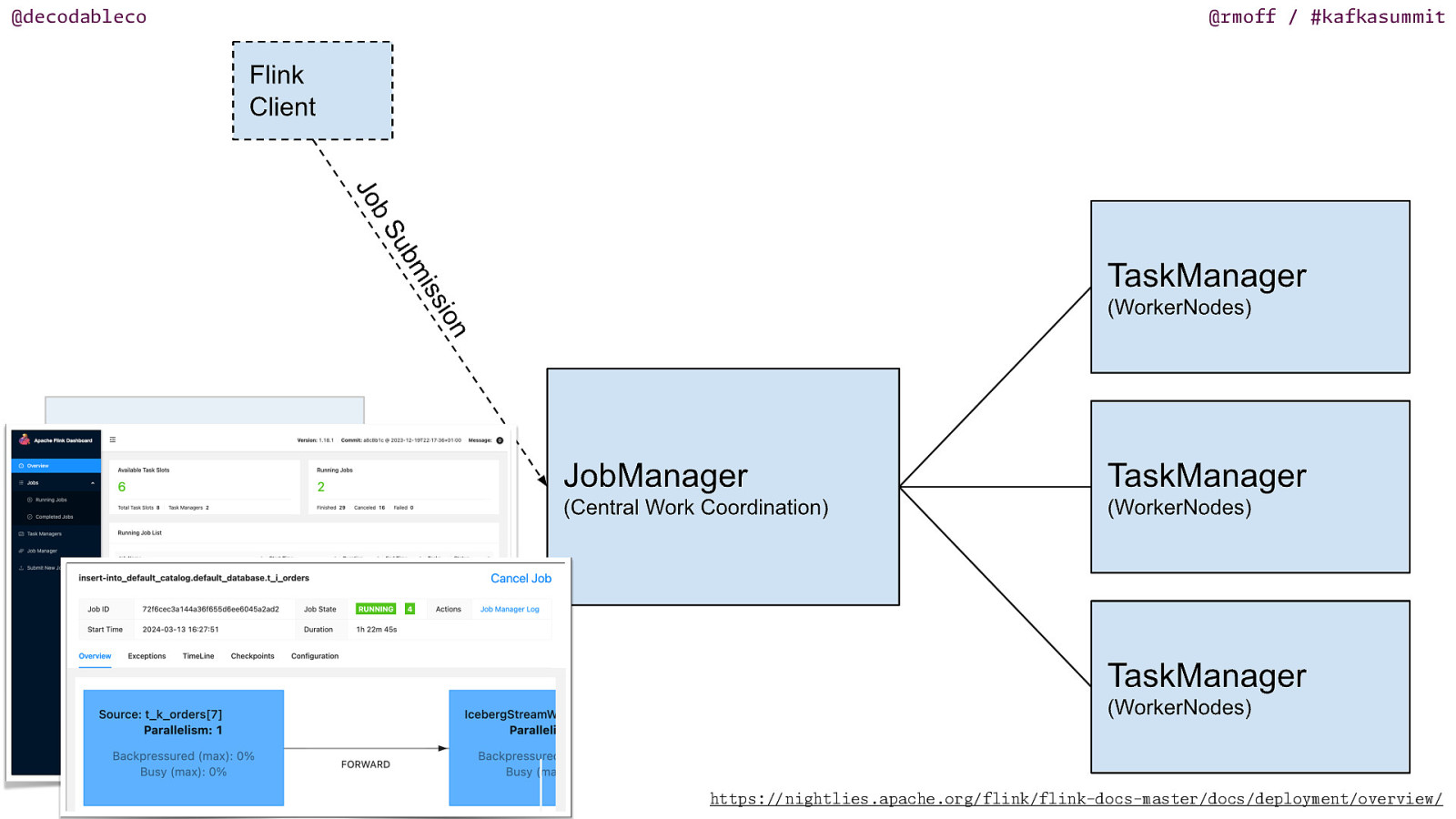

How Does Flink Work?

<< magic 🪄 🧙 >>

https://nightlies.apache.org/flink/flink-docs-master/docs/deployment/overview/

Flink’s SQL Engine: Let’s Open the Engine Room!
🗣 Timo Walther
📆 Tuesday
⏰ 5:30pm
🗺 Breakout Room 2
https://nightlies.apache.org/flink/flink-docs-master/docs/deployment/overview/

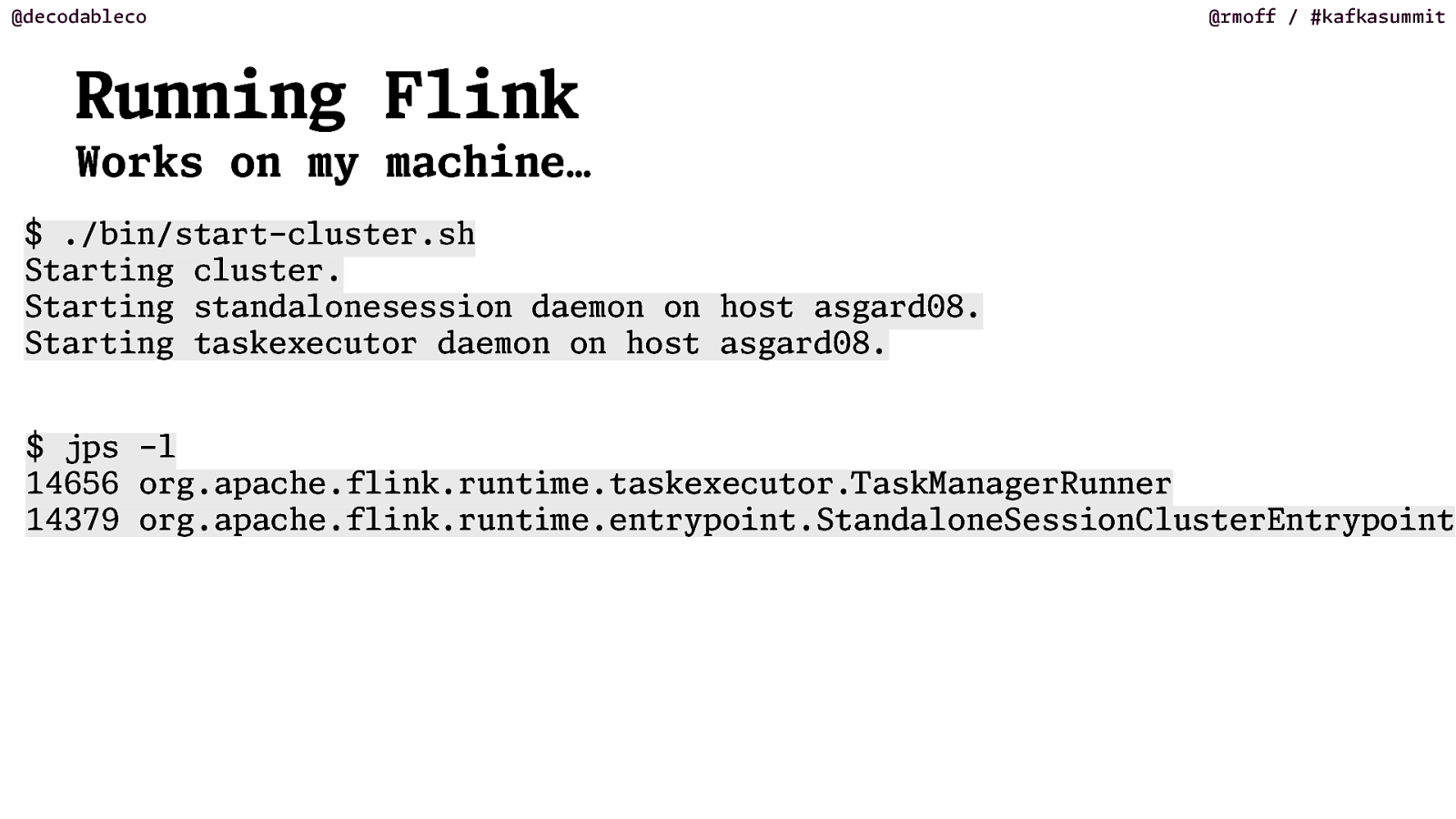

Running Flink
Works on my machine…
$ ./bin/start-cluster.sh
Starting cluster.
Starting standalonesession daemon on host asgard08.
Starting taskexecutor daemon on host asgard08.
$ jps -l
14656 org.apache.flink.runtime.taskexecutor.TaskManagerRunner
14379 org.apache.flink.runtime.entrypoint.StandaloneSessionClusterEntrypoint

Using Flink

It’s not just Java
• PyFlink
  • Added in 1.9.0 in 2019
• Flink SQL
  • Added in 1.5.0 in 2018

Flink SQL

SQL Language Support
• Built on Apache Calcite
• Common Table Expression (CTE) (WITH)
• Set-based operations
• Joins
• Aggregations
• And lots more…

Running Flink SQL
• SQL Client
• SQL Gateway
• REST API
• Hive
• JDBC Driver
• From Java or Python

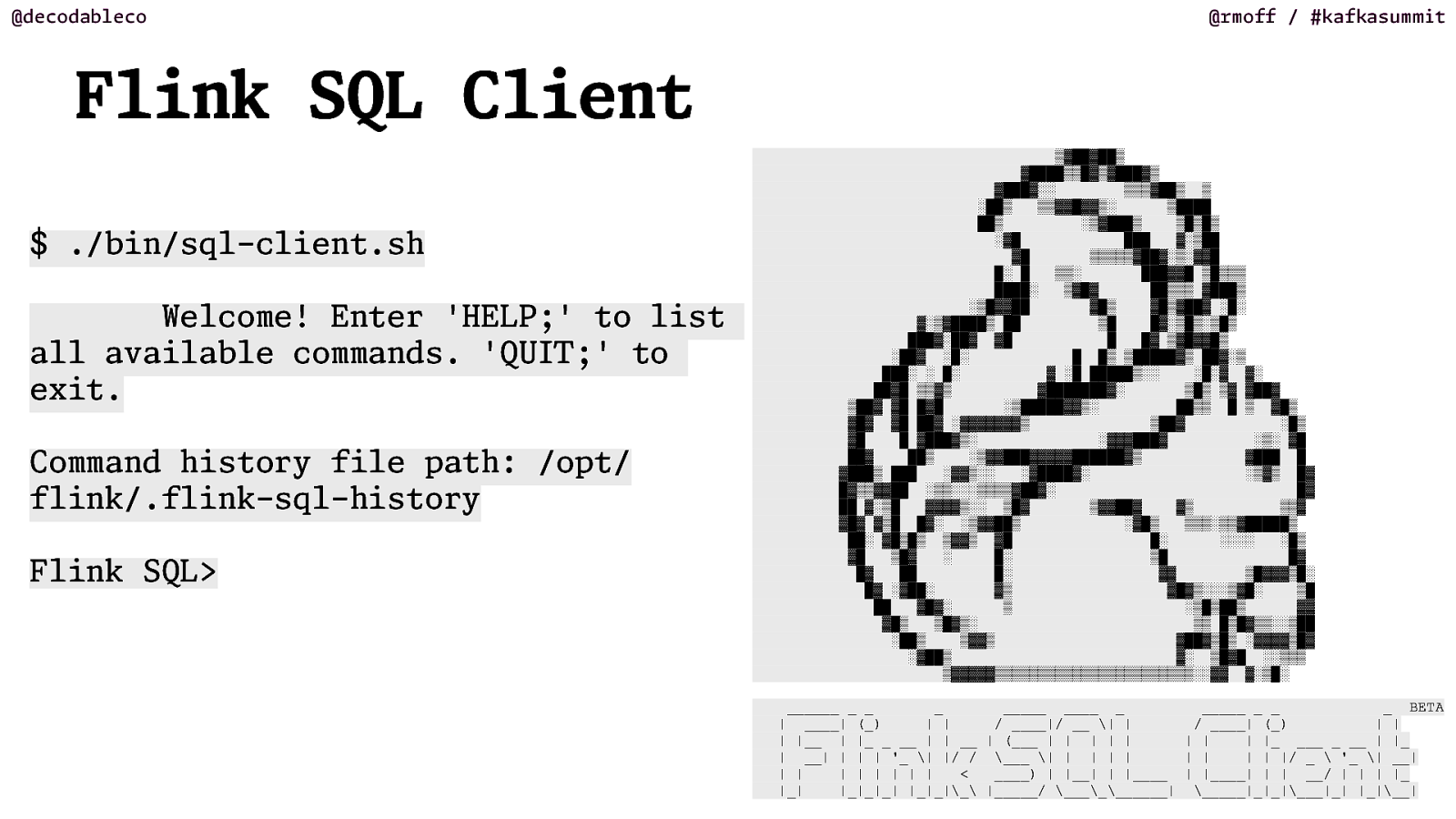

Flink SQL Client
$ ./bin/sql-client.sh
[ASCII-art Flink squirrel logo and "Flink SQL Client BETA" banner]
Welcome! Enter 'HELP;' to list all available commands. 'QUIT;' to exit.
Command history file path: /opt/flink/.flink-sql-history
Flink SQL>

DEMO
https://github.com/decodableco/examples/kafka-iceberg

A Few Useful Settings

Runtime Mode
SET 'execution.runtime-mode' = 'streaming';
• streaming [default]
• batch

Result Mode
SET 'sql-client.execution.result-mode' = 'table';
• table [default]
• changelog
• tableau

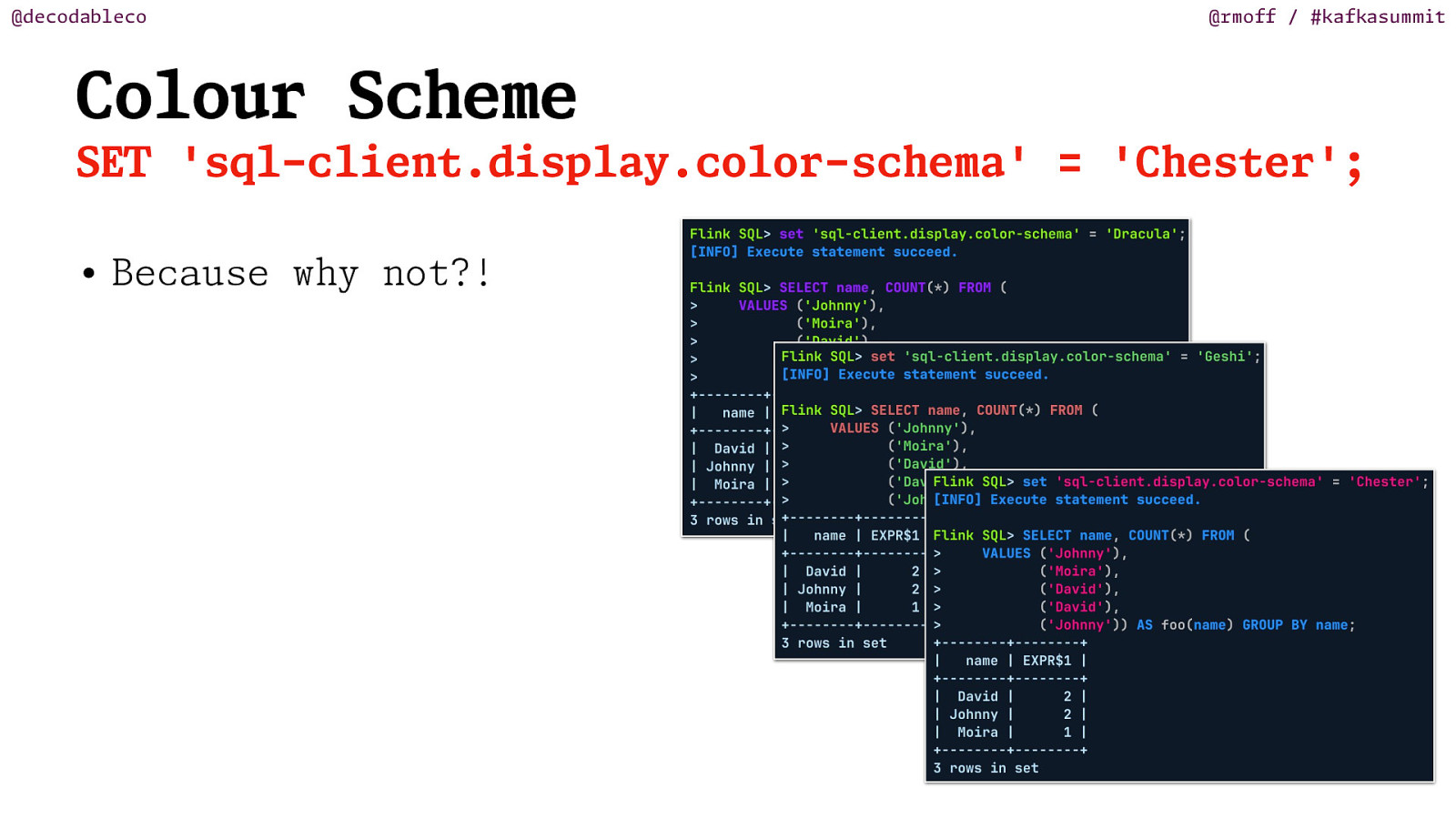

Colour Scheme
SET 'sql-client.display.color-schema' = 'Chester';
• Because why not?!

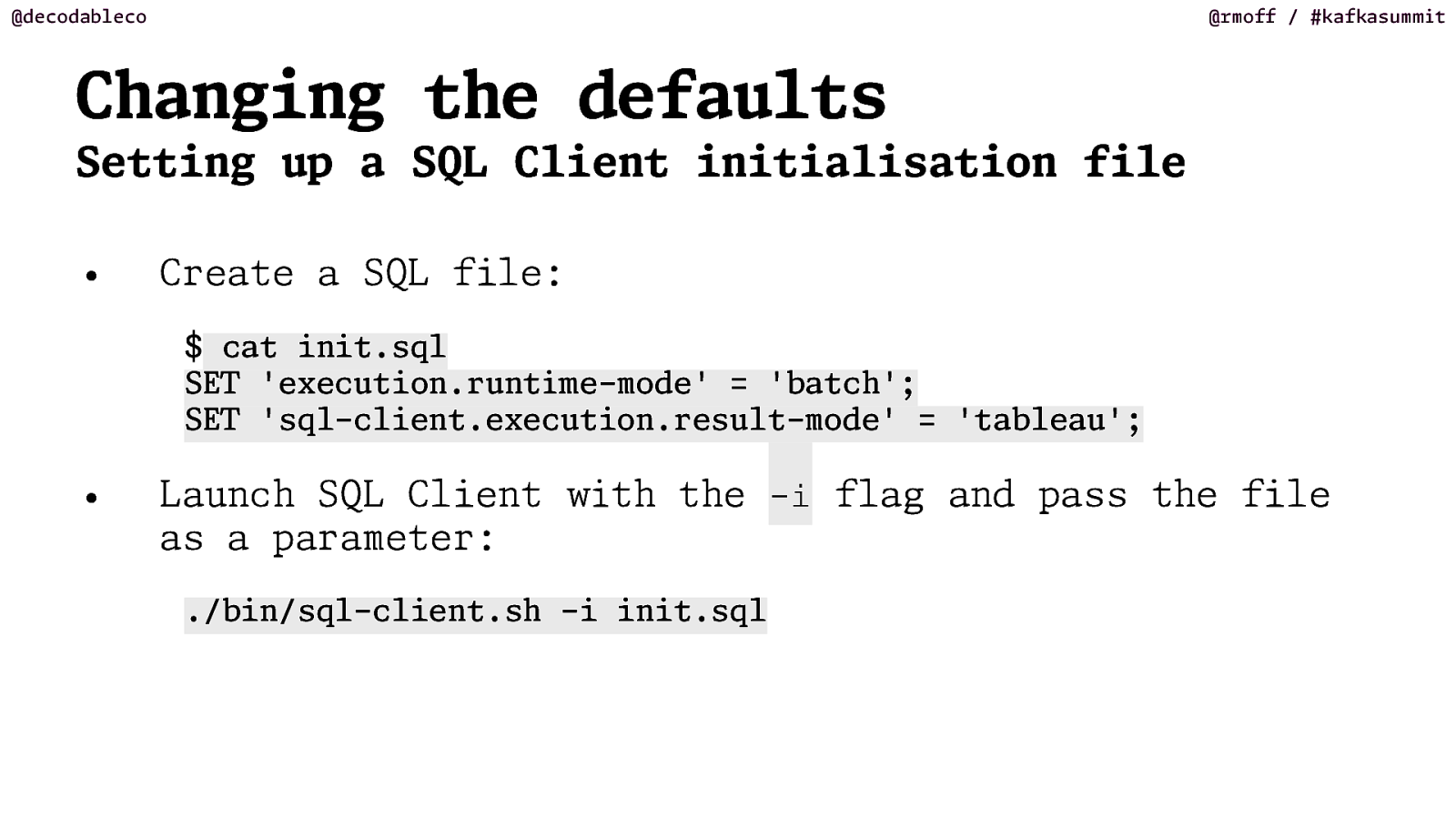

Changing the defaults
Setting up a SQL Client initialisation file
• Create a SQL file:
$ cat init.sql
SET 'execution.runtime-mode' = 'batch';
SET 'sql-client.execution.result-mode' = 'tableau';
• Launch SQL Client with the -i flag and pass the file as a parameter:
./bin/sql-client.sh -i init.sql

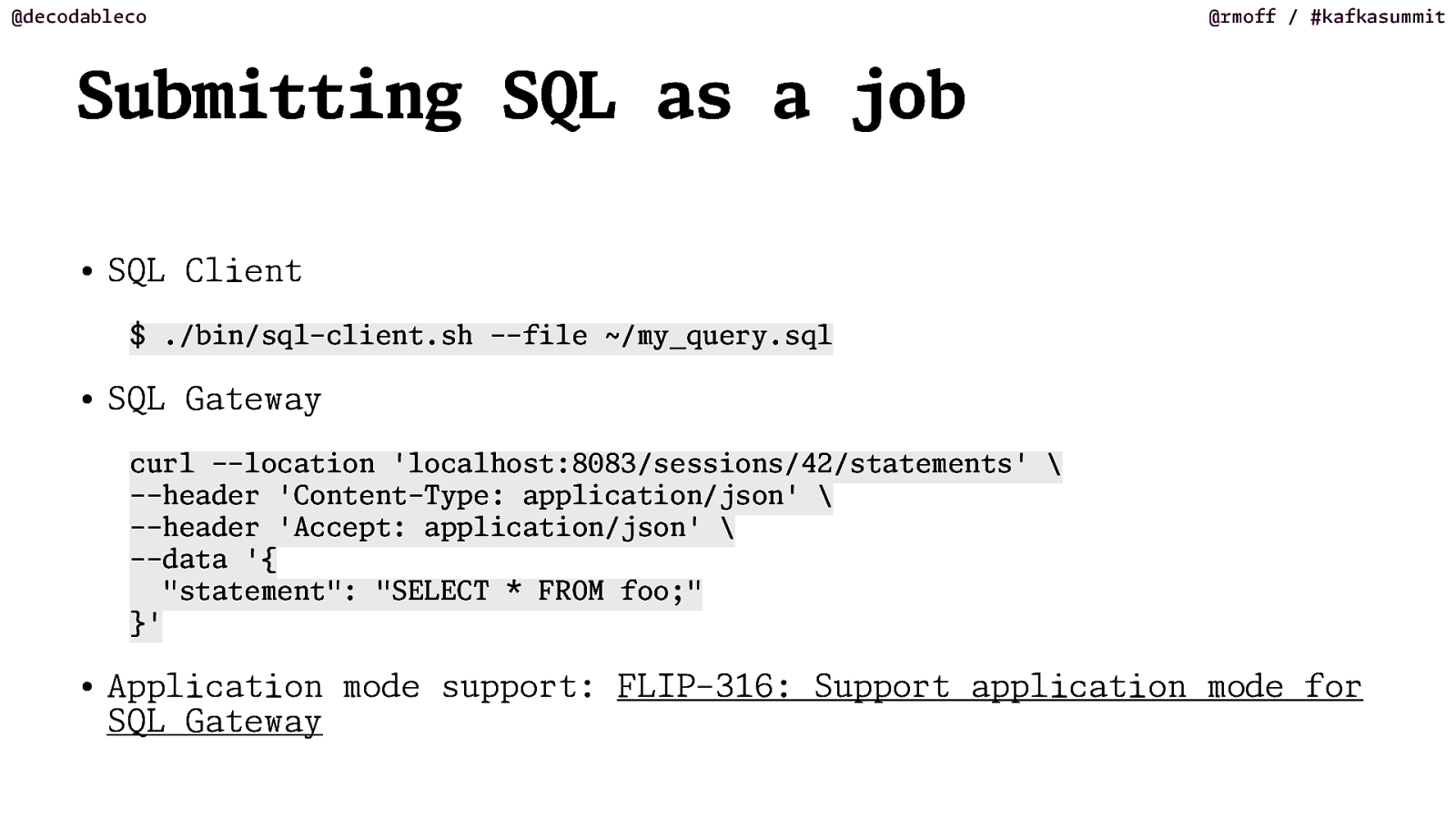

Submitting SQL as a job
• SQL Client
$ ./bin/sql-client.sh --file ~/my_query.sql
• SQL Gateway
curl --location 'localhost:8083/sessions/42/statements' \
  --header 'Content-Type: application/json' \
  --header 'Accept: application/json' \
  --data '{ "statement": "SELECT * FROM foo;" }'
• Application mode support: FLIP-316: Support application mode for SQL Gateway
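
The SQL Gateway call on this slide assumes that session 42 already exists. A rough sketch of the whole round trip (open a session, submit a statement, fetch the result) might look like the following. It assumes a SQL Gateway listening on localhost:8083 and the /v1 REST paths described in the Flink docs. The function names and the sed-based JSON parsing are made up for illustration and are not part of Flink.

```shell
# Assumption: SQL Gateway reachable here; override with GATEWAY=... if not.
GATEWAY="${GATEWAY:-http://localhost:8083}"

# Pull a string field (e.g. sessionHandle) out of a small JSON response.
# sed keeps the sketch dependency-free; jq would be more robust.
json_field() {
  sed -n "s/.*\"$1\" *: *\"\([^\"]*\)\".*/\1/p"
}

# Open a session and print its handle.
open_session() {
  curl -s --request POST "$GATEWAY/v1/sessions" | json_field sessionHandle
}

# Submit one SQL statement ($2) to a session ($1); print the operation handle.
# Note: the SQL is pasted straight into JSON, so it must not contain double quotes.
submit_statement() {
  curl -s --request POST "$GATEWAY/v1/sessions/$1/statements" \
    --header 'Content-Type: application/json' \
    --data "{\"statement\": \"$2\"}" | json_field operationHandle
}

# Fetch the first page of results for operation $2 in session $1.
fetch_result() {
  curl -s "$GATEWAY/v1/sessions/$1/operations/$2/result/0"
}

# Usage, once a gateway is running:
#   session=$(open_session)
#   op=$(submit_statement "$session" "SELECT 1")
#   fetch_result "$session" "$op"
```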

Some of the Gnarly Stuff

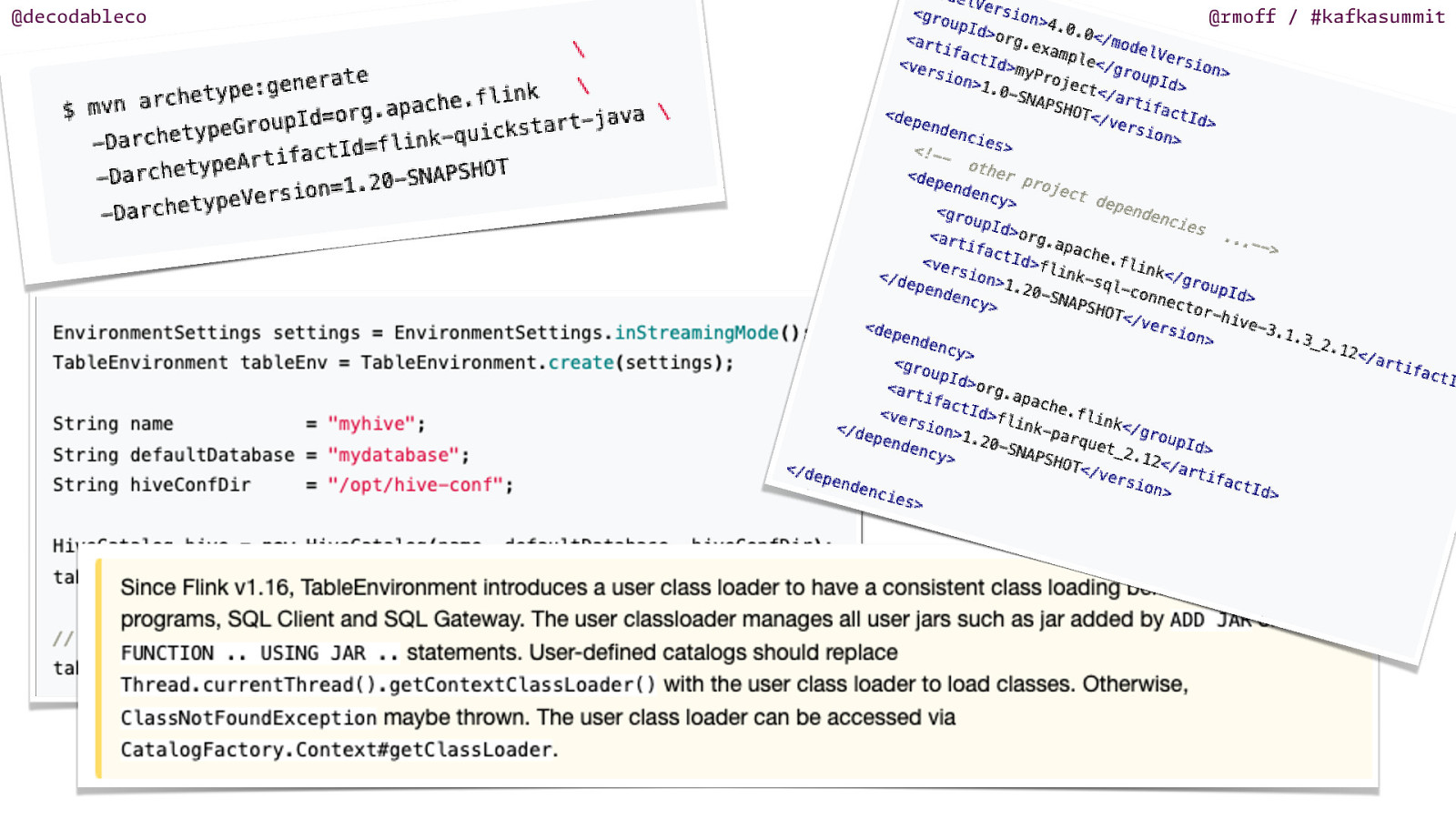

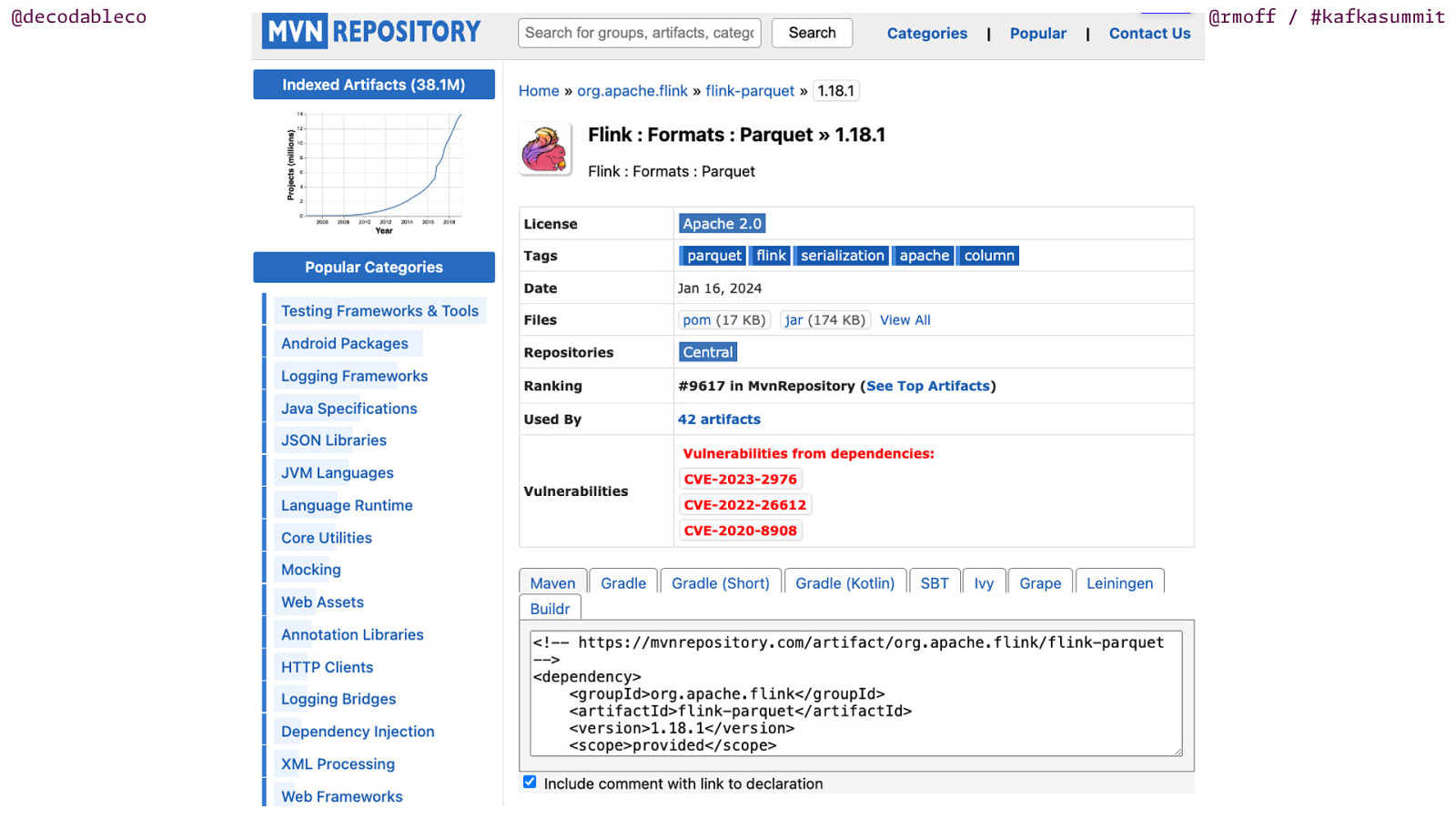

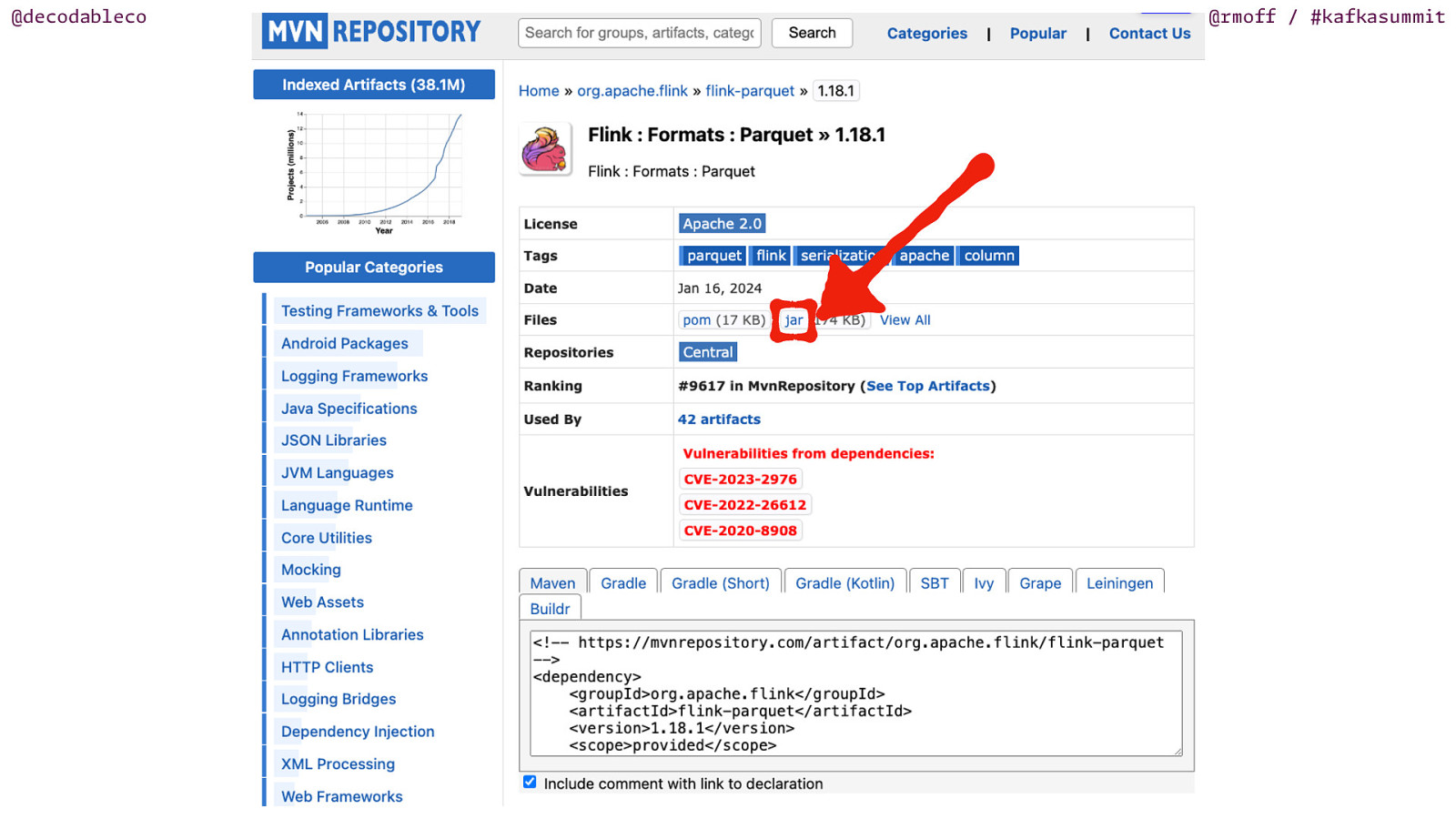

The Joy of JARs
• For each connector, format, and catalog you need to install dependencies.
• All of these are available as JARs (Java ARchive)

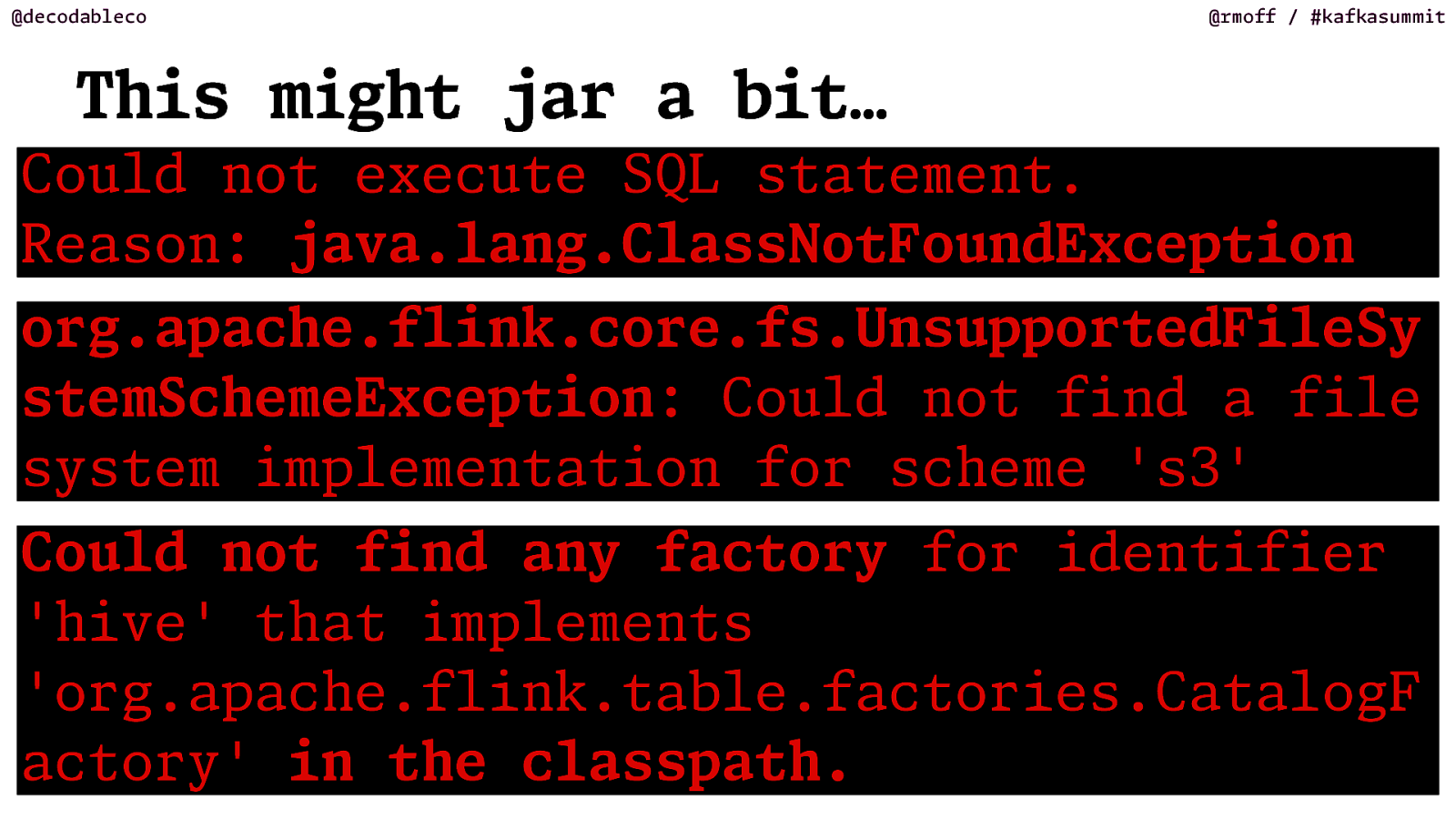

This might jar a bit…
Could not execute SQL statement. Reason: java.lang.ClassNotFoundException
org.apache.flink.core.fs.UnsupportedFileSystemSchemeException: Could not find a file system implementation for scheme 's3'
Could not find any factory for identifier 'hive' that implements 'org.apache.flink.table.factories.CatalogFactory' in the classpath.

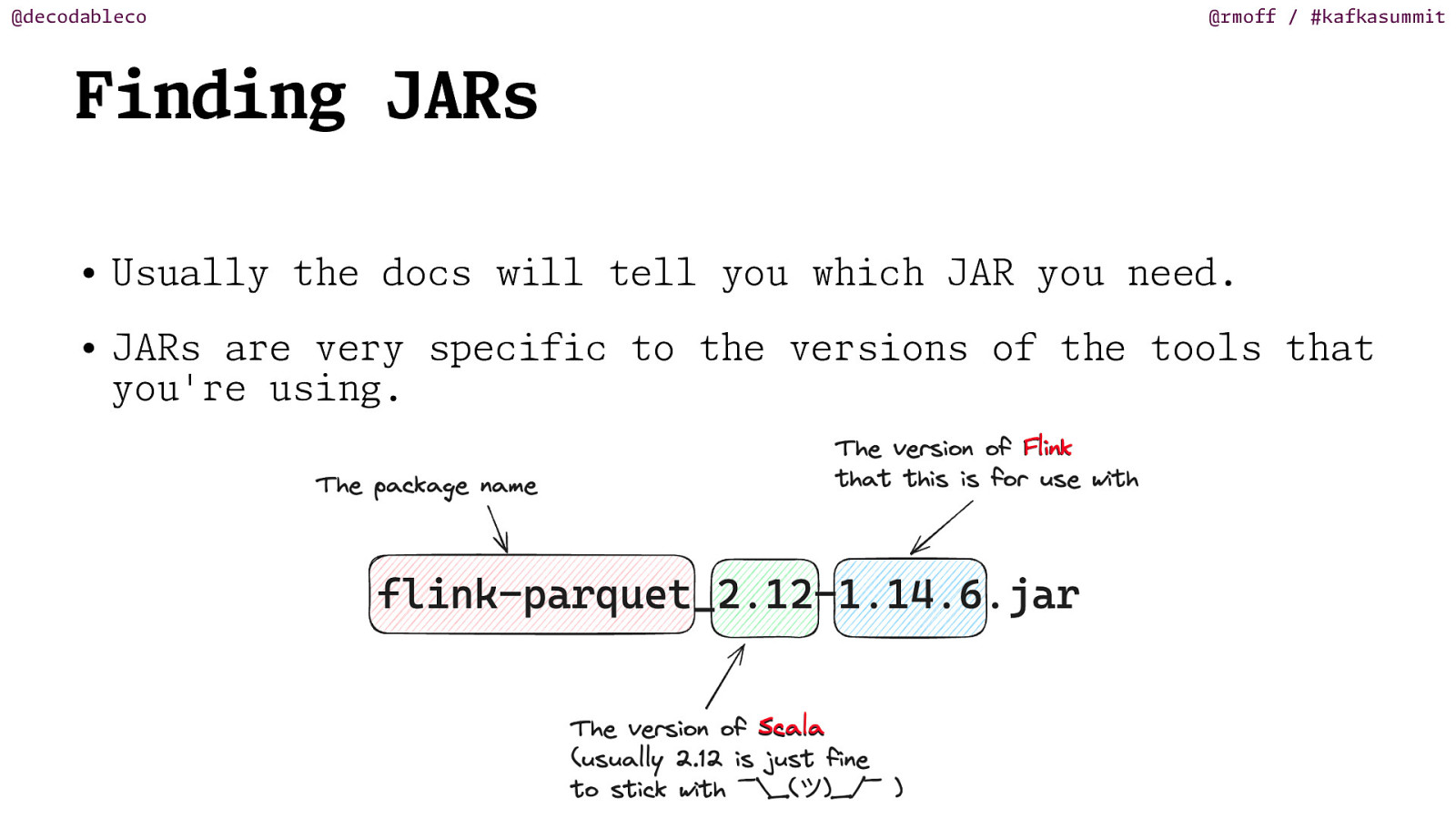

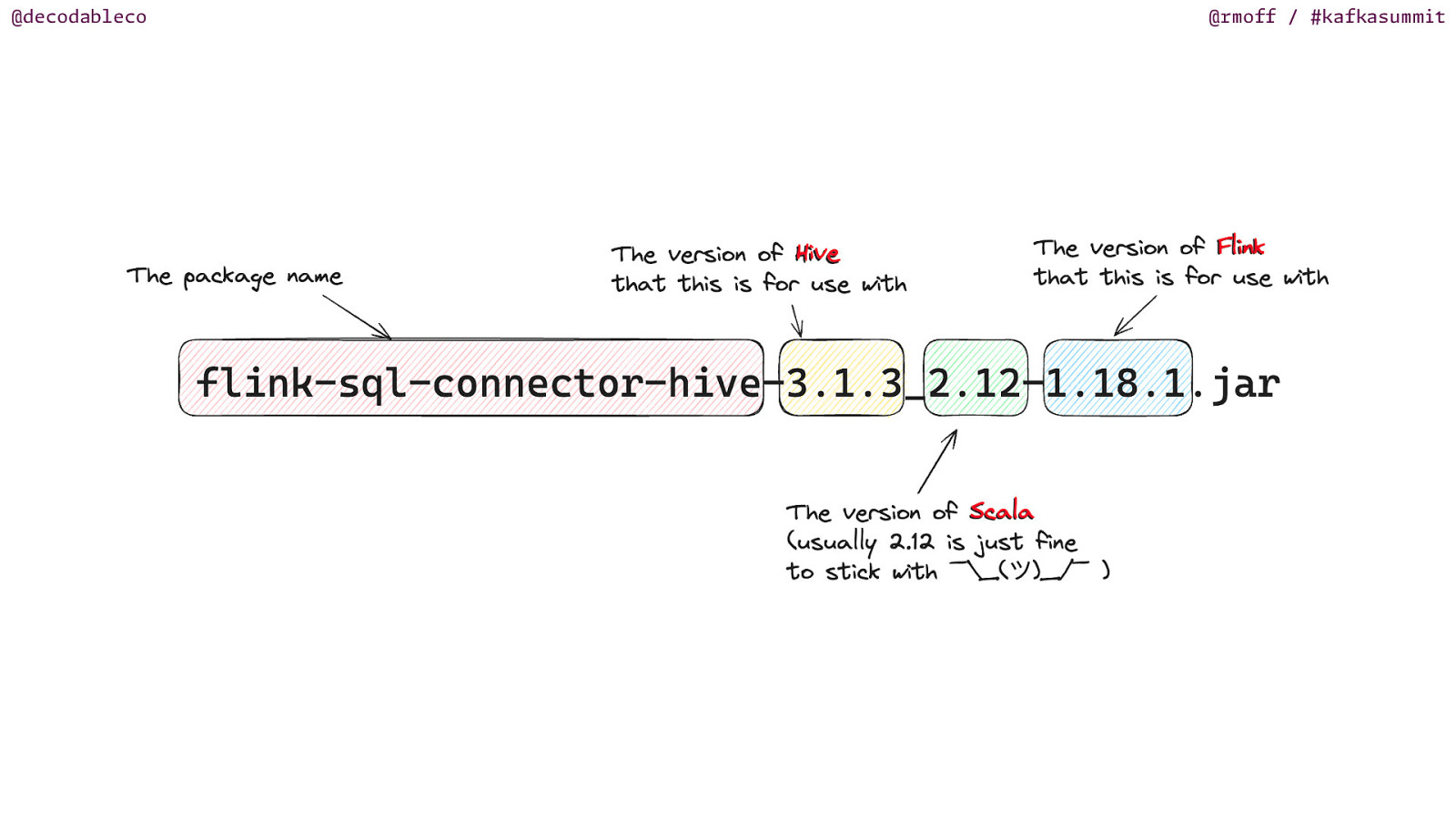

Finding JARs
• Usually the docs will tell you which JAR you need.
• JARs are very specific to the versions of the tools that you’re using.

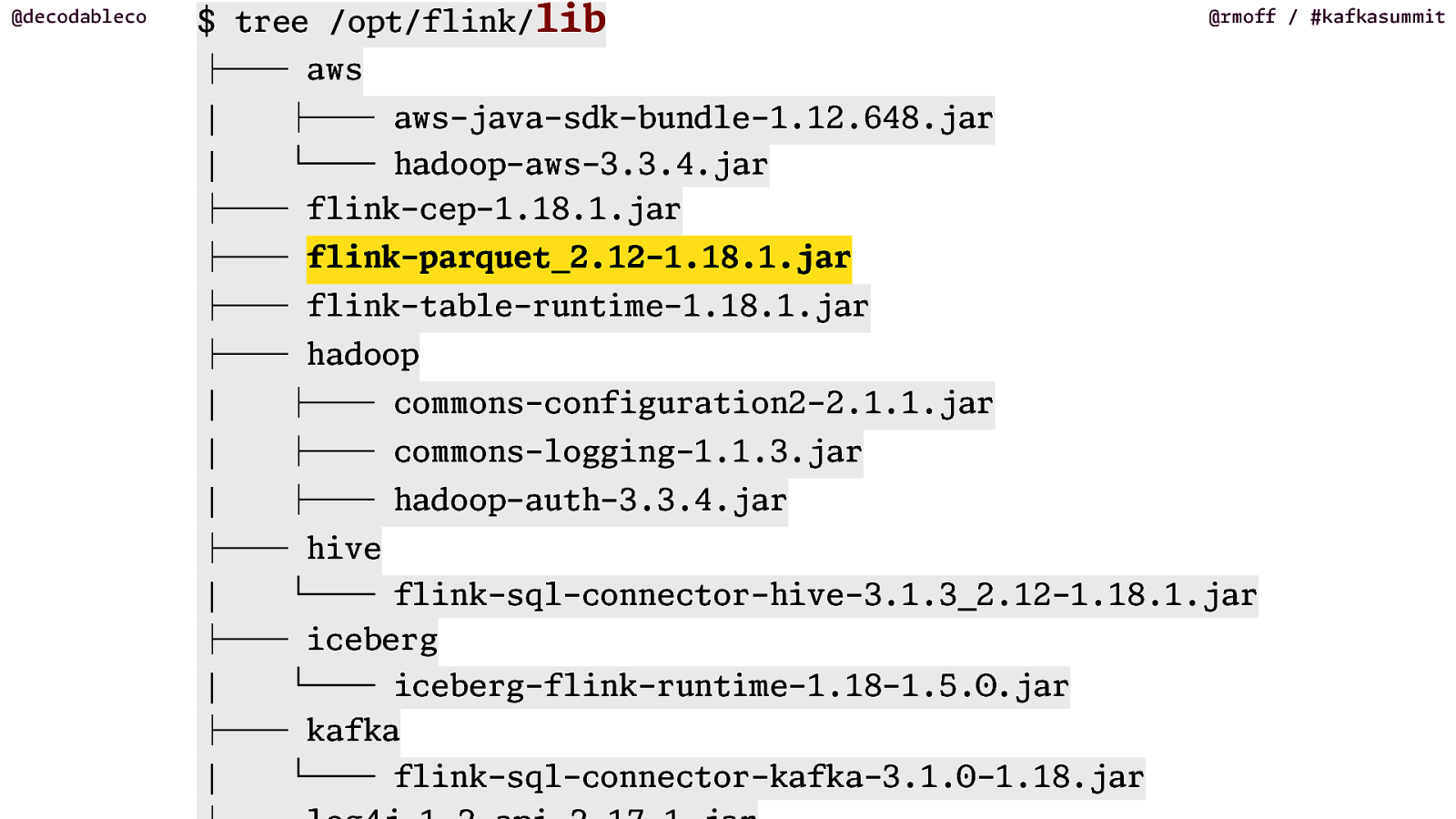

$ tree /opt/flink/lib
├── aws
│   ├── aws-java-sdk-bundle-1.12.648.jar
│   └── hadoop-aws-3.3.4.jar
├── flink-cep-1.18.1.jar
├── flink-parquet_2.12-1.18.1.jar
├── flink-table-runtime-1.18.1.jar
├── hadoop
│   ├── commons-configuration2-2.1.1.jar
│   ├── commons-logging-1.1.3.jar
│   ├── hadoop-auth-3.3.4.jar
├── hive
│   └── flink-sql-connector-hive-3.1.3_2.12-1.18.1.jar
├── iceberg
│   └── iceberg-flink-runtime-1.18-1.5.0.jar
├── kafka
│   └── flink-sql-connector-kafka-3.1.0-1.18.jar
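
Since JARs are so version-specific, a quick sanity check of a lib folder like this one can save some stacktraces. The sketch below is a heuristic only: it assumes that Flink-related JARs have "flink" in the file name and carry the Flink minor version (e.g. 1.18) somewhere after it, which holds for the listing on this slide but is not guaranteed for every connector. The function name is hypothetical.

```shell
# Print Flink-related JARs under a directory ($1) whose file name does not
# mention the expected Flink minor version ($2, e.g. 1.18).
# Heuristic: non-Flink JARs (hadoop-aws, aws-java-sdk-bundle, ...) are skipped.
check_flink_jars() {
  lib_dir="$1"
  flink_minor="$2"
  find "$lib_dir" -name '*.jar' | sort | while IFS= read -r jar; do
    name=$(basename "$jar")
    case "$name" in
      *flink*"$flink_minor"*) ;;                 # expected version present
      *flink*) echo "check version: $name" ;;    # Flink JAR, version not found
    esac
  done
}

# Usage:
#   check_flink_jars /opt/flink/lib 1.18
```

No output means nothing looked suspicious to the heuristic. It does not prove that the JARs are compatible.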

Don’t forget to restart!

Tables, Connectors, and Catalogs

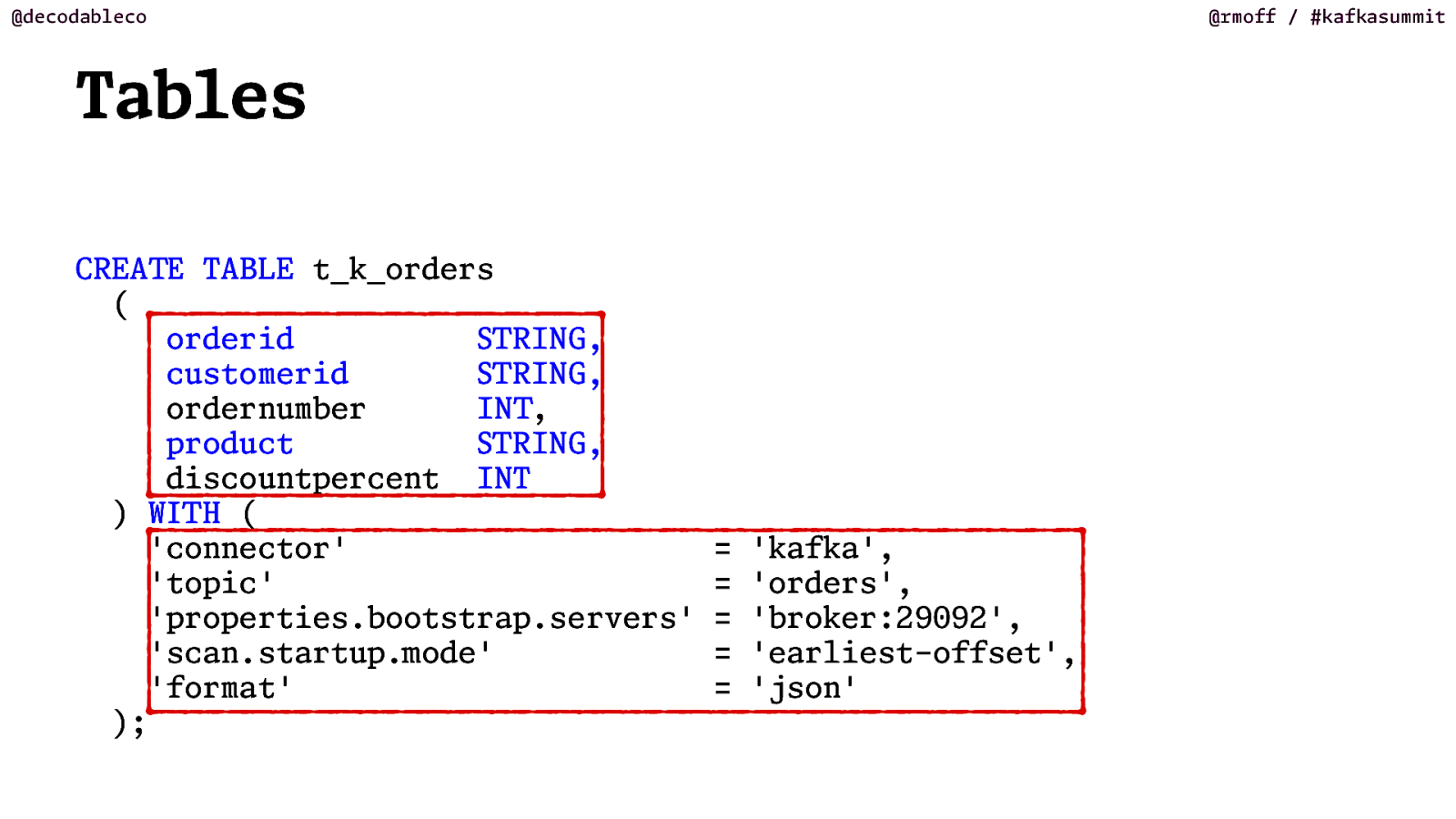

Tables
CREATE TABLE t_k_orders (
  orderid STRING,
  customerid STRING,
  ordernumber INT,
  product STRING,
  discountpercent INT
) WITH (
  'connector' = 'kafka',
  'topic' = 'orders',
  'properties.bootstrap.servers' = 'broker:29092',
  'scan.startup.mode' = 'earliest-offset',
  'format' = 'json'
);
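
One way to have this table defined each time the SQL Client starts is to keep the DDL in an initialisation file and pass it with the -i flag. A minimal sketch, assuming Flink is unpacked in the current directory and the Kafka connector JAR is already installed. The file name tables.sql is arbitrary.

```shell
# Write the table definition from the slide to a file.
cat > tables.sql <<'EOF'
CREATE TABLE t_k_orders (
  orderid          STRING,
  customerid       STRING,
  ordernumber      INT,
  product          STRING,
  discountpercent  INT
) WITH (
  'connector' = 'kafka',
  'topic' = 'orders',
  'properties.bootstrap.servers' = 'broker:29092',
  'scan.startup.mode' = 'earliest-offset',
  'format' = 'json'
);
EOF

# Start the SQL Client with the table already defined.
# Guarded so the snippet is harmless when run outside a Flink directory.
if [ -x ./bin/sql-client.sh ]; then
  ./bin/sql-client.sh -i tables.sql
fi
```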

This used to be simple
• The data and information about the data was all stored in the database
• Information Schema
• System Catalog
• Data Dictionary Views

Now it’s not so simple

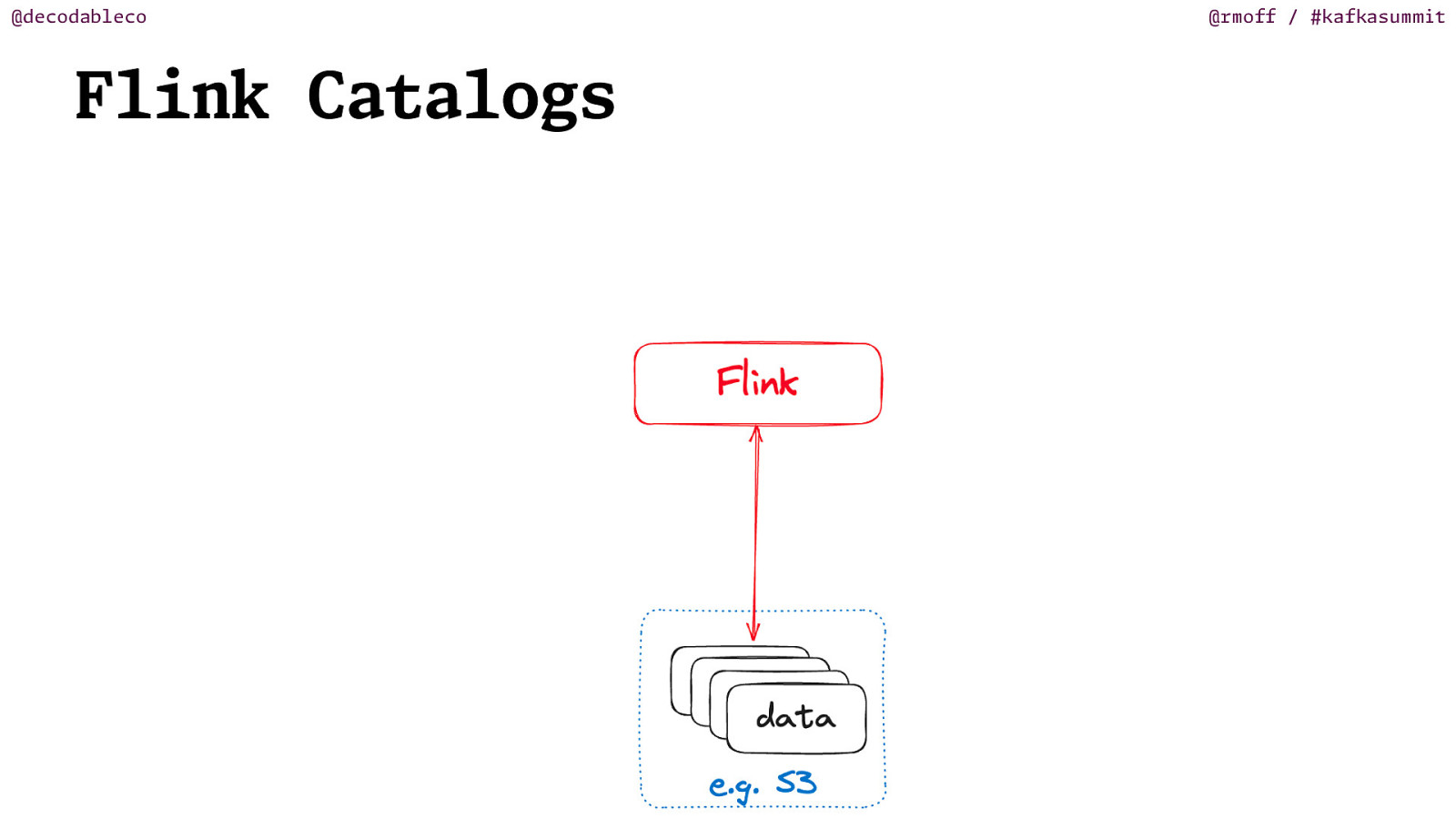

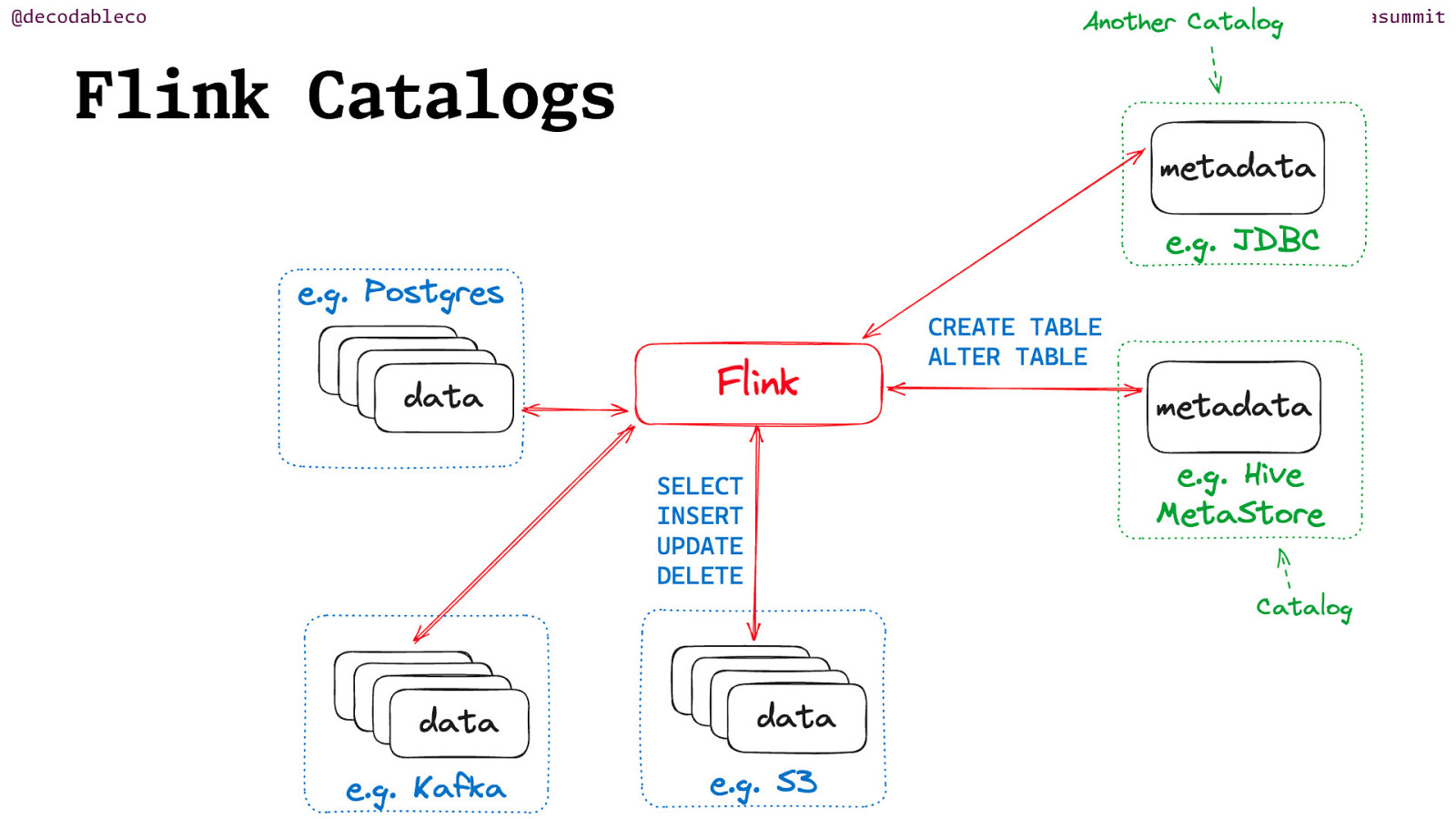

Flink Catalogs

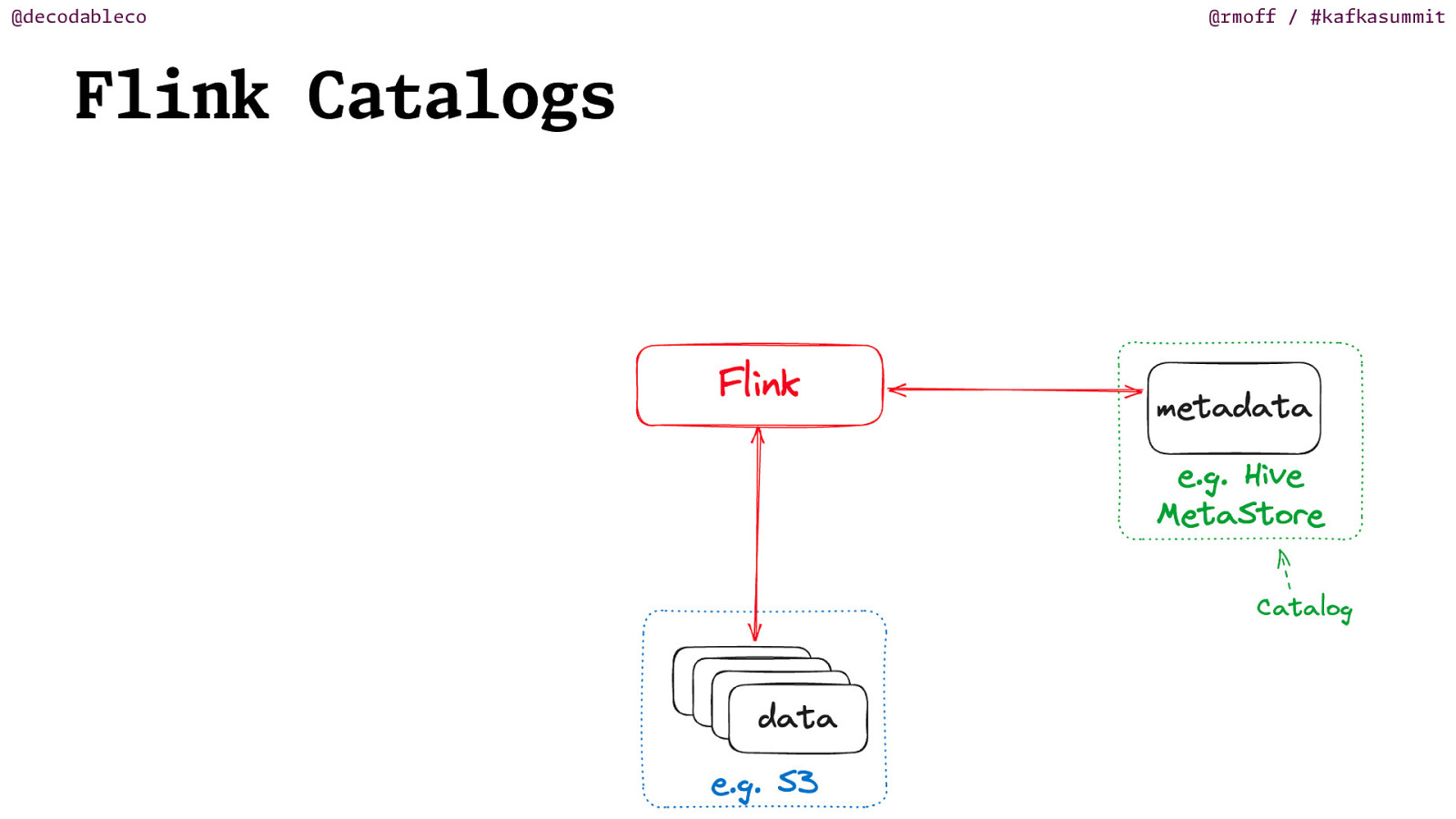

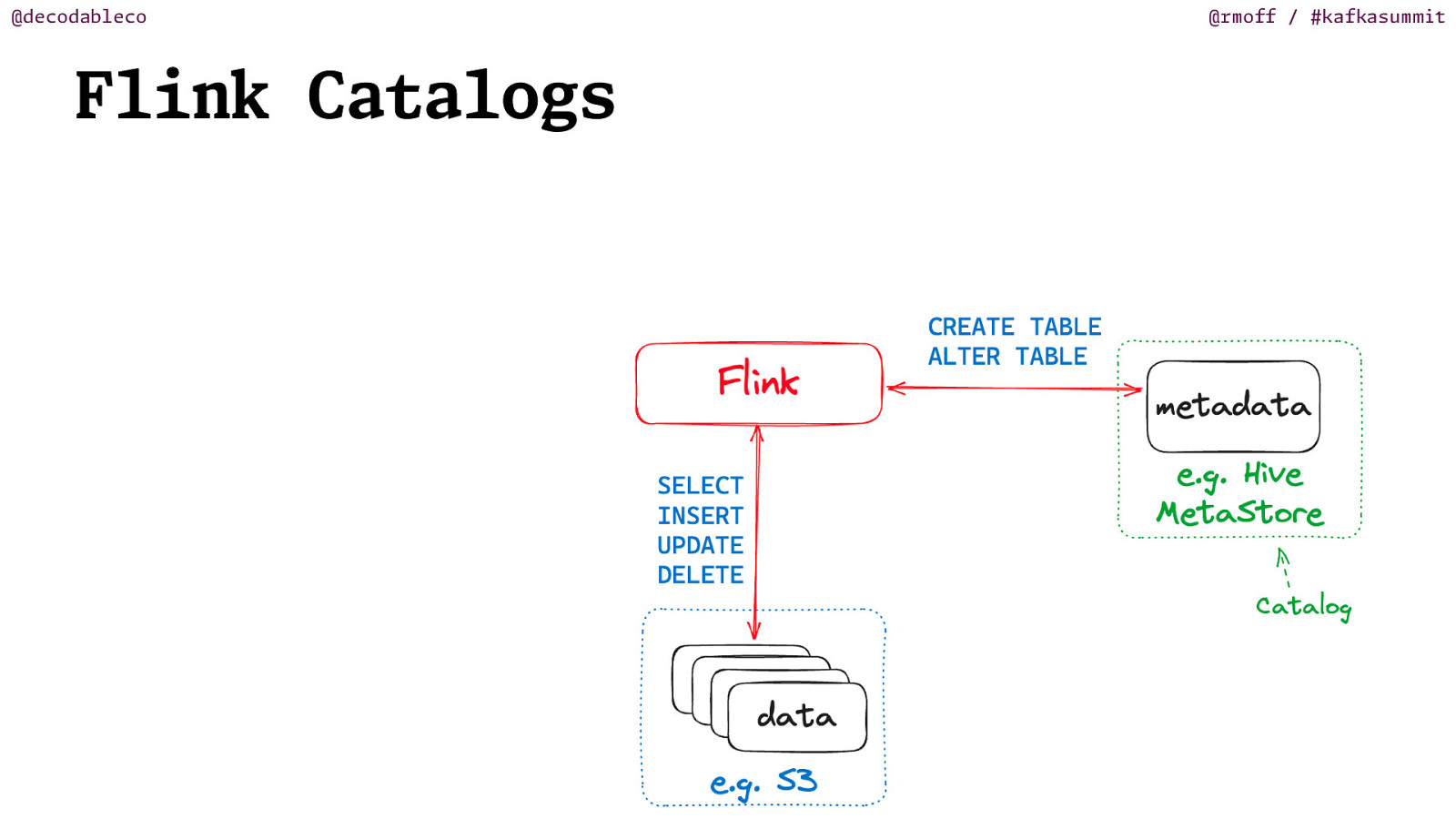

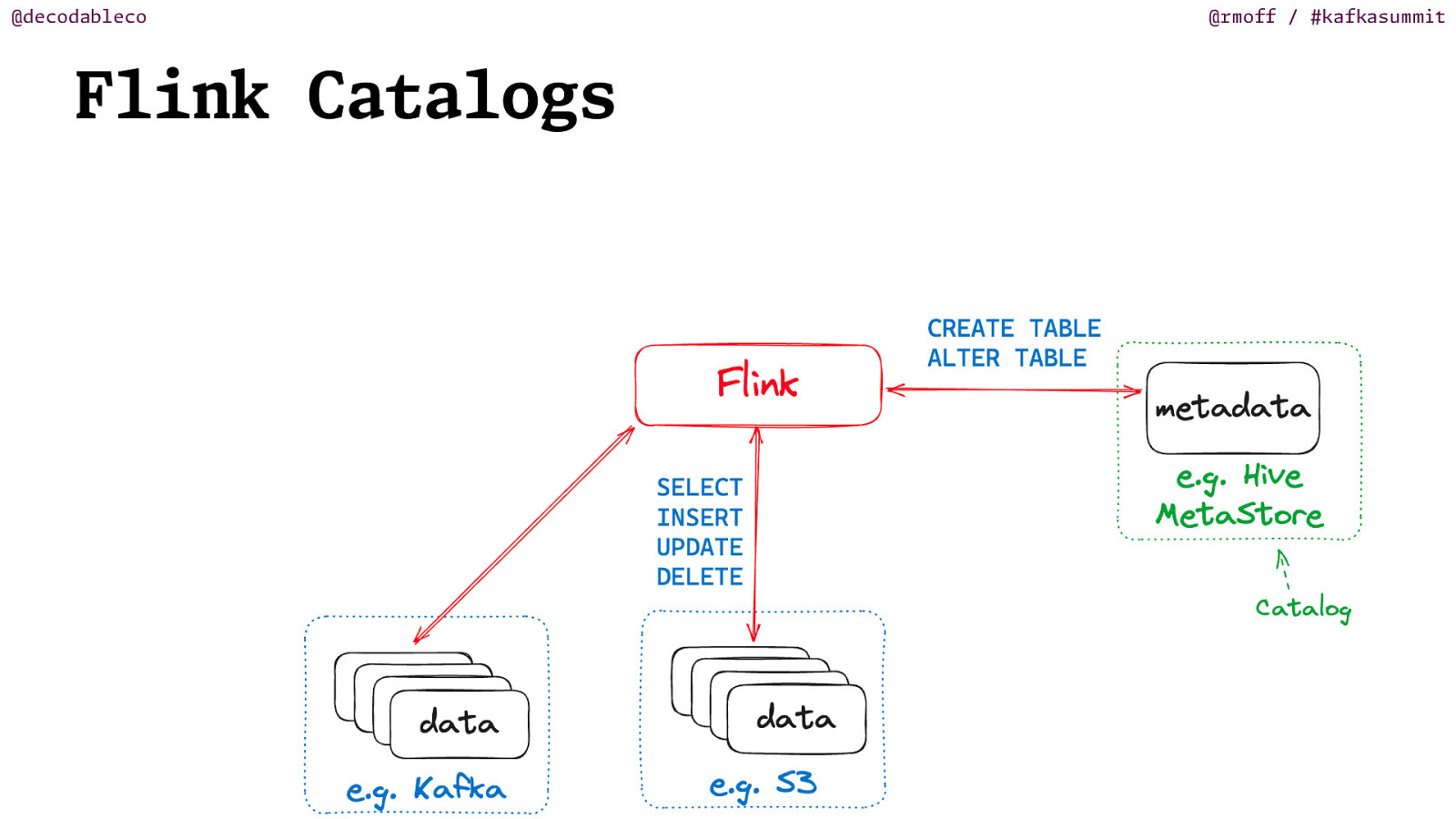


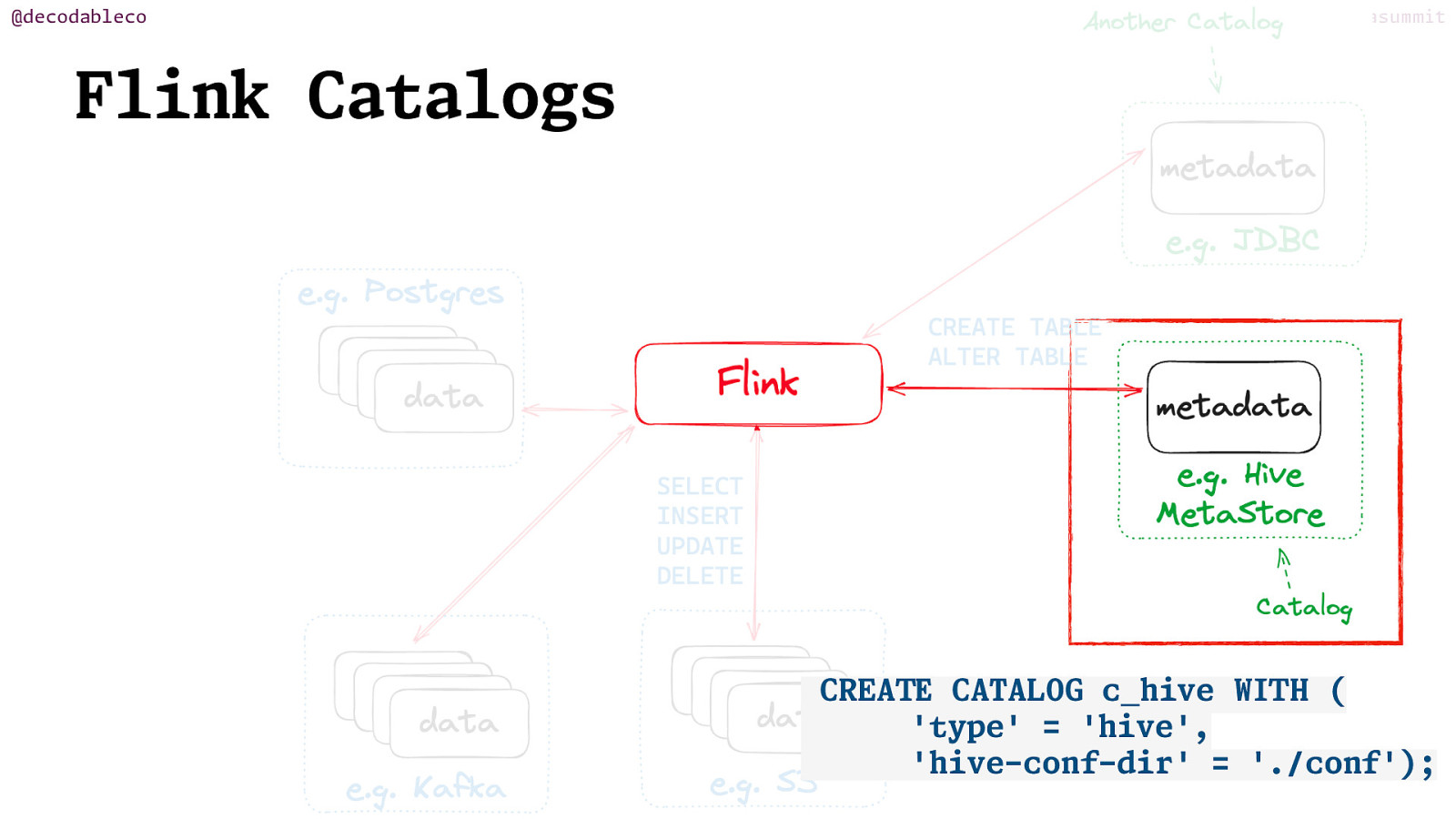

Flink Catalogs
CREATE CATALOG c_hive WITH (
  'type' = 'hive',
  'hive-conf-dir' = './conf');

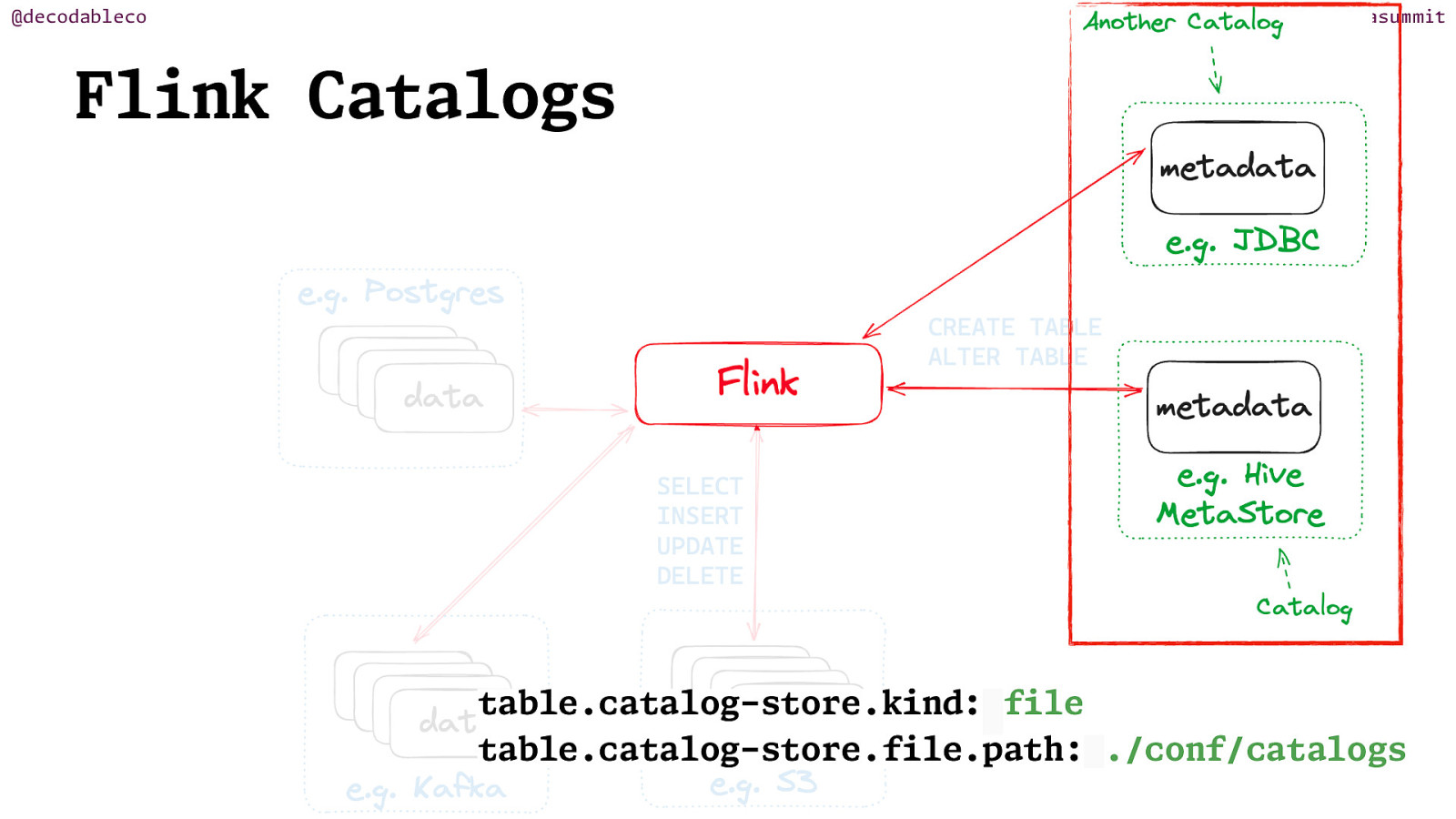

Flink Catalogs
table.catalog-store.kind: file
table.catalog-store.file.path: ./conf/catalogs
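
These two settings belong in Flink's configuration file. A small sketch of applying them, assuming a Flink 1.18-style conf/flink-conf.yaml under a Flink home directory that you pass in. The function name is made up, and creating the directory up front is a precaution on my part rather than something the slide calls for.

```shell
# Add the file-based catalog store settings to a Flink install ($1 = Flink home).
# Safe to run more than once: the settings are appended only if missing.
enable_file_catalog_store() {
  flink_home="$1"
  conf_file="$flink_home/conf/flink-conf.yaml"   # assumption: 1.18-style file name

  # The relative path in the setting matches this directory when Flink
  # is launched from its home directory.
  mkdir -p "$flink_home/conf/catalogs"

  if ! grep -q '^table\.catalog-store\.kind' "$conf_file" 2>/dev/null; then
    printf '%s\n' \
      'table.catalog-store.kind: file' \
      'table.catalog-store.file.path: ./conf/catalogs' >> "$conf_file"
  fi
}

# Usage:
#   enable_file_catalog_store /opt/flink
```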

DEMO
https://github.com/decodableco/examples/kafka-iceberg

In Conclusion…

Flink SQL is Fun!
But there’s a bit of a learning curve
• Run ad-hoc queries with the SQL Client
• Understand JAR dependencies for connectors, catalogs, formats, etc
• Don’t be put off by the docs - there is SQL content there if you look hard enough

decodable.co/blog

EOF

@decodableco