Building stream processing applications with Apache Kafka @rmoff #BigDataLDN

A presentation at BigDataLDN in November 2019 in London, UK by Robin Moffatt


STREAM PROCESSING


PROCESSING a STREAM of EVENTS

STREAMS of EVENTS ARE EVERYWHERE

A Customer Experience @rmoff #BigDataLDN Building stream processing applications for Apache Kafka using KSQL

A Sale

A Sensor Reading

An Application Log Entry

Databases

Immutable event log
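The immutable event log idea can be illustrated with a toy in-memory model. This is not how Kafka stores data; it is a minimal Java sketch, using hypothetical `EventLog` and `LogReader` classes, of the two properties that matter here: events are only ever appended, and each reader keeps its own offset, so several readers can consume the same events independently and at their own pace.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical toy model of an append-only event log (NOT Kafka's implementation):
// events are only ever appended, never updated in place.
class EventLog {
    private final List<String> events = new ArrayList<>();

    // Append an event and return the offset it was written at.
    long append(String event) {
        events.add(event);
        return events.size() - 1;
    }

    // Read every event from the given offset onwards; the log itself is unchanged.
    List<String> readFrom(long offset) {
        if (offset < 0 || offset > events.size()) {
            throw new IllegalArgumentException("offset out of range: " + offset);
        }
        return Collections.unmodifiableList(
                new ArrayList<>(events.subList((int) offset, events.size())));
    }
}

// Hypothetical reader that remembers its own position, like a consumer tracking offsets.
class LogReader {
    private final EventLog log;
    private long position = 0;

    LogReader(EventLog log) {
        this.log = log;
    }

    // Return the events appended since this reader last polled, then advance.
    List<String> poll() {
        List<String> batch = log.readFrom(position);
        position += batch.size();
        return batch;
    }
}
```

A second reader that starts later still sees every event from offset 0, because reading never removes anything from the log.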

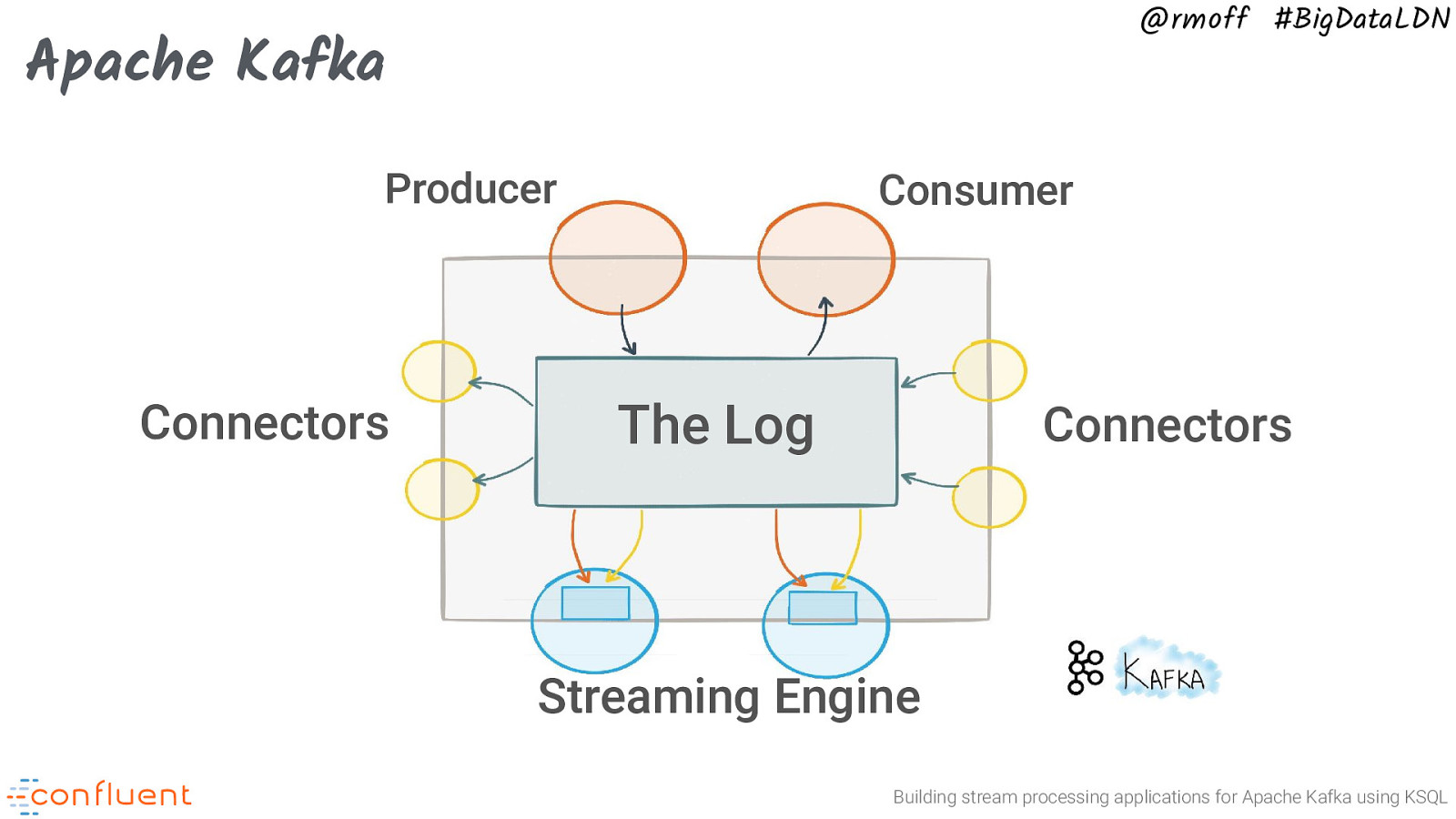

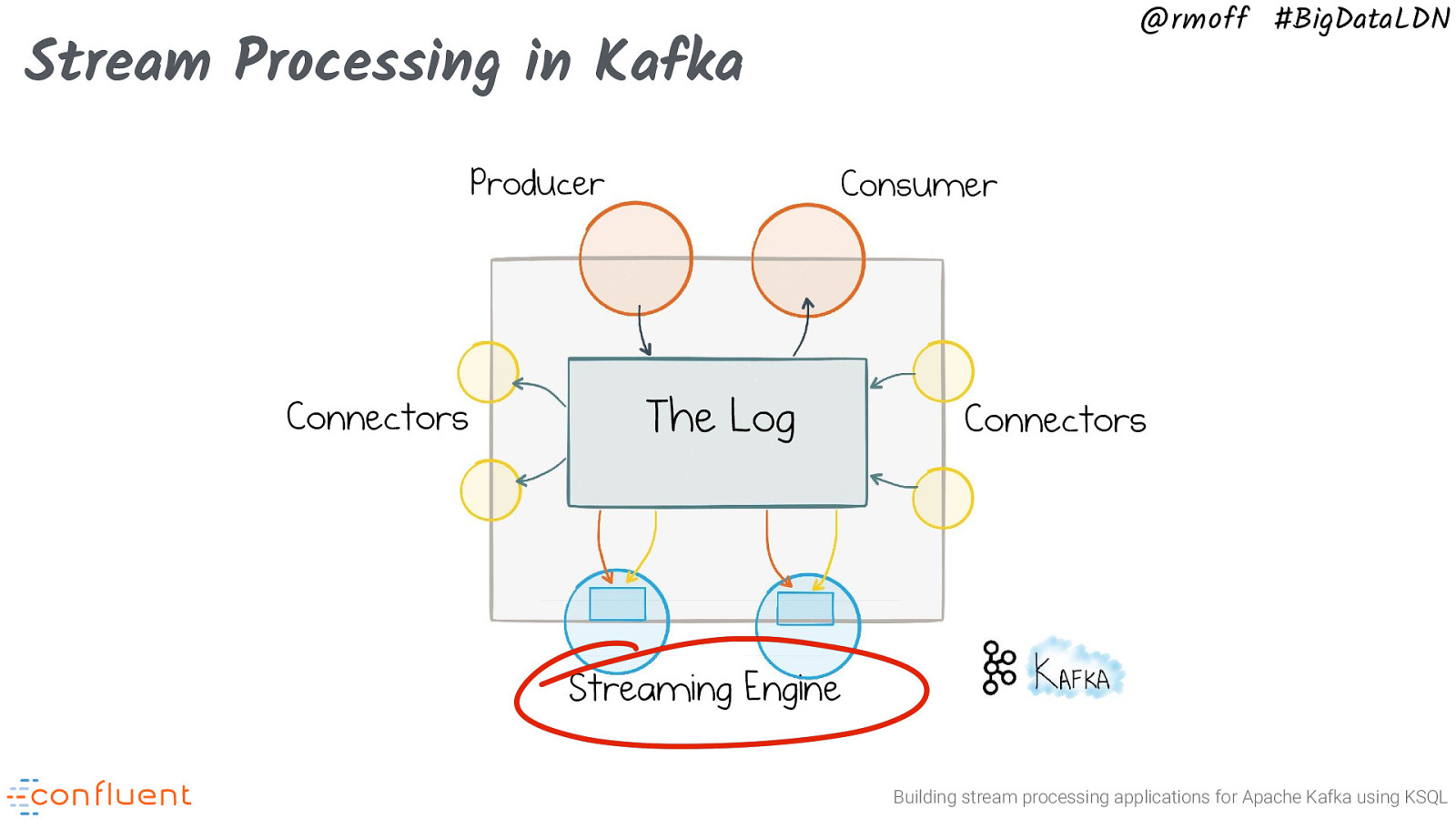

Apache Kafka (diagram: Producer, Consumer, Connectors, The Log, Streaming Engine)

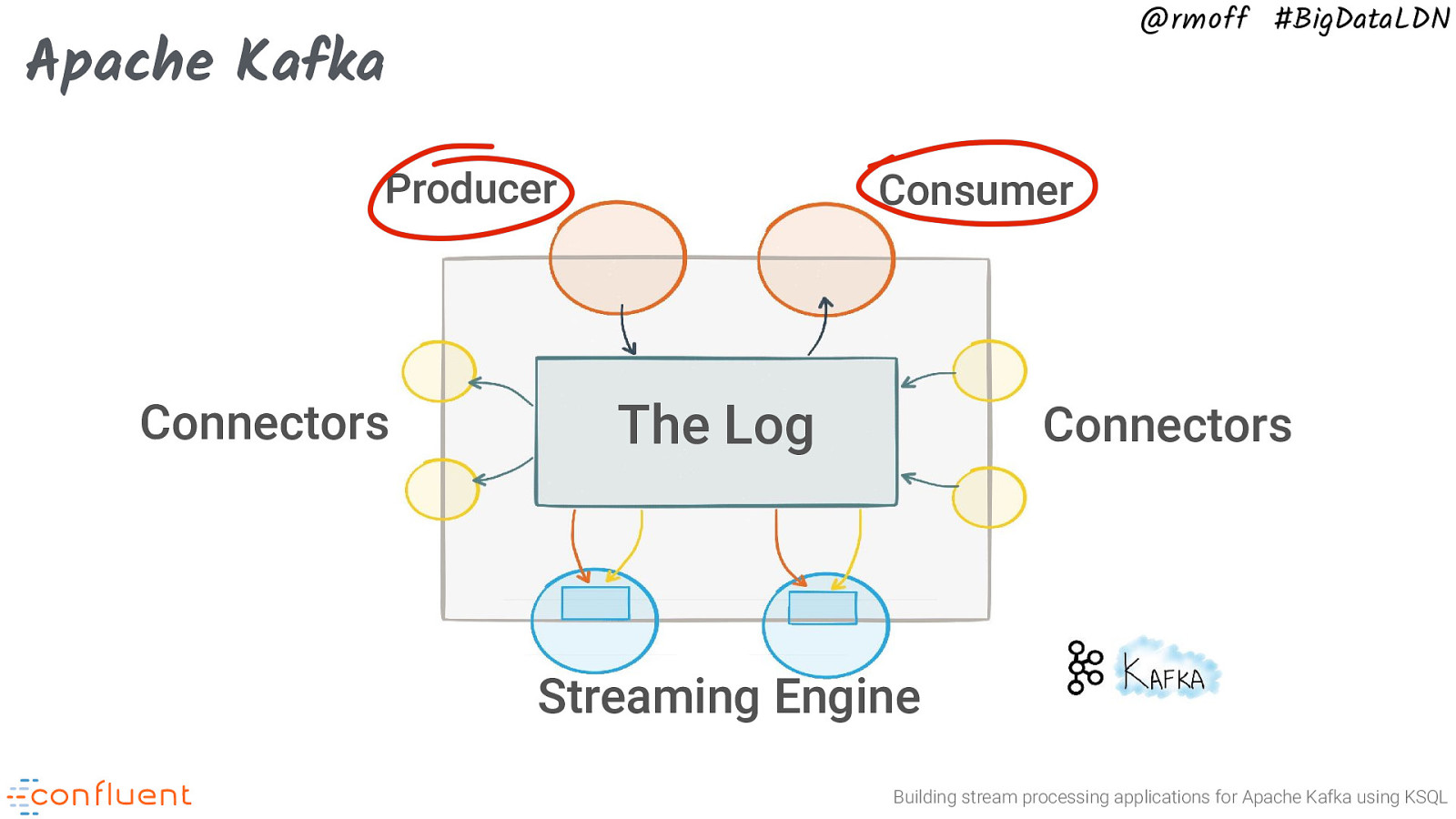


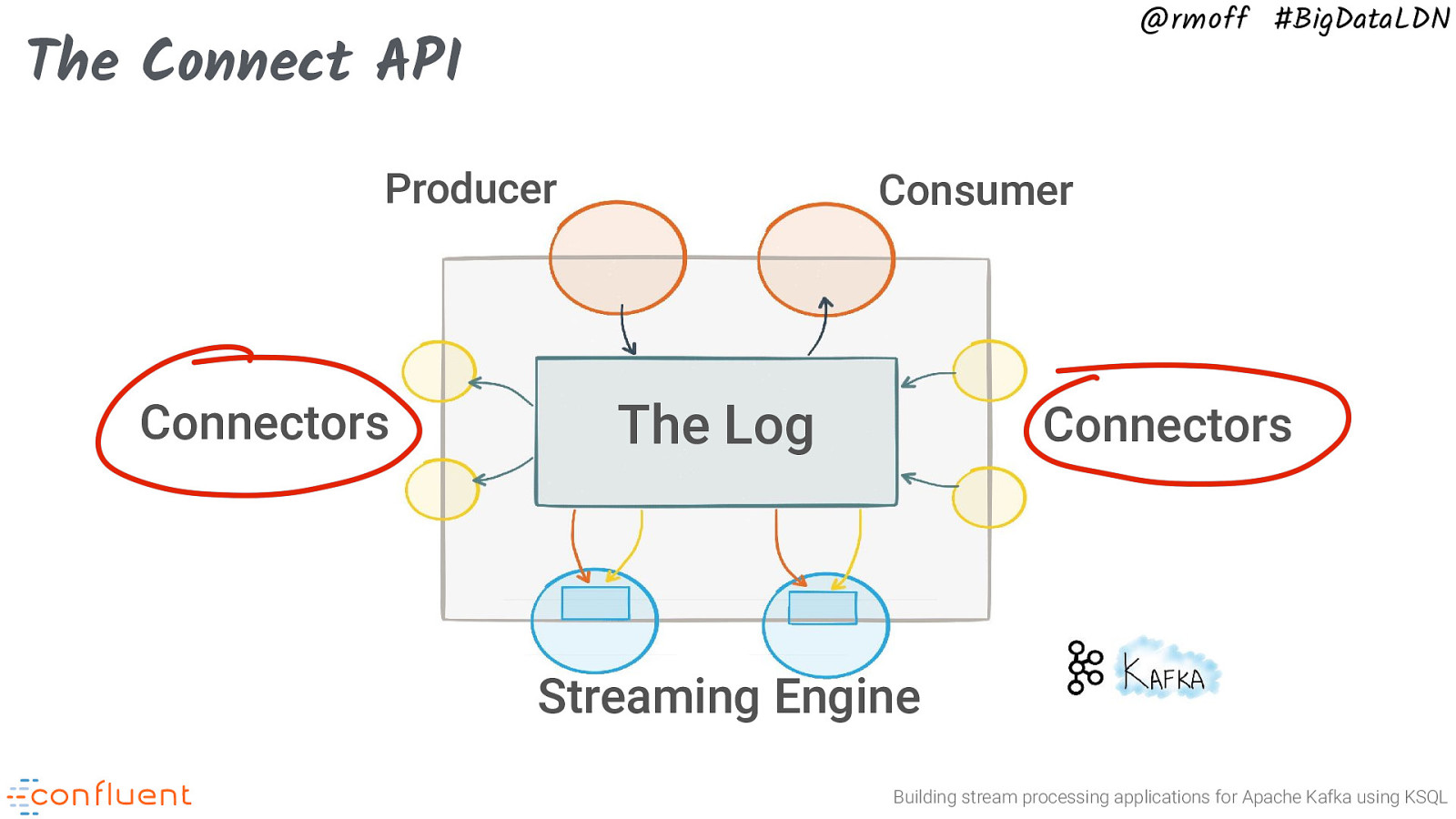

The Connect API

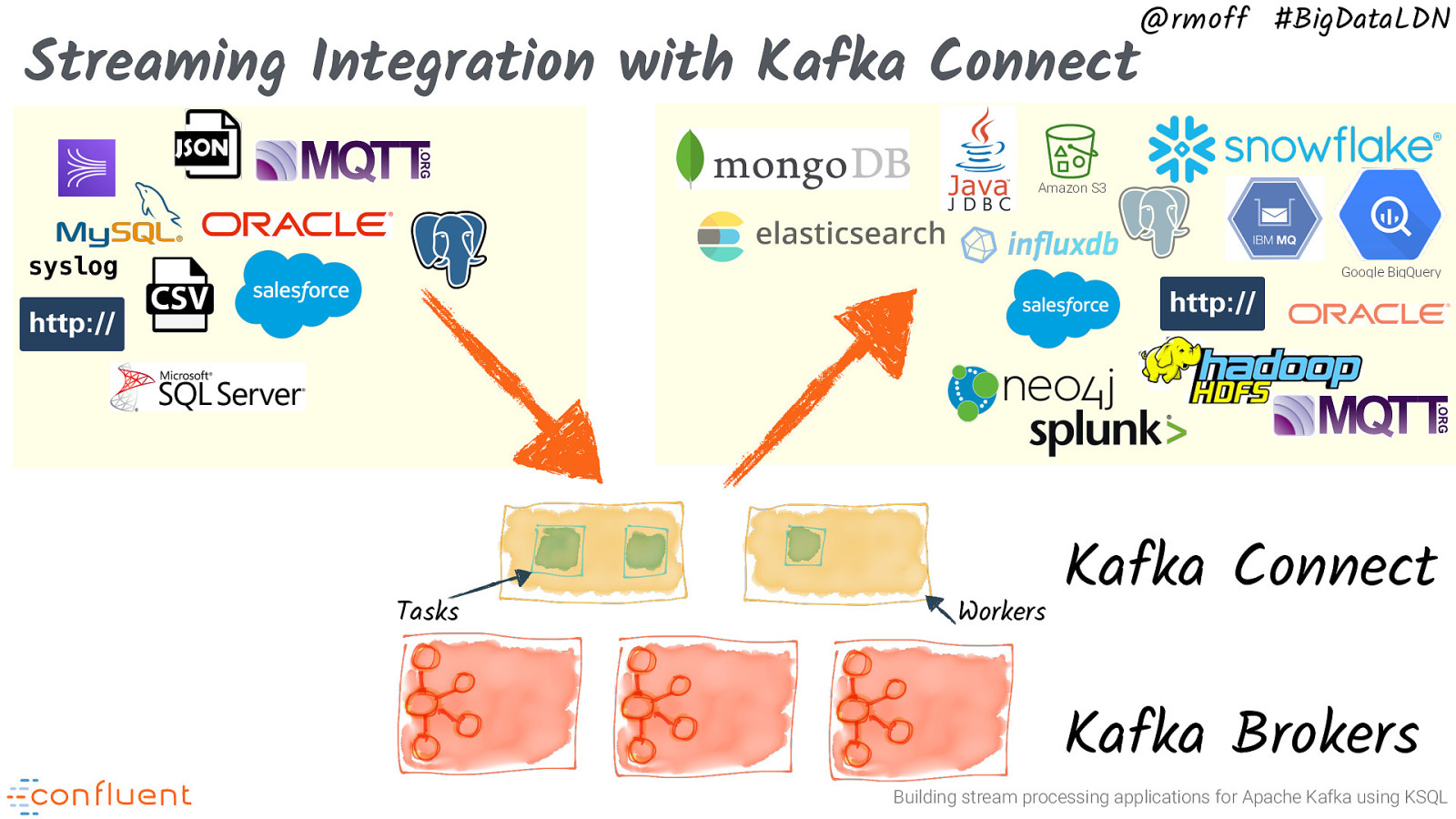

Streaming Integration with Kafka Connect (diagram: syslog, Amazon S3 and Google BigQuery connected to the Kafka brokers through Kafka Connect workers running tasks)

Stream Processing in Kafka

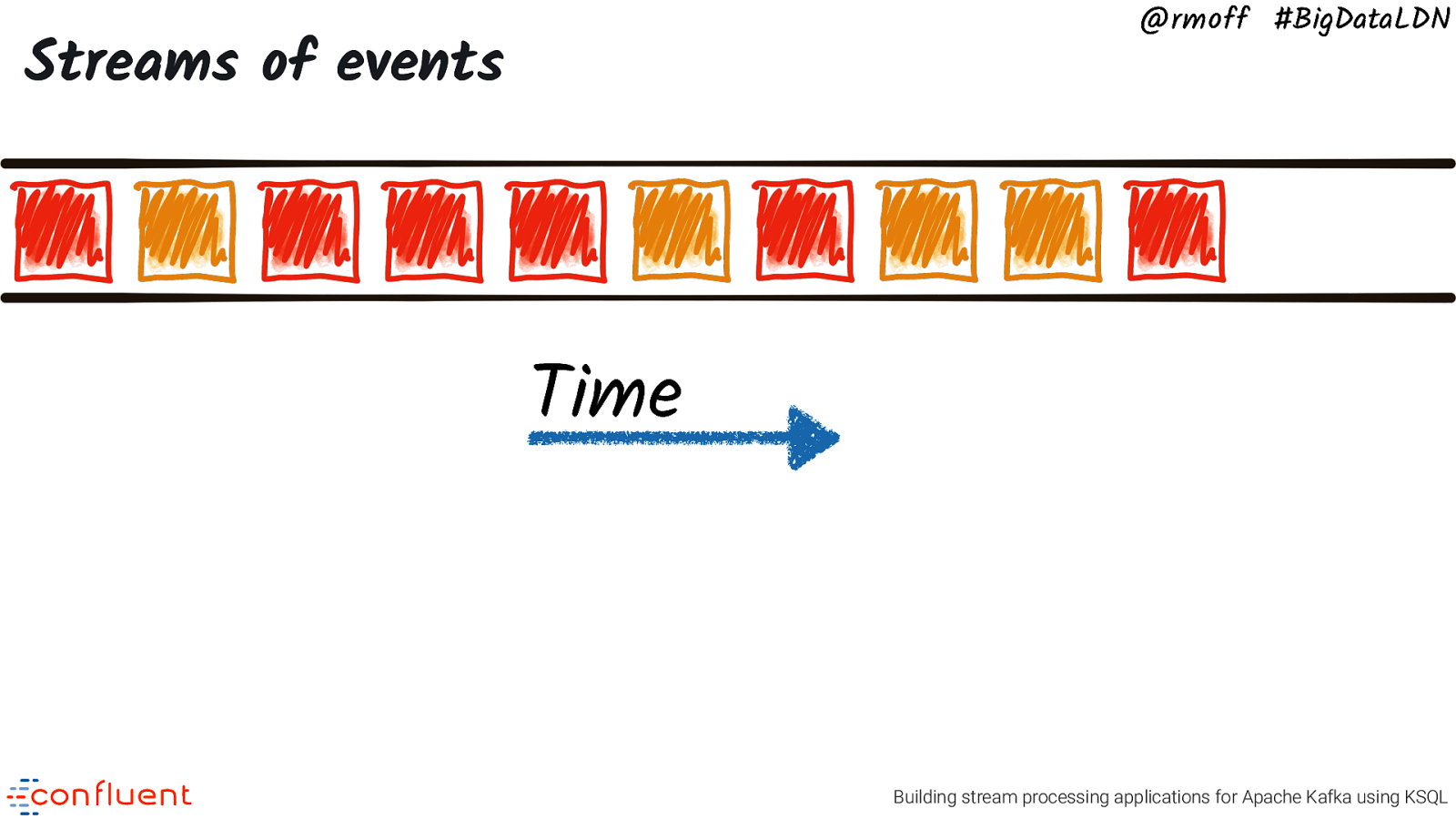

Streams of events (over time)

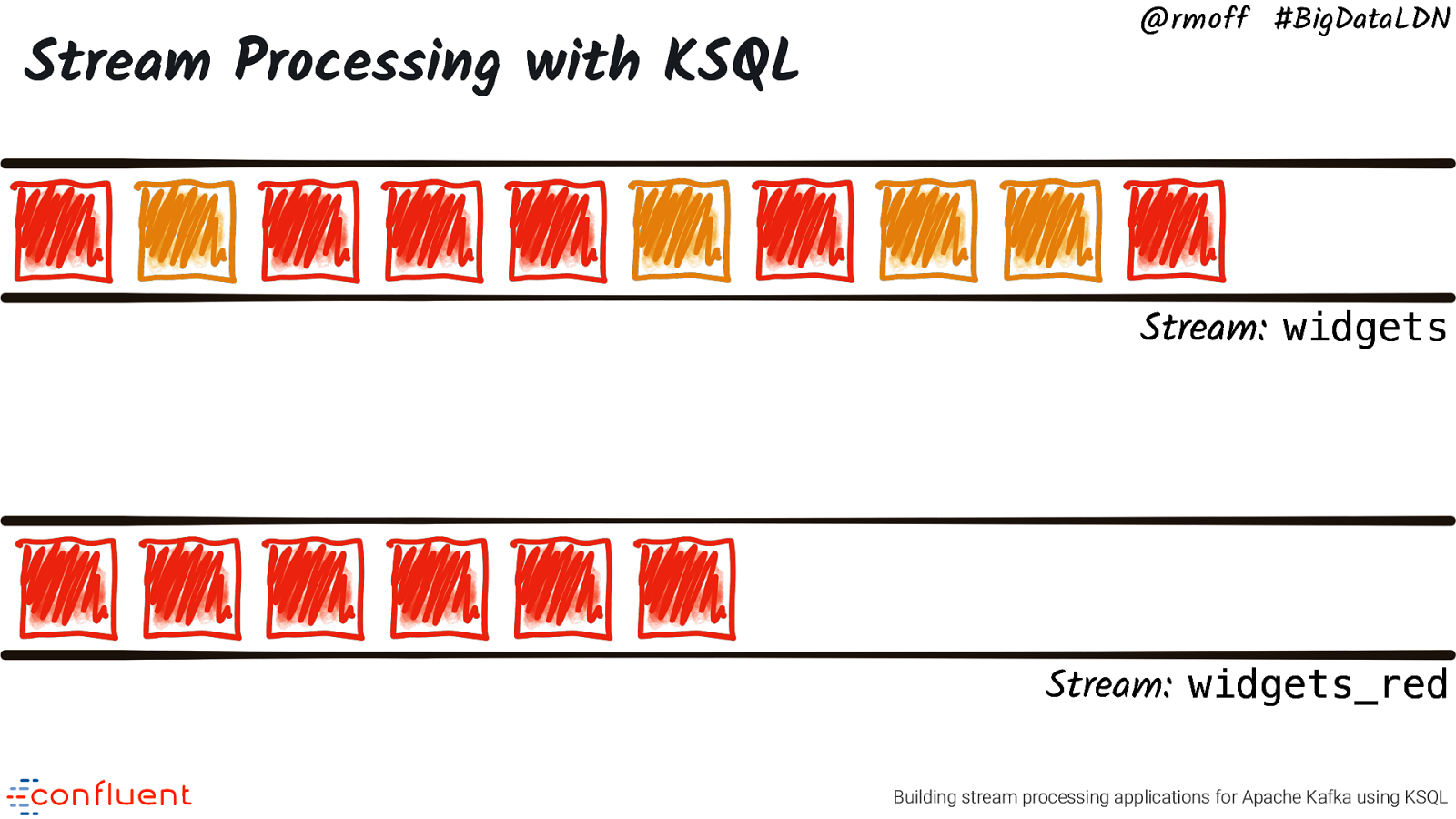

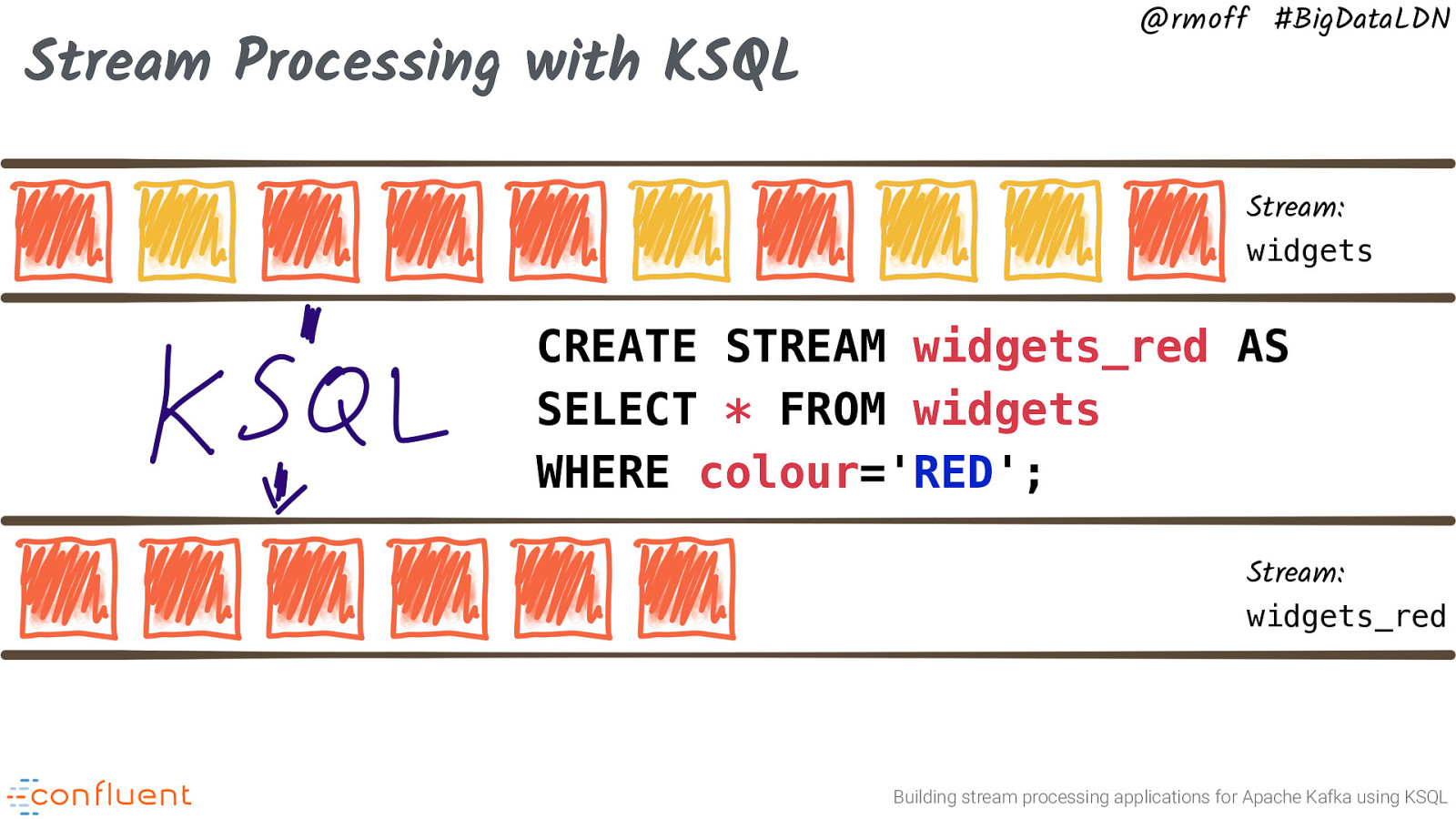

Stream Processing with KSQL (input stream: widgets, output stream: widgets_red)

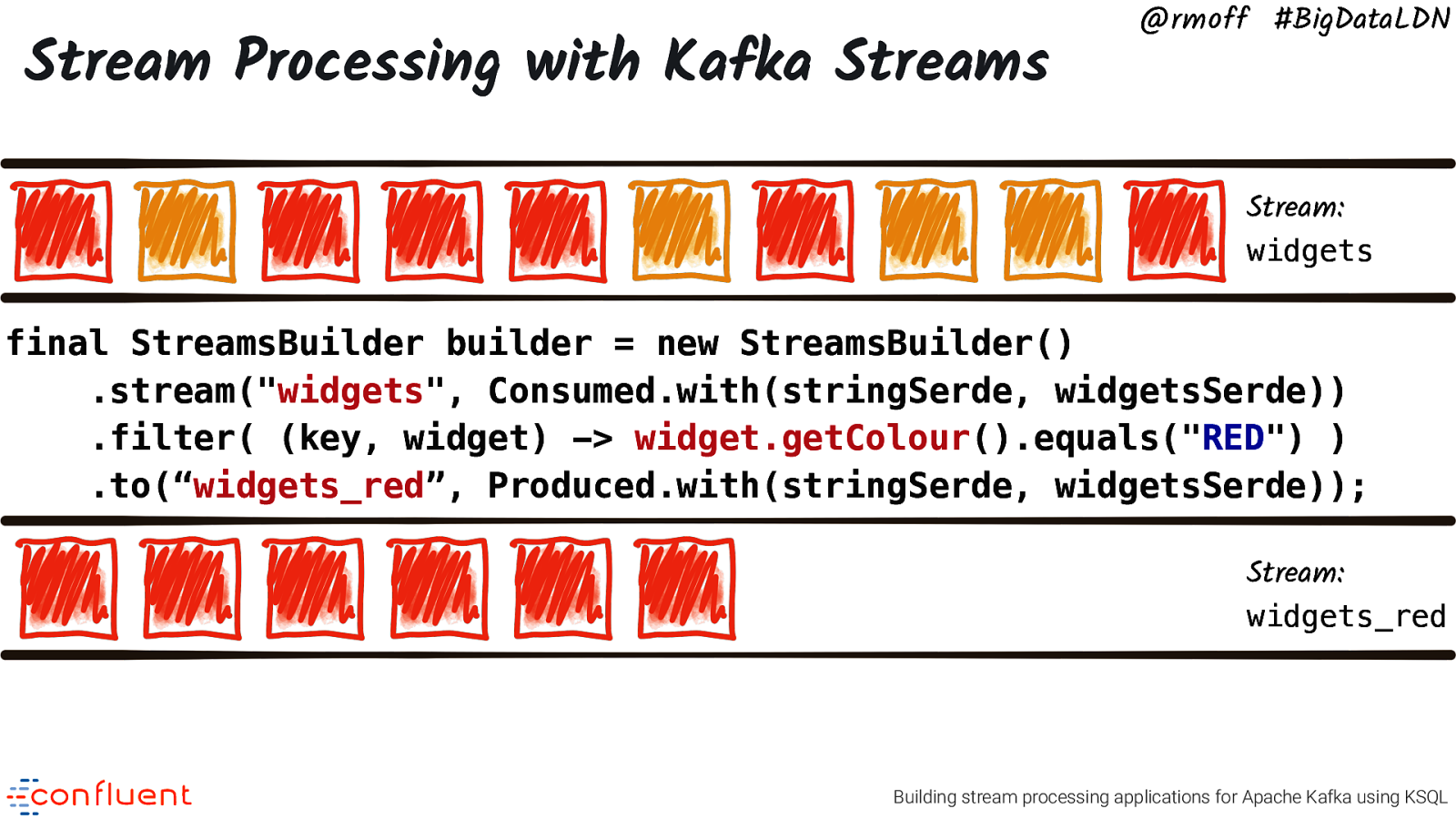

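Stream Processing with Kafka Streams (input stream: widgets, output stream: widgets_red)

```java
import org.apache.kafka.streams.StreamsBuilder;
import org.apache.kafka.streams.kstream.Consumed;
import org.apache.kafka.streams.kstream.Produced;

// stringSerde, widgetsSerde and the widget type are assumed to be defined elsewhere
final StreamsBuilder builder = new StreamsBuilder();
builder.stream("widgets", Consumed.with(stringSerde, widgetsSerde))
       .filter((key, widget) -> widget.getColour().equals("RED"))  // keep only red widgets
       .to("widgets_red", Produced.with(stringSerde, widgetsSerde));
```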

Stream Processing with KSQL (input stream: widgets, output stream: widgets_red)

```sql
CREATE STREAM widgets_red AS
  SELECT * FROM widgets
  WHERE colour='RED';
```
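Both versions of the widgets example express the same stateless filter: each event is judged on its own, with no memory of earlier events. As a minimal sketch, assuming each event can be modelled as a `Map` of field names to values (the `WidgetFilter` class and `redOnly` method are hypothetical, and no Kafka is involved), the per-event logic amounts to:

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical sketch of the filter that both the KSQL statement and the
// Kafka Streams topology express; events are modelled as plain maps.
class WidgetFilter {
    static List<Map<String, Object>> redOnly(List<Map<String, Object>> widgets) {
        return widgets.stream()
                .filter(w -> "RED".equals(w.get("colour")))  // WHERE colour='RED'
                .collect(Collectors.toList());               // SELECT *: every field is kept
    }
}
```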

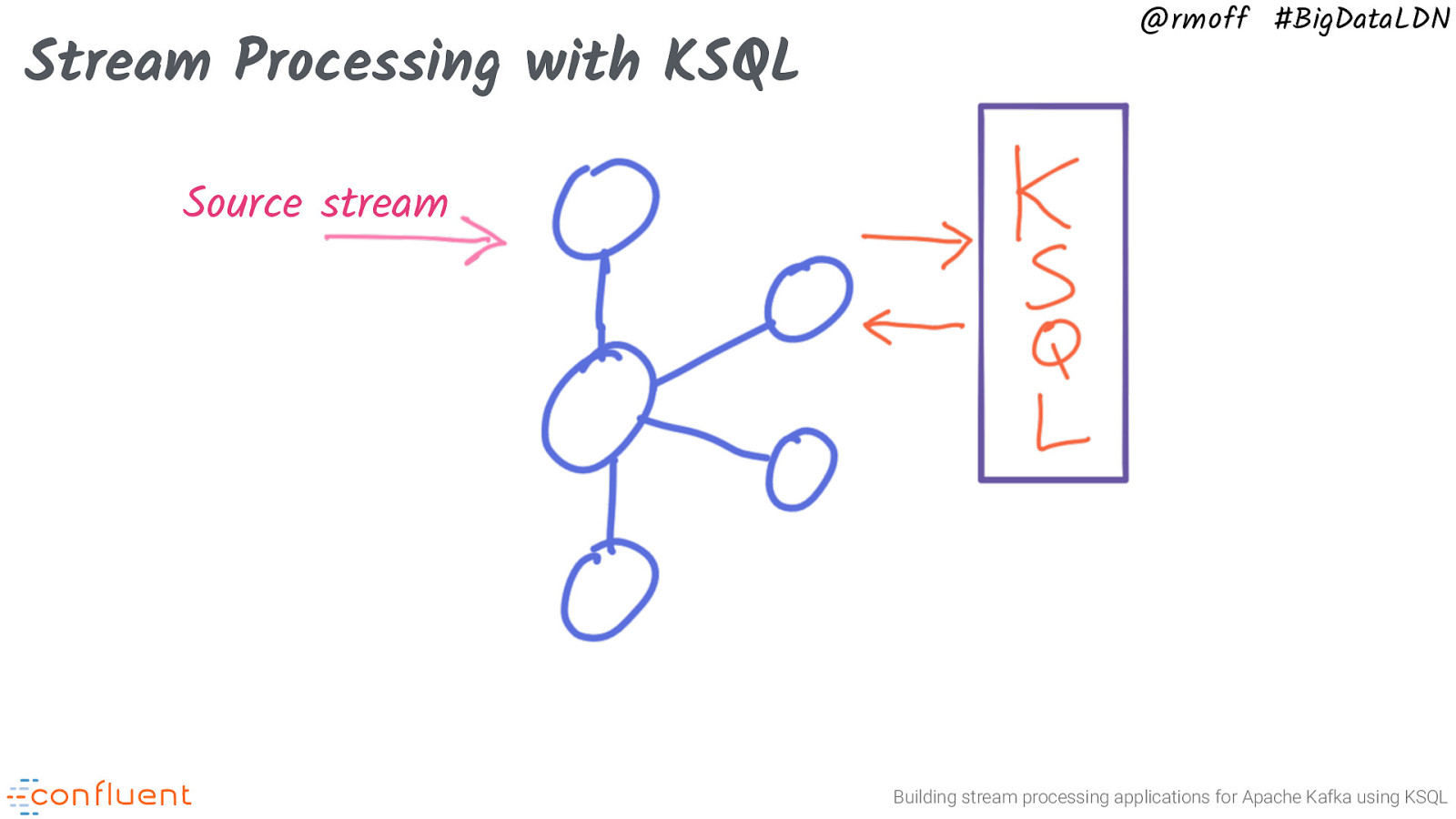

Stream Processing with KSQL: source stream

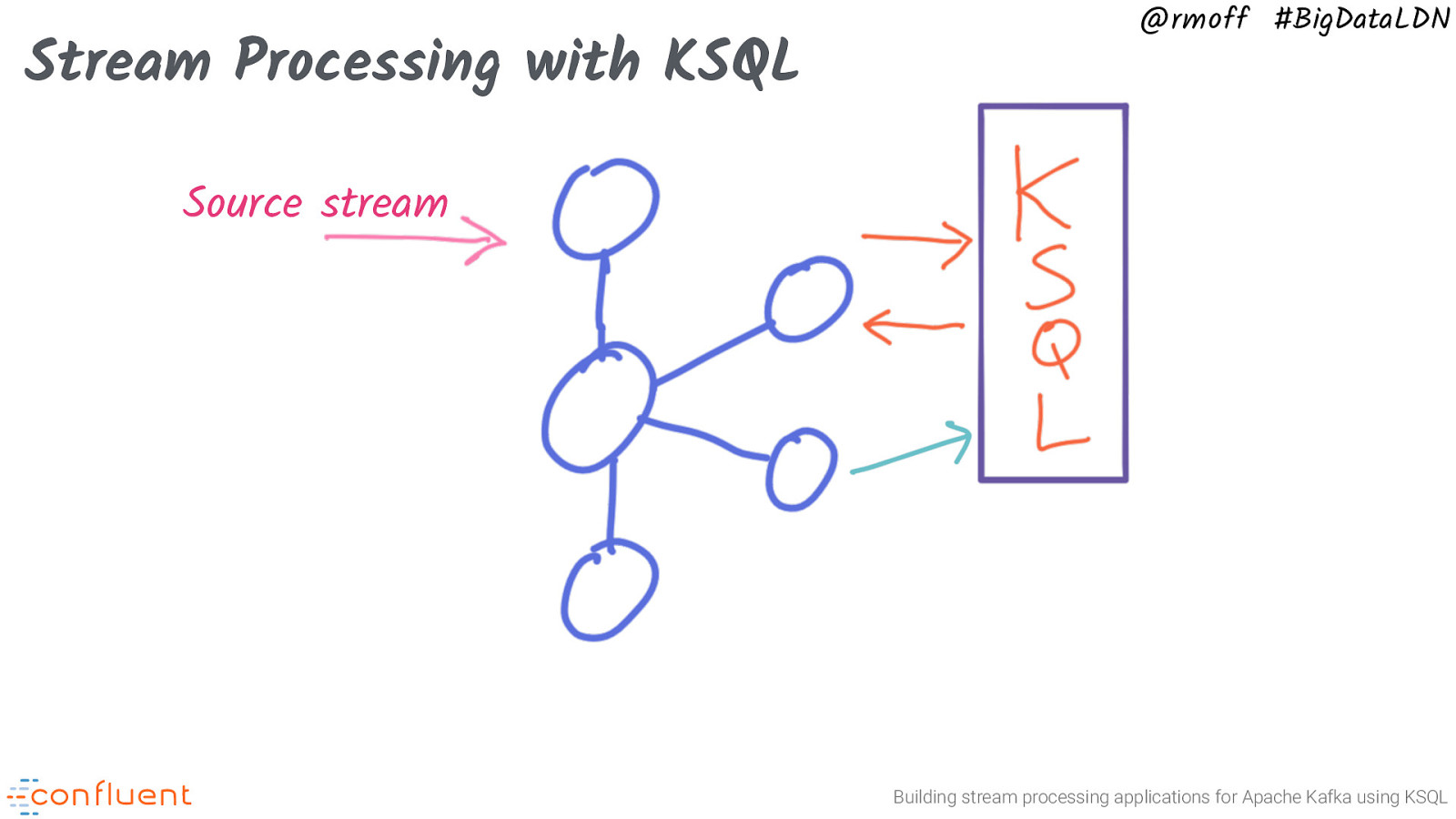


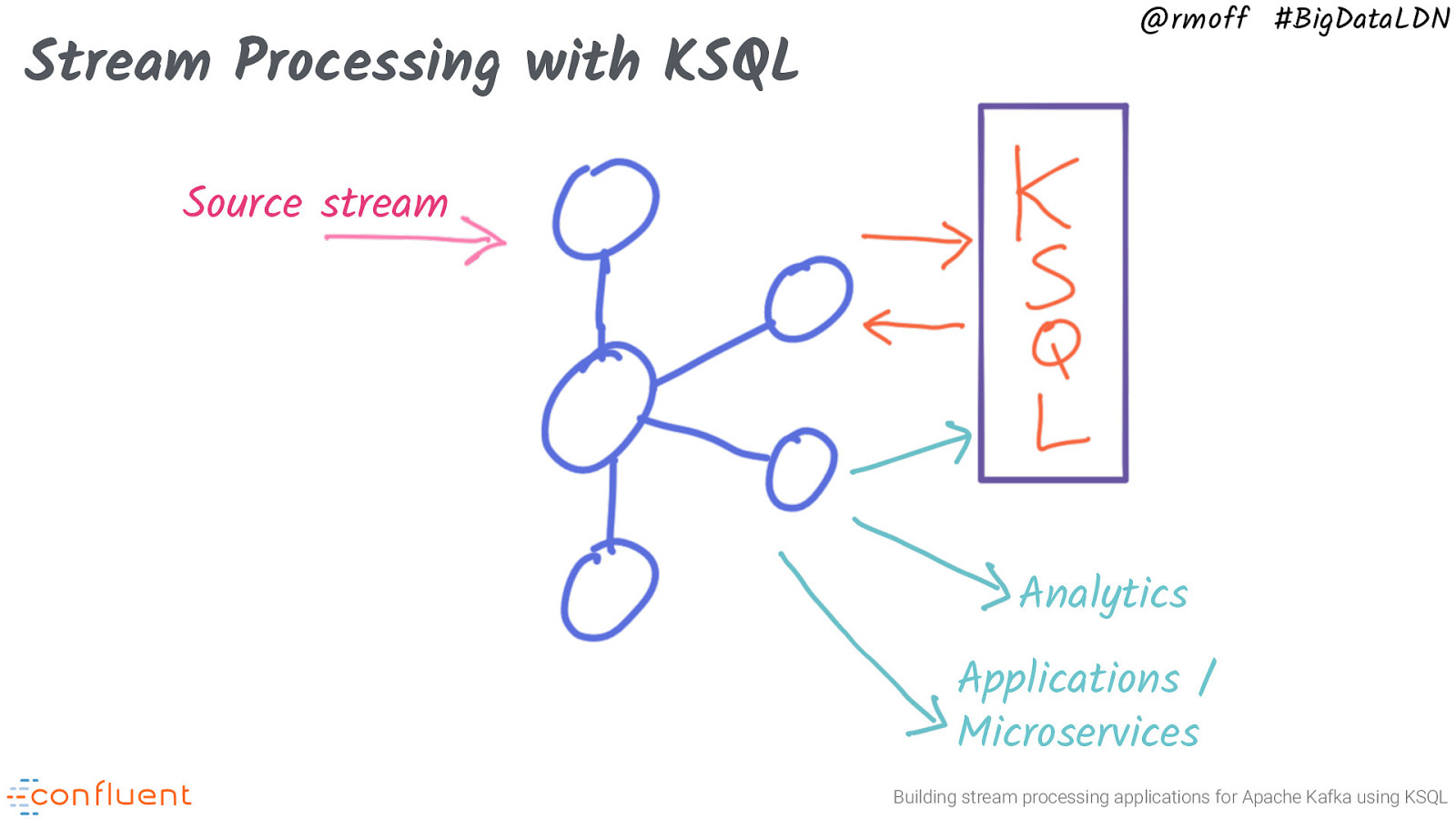

Stream Processing with KSQL: from a source stream to analytics and to applications / microservices

KSQL in action 🚀 https://rmoff.dev/kssf19-ksql-code

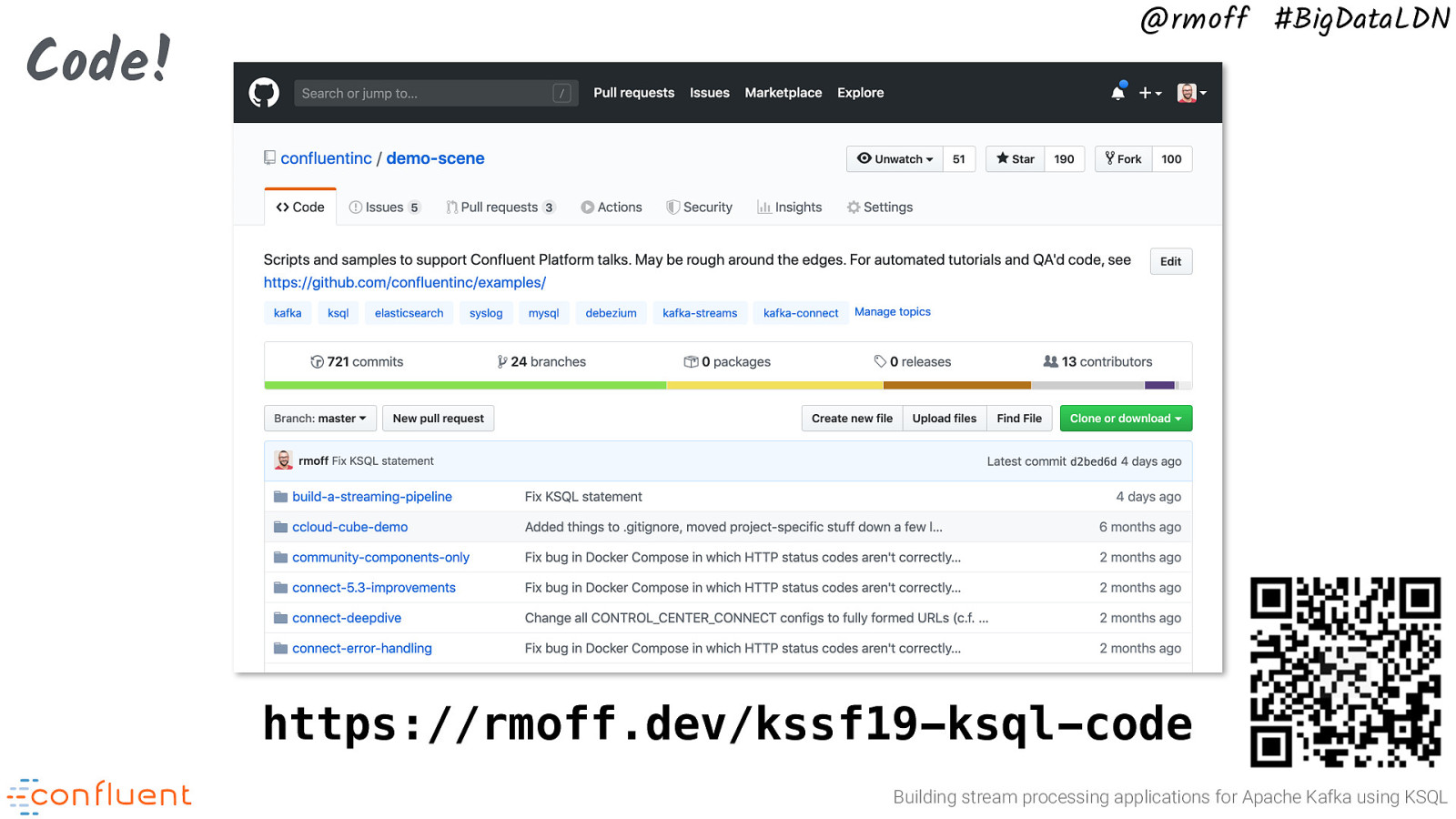

Code! https://rmoff.dev/kssf19-ksql-code

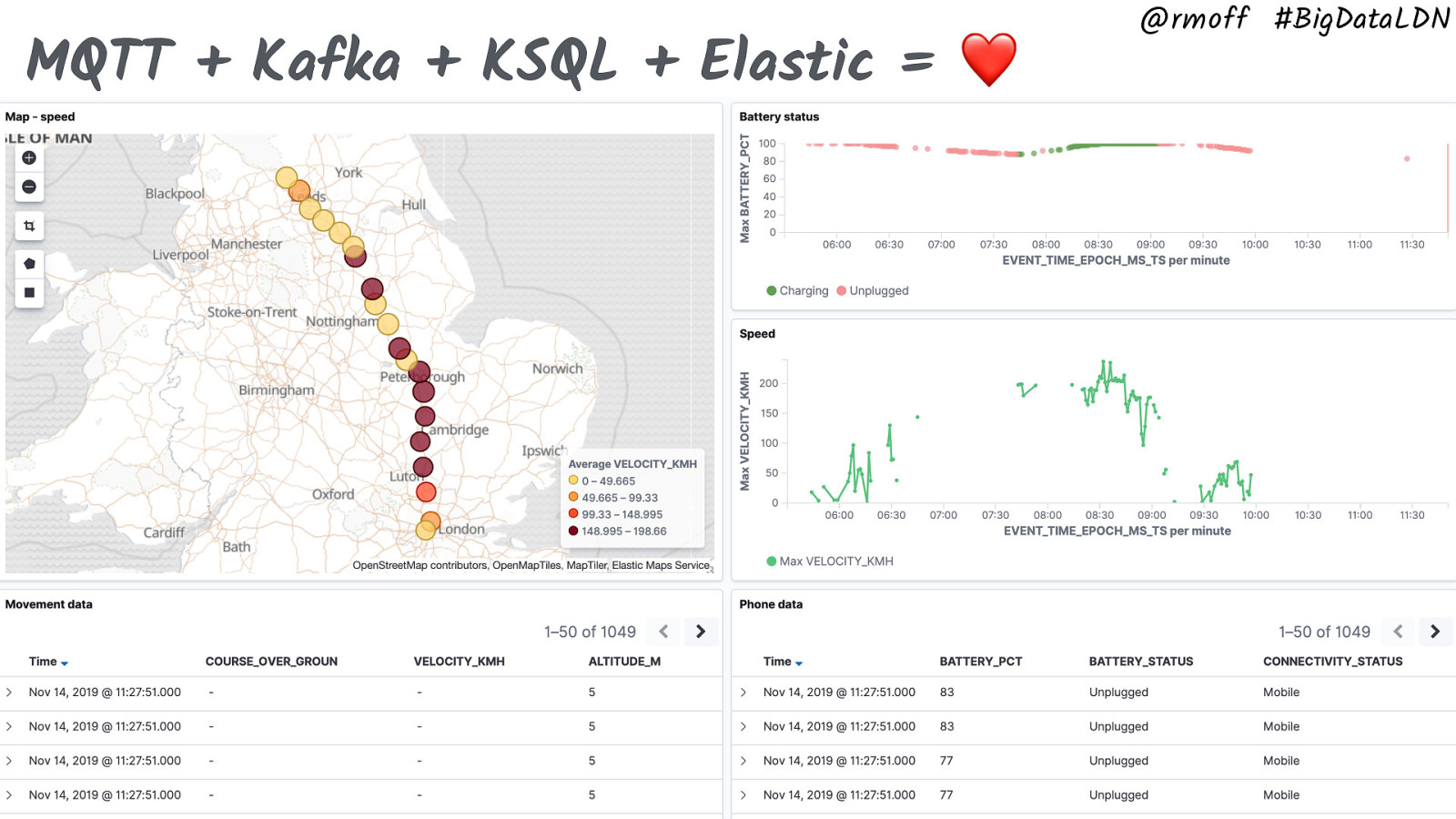

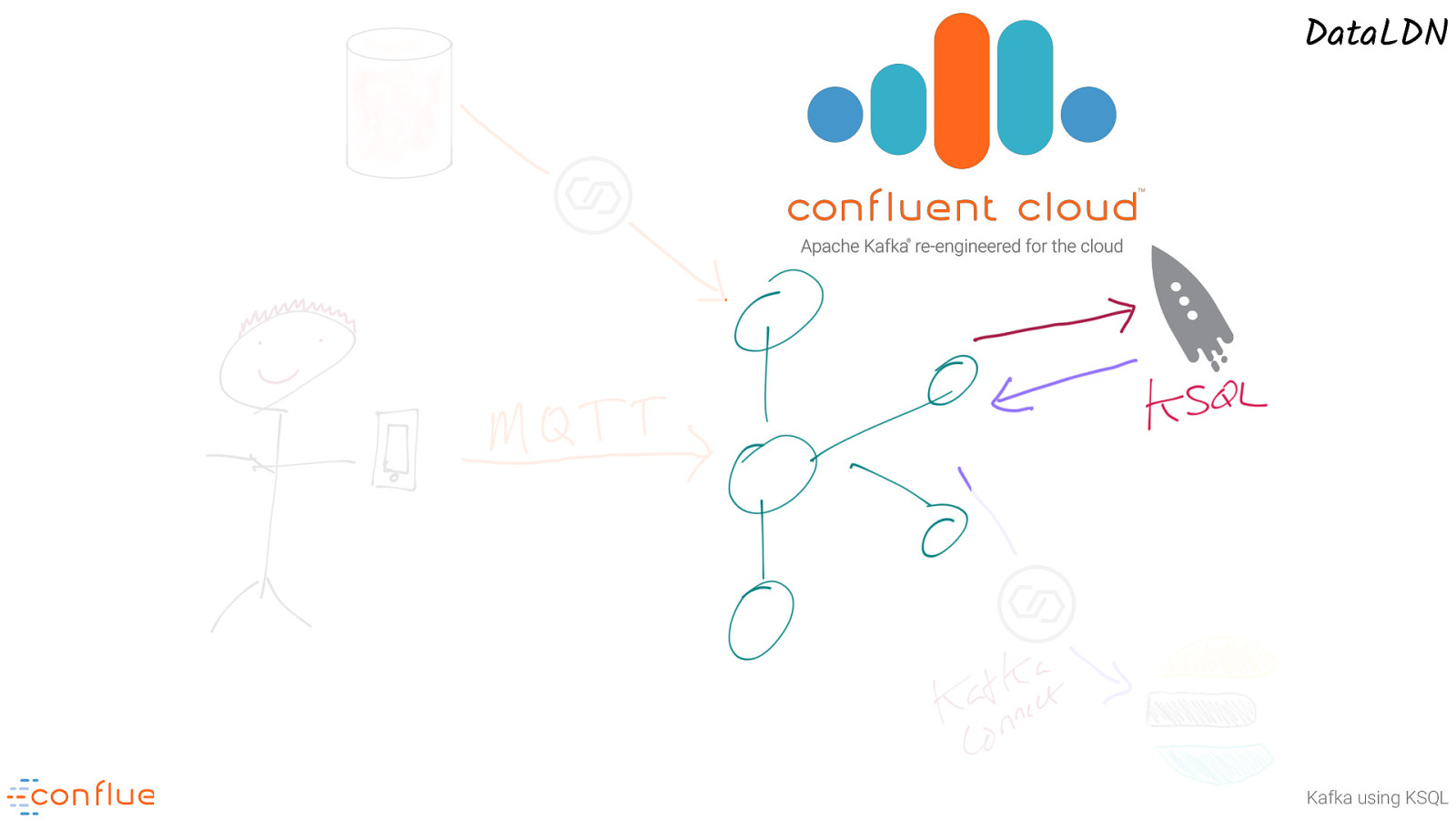

MQTT + Kafka + KSQL + Elastic = ❤

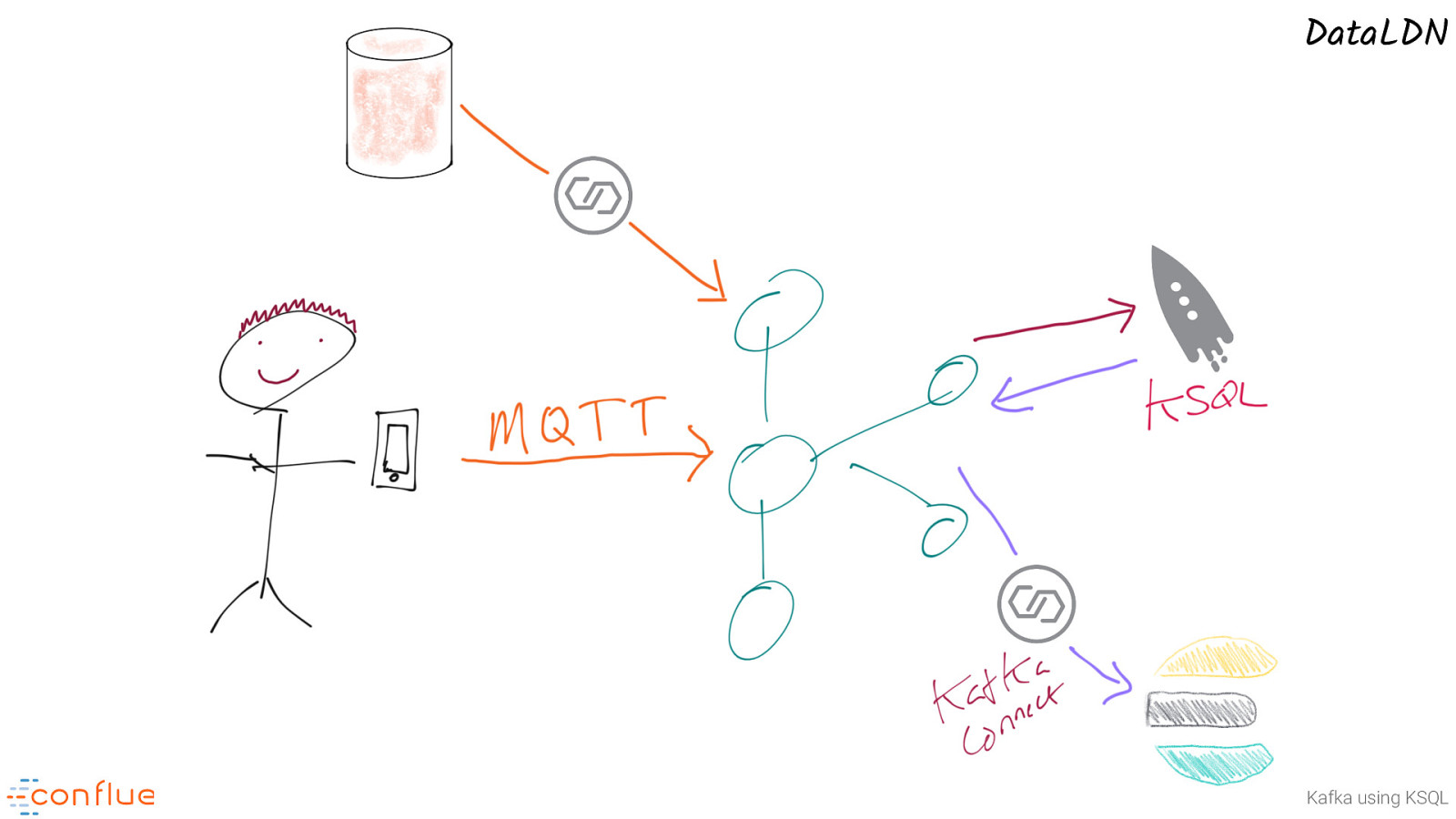


http://confluent.cloud/signup

Interacting with KSQL 📬

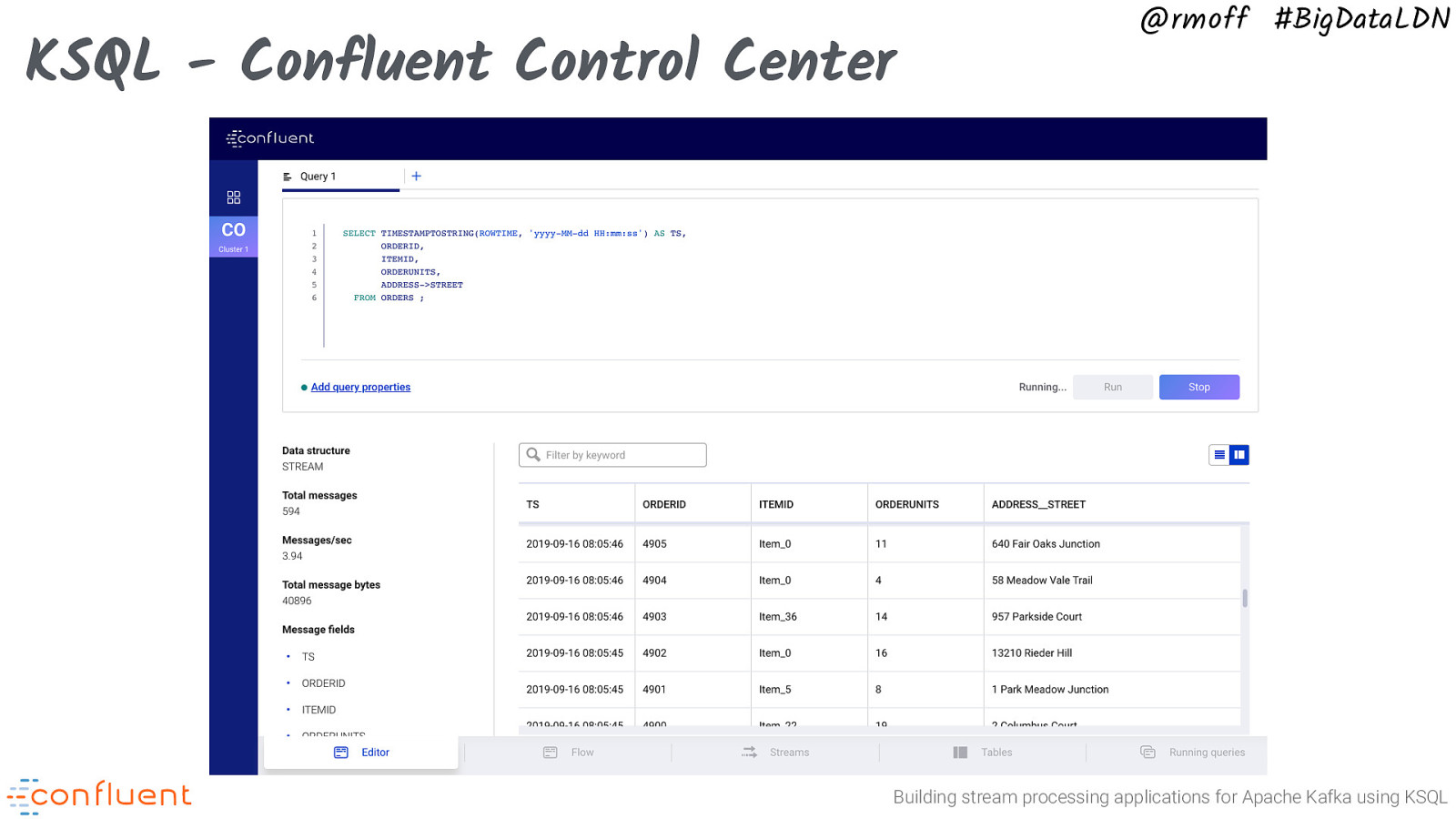

KSQL - Confluent Control Center

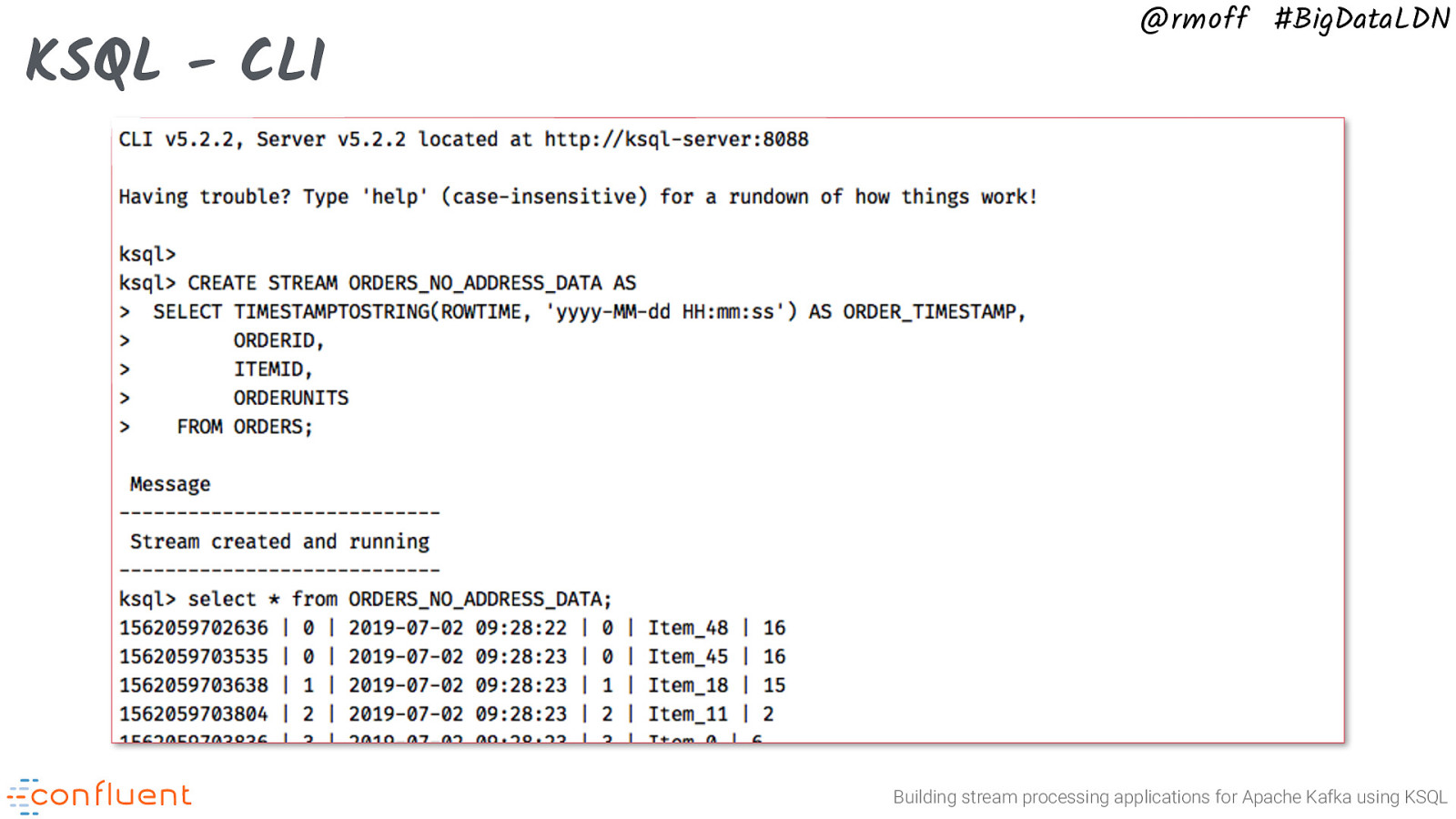

KSQL - CLI

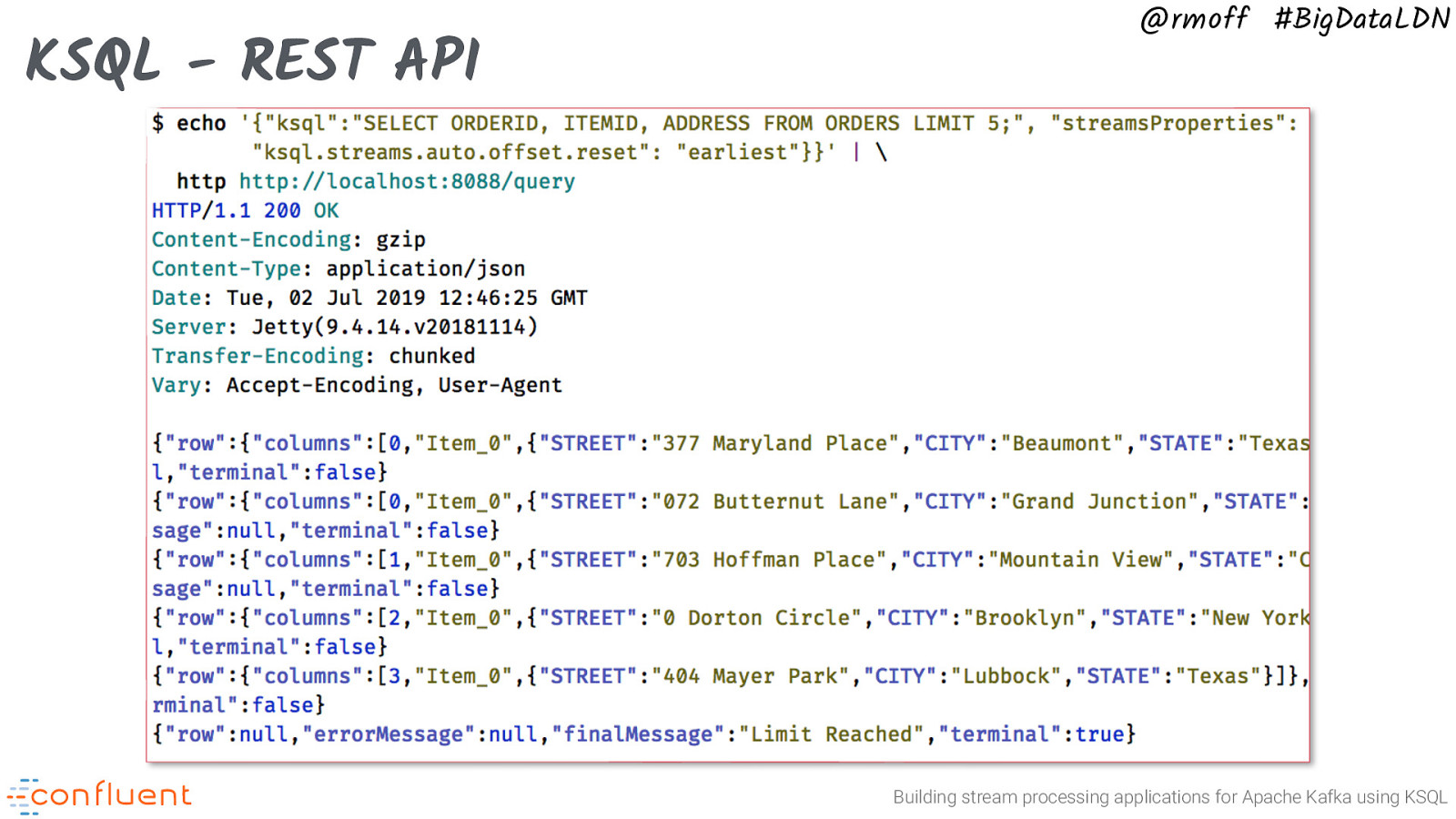

KSQL - REST API
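A minimal sketch of what a REST client does, using the JDK's `java.net.http` client (Java 11+). It assumes a KSQL server listening at `http://localhost:8088` and POSTs the statement as JSON to the `/ksql` endpoint with the `application/vnd.ksql.v1+json` media type; check the endpoint, port and payload against the KSQL REST API reference for your version. `KsqlRestRequest` and its methods are hypothetical helpers, and the request is only built here, not sent.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper that builds (but does not send) a KSQL REST API request.
// Assumptions: the /ksql endpoint accepts {"ksql": "...", "streamsProperties": {}}.
class KsqlRestRequest {
    static final String CONTENT_TYPE = "application/vnd.ksql.v1+json; charset=utf-8";

    // Minimal JSON string escaping (backslash, quote, newline only);
    // a real client should use a JSON library instead.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    static String body(String statement) {
        return "{\"ksql\": \"" + escape(statement) + "\", \"streamsProperties\": {}}";
    }

    static HttpRequest build(String serverUrl, String statement) {
        return HttpRequest.newBuilder(URI.create(serverUrl + "/ksql"))
                .header("Content-Type", CONTENT_TYPE)
                .header("Accept", CONTENT_TYPE)
                .POST(HttpRequest.BodyPublishers.ofString(body(statement)))
                .build();
    }
}
```

To actually send it, pass the request to `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())` against a running server.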

KSQL operations and deployment 💾

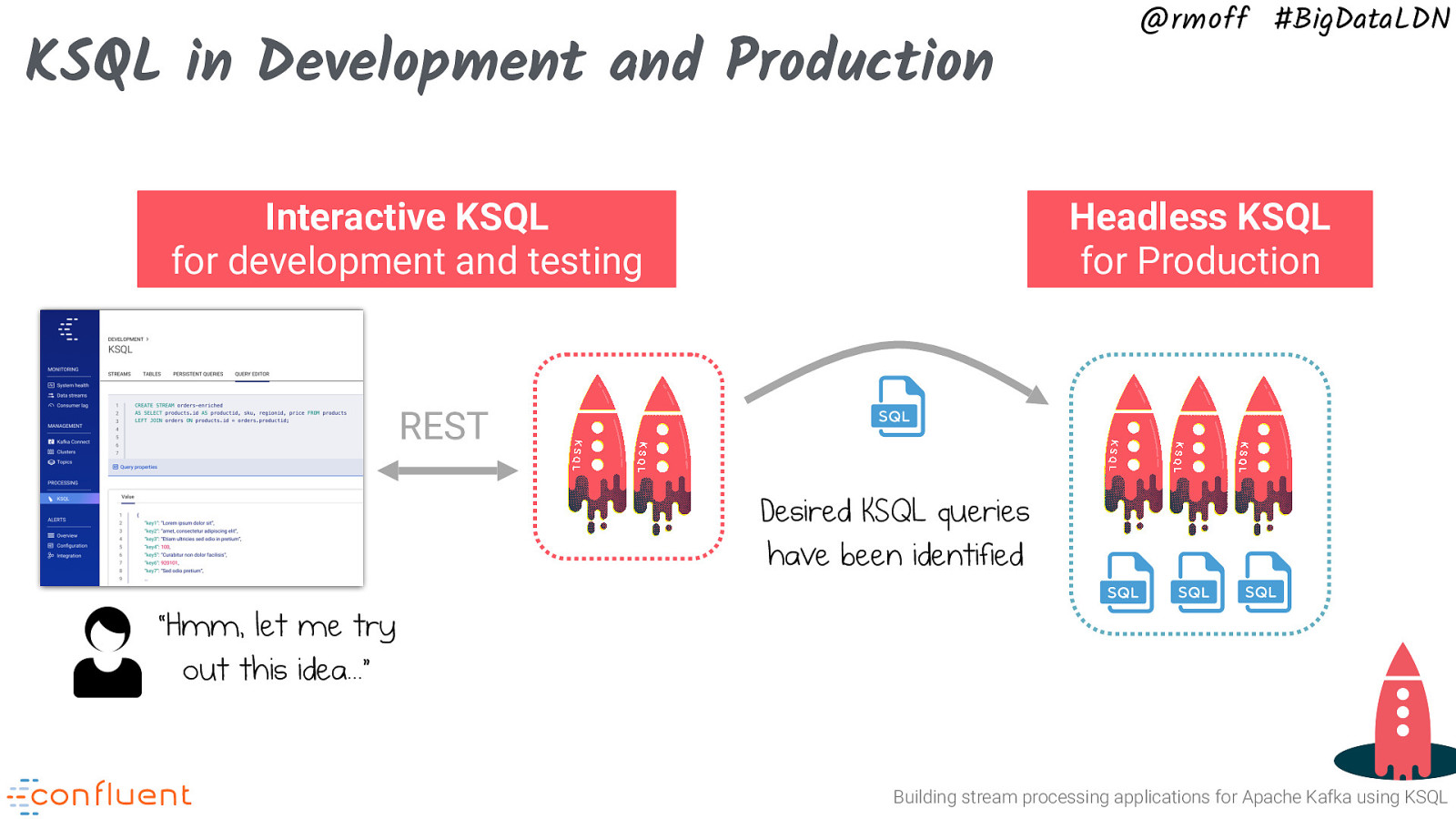

KSQL in Development and Production: interactive KSQL (over the REST API) for development and testing ("Hmm, let me try out this idea…"), and headless KSQL for production, once the desired KSQL queries have been identified.

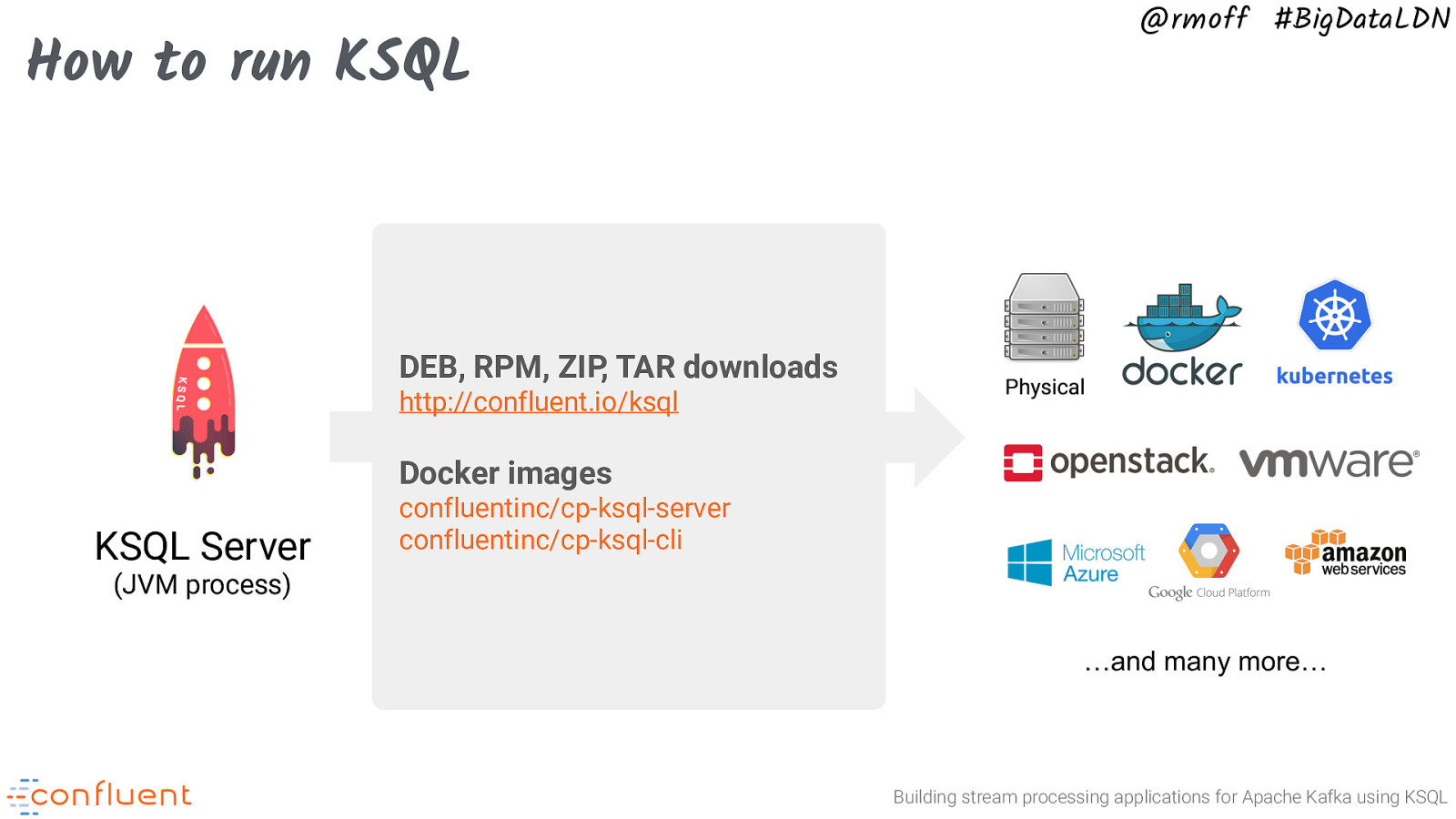

How to run KSQL: KSQL Server is a JVM process, available as DEB, RPM, ZIP and TAR downloads (http://confluent.io/ksql) and as Docker images (confluentinc/cp-ksql-server, confluentinc/cp-ksql-cli), …and many more…

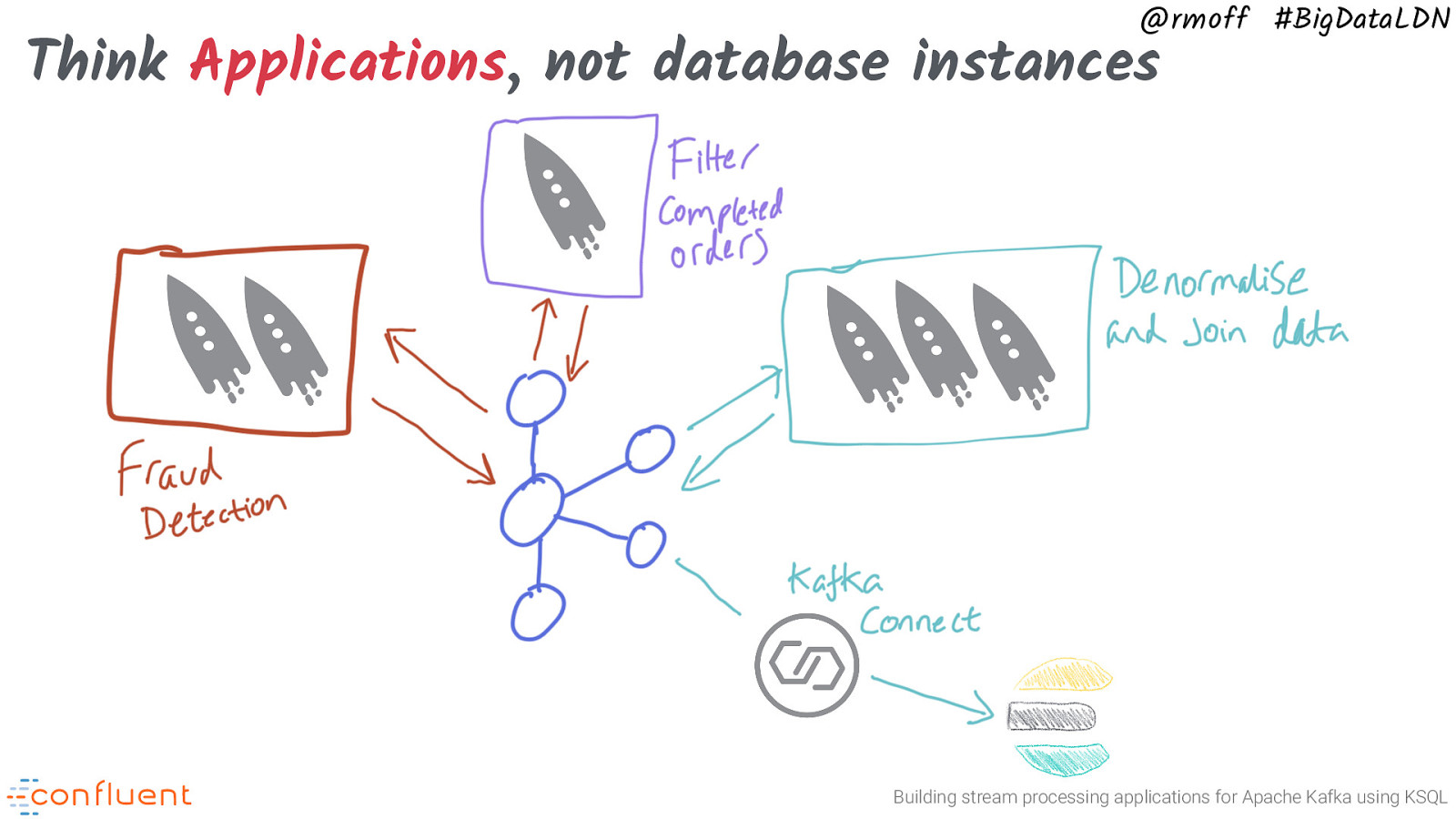

Think Applications, not database instances

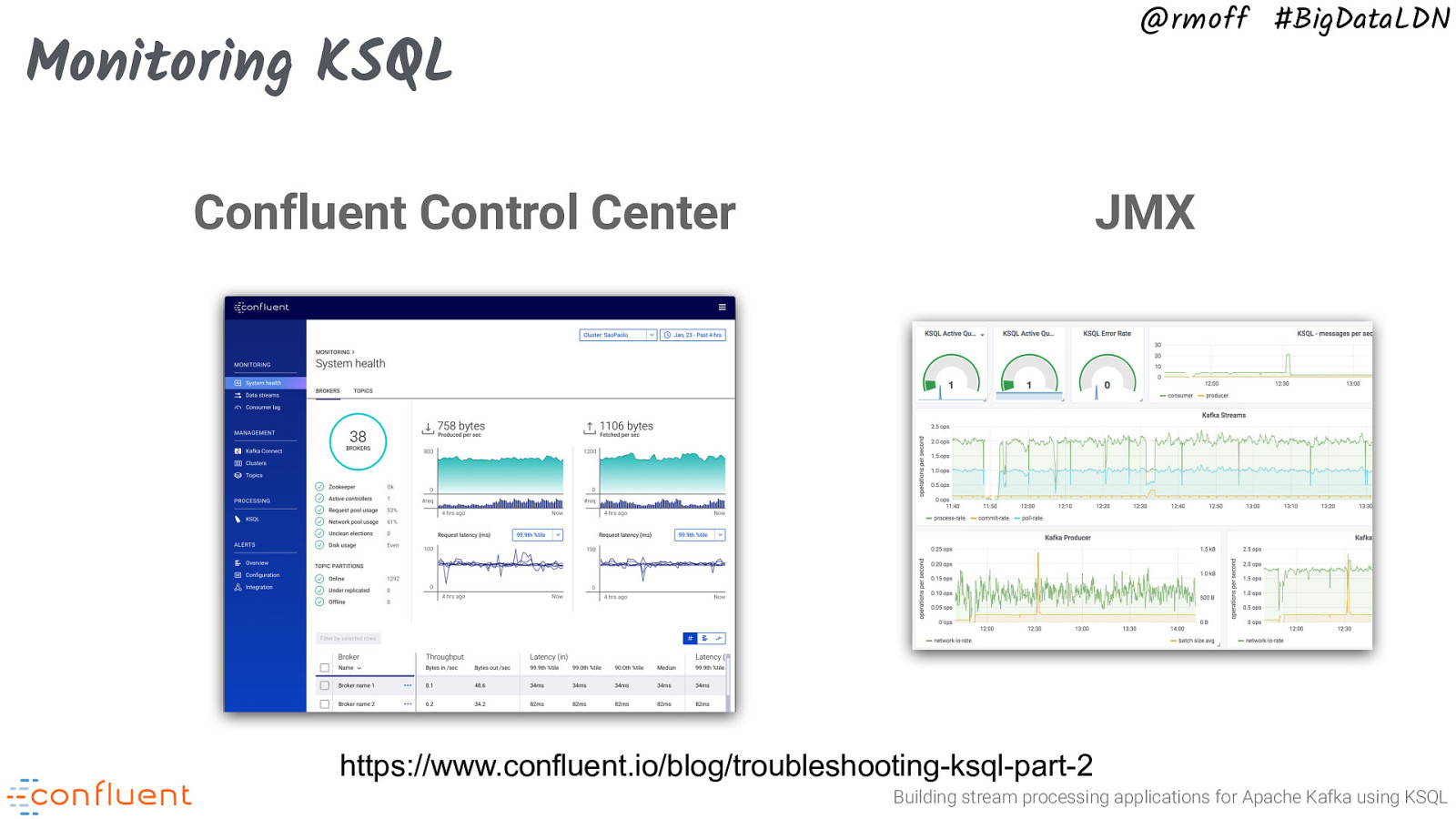

Monitoring KSQL: Confluent Control Center and JMX (see https://www.confluent.io/blog/troubleshooting-ksql-part-2)

http://cnfl.io/book-bundle

#EOF 💬 Join the Confluent Community Slack group at http://cnfl.io/slack

https://talks.rmoff.net

Related Talks

• The Changing Face of ETL: Event-Driven Architectures for Data Engineers
• Apache Kafka and KSQL in Action: Let’s Build a Streaming Data Pipeline!
• ATM Fraud detection with Kafka and KSQL
• No More Silos: Integrating Databases and Apache Kafka
• Embrace the Anarchy: Apache Kafka’s Role in Modern Data Architectures

Bonus content!

KSQL in action 🚀

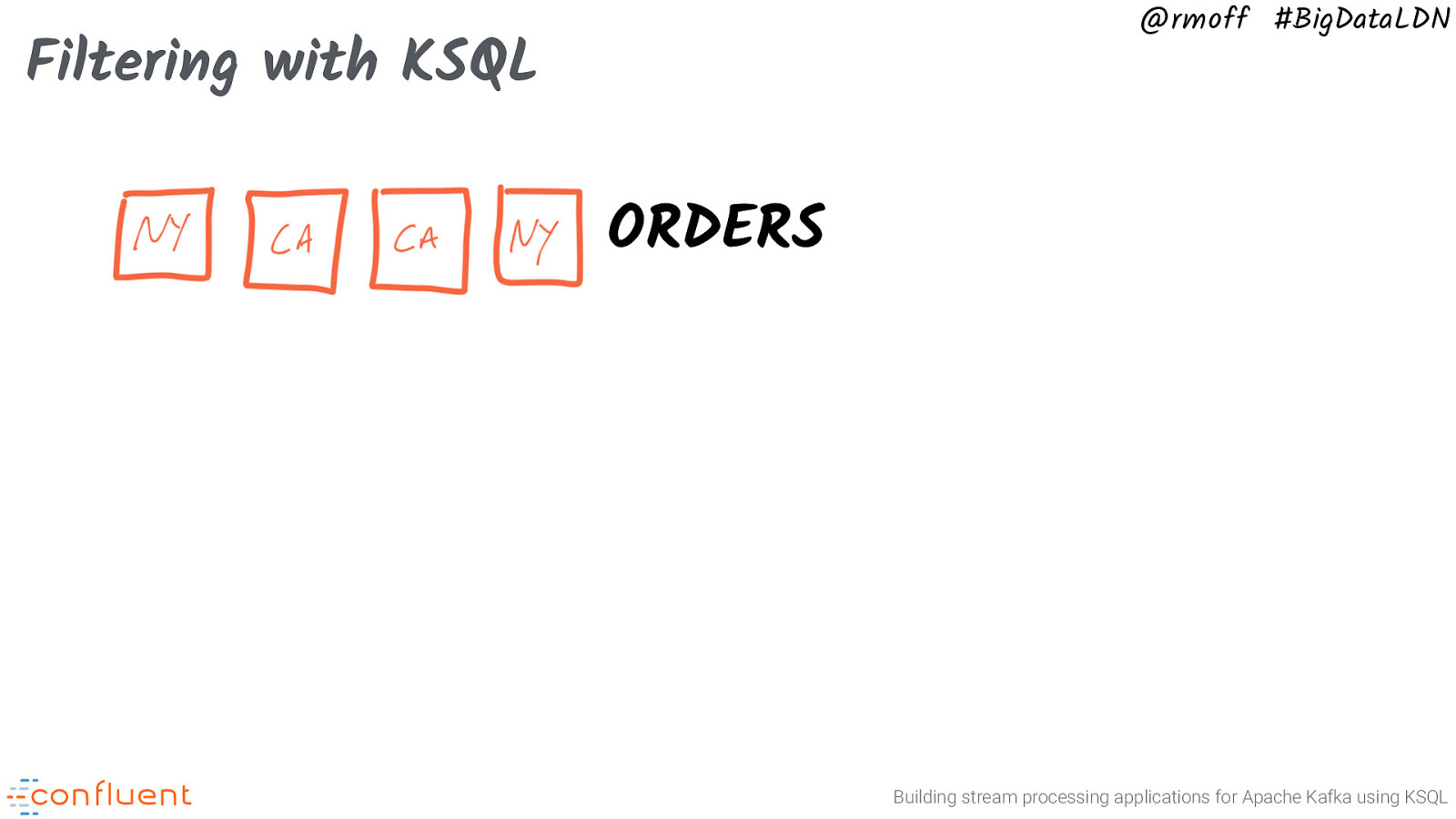

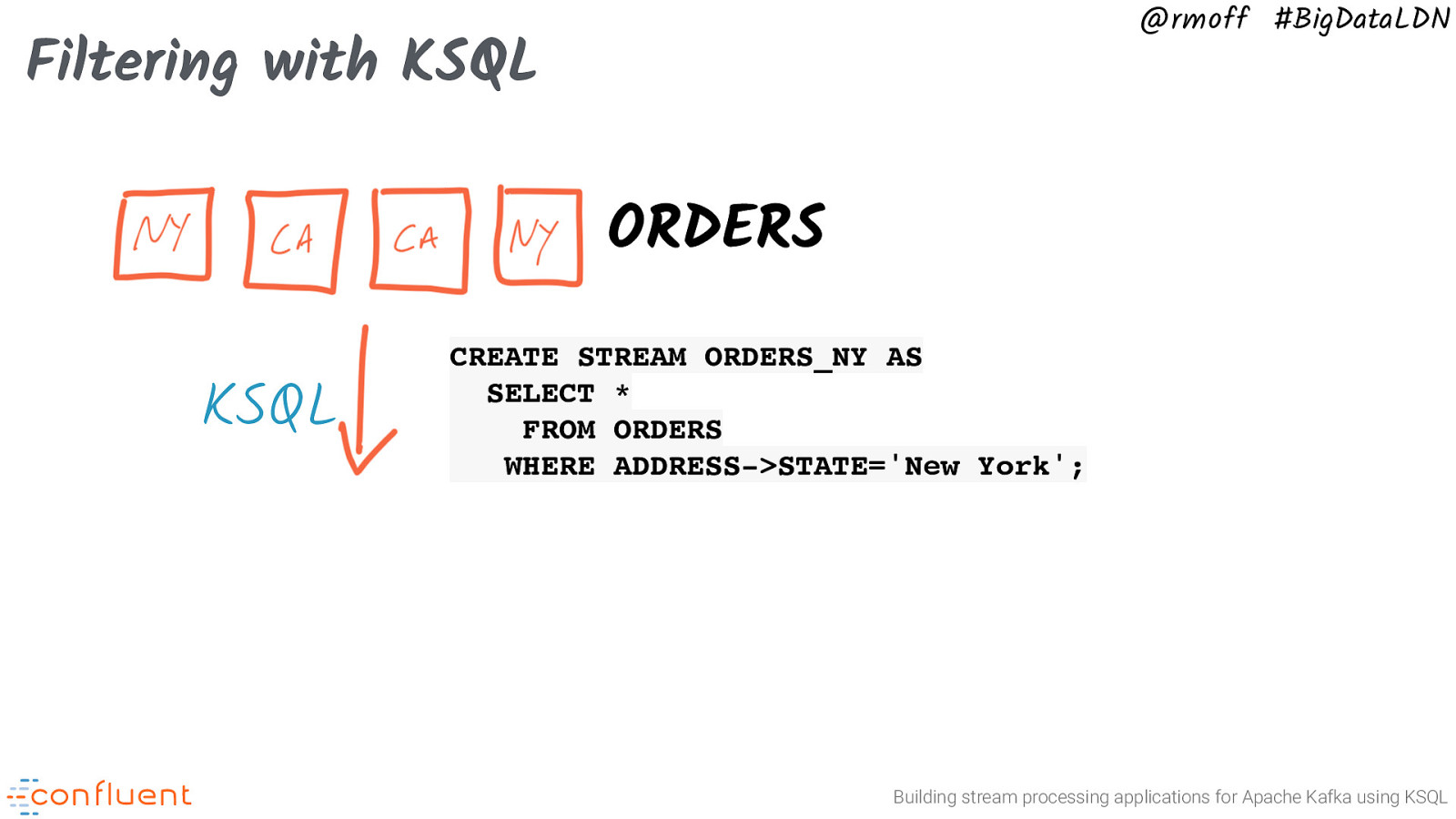


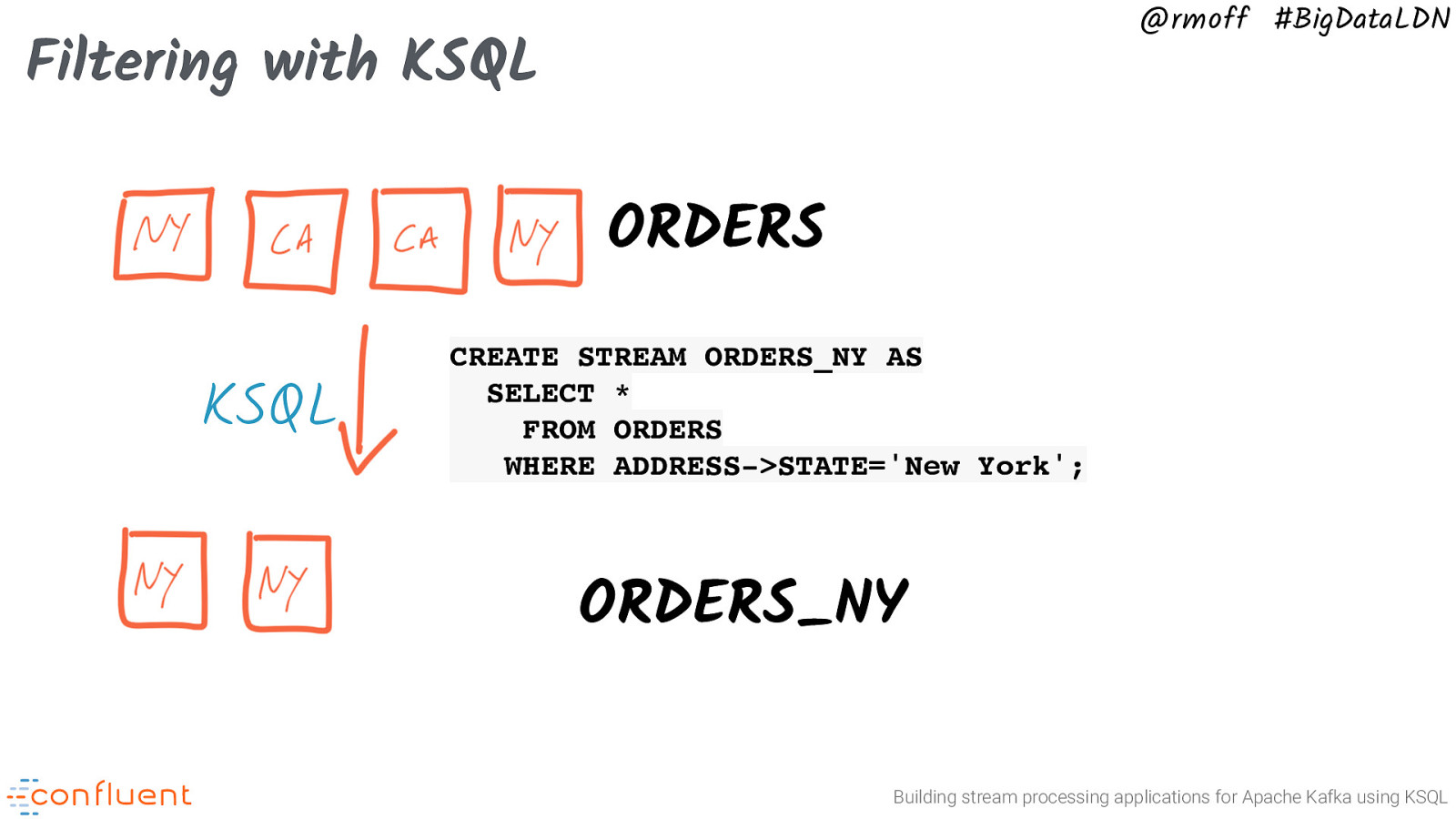

Filtering with KSQL (stream ORDERS to stream ORDERS_NY)

```sql
CREATE STREAM ORDERS_NY AS
  SELECT * FROM ORDERS
  WHERE ADDRESS->STATE='New York';
```
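The `->` operator reaches into the nested `ADDRESS` struct. A minimal Java sketch of the same predicate, assuming orders are modelled as nested `Map`s (`OrdersNyFilter` is a hypothetical name); in this sketch an order with no address simply fails to match rather than raising an error:

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical sketch of WHERE ADDRESS->STATE='New York' over nested-map events.
class OrdersNyFilter {
    // Equivalent of ADDRESS->STATE; returns null when there is no address struct.
    static Object state(Map<String, Object> order) {
        Object address = order.get("address");
        return (address instanceof Map) ? ((Map<?, ?>) address).get("state") : null;
    }

    static List<Map<String, Object>> ordersNy(List<Map<String, Object>> orders) {
        return orders.stream()
                .filter(o -> "New York".equals(state(o)))  // null never equals "New York"
                .collect(Collectors.toList());
    }
}
```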

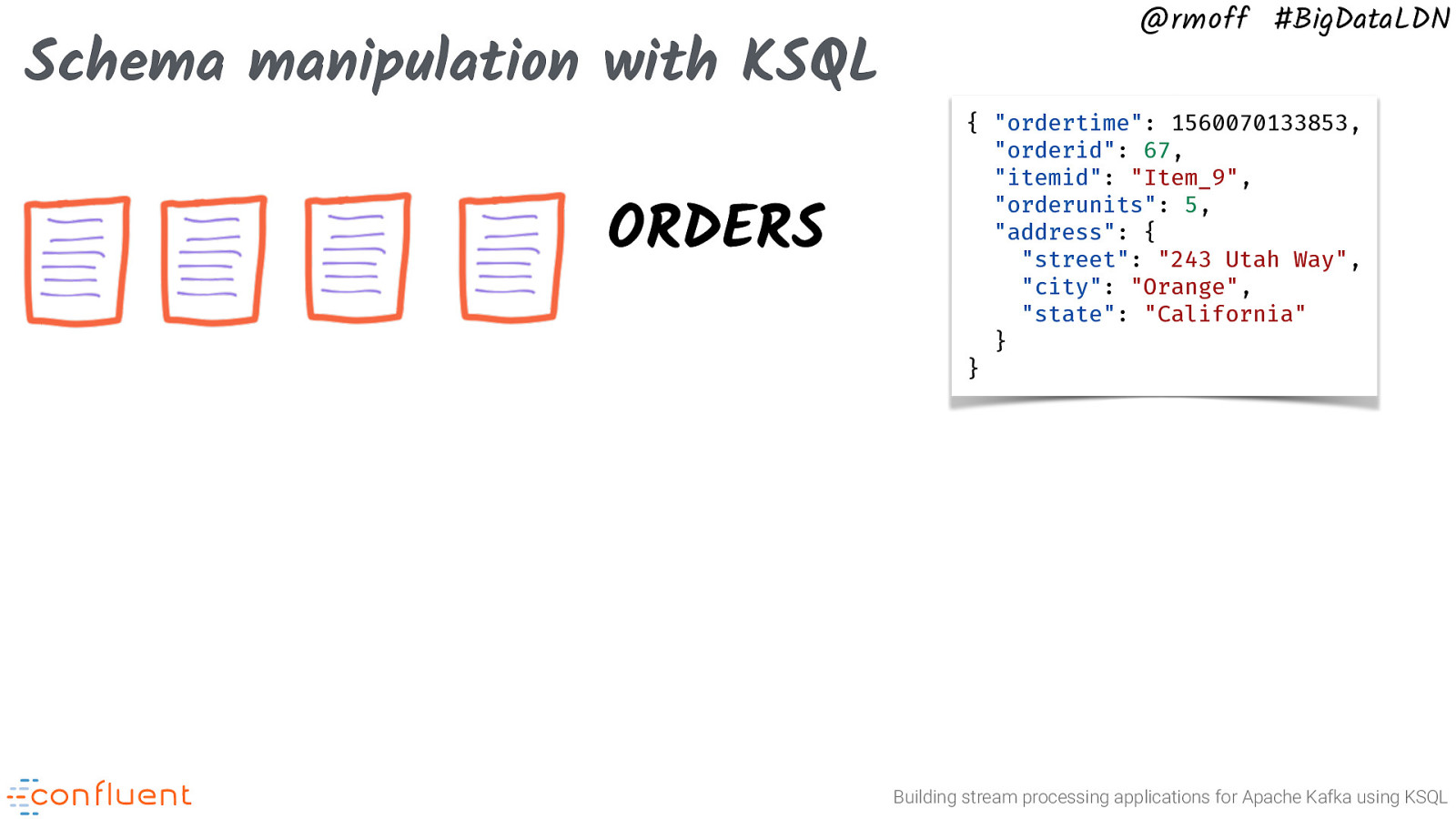

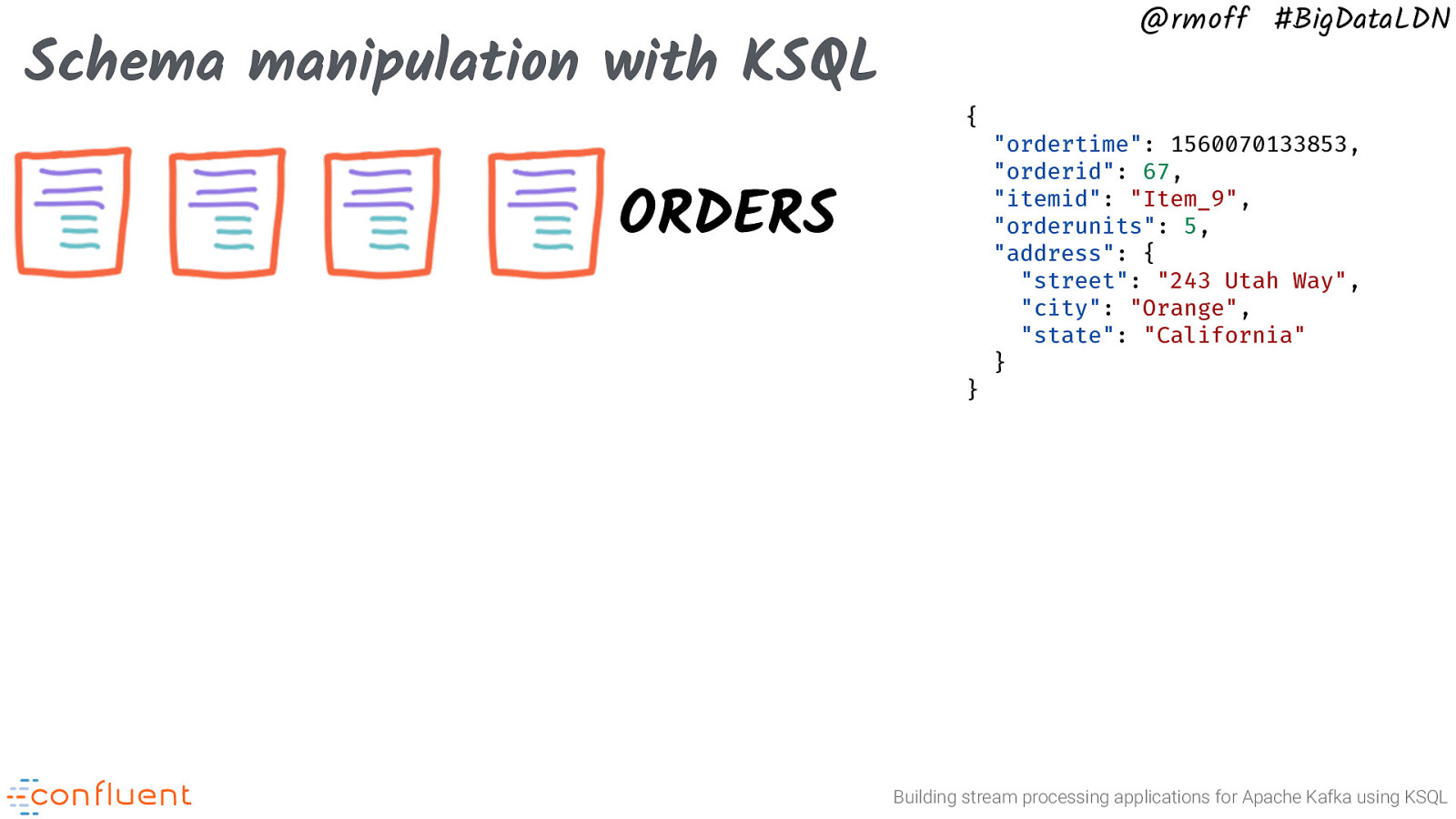


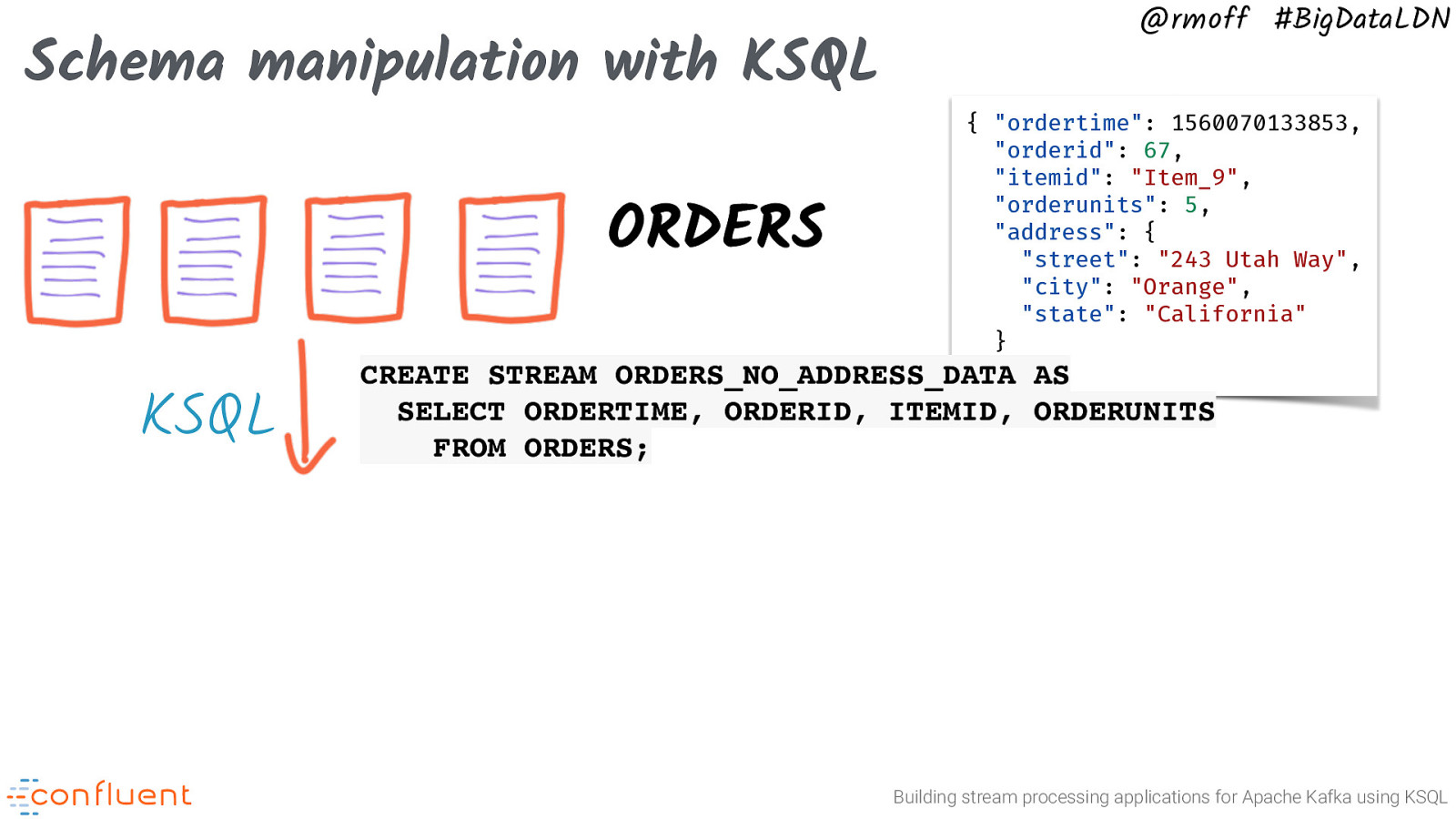

Schema manipulation with KSQL (stream ORDERS to stream ORDERS_NO_ADDRESS_DATA)

ORDERS:

```json
{ "ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5,
  "address": { "street": "243 Utah Way", "city": "Orange", "state": "California" } }
```

```sql
CREATE STREAM ORDERS_NO_ADDRESS_DATA AS
  SELECT ORDERTIME, ORDERID, ITEMID, ORDERUNITS
  FROM ORDERS;
```
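Dropping the address is a plain projection: each output event carries only the selected columns, in the order they were selected. A minimal sketch, again modelling events as `Map`s (`DropAddress` and its `COLUMNS` list are hypothetical):

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of the projection behind ORDERS_NO_ADDRESS_DATA.
class DropAddress {
    static final List<String> COLUMNS = List.of("ordertime", "orderid", "itemid", "orderunits");

    static Map<String, Object> project(Map<String, Object> order) {
        Map<String, Object> out = new LinkedHashMap<>();  // preserves the SELECT order
        for (String column : COLUMNS) {
            out.put(column, order.get(column));  // a missing column becomes null
        }
        return out;  // "address" is never copied, so it is dropped
    }
}
```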

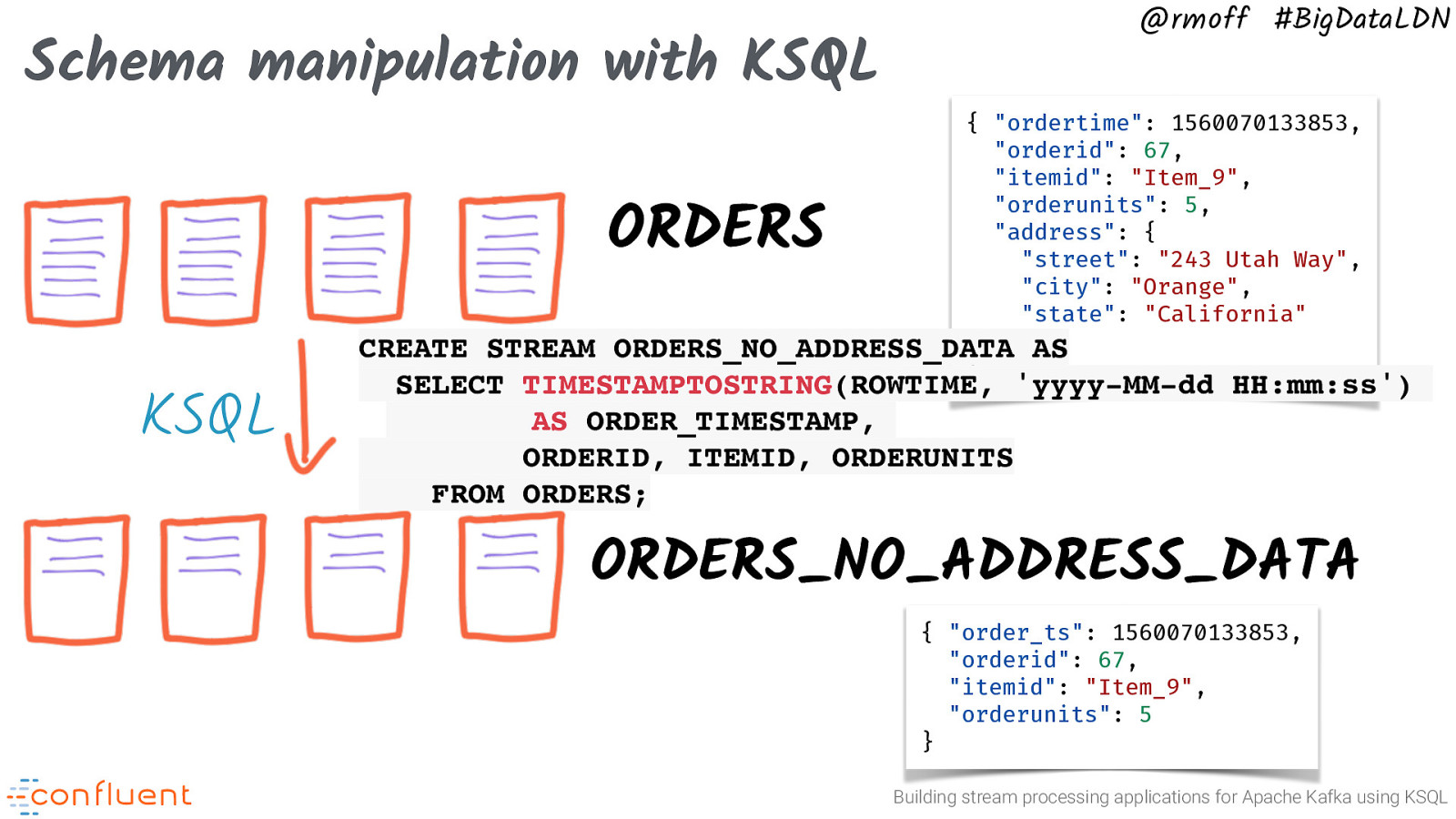

Schema manipulation with KSQL (stream ORDERS to stream ORDERS_NO_ADDRESS_DATA, with a formatted timestamp)

```sql
CREATE STREAM ORDERS_NO_ADDRESS_DATA AS
  SELECT TIMESTAMPTOSTRING(ROWTIME, 'yyyy-MM-dd HH:mm:ss') AS ORDER_TIMESTAMP,
         ORDERID, ITEMID, ORDERUNITS
  FROM ORDERS;
```

ORDERS_NO_ADDRESS_DATA:

```json
{ "order_ts": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5 }
```
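`TIMESTAMPTOSTRING` turns an epoch-milliseconds value such as `ROWTIME` into text using a Java-style date pattern. The sketch below shows the equivalent conversion with `java.time`; it pins the zone to UTC as an assumption (check the KSQL function reference for the zone used when none is given), and `TimestampToString` is a hypothetical name.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

// Hypothetical sketch of what TIMESTAMPTOSTRING(ts, pattern) computes:
// epoch milliseconds rendered as text in an explicitly chosen time zone.
class TimestampToString {
    static String format(long epochMillis, String pattern, ZoneId zone) {
        return DateTimeFormatter.ofPattern(pattern)
                .withZone(zone)  // an Instant has no zone of its own, so one must be supplied
                .format(Instant.ofEpochMilli(epochMillis));
    }
}
```

Applied to the sample `ordertime` of 1560070133853 with UTC, `format(1560070133853L, "yyyy-MM-dd HH:mm:ss", ZoneId.of("UTC"))` returns `"2019-06-09 08:48:53"`; the milliseconds are dropped because the pattern has no fraction-of-second field.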


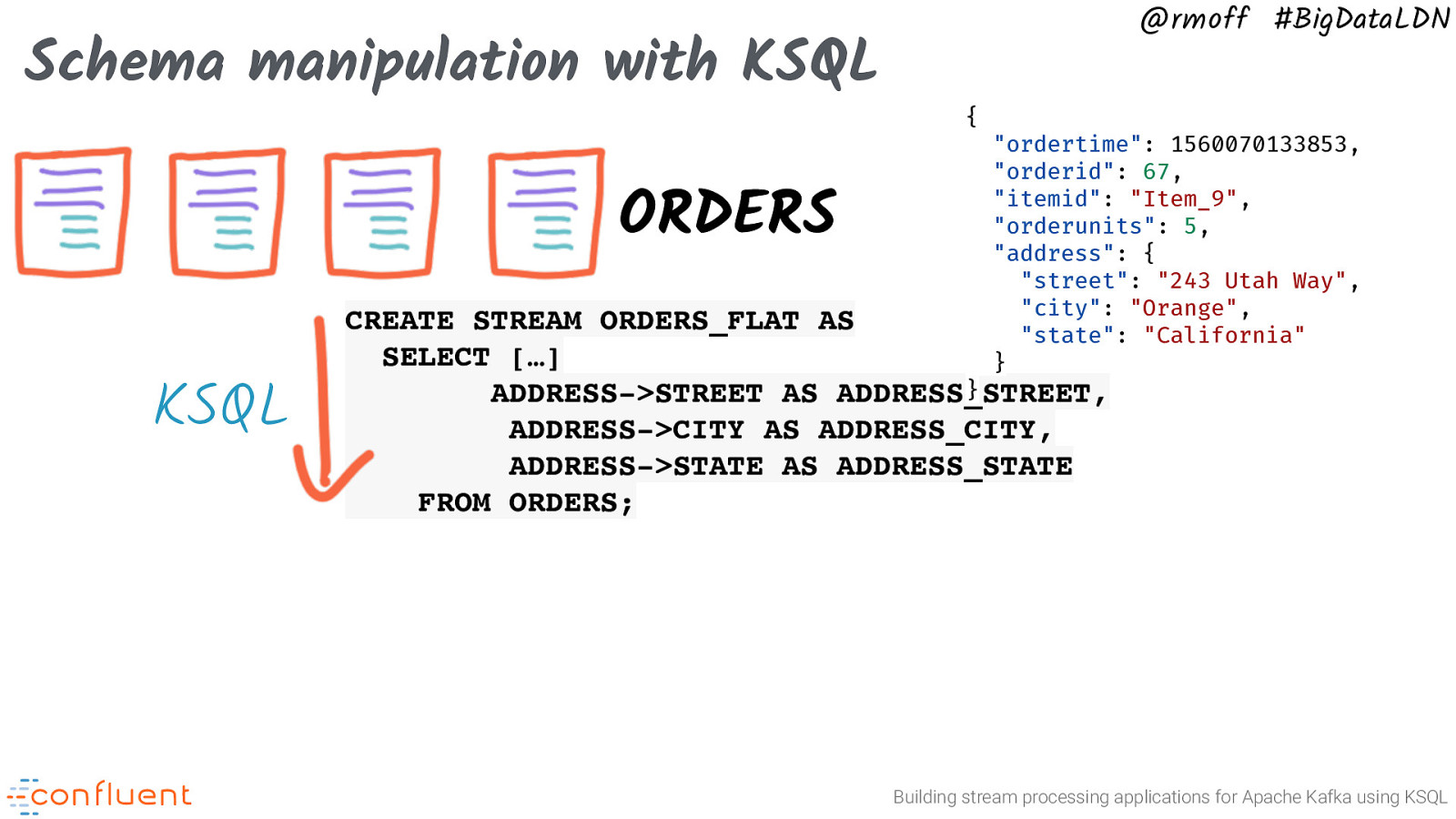


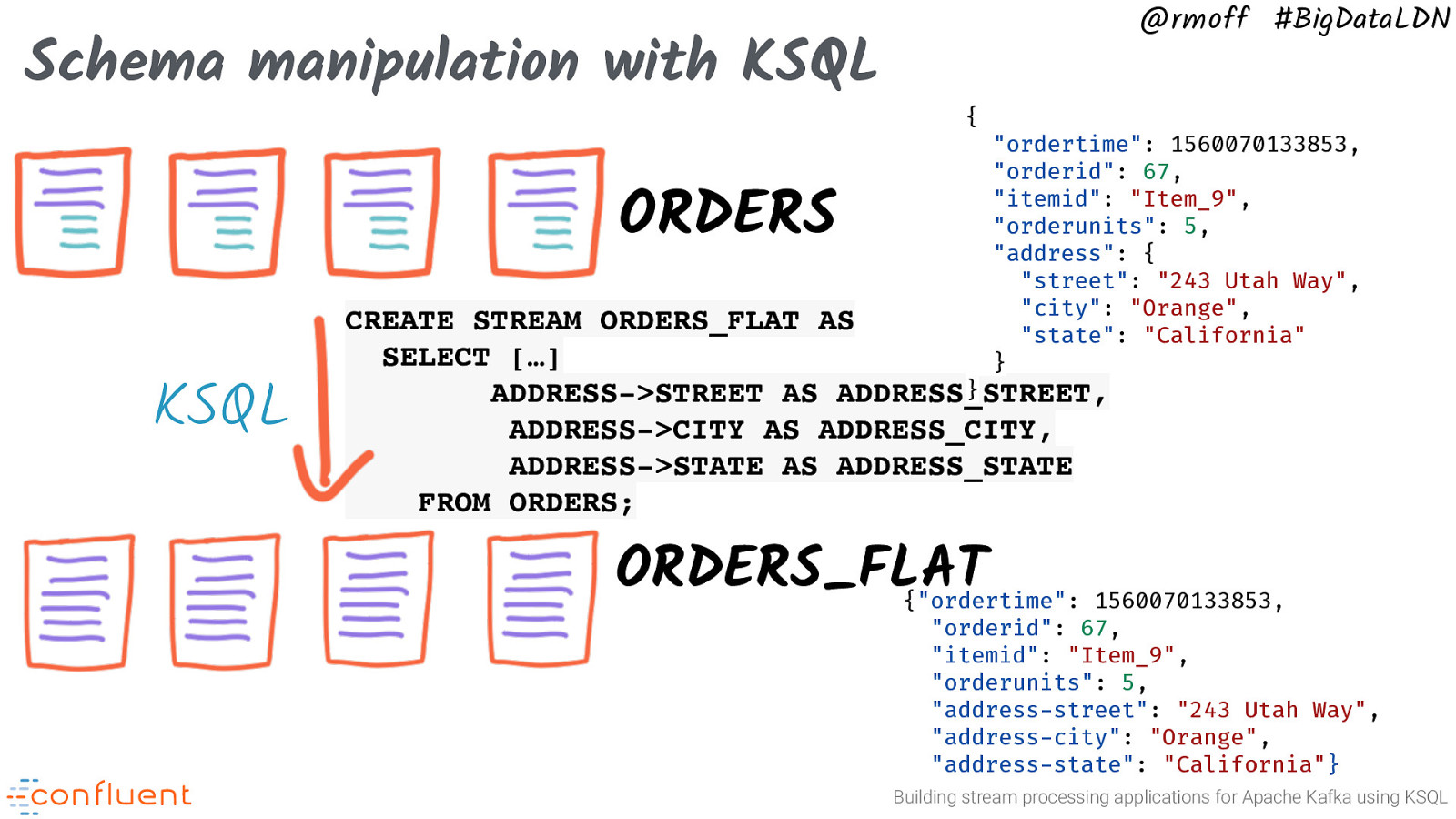

Schema manipulation with KSQL (stream ORDERS to stream ORDERS_FLAT)

```sql
CREATE STREAM ORDERS_FLAT AS
  SELECT […]
         ADDRESS->STREET AS ADDRESS_STREET,
         ADDRESS->CITY AS ADDRESS_CITY,
         ADDRESS->STATE AS ADDRESS_STATE
  FROM ORDERS;
```

ORDERS_FLAT:

```json
{"ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5, "address-street": "243 Utah Way", "address-city": "Orange", "address-state": "California"}
```
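Flattening lifts each nested field to a top-level column. The KSQL statement lists those columns explicitly; the hypothetical `FlattenOrder` helper below does the same thing generically for one level of nesting, naming each new column `<parent>_<child>` (an illustrative convention chosen for this sketch):

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch of a one-level flatten, as in ORDERS_FLAT.
class FlattenOrder {
    static Map<String, Object> flatten(Map<String, Object> order) {
        Map<String, Object> flat = new LinkedHashMap<>();
        for (Map.Entry<String, Object> e : order.entrySet()) {
            if (e.getValue() instanceof Map) {
                // Nested struct: promote each field, prefixed with the parent column name.
                for (Map.Entry<?, ?> nested : ((Map<?, ?>) e.getValue()).entrySet()) {
                    flat.put(e.getKey() + "_" + nested.getKey(), nested.getValue());
                }
            } else {
                flat.put(e.getKey(), e.getValue());  // top-level column copied unchanged
            }
        }
        return flat;
    }
}
```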

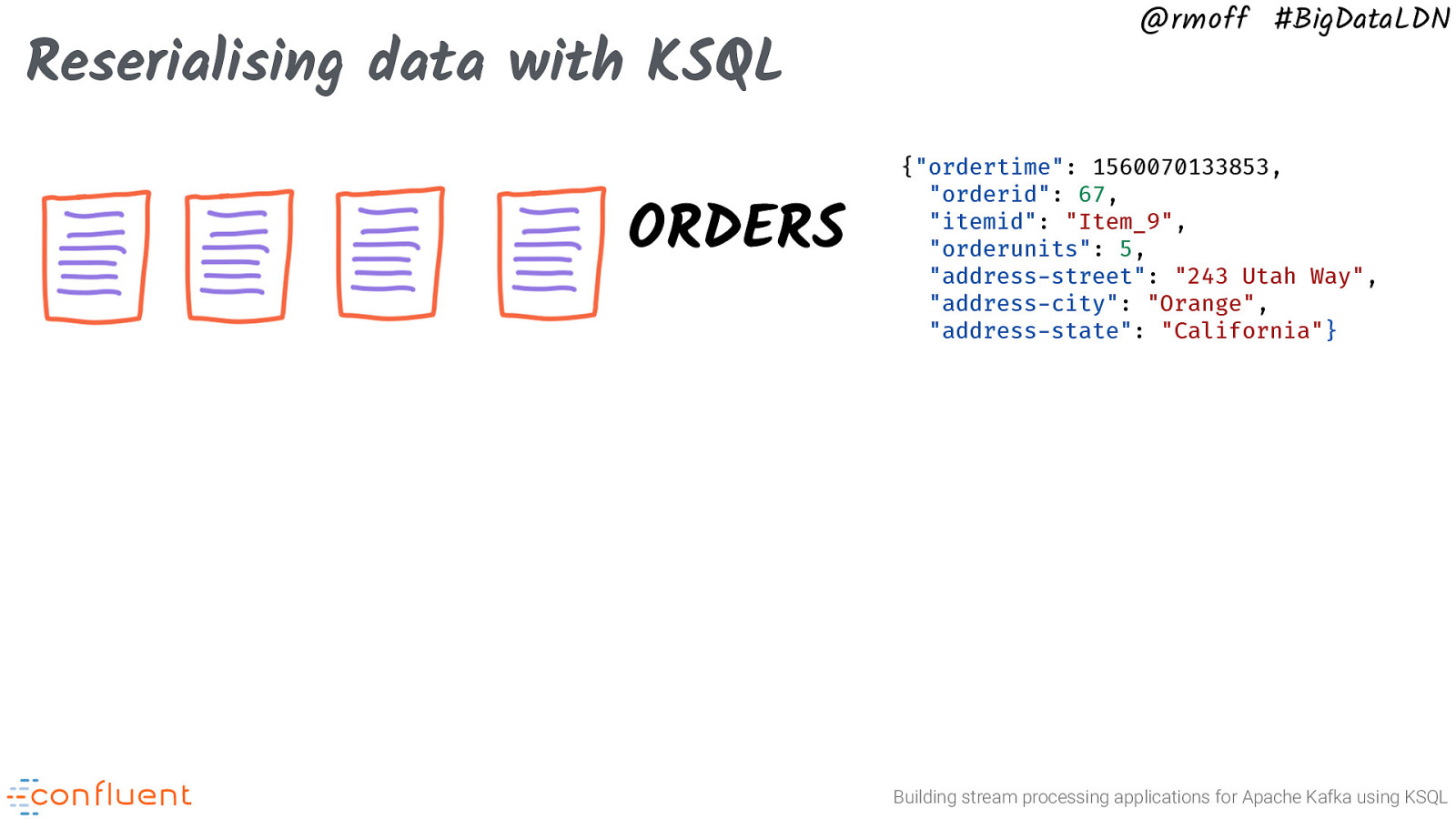


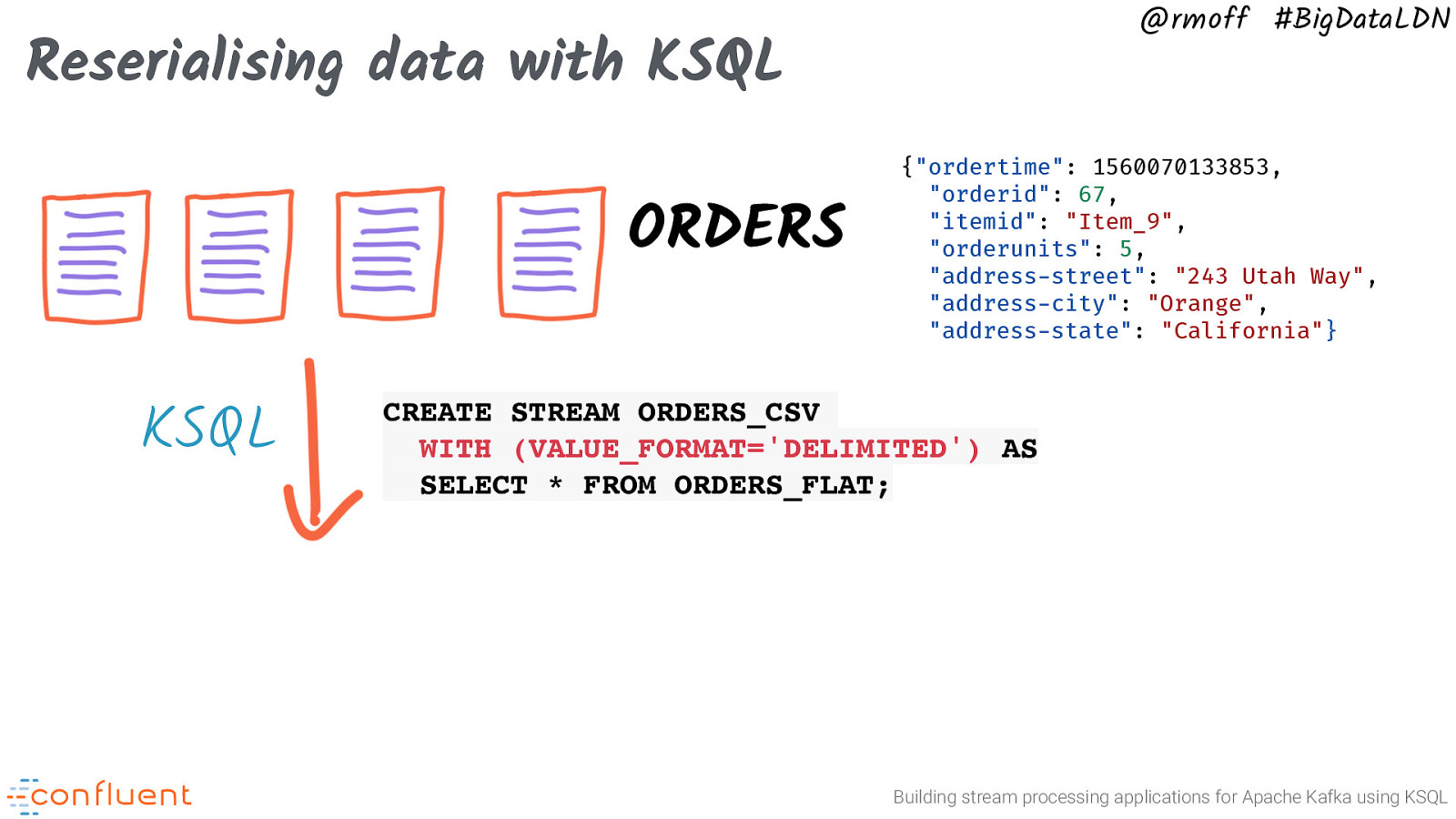


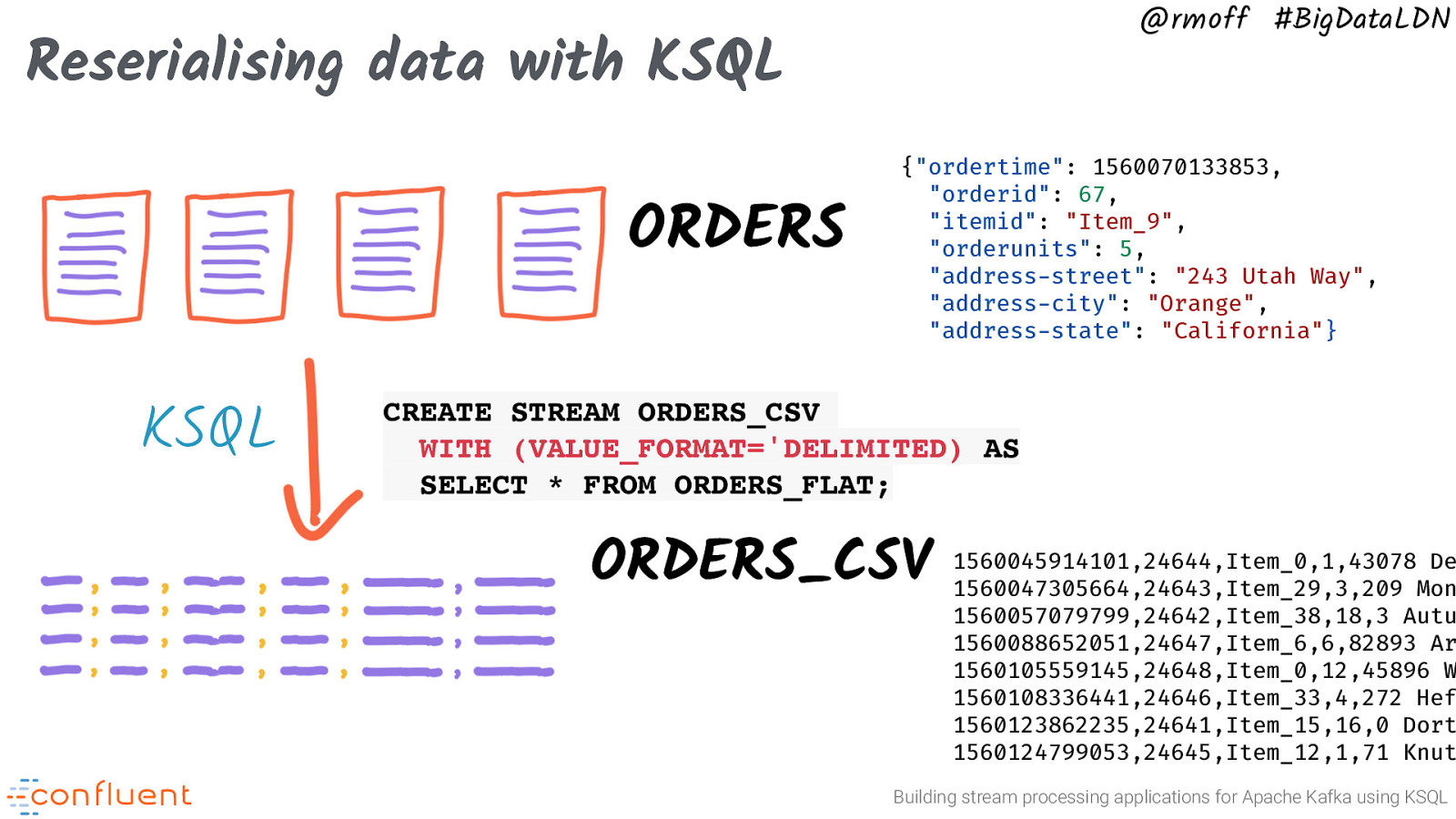

Reserialising data with KSQL (stream ORDERS_FLAT, JSON, to stream ORDERS_CSV, delimited)

```json
{"ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5, "address-street": "243 Utah Way", "address-city": "Orange", "address-state": "California"}
```

```sql
CREATE STREAM ORDERS_CSV WITH (VALUE_FORMAT='DELIMITED') AS
  SELECT * FROM ORDERS_FLAT;
```

ORDERS_CSV:

```
1560045914101,24644,Item_0,1,43078 De
1560047305664,24643,Item_29,3,209 Mon
1560057079799,24642,Item_38,18,3 Autu
1560088652051,24647,Item_6,6,82893 Ar
1560105559145,24648,Item_0,12,45896 W
1560108336441,24646,Item_33,4,272 Hef
1560123862235,24641,Item_15,16,0 Dort
1560124799053,24645,Item_12,1,71 Knut
```
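Reserialising changes only how each row is encoded on the topic, not the row's values. A minimal sketch of writing one row as a comma-delimited line; the quoting rule (wrap a field containing a comma, quote or newline in double quotes and double any embedded quotes) and writing null as an empty field are ordinary CSV conventions assumed here, not a description of KSQL's own DELIMITED serializer. `DelimitedSerializer` is hypothetical.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical sketch of serialising one row's values as a delimited line.
class DelimitedSerializer {
    static String field(Object value) {
        String s = value == null ? "" : value.toString();  // assumption: null -> empty field
        if (s.contains(",") || s.contains("\"") || s.contains("\n")) {
            return "\"" + s.replace("\"", "\"\"") + "\"";  // CSV-style quoting
        }
        return s;
    }

    static String serialize(List<Object> values) {
        return values.stream().map(DelimitedSerializer::field).collect(Collectors.joining(","));
    }
}
```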

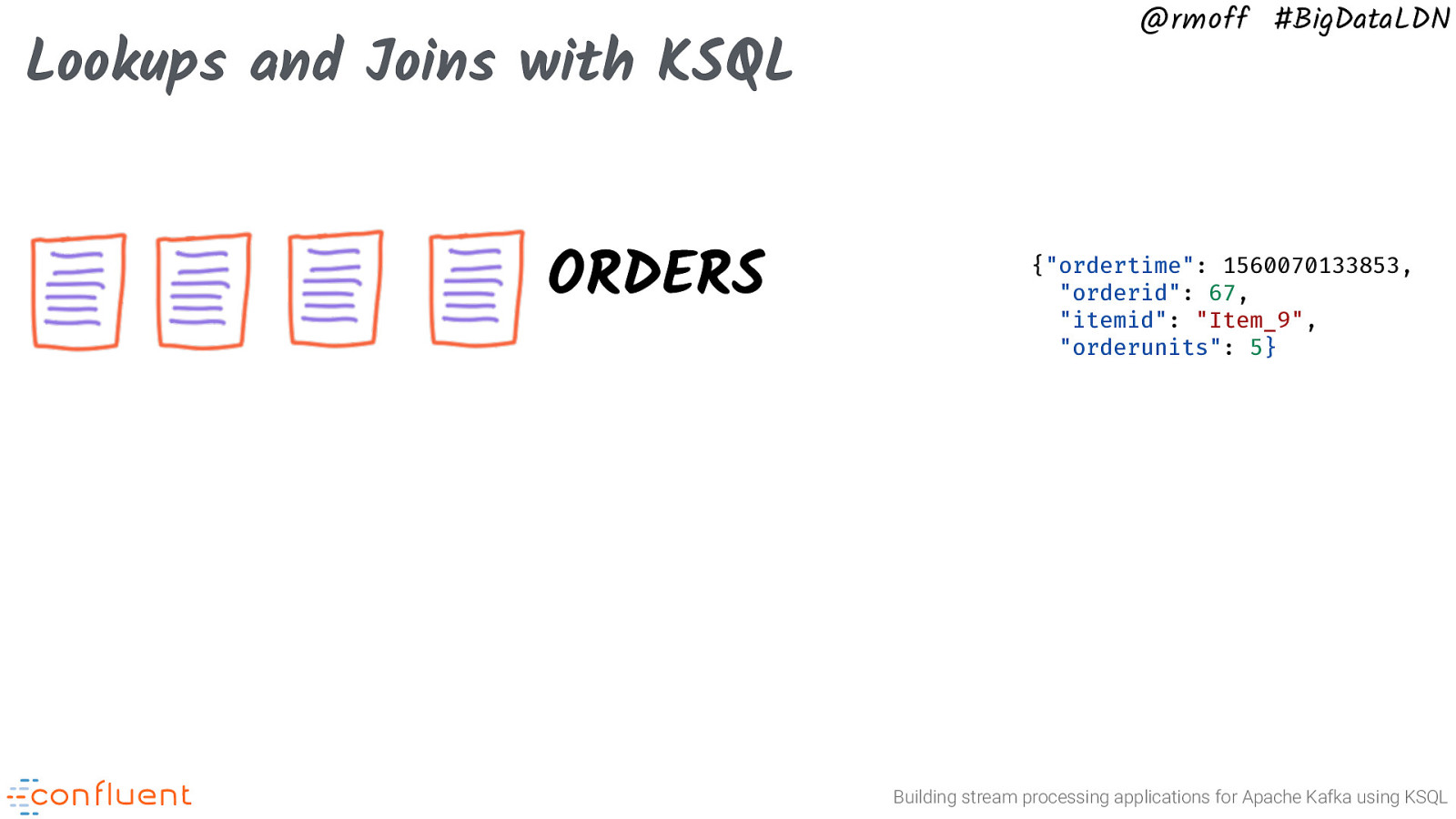

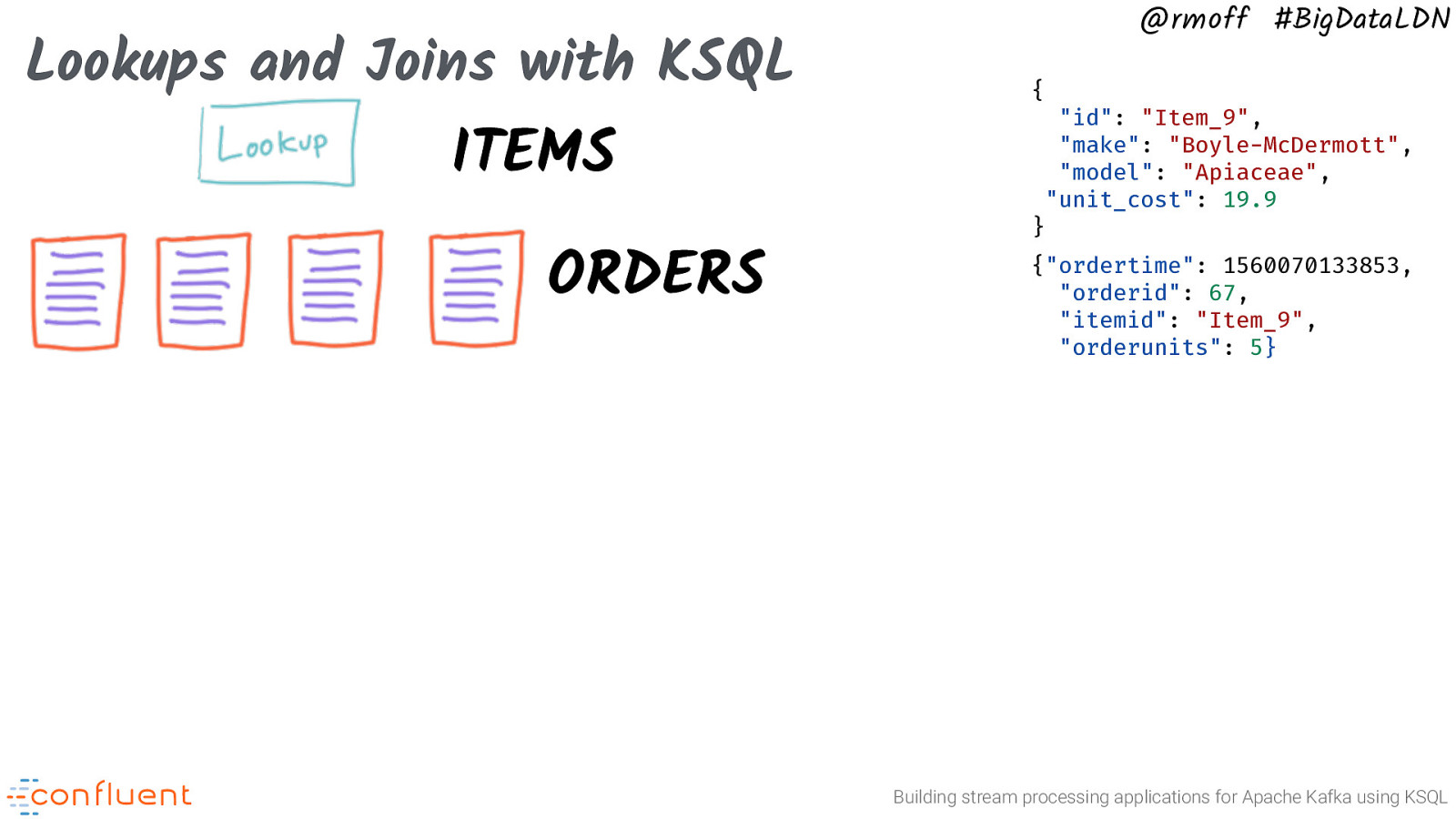


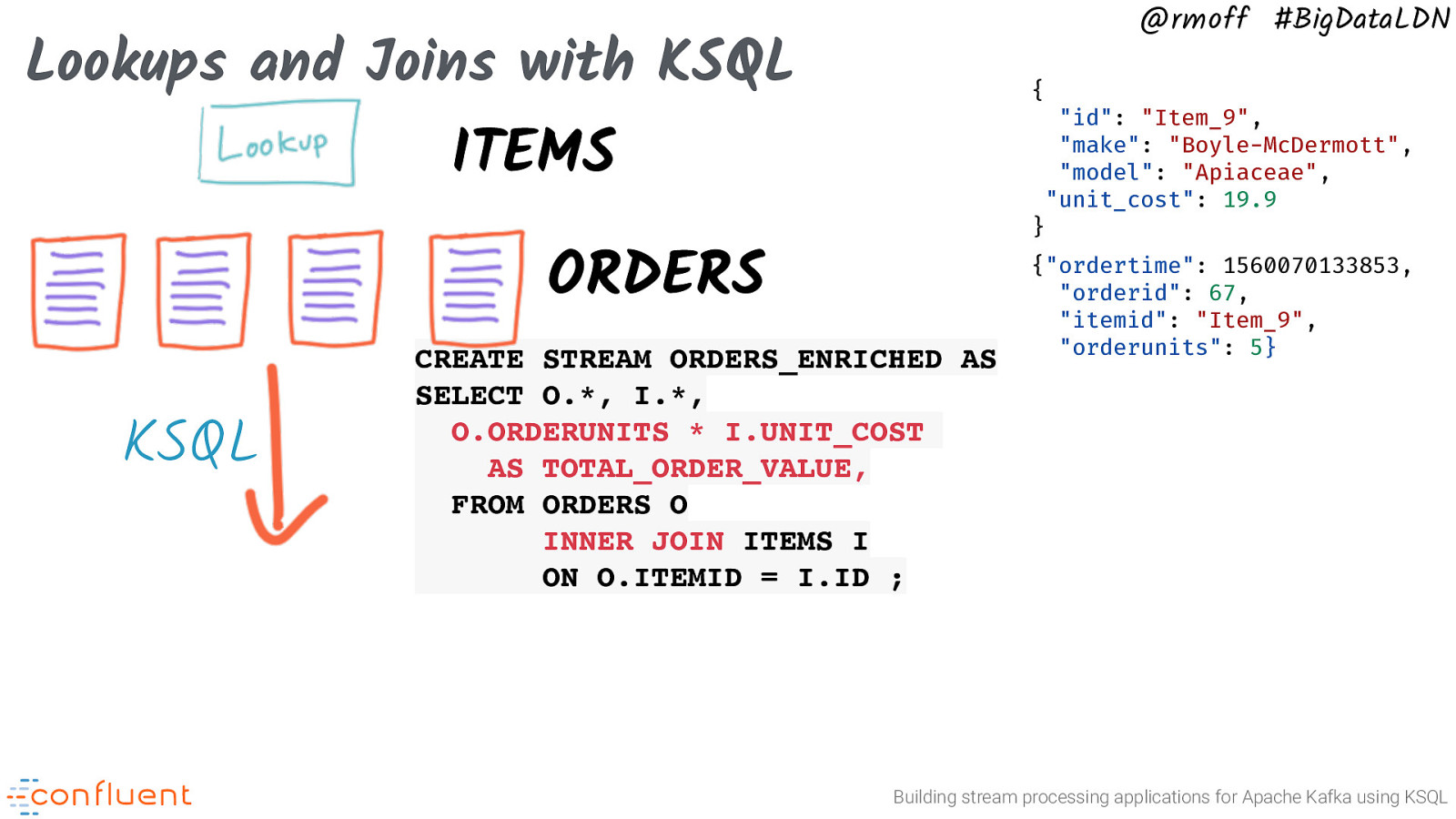

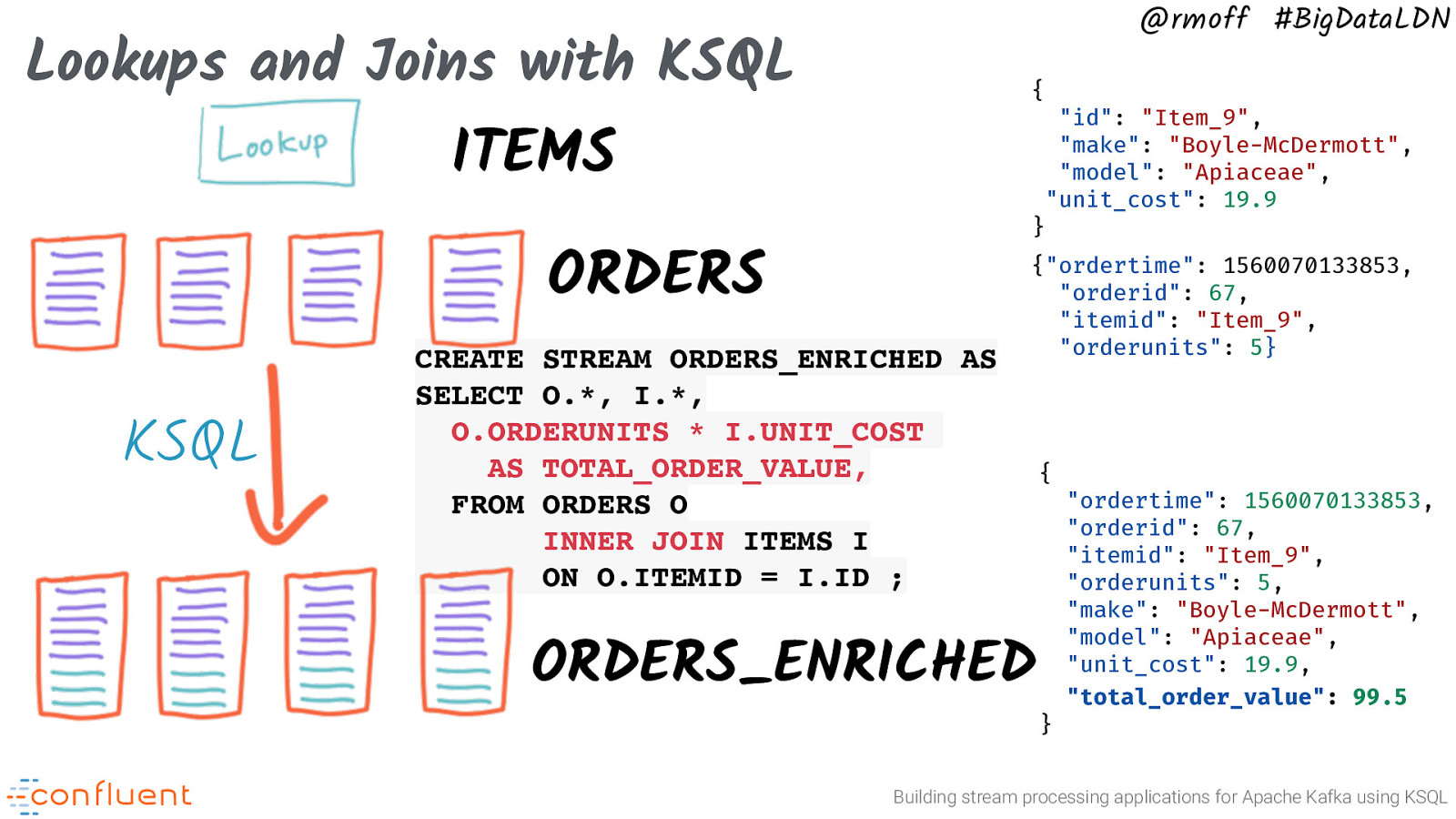


Lookups and Joins with KSQL (stream ORDERS joined to table ITEMS, producing stream ORDERS_ENRICHED)

ITEMS:

```json
{ "id": "Item_9", "make": "Boyle-McDermott", "model": "Apiaceae", "unit_cost": 19.9 }
```

ORDERS:

```json
{"ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5}
```

```sql
CREATE STREAM ORDERS_ENRICHED AS
  SELECT O.*, I.*,
         O.ORDERUNITS * I.UNIT_COST AS TOTAL_ORDER_VALUE
  FROM ORDERS O INNER JOIN ITEMS I
    ON O.ITEMID = I.ID;
```

ORDERS_ENRICHED:

```json
{ "ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5,
  "make": "Boyle-McDermott", "model": "Apiaceae", "unit_cost": 19.9, "total_order_value": 99.5 }
```
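Conceptually, a stream-table join looks each arriving order up against the table's current value for that key. A minimal sketch, modelling the `ITEMS` table as a `Map` keyed by item id and using a hypothetical `OrderEnricher` class:

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical sketch of the ORDERS_ENRICHED stream-table inner join.
class OrderEnricher {
    static Optional<Map<String, Object>> enrich(Map<String, Object> order,
                                                Map<String, Map<String, Object>> itemsById) {
        Map<String, Object> item = itemsById.get(order.get("itemid"));  // ON O.ITEMID = I.ID
        if (item == null) {
            return Optional.empty();  // INNER JOIN: no matching item, no output
        }
        Map<String, Object> enriched = new LinkedHashMap<>(order);  // O.*
        enriched.putAll(item);                                       // I.*
        double units = ((Number) order.get("orderunits")).doubleValue();
        double unitCost = ((Number) item.get("unit_cost")).doubleValue();
        enriched.put("total_order_value", units * unitCost);         // ORDERUNITS * UNIT_COST
        return Optional.of(enriched);
    }
}
```

Because the lookup happens as each order arrives, a later update to an item in the table only affects orders processed after that update.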

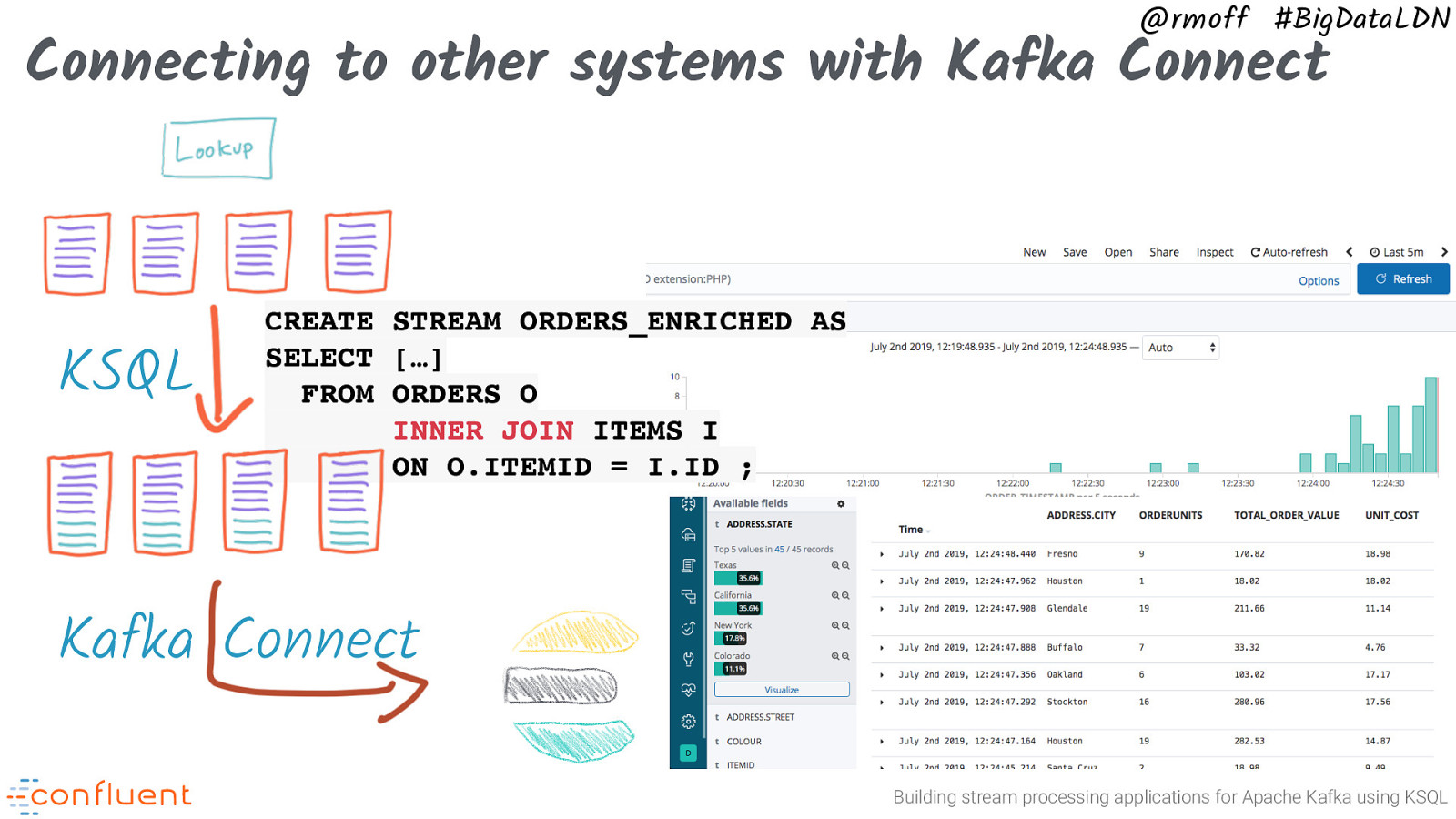

Connecting to other systems with Kafka Connect: the ORDERS_ENRICHED stream, created by

```sql
CREATE STREAM ORDERS_ENRICHED AS
  SELECT […]
  FROM ORDERS O INNER JOIN ITEMS I
    ON O.ITEMID = I.ID;
```

is streamed on to other systems by Kafka Connect.

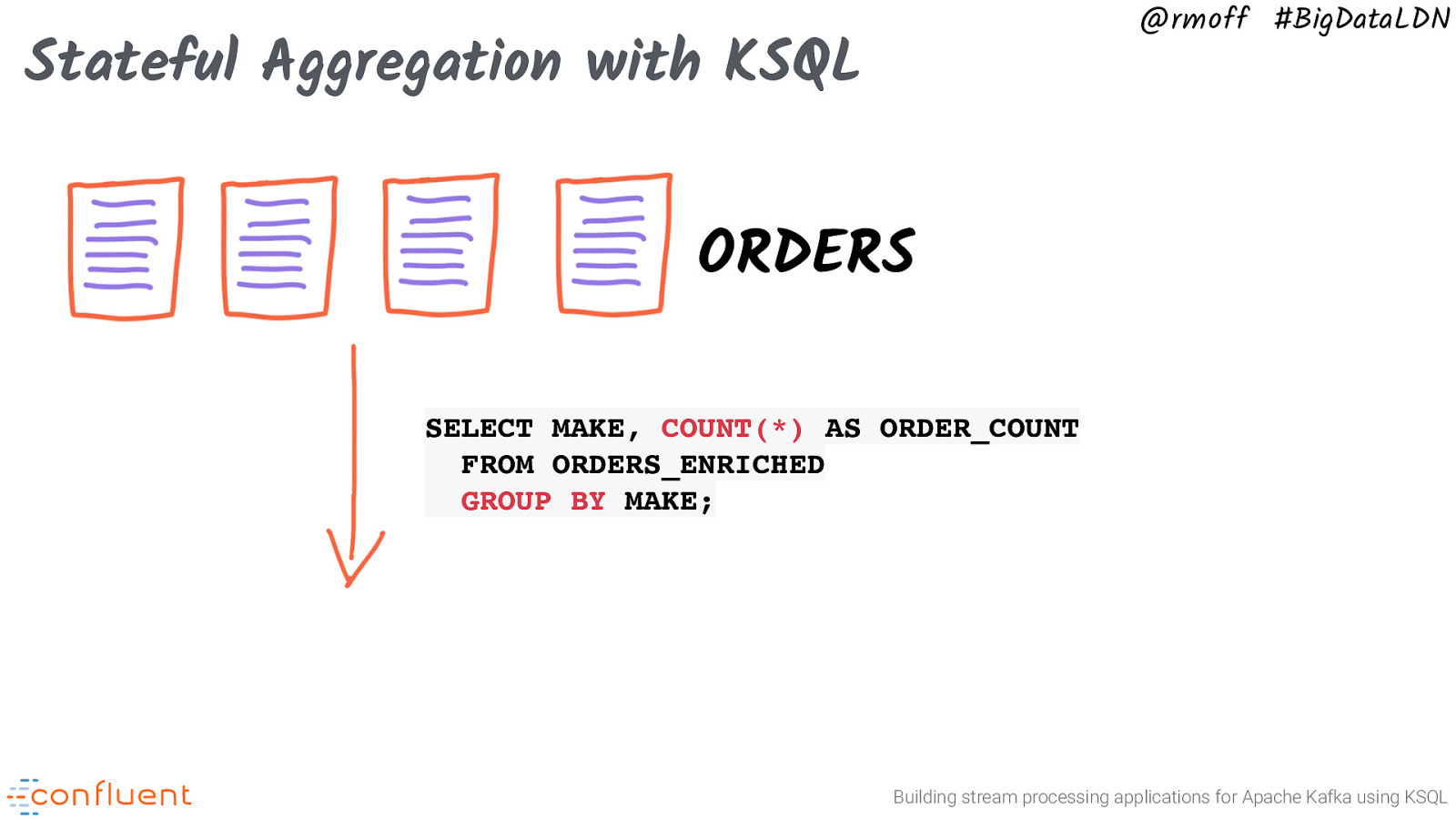


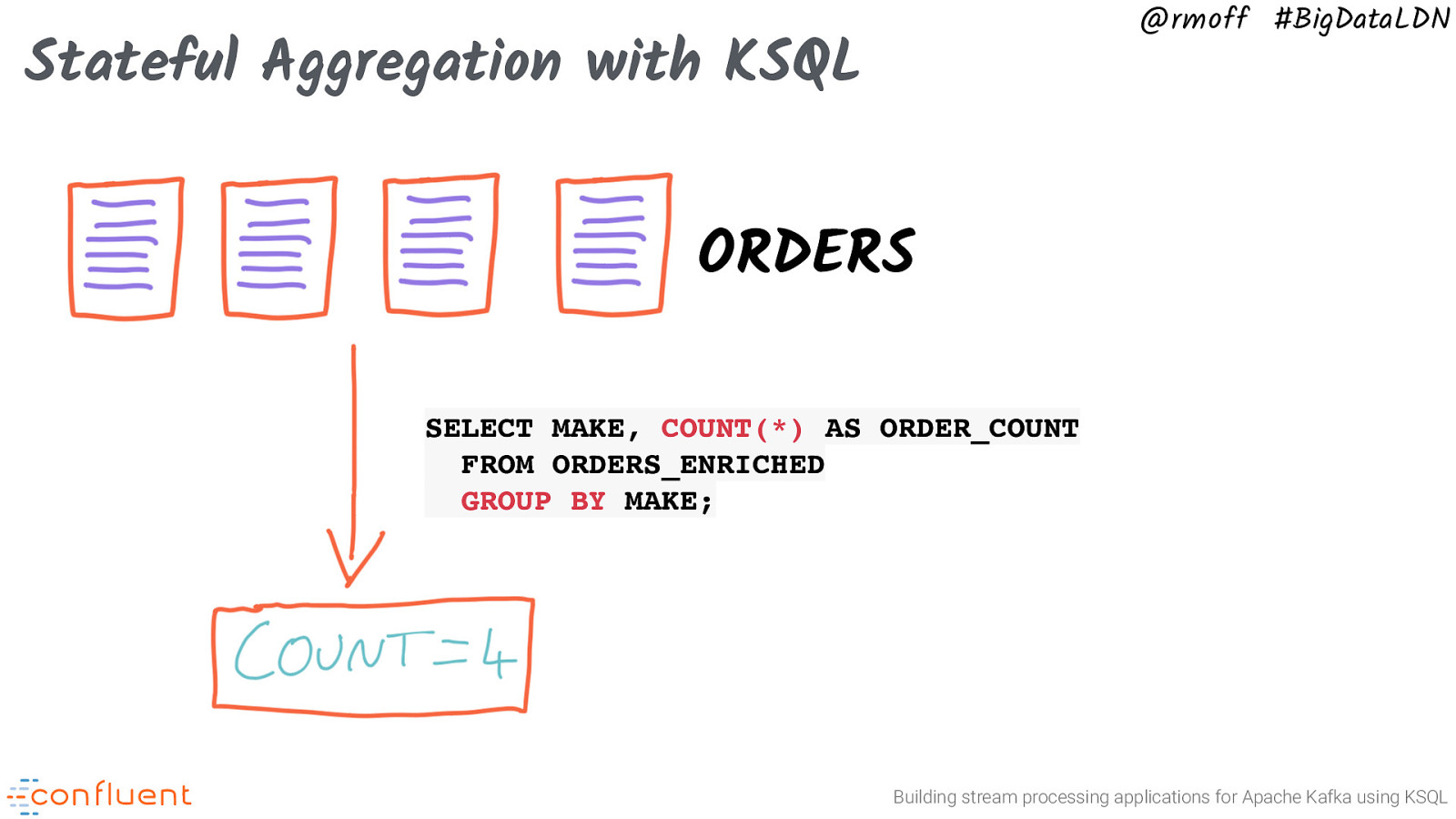

Stateful Aggregation with KSQL

```sql
SELECT MAKE, COUNT(*) AS ORDER_COUNT
  FROM ORDERS_ENRICHED
  GROUP BY MAKE;
```
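Unlike the filters above, this query is stateful: a count per `MAKE` has to be remembered between events (in KSQL that state lives in Kafka Streams state stores). A minimal in-memory sketch of that state, with a hypothetical `CountByMake` class; each event updates its key's count and yields the new value, so the output is a sequence of updates rather than one final answer:

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of the running state behind COUNT(*) ... GROUP BY MAKE.
class CountByMake {
    private final Map<String, Long> counts = new HashMap<>();

    // Process one enriched order and return the updated count for its make.
    long onEvent(Map<String, Object> order) {
        String make = (String) order.get("make");
        return counts.merge(make, 1L, Long::sum);
    }

    // Copy of the current per-make counts.
    Map<String, Long> snapshot() {
        return new HashMap<>(counts);
    }
}
```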


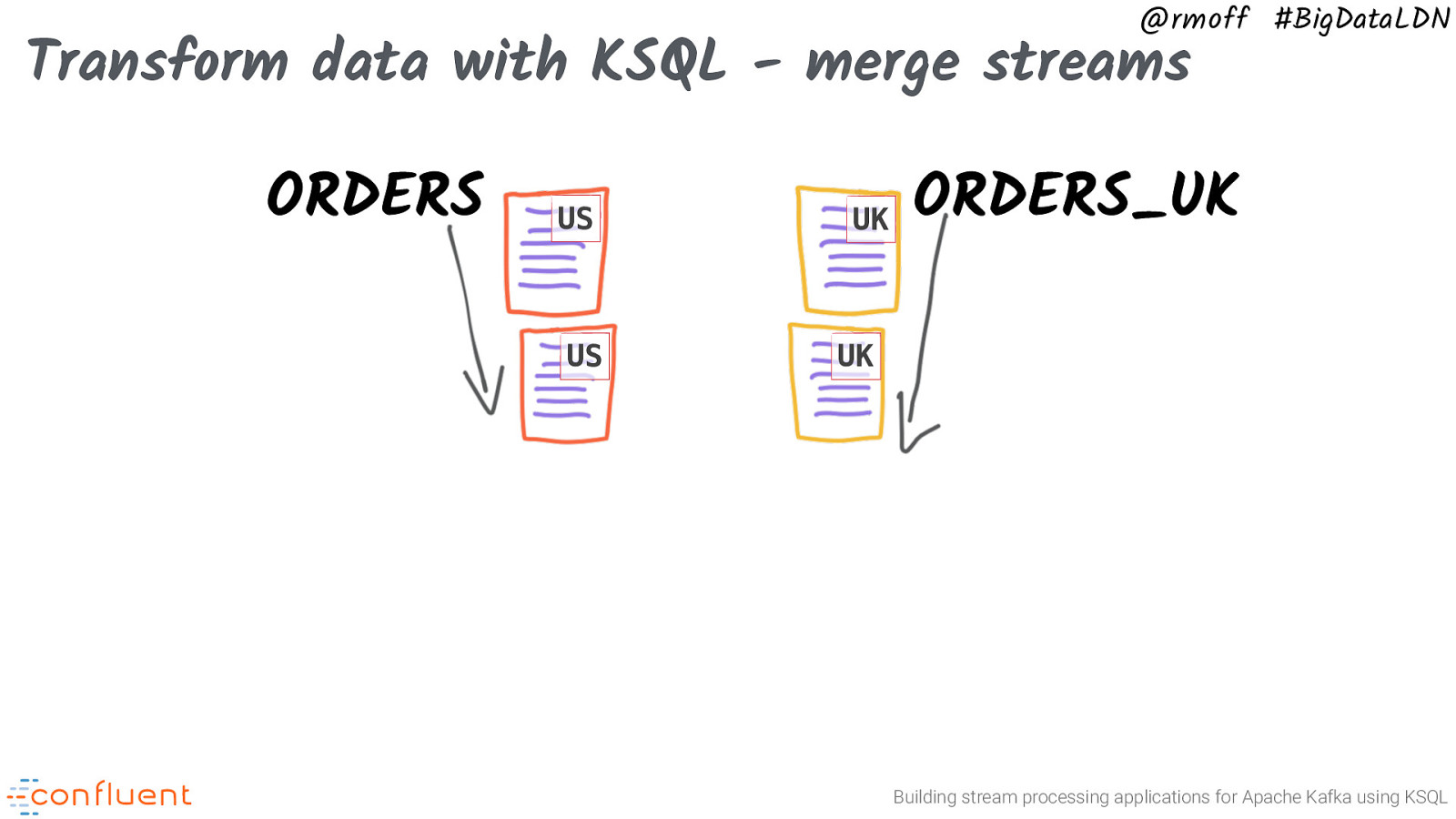

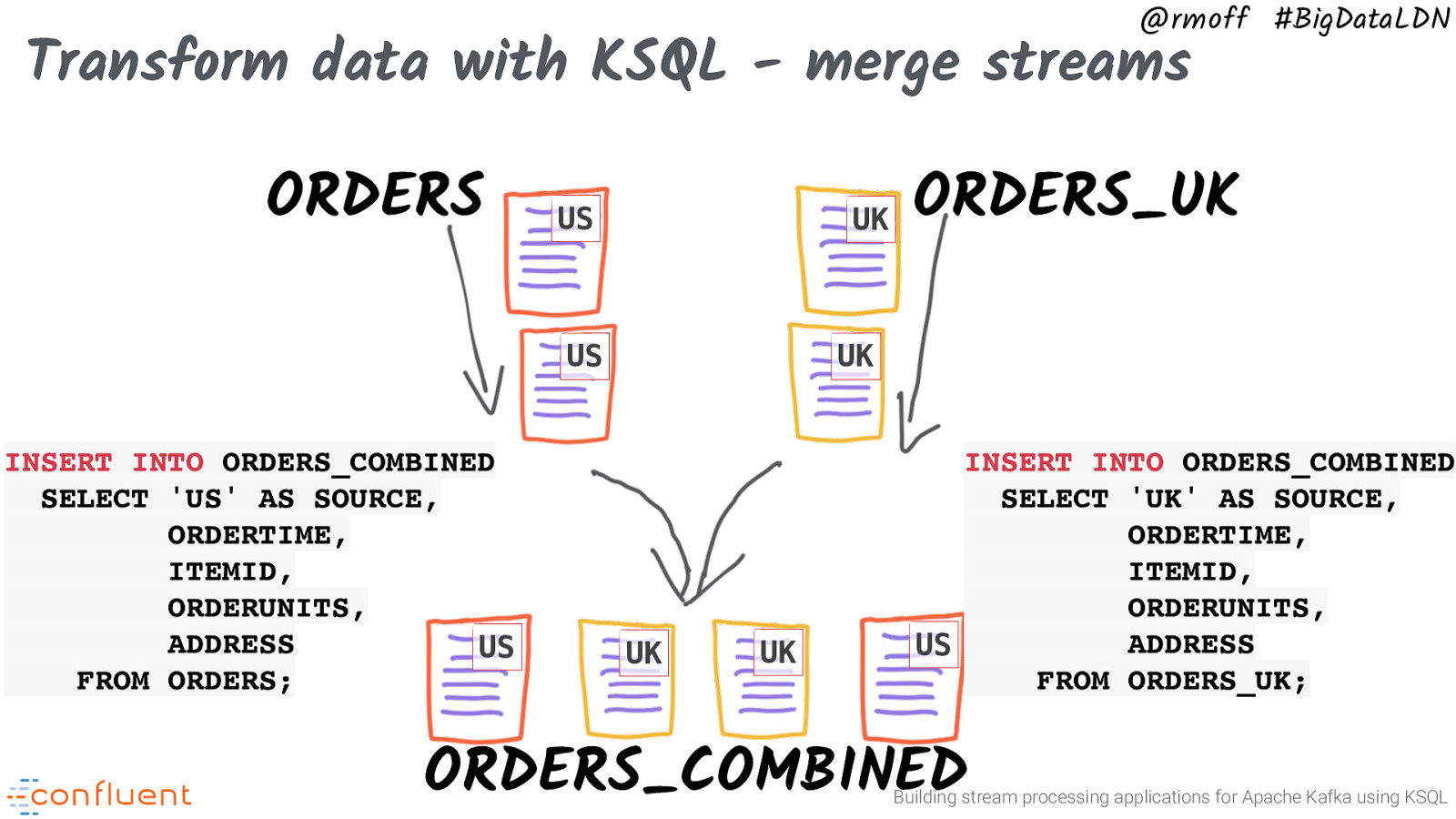


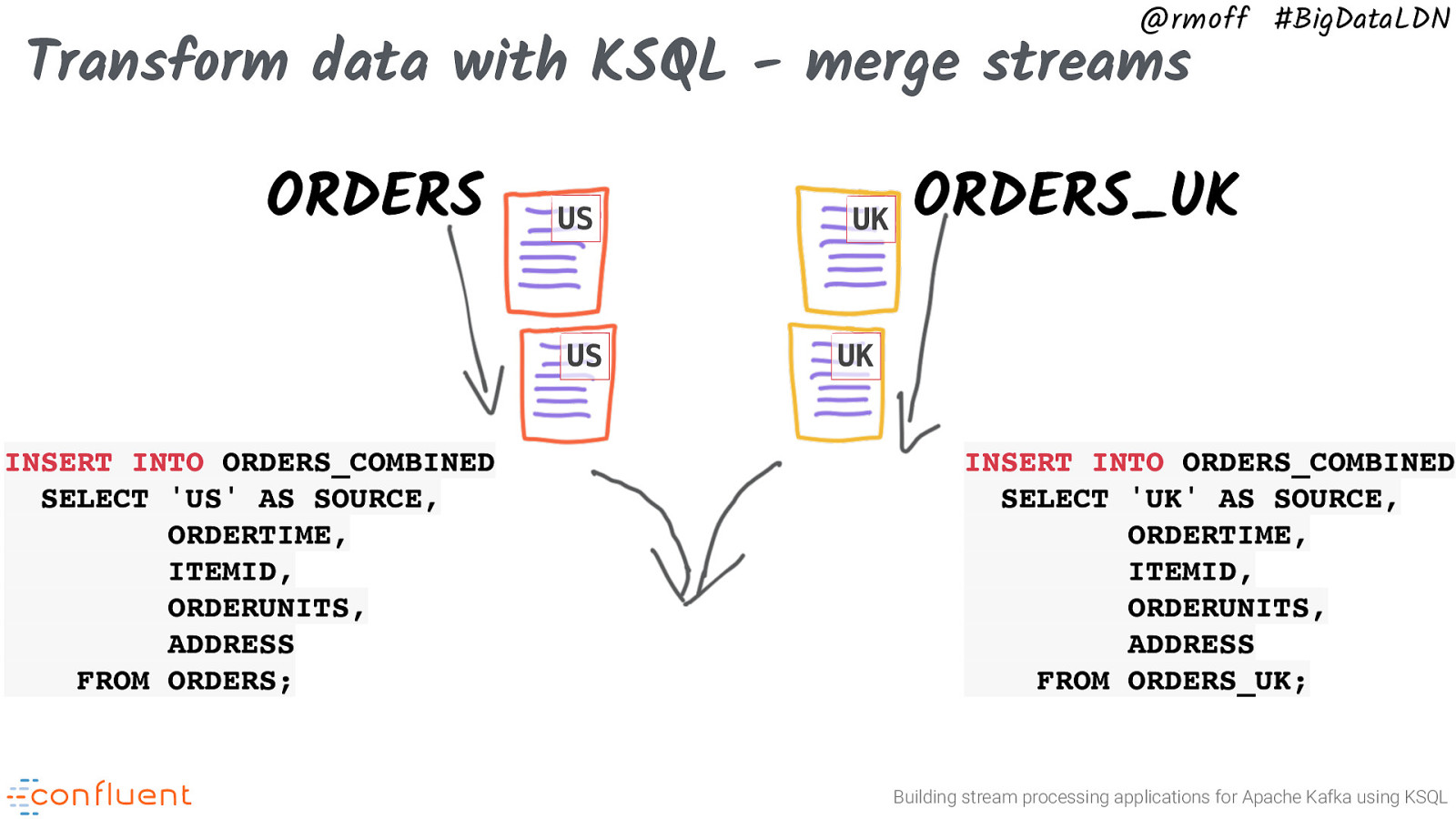


Transform data with KSQL - merge streams (US orders in ORDERS and UK orders in ORDERS_UK, combined into ORDERS_COMBINED)

```sql
INSERT INTO ORDERS_COMBINED
  SELECT 'US' AS SOURCE, ORDERTIME, ITEMID, ORDERUNITS, ADDRESS
  FROM ORDERS;

INSERT INTO ORDERS_COMBINED
  SELECT 'UK' AS SOURCE, ORDERTIME, ITEMID, ORDERUNITS, ADDRESS
  FROM ORDERS_UK;
```
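Each `INSERT INTO` tags its source's events with a literal `SOURCE` value and writes them to the shared output. A minimal sketch with a hypothetical `MergeStreams` class; for brevity it copies every field instead of the slide's column list, and it processes the inputs one after the other, whereas the two KSQL queries run independently, so the relative order of US and UK events in the combined stream is not something to rely on.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of merging two streams into one, tagging each event's origin.
class MergeStreams {
    // Equivalent of SELECT '<source>' AS SOURCE, ... for a single event.
    static Map<String, Object> tag(String source, Map<String, Object> order) {
        Map<String, Object> out = new LinkedHashMap<>();
        out.put("source", source);
        out.putAll(order);
        return out;
    }

    static List<Map<String, Object>> combine(List<Map<String, Object>> us,
                                             List<Map<String, Object>> uk) {
        List<Map<String, Object>> combined = new ArrayList<>();
        us.forEach(o -> combined.add(tag("US", o)));  // INSERT INTO ... FROM ORDERS
        uk.forEach(o -> combined.add(tag("UK", o)));  // INSERT INTO ... FROM ORDERS_UK
        return combined;
    }
}
```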


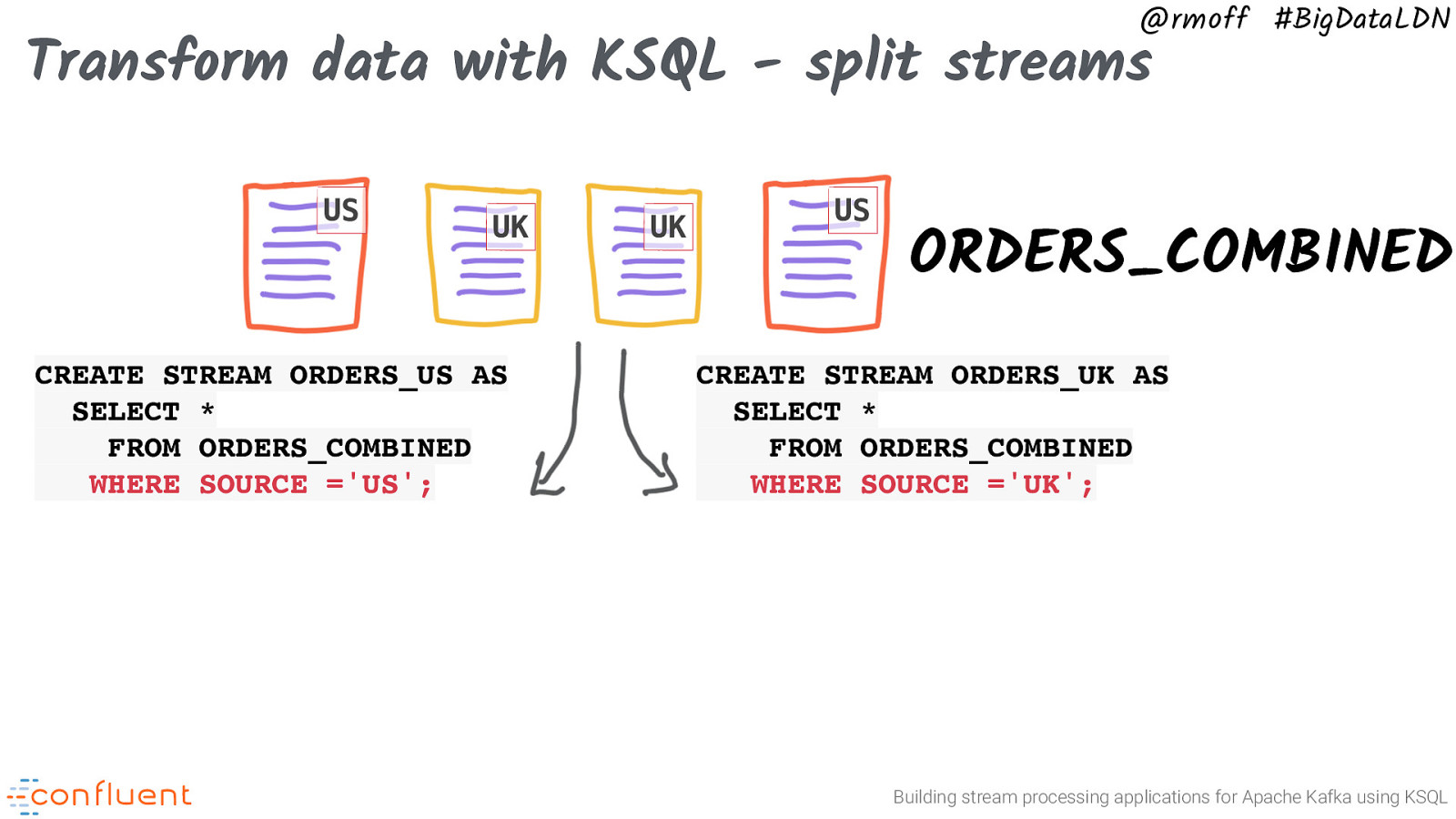

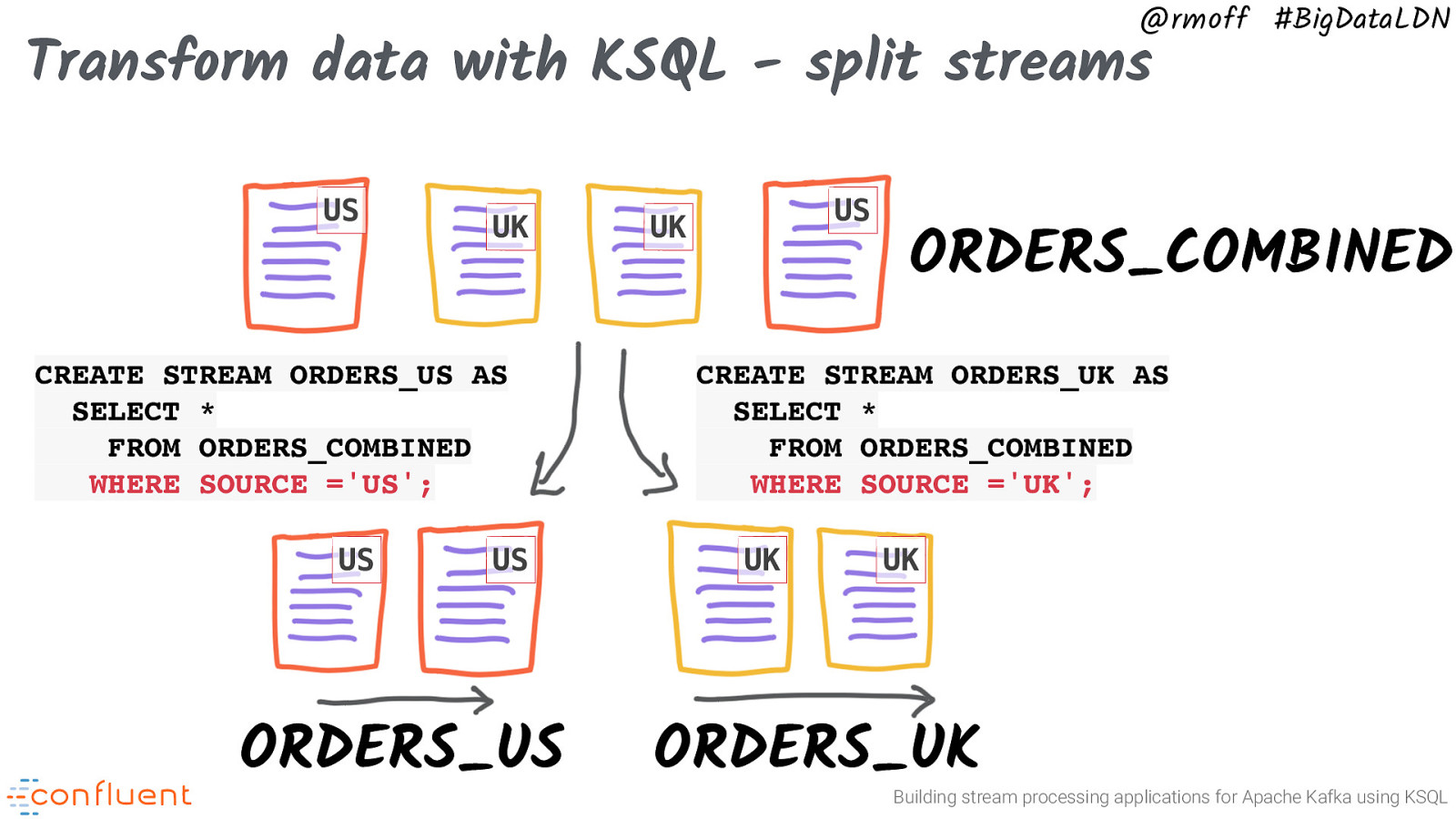


Transform data with KSQL - split streams (ORDERS_COMBINED split into ORDERS_US and ORDERS_UK)

```sql
CREATE STREAM ORDERS_US AS
  SELECT * FROM ORDERS_COMBINED WHERE SOURCE='US';

CREATE STREAM ORDERS_UK AS
  SELECT * FROM ORDERS_COMBINED WHERE SOURCE='UK';
```
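Splitting is the reverse of the merge: each `CREATE STREAM ... WHERE SOURCE='..'` is an independent filter over the same input, so every event is routed to whichever output its predicate matches. A minimal sketch with a hypothetical `SplitStreams.whereSource` helper, called once per output stream:

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical sketch of one split branch: WHERE SOURCE='<source>'.
class SplitStreams {
    static List<Map<String, Object>> whereSource(List<Map<String, Object>> combined, String source) {
        return combined.stream()
                .filter(o -> source.equals(o.get("source")))
                .collect(Collectors.toList());
    }
}
```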