A presentation at Kafka Summit 2020 in August 2020 by Robin Moffatt

$ whoami
• Robin Moffatt (@rmoff)
• Senior Developer Advocate at Confluent (Apache Kafka, not Wikis 😉)
• Working in data & analytics since 2001
• Oracle ACE Director (Alumnus)

http://rmoff.dev/talks · http://rmoff.dev/blog · http://rmoff.dev/youtube

@rmoff | #ConfluentVUG | @confluentinc

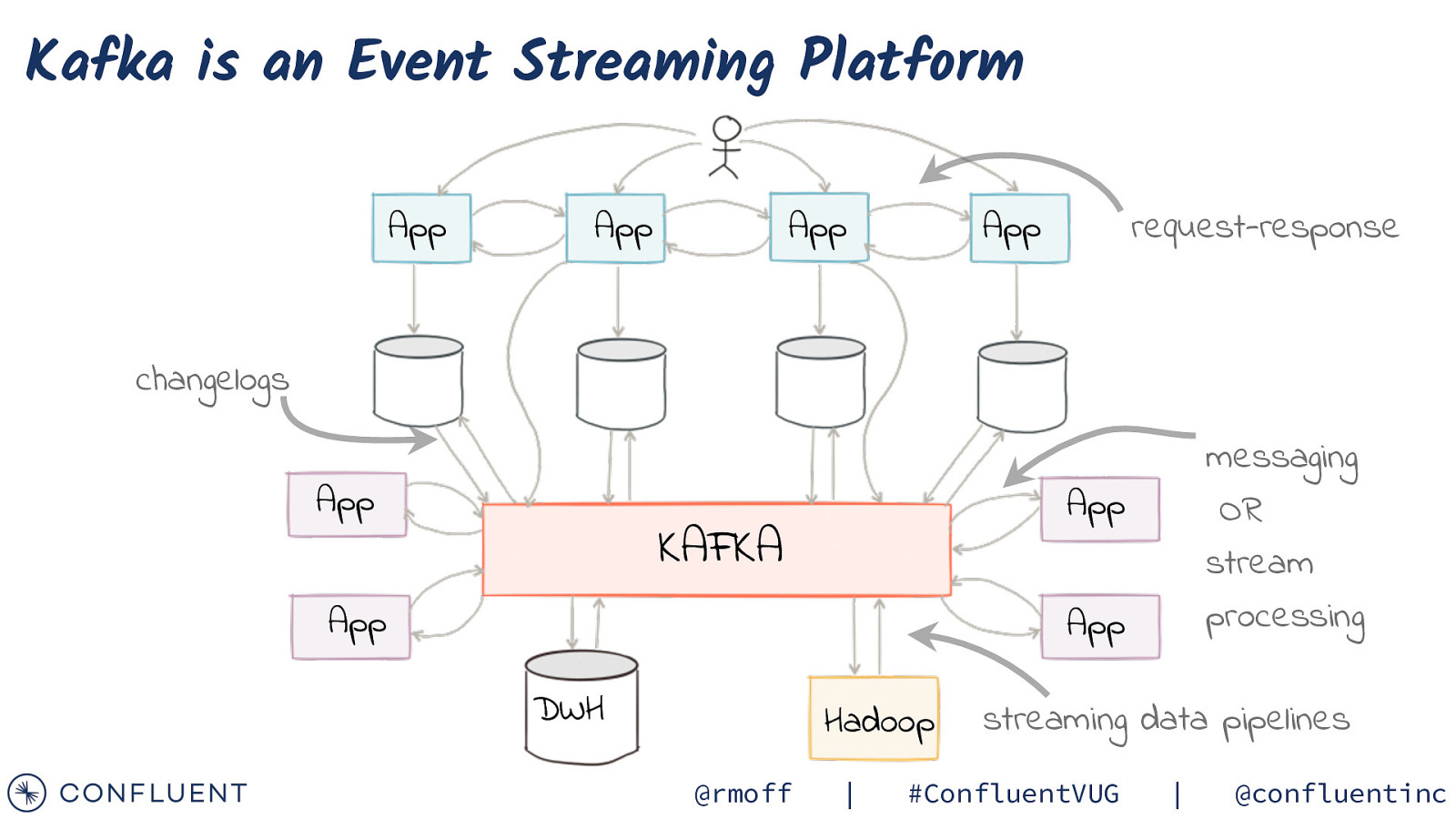

Kafka is an Event Streaming Platform

[Diagram: apps connected through Kafka, alongside a DWH and Hadoop. Labels: request-response, changelogs, messaging OR stream processing, streaming data pipelines]

Streaming Data Pipelines

Photo by Victor Garcia on Unsplash

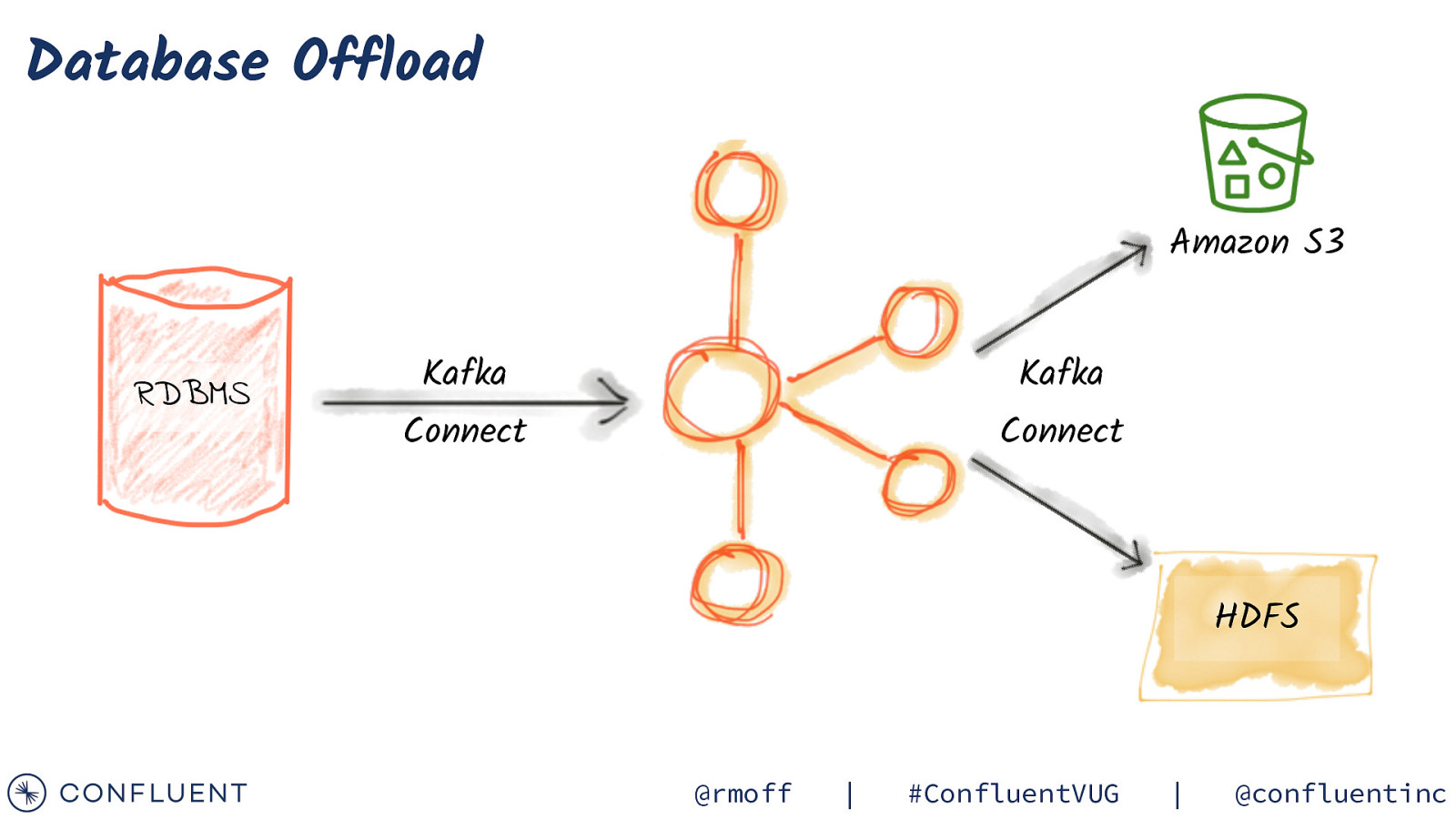

Database Offload

[Diagram: RDBMS → Kafka Connect → Kafka → Kafka Connect → Amazon S3, HDFS]

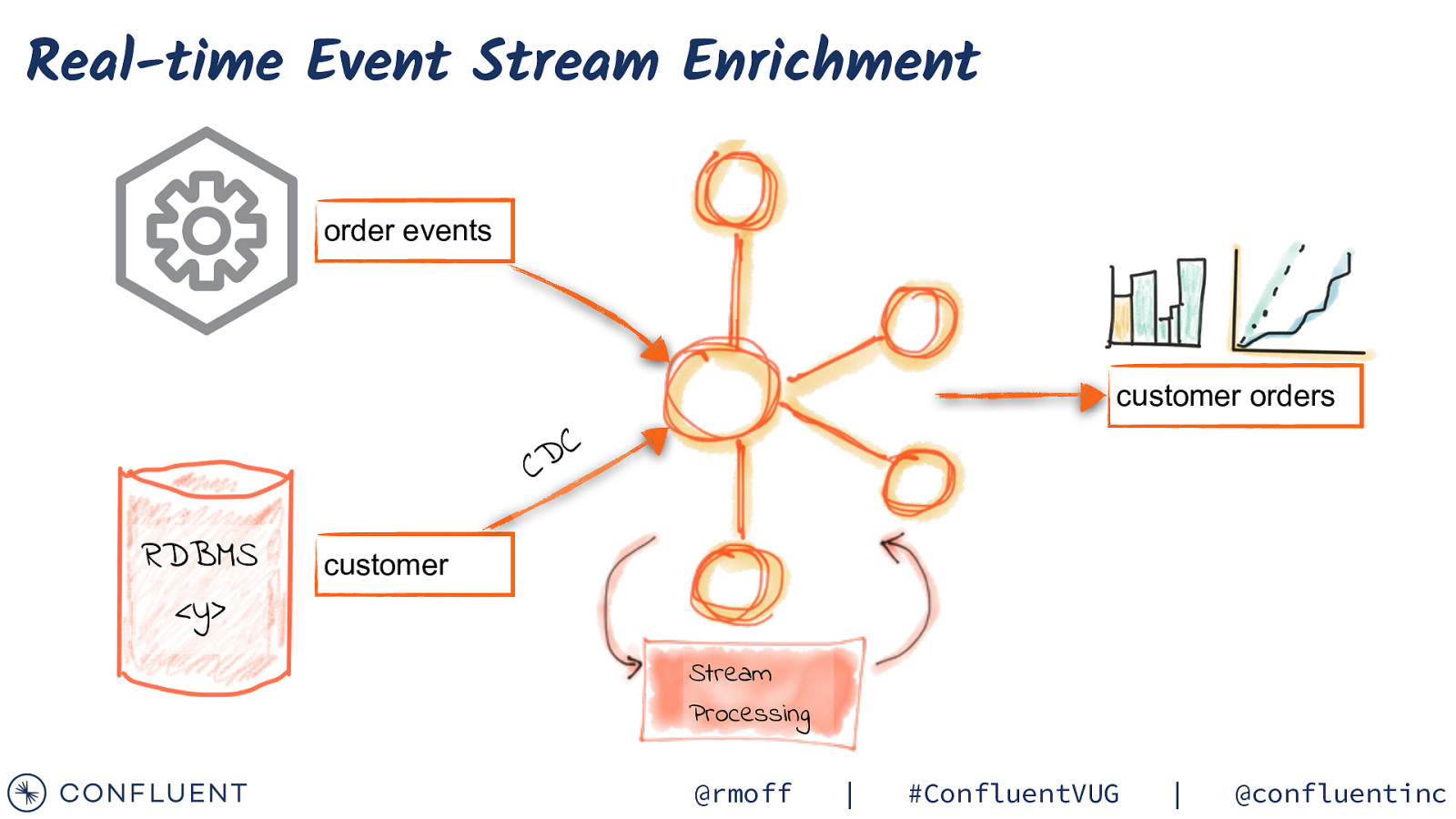

Real-time Event Stream Enrichment

[Diagram: order events, plus customer data captured from an RDBMS via CDC, feed Stream Processing, which produces customer orders]

Building a streaming data pipeline

Stream Integration + Processing

Integration

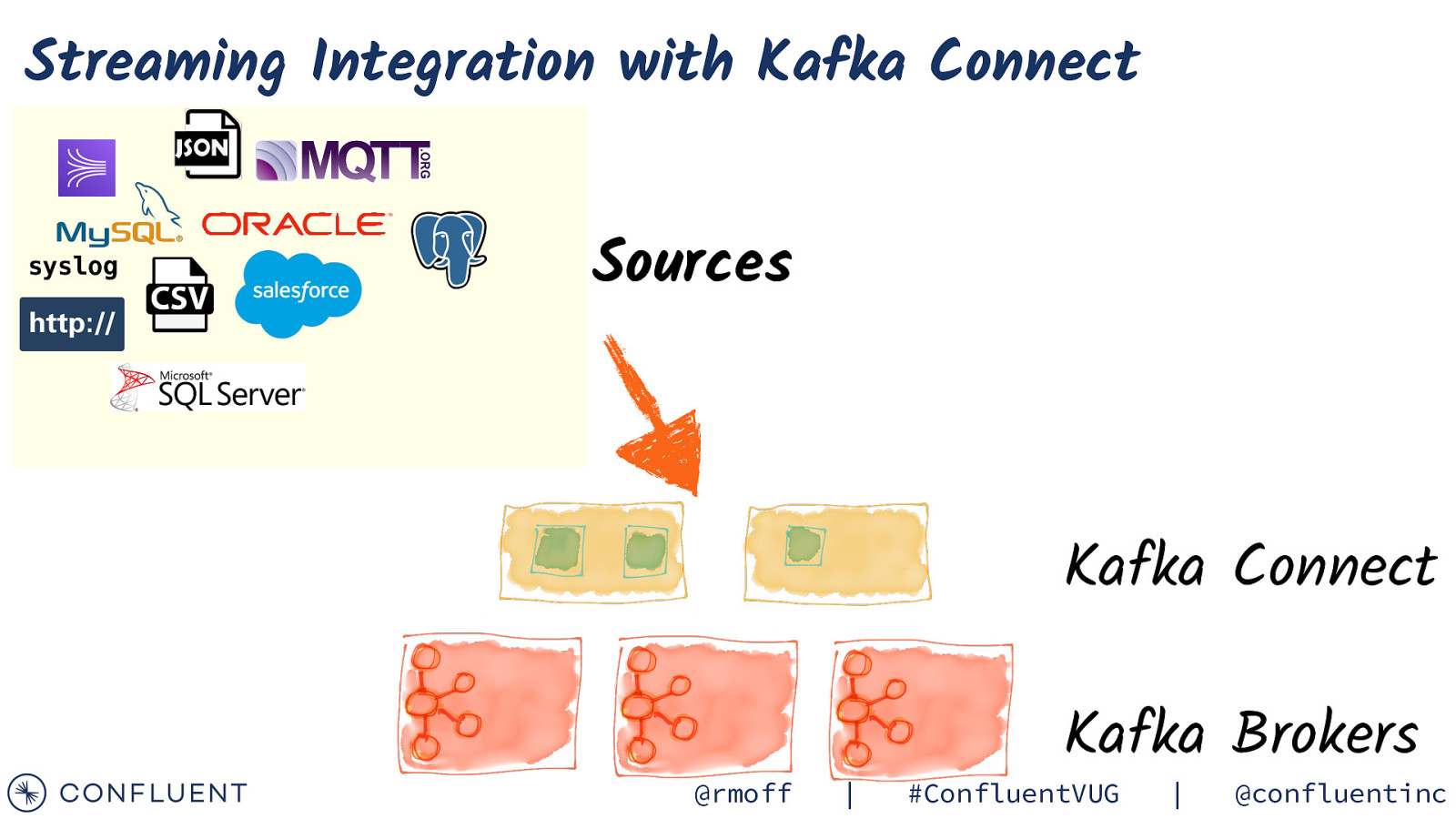

Streaming Integration with Kafka Connect

[Diagram: Sources (syslog) → Kafka Connect → Kafka Brokers]

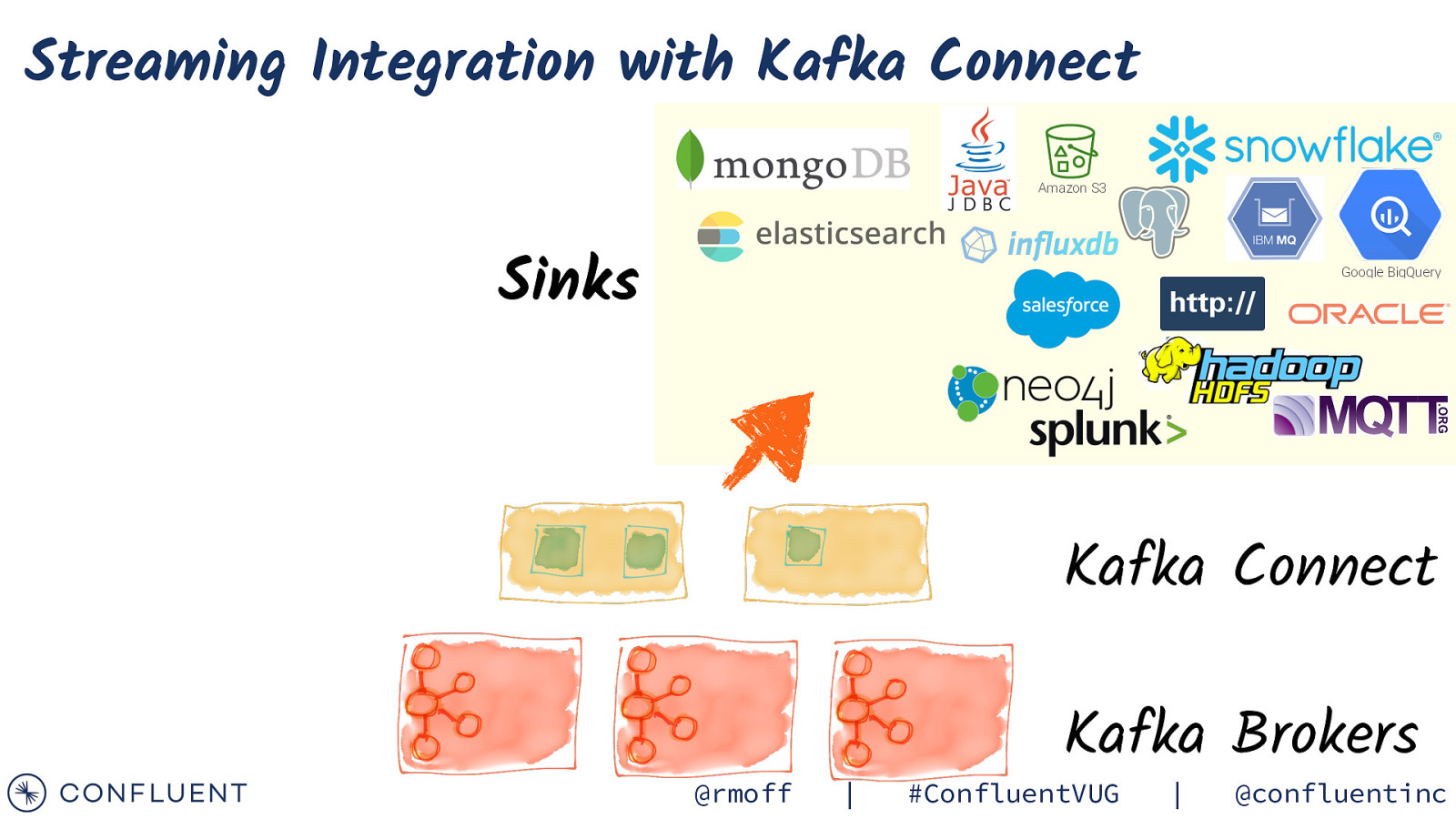

Streaming Integration with Kafka Connect

[Diagram: Kafka Brokers → Kafka Connect → Sinks (Amazon S3, Google BigQuery)]

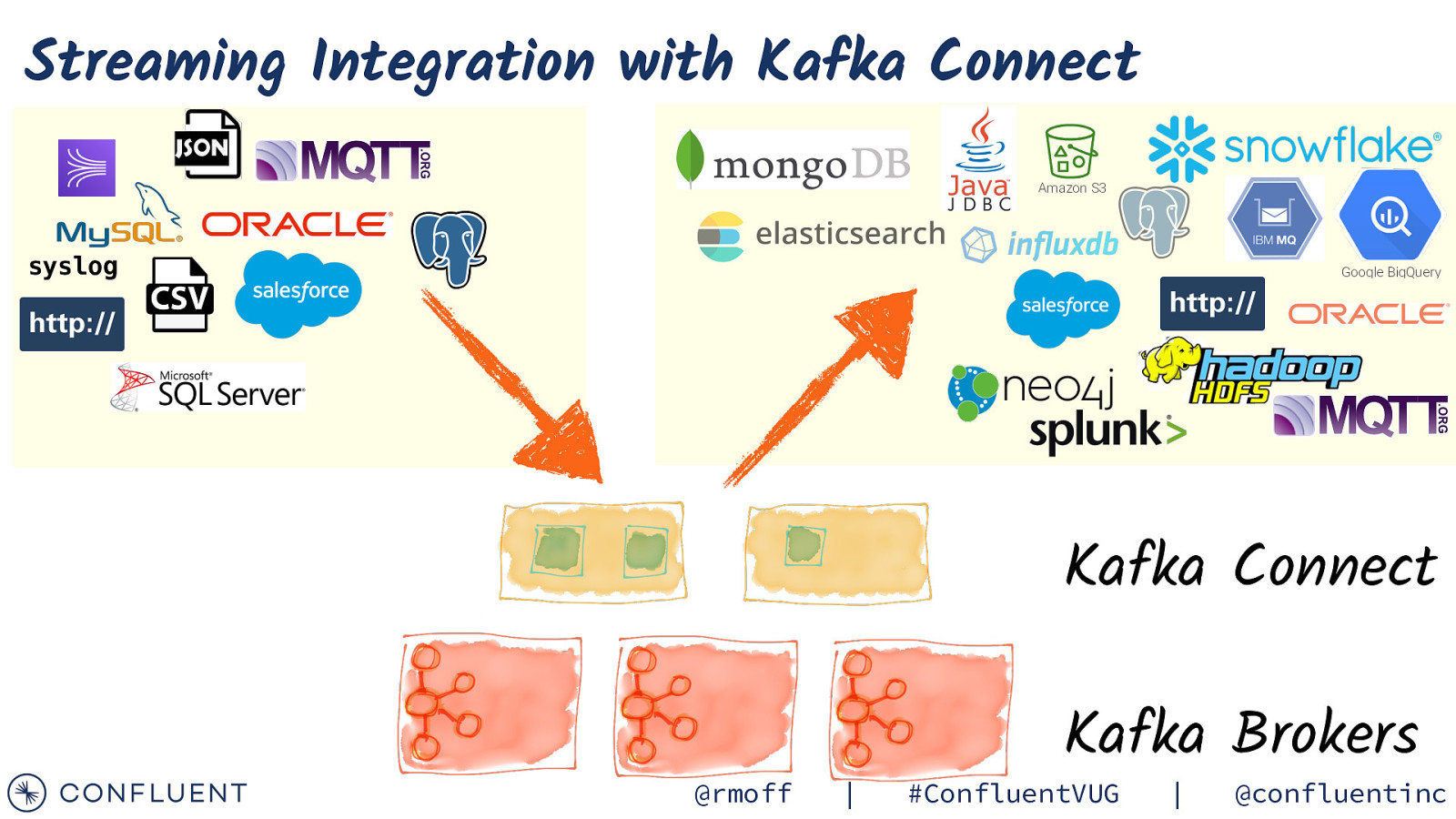

Streaming Integration with Kafka Connect

[Diagram: Kafka Connect alongside the Kafka Brokers, with syslog as a source and Amazon S3 and Google BigQuery as sinks]

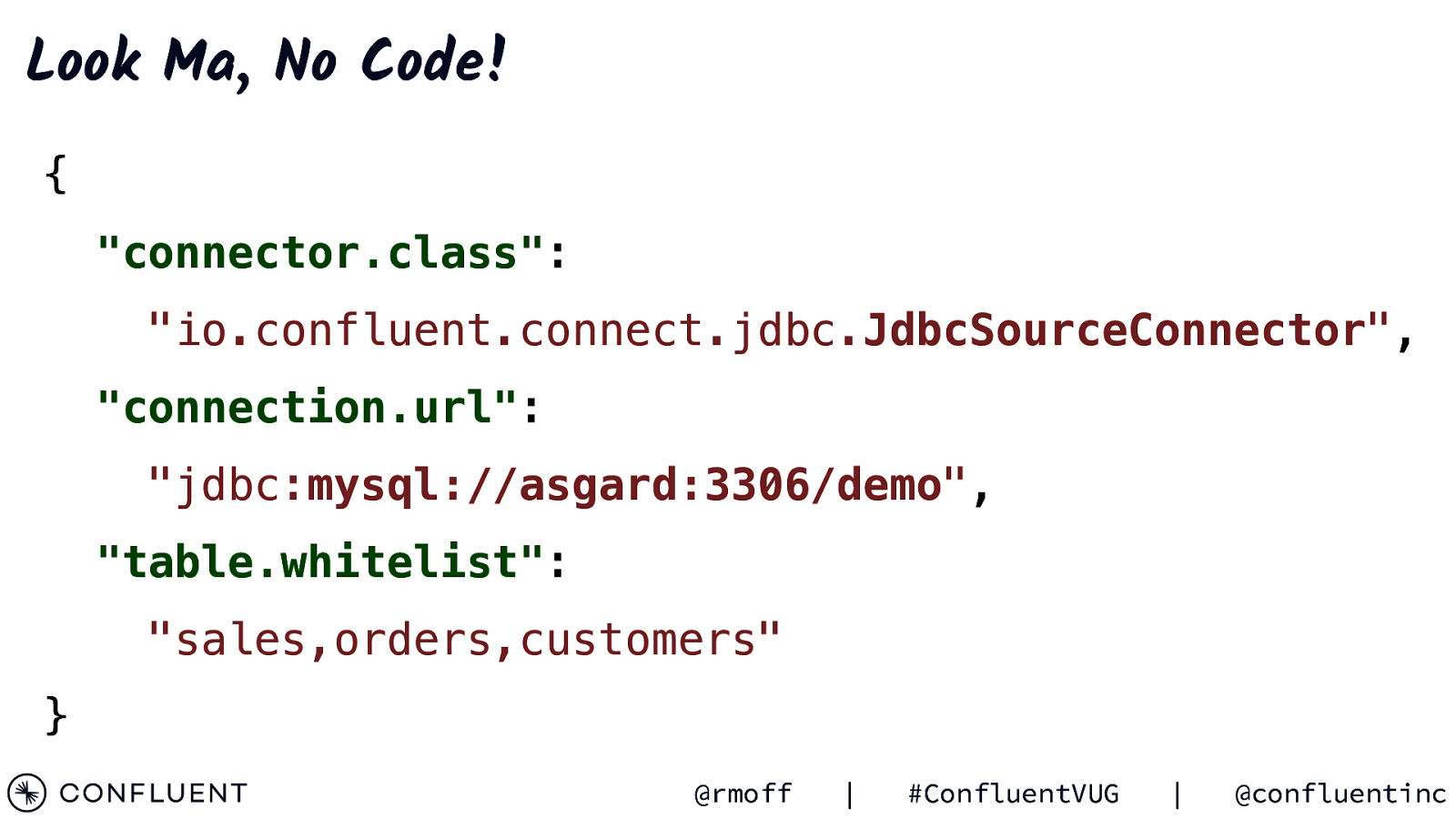

Look Ma, No Code!

    {
      "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
      "connection.url": "jdbc:mysql://asgard:3306/demo",
      "table.whitelist": "sales,orders,customers"
    }
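As a rough sketch of how a configuration like the one above might be submitted: Kafka Connect workers expose a REST API, and `PUT /connectors/<name>/config` creates or updates a connector. The worker address `http://localhost:8083` and the connector name `jdbc-source-demo` are assumptions for illustration, and a real JDBC source would likely need further settings that the slide omits. The function only builds the request; it does not send it.

```python
import json
import urllib.request

# Connector settings exactly as shown on the slide.
CONFIG = {
    "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
    "connection.url": "jdbc:mysql://asgard:3306/demo",
    "table.whitelist": "sales,orders,customers",
}


def build_request(name, config, worker_url="http://localhost:8083"):
    """Build (but do not send) a PUT request for the Connect REST API.

    worker_url is an assumed local worker address, not from the slides.
    """
    return urllib.request.Request(
        url=f"{worker_url}/connectors/{name}/config",
        data=json.dumps(config).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


# "jdbc-source-demo" is a hypothetical connector name.
request = build_request("jdbc-source-demo", CONFIG)
print(request.get_method(), request.full_url)
```

Against a running worker, the request could then be sent with `urllib.request.urlopen(request)`.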

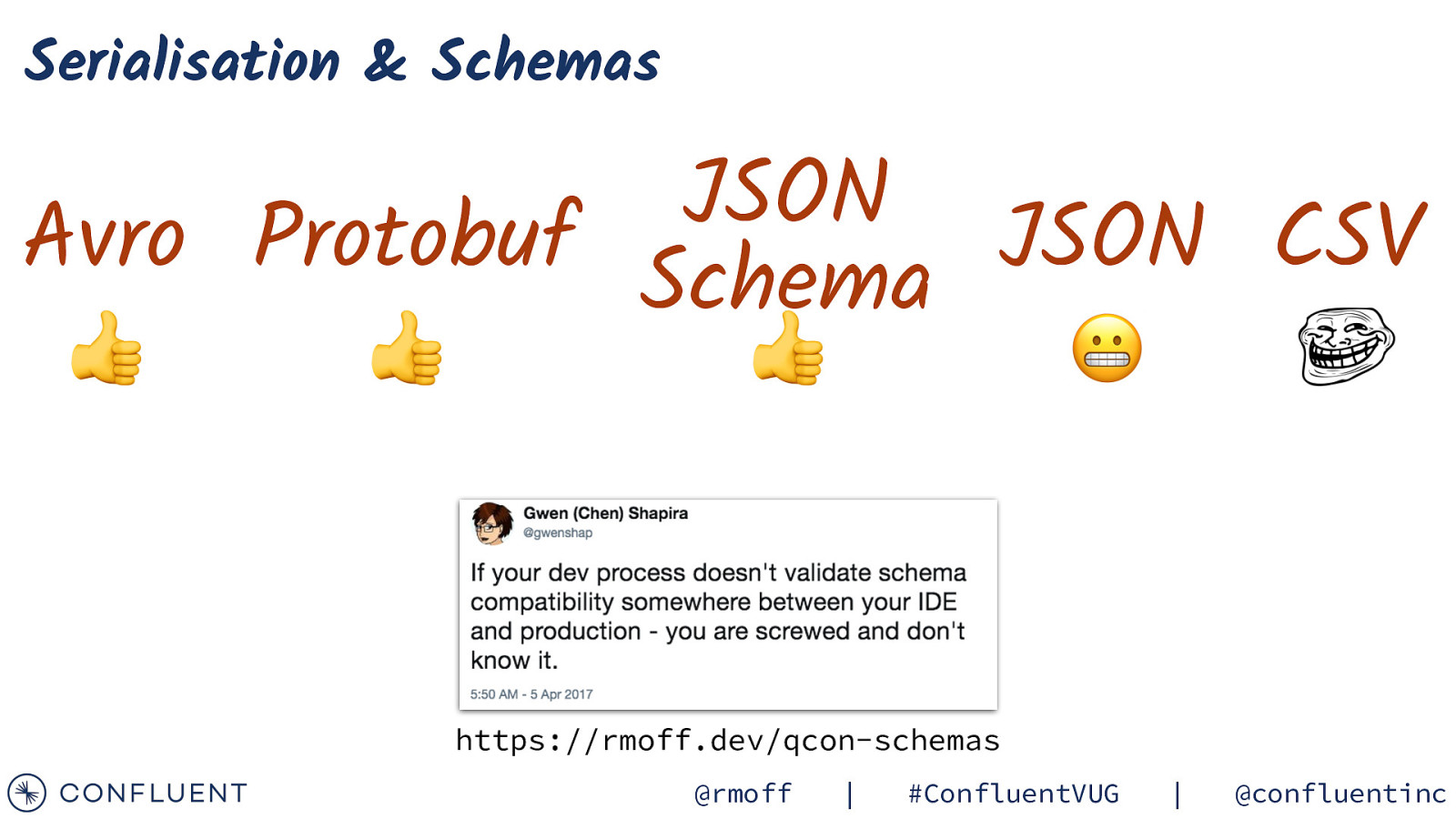

Serialisation & Schemas

Avro 👍 · Protobuf 👍 · JSON Schema 👍 · JSON / CSV 😬

https://rmoff.dev/qcon-schemas

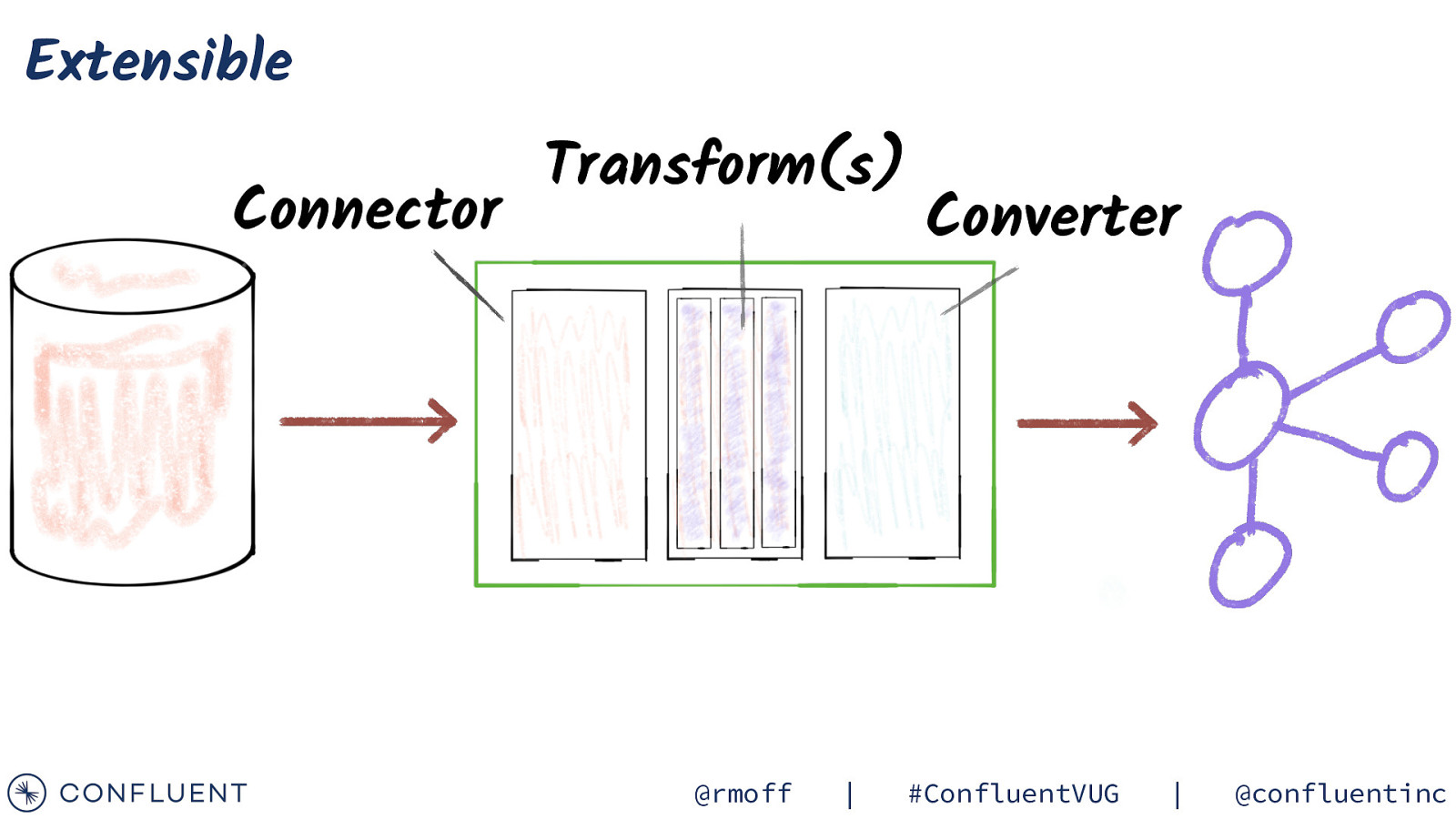

Extensible

[Diagram: Connector → Transform(s) → Converter]

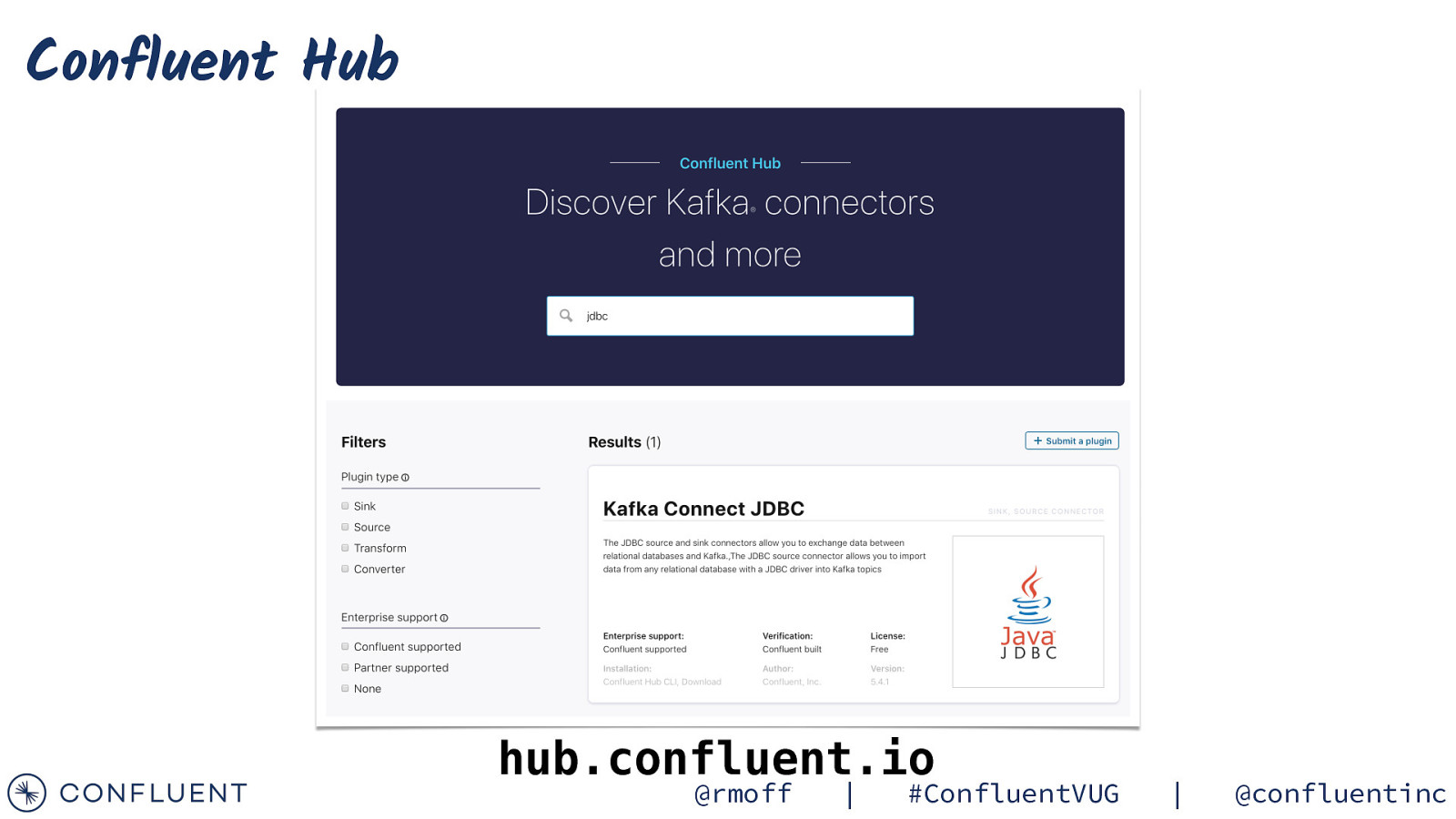

Confluent Hub

hub.confluent.io

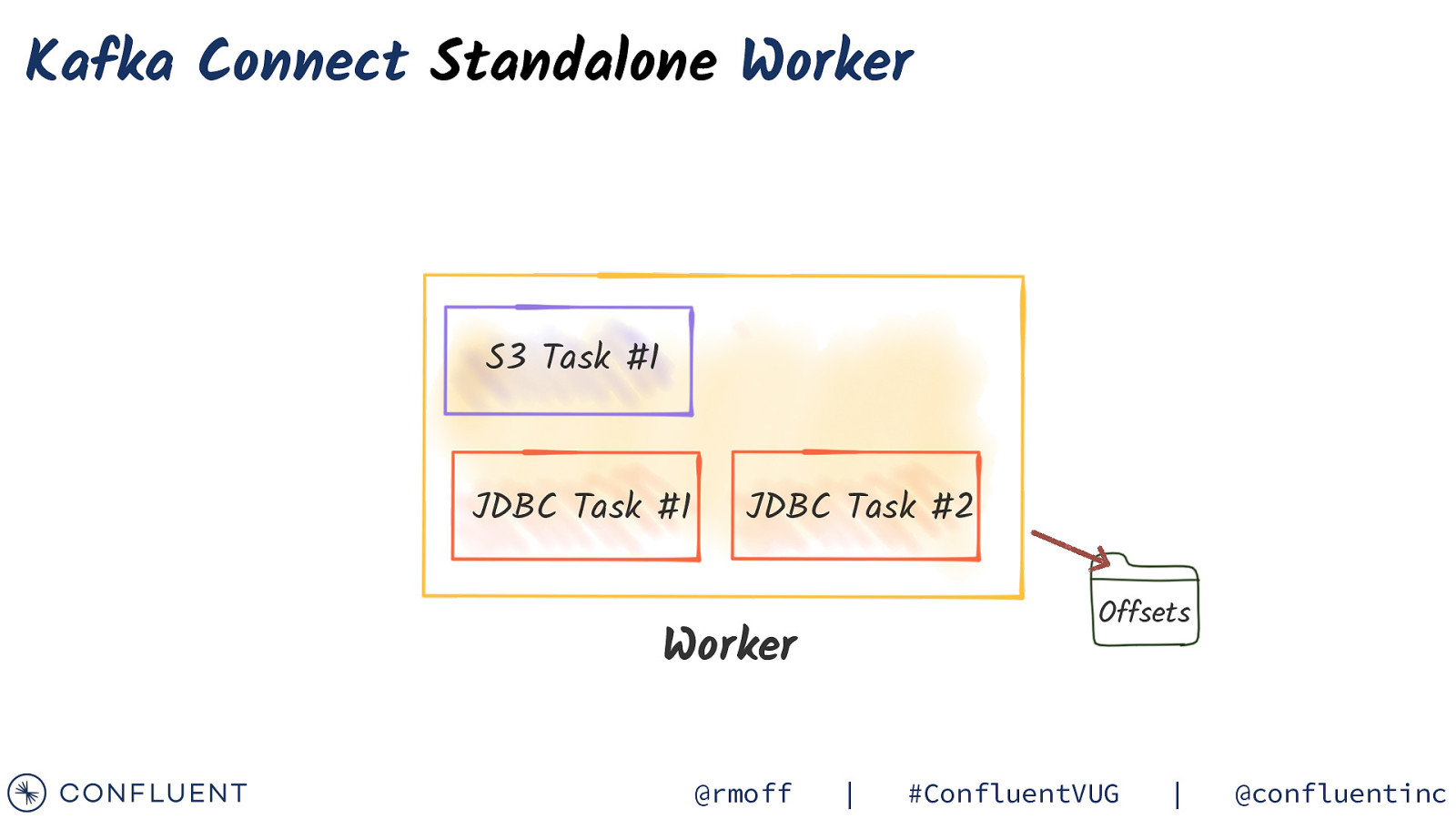

Kafka Connect Standalone Worker

[Diagram: a single Worker running S3 Task #1, JDBC Task #1 and JDBC Task #2, with its own Offsets store]

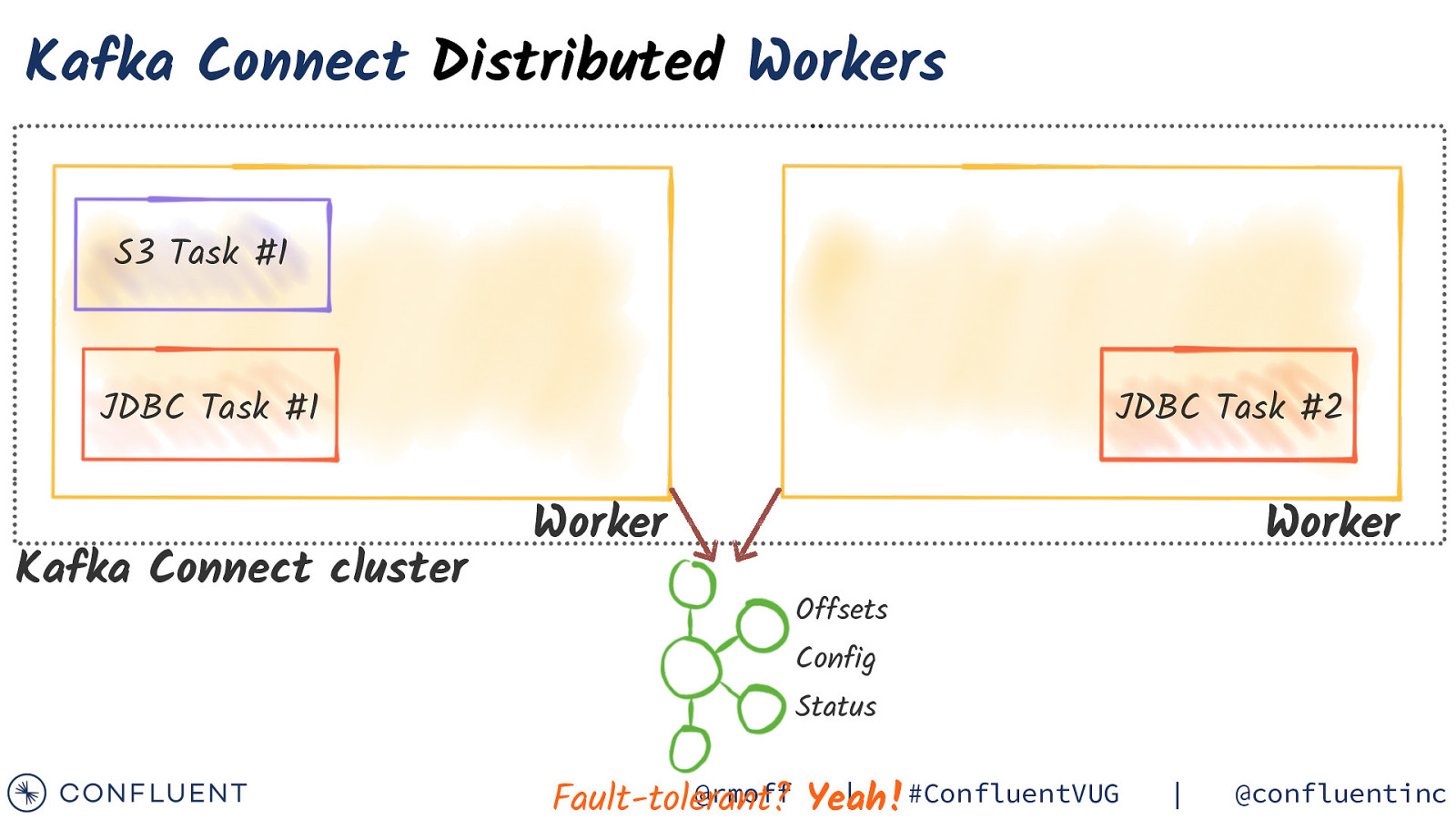

Kafka Connect Distributed Workers

[Diagram: a Kafka Connect cluster of two Workers sharing S3 Task #1, JDBC Task #1 and JDBC Task #2; Offsets, Config and Status are stored in Kafka]

Fault-tolerant? Yeah!

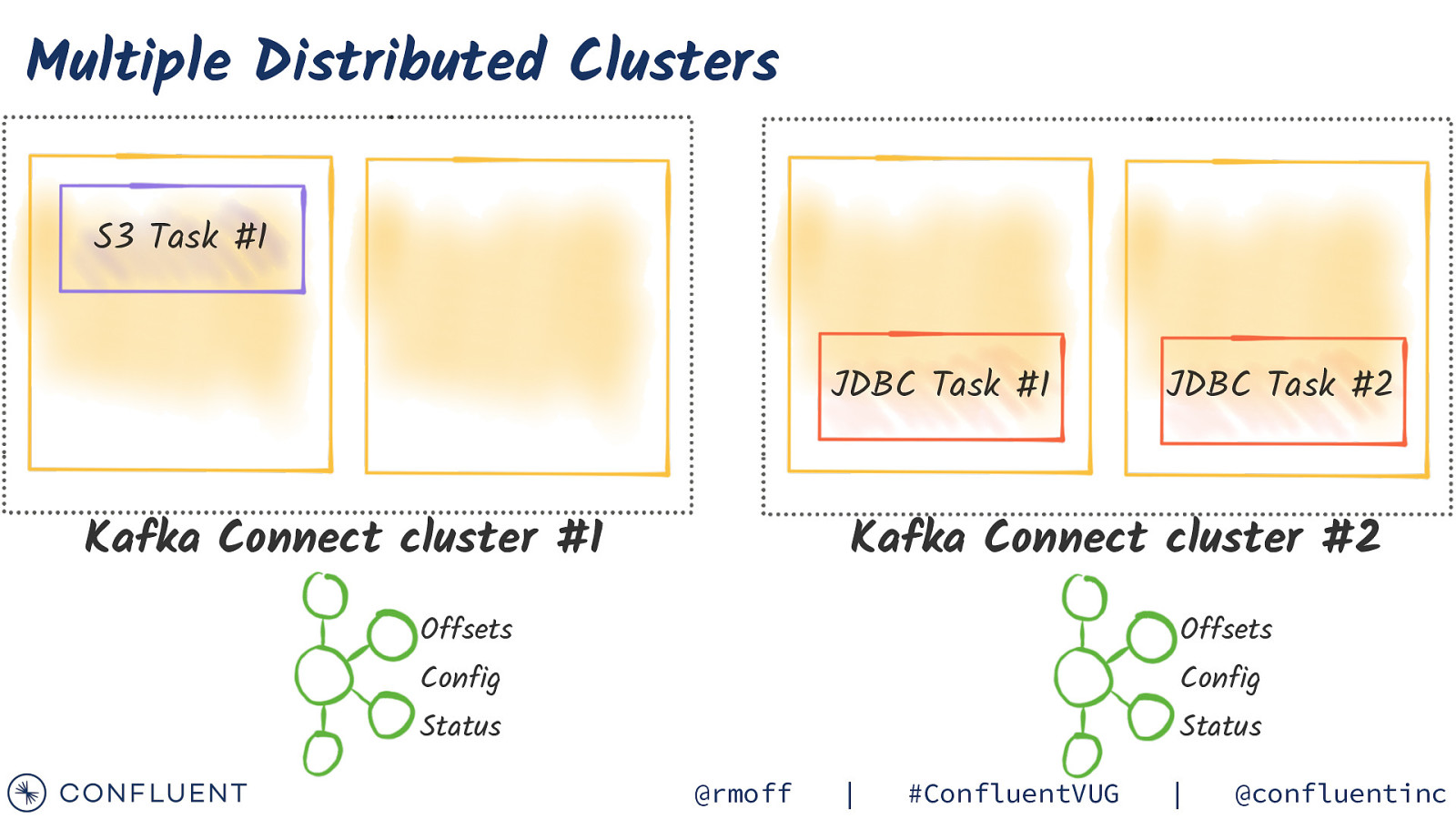

Multiple Distributed Clusters

[Diagram: Kafka Connect cluster #1 and Kafka Connect cluster #2 running S3 Task #1, JDBC Task #1 and JDBC Task #2 between them; each cluster has its own Offsets, Config and Status]

Stream Integration + Processing

Stream Processing

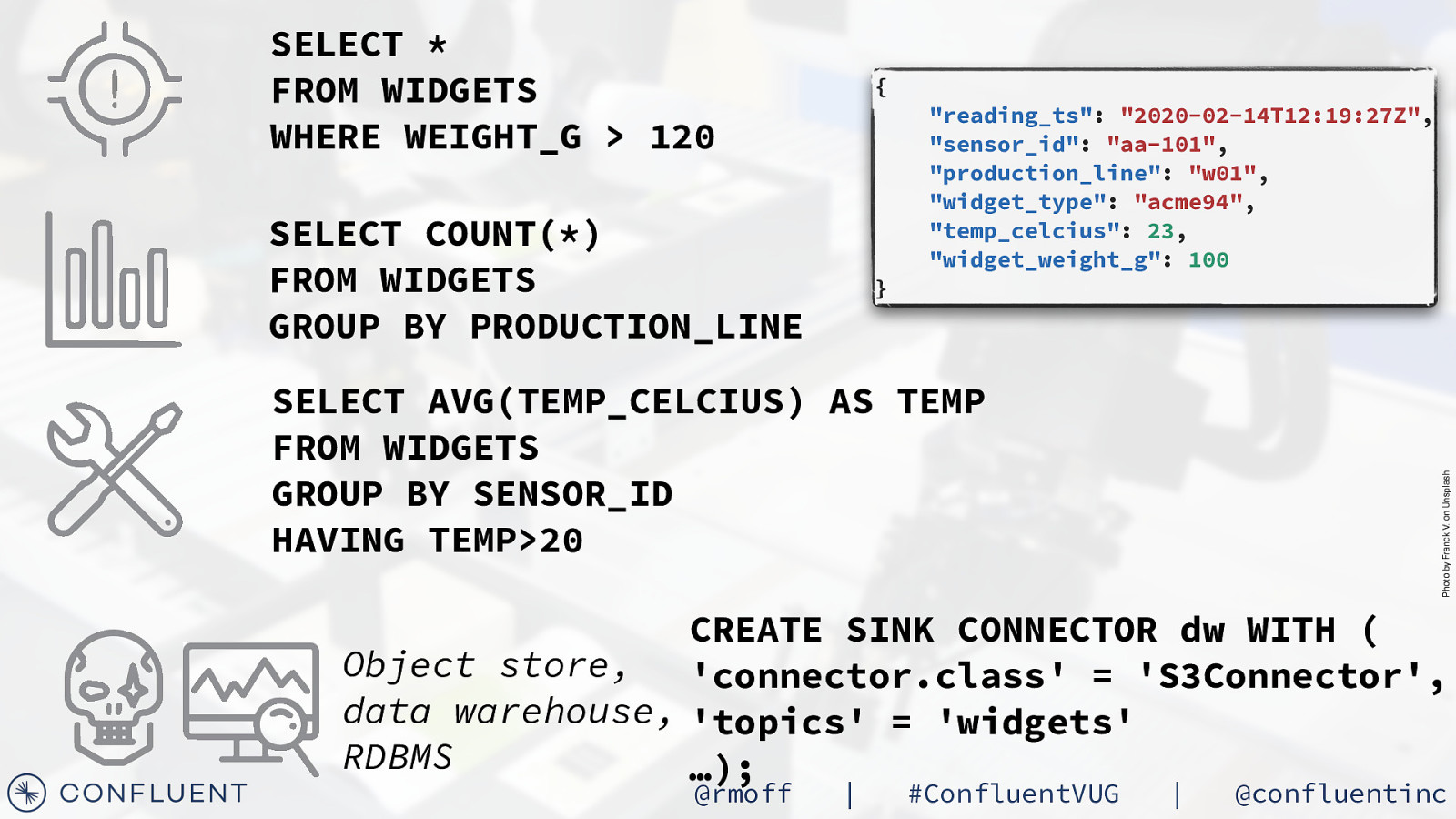

    {
      "reading_ts": "2020-02-14T12:19:27Z",
      "sensor_id": "aa-101",
      "production_line": "w01",
      "widget_type": "acme94",
      "temp_celcius": 23,
      "widget_weight_g": 100
    }

Photo by Franck V. on Unsplash

    SELECT * FROM WIDGETS WHERE WEIGHT_G > 120

    SELECT COUNT(*) FROM WIDGETS GROUP BY PRODUCTION_LINE

    SELECT AVG(TEMP_CELCIUS) AS TEMP FROM WIDGETS GROUP BY SENSOR_ID HAVING TEMP>20

    CREATE SINK CONNECTOR dw WITH (
      'connector.class' = 'S3Connector',
      'topics' = 'widgets'
      …);

[Diagram: the sink connector writes to an object store, data warehouse or RDBMS]

Photo by Franck V. on Unsplash
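To make the three SELECT statements above concrete, here is a minimal Python sketch of what each one computes. It runs over a fixed list, whereas ksqlDB evaluates such queries continuously as events arrive. Only the first reading comes from the slides; the other two are made-up test data, and the field names follow the sample event rather than the column names in the SQL.

```python
from collections import Counter, defaultdict

readings = [
    # From the slides:
    {"sensor_id": "aa-101", "production_line": "w01", "temp_celcius": 23, "widget_weight_g": 100},
    # Hypothetical extra readings for illustration:
    {"sensor_id": "aa-101", "production_line": "w01", "temp_celcius": 25, "widget_weight_g": 130},
    {"sensor_id": "bb-202", "production_line": "w02", "temp_celcius": 18, "widget_weight_g": 125},
]


def heavy_widgets(events, min_weight=120):
    """Filter: keep readings heavier than min_weight grams."""
    return [e for e in events if e["widget_weight_g"] > min_weight]


def count_by_line(events):
    """Aggregate: number of readings per production line."""
    return dict(Counter(e["production_line"] for e in events))


def hot_sensors(events, threshold=20):
    """Aggregate with HAVING: average temperature per sensor, above threshold."""
    temps = defaultdict(list)
    for e in events:
        temps[e["sensor_id"]].append(e["temp_celcius"])
    averages = {sensor: sum(t) / len(t) for sensor, t in temps.items()}
    return {sensor: avg for sensor, avg in averages.items() if avg > threshold}


print(len(heavy_widgets(readings)))
print(count_by_line(readings))
print(hot_sensors(readings))
```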

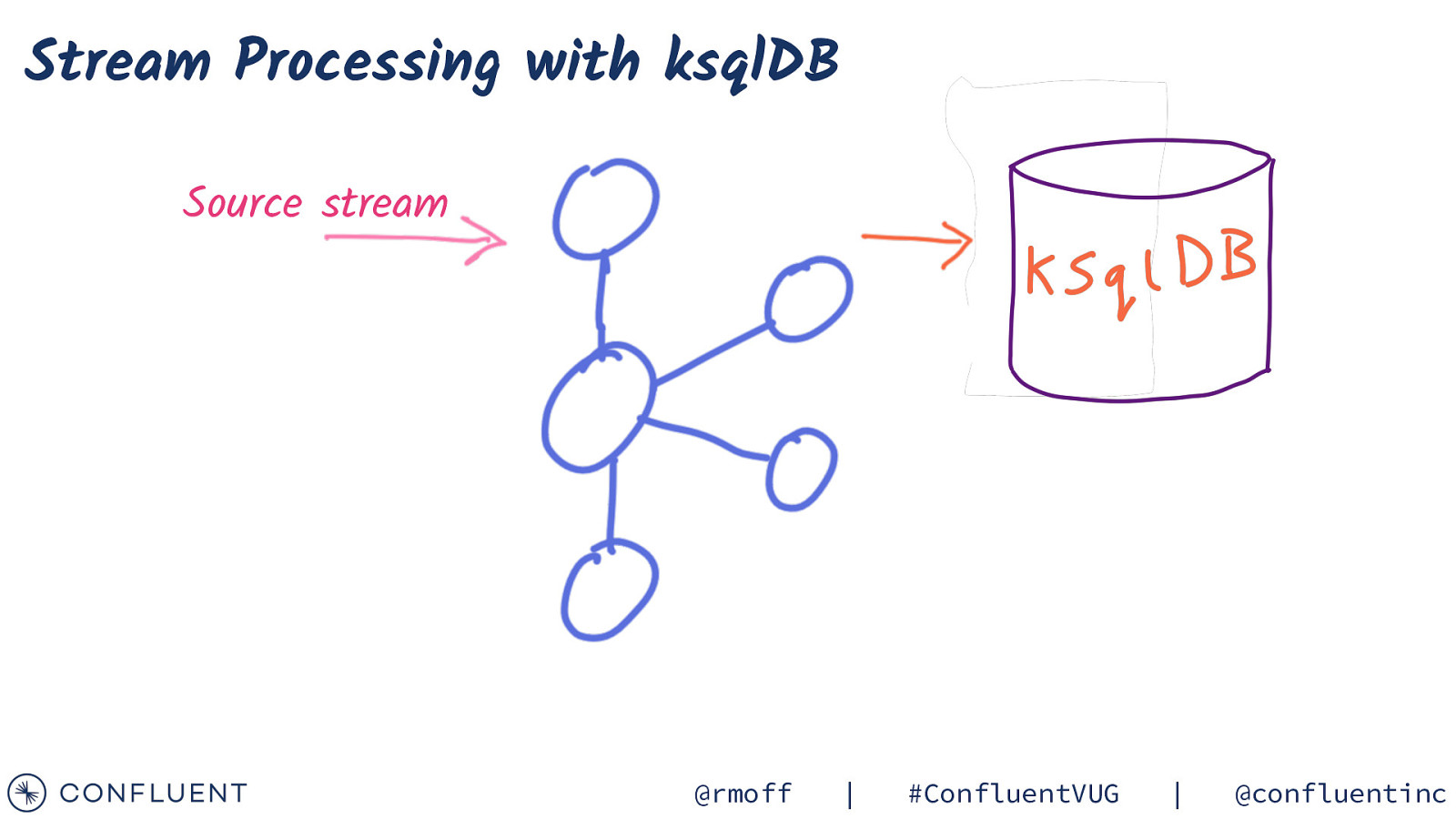

Stream Processing with ksqlDB

[Diagram: Source stream]

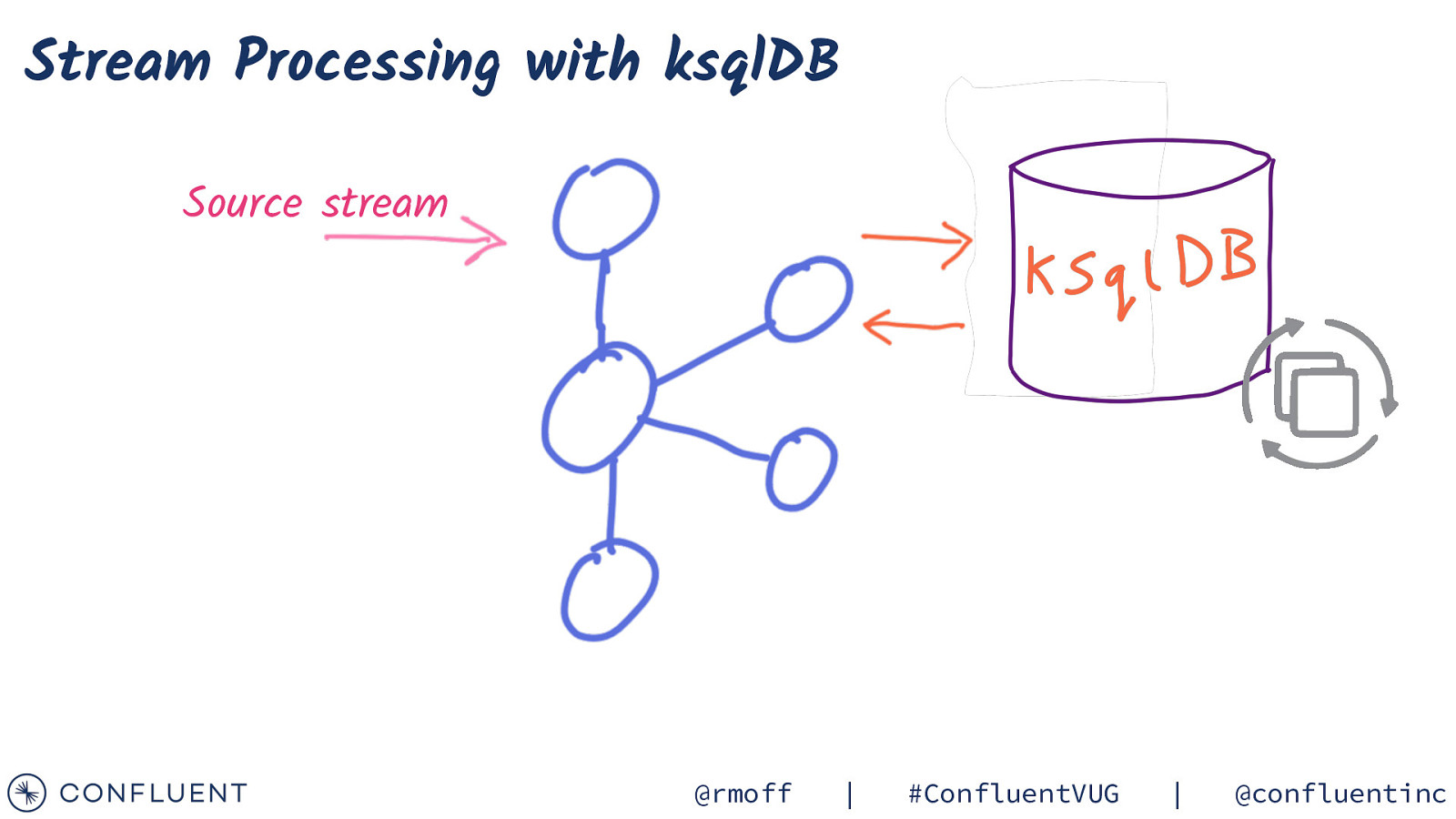

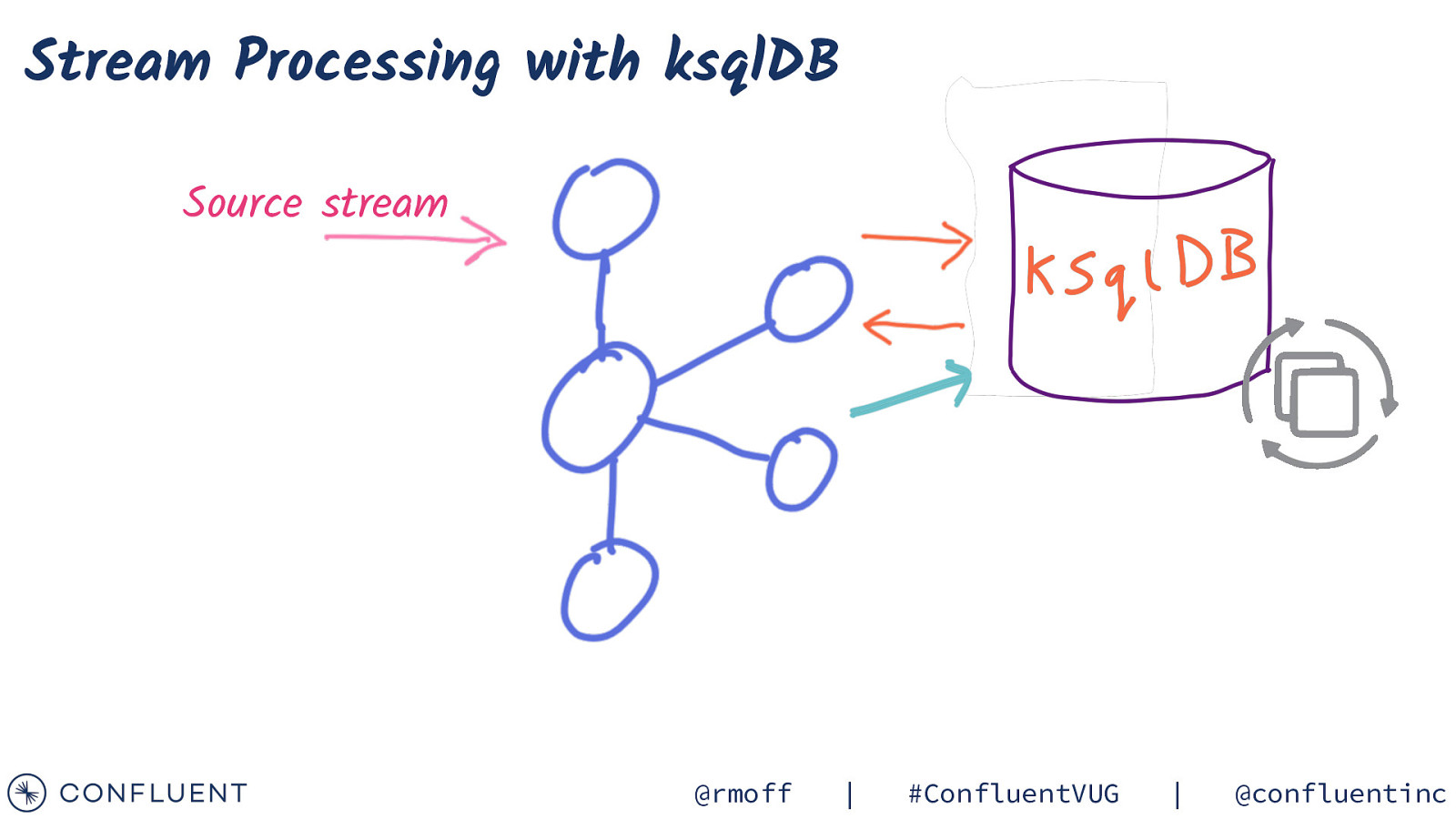

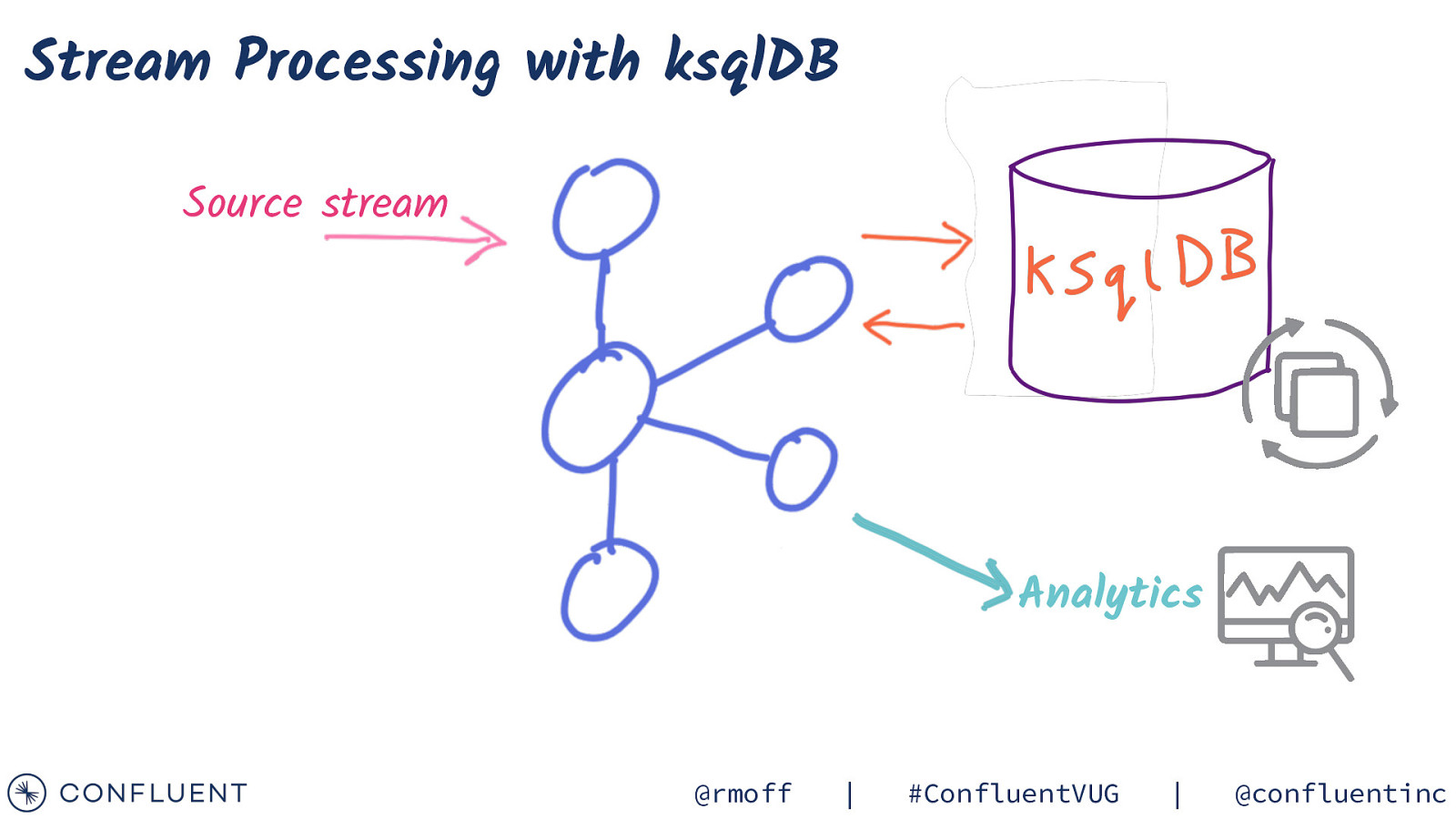

Stream Processing with ksqlDB

[Diagram: Source stream → Analytics]

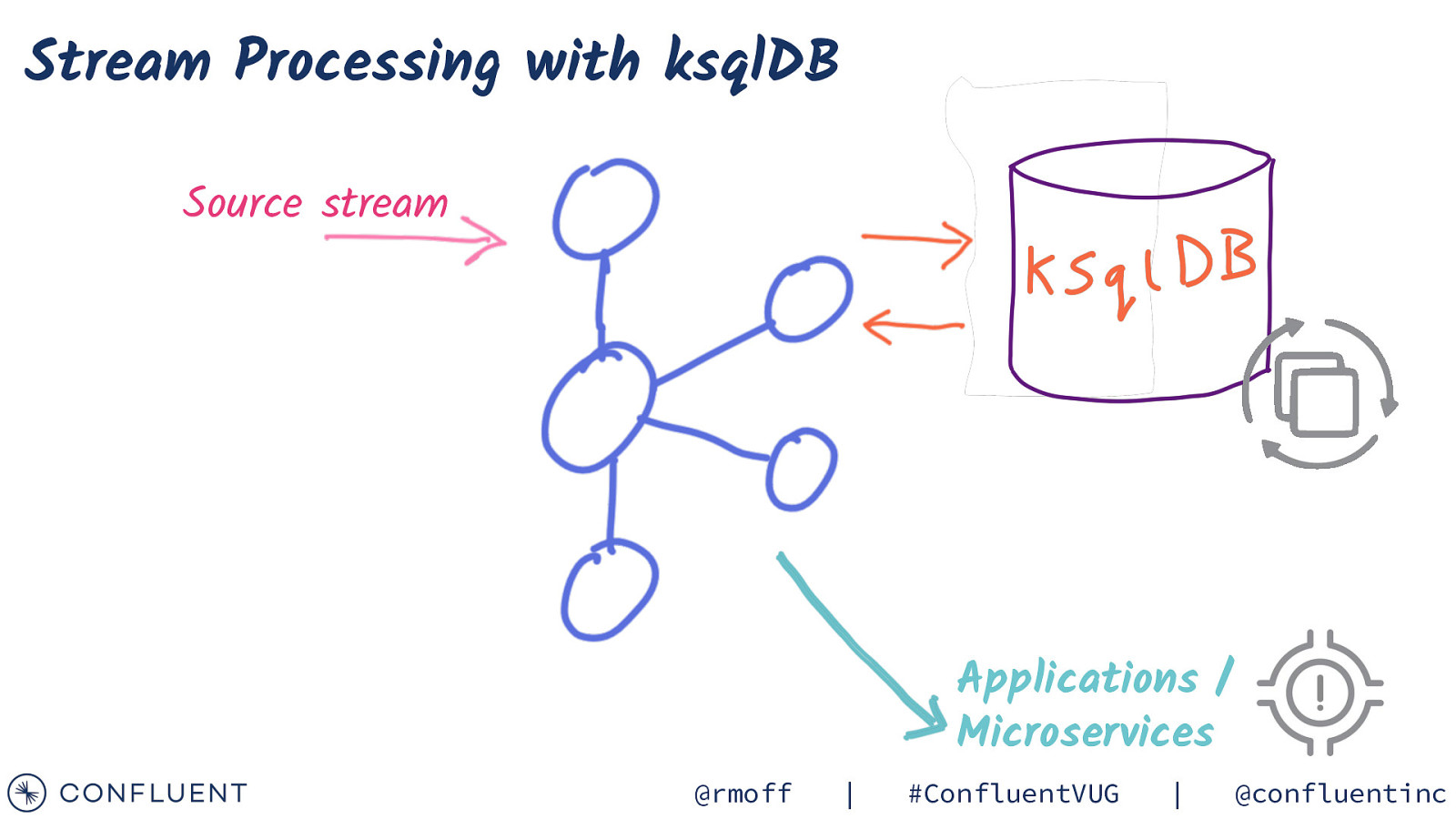

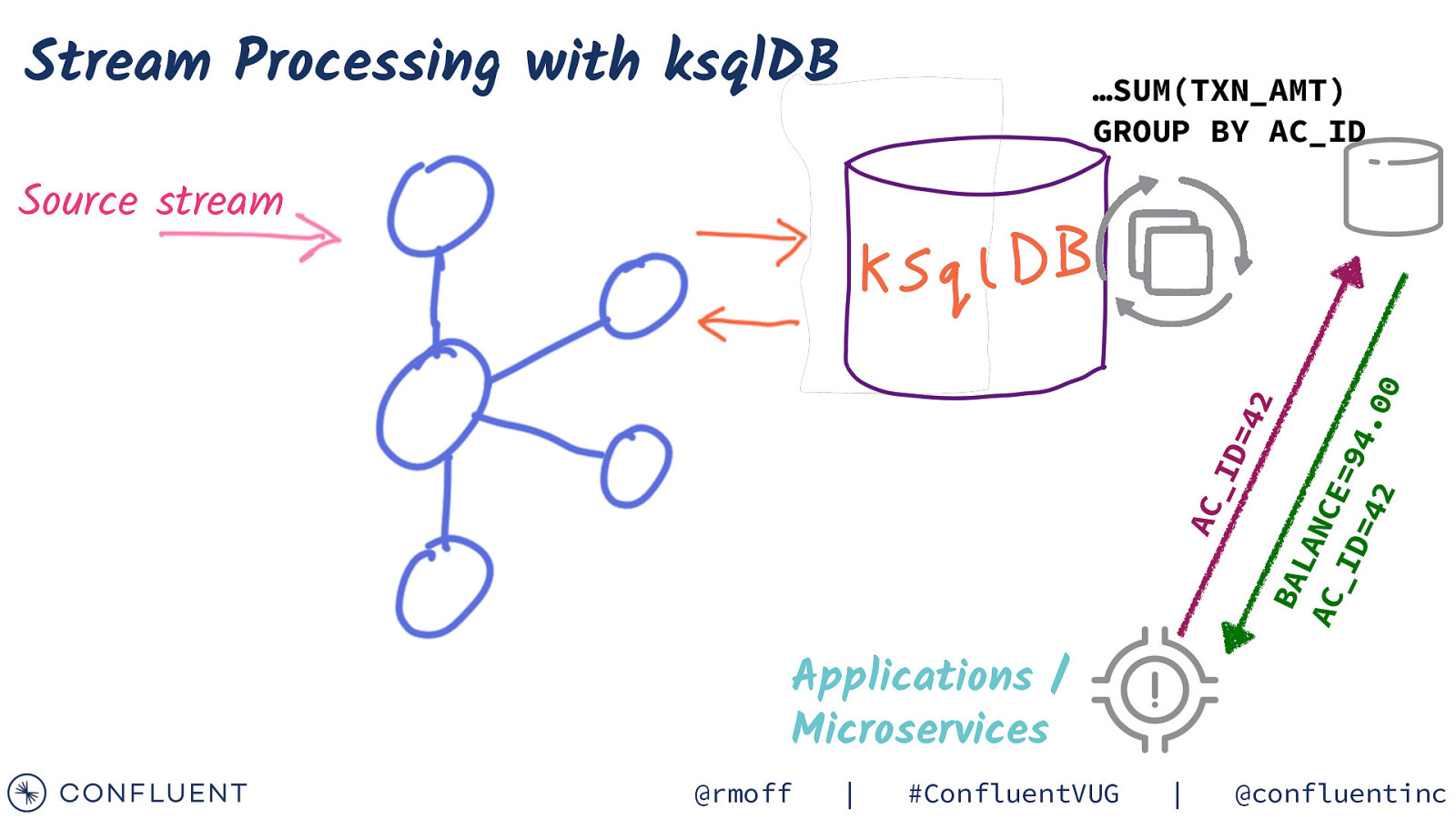

Stream Processing with ksqlDB

[Diagram: Source stream → Applications / Microservices]

Stream Processing with ksqlDB

    …SUM(TXN_AMT) GROUP BY AC_ID

[Diagram: Source stream → Applications / Microservices. An application looks up AC_ID=42 and receives AC_ID=42, BALANCE=94.00]
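The idea on this slide, a running `SUM(TXN_AMT) GROUP BY AC_ID` that an application can look up by key, can be sketched in a few lines of Python. The class name, field names and transaction amounts below are illustrative assumptions; only the account ID 42 and the balance of 94.00 come from the slide.

```python
from collections import defaultdict


class BalanceView:
    """Running SUM(TXN_AMT) GROUP BY AC_ID, updated once per event."""

    def __init__(self):
        self._balances = defaultdict(float)

    def apply(self, txn):
        """Fold one transaction event into the materialised state."""
        self._balances[txn["ac_id"]] += txn["txn_amt"]

    def lookup(self, ac_id):
        """Point lookup by key; returns None for an unknown account."""
        return self._balances.get(ac_id)


view = BalanceView()
# Hypothetical transactions chosen so that account 42 ends at 94.00.
for txn in [
    {"ac_id": 42, "txn_amt": 100.0},
    {"ac_id": 7, "txn_amt": 20.0},
    {"ac_id": 42, "txn_amt": -6.0},
]:
    view.apply(txn)

print(view.lookup(42))
```

The application never scans the stream itself; it asks for the current value of one key, and the state behind that answer is maintained incrementally.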

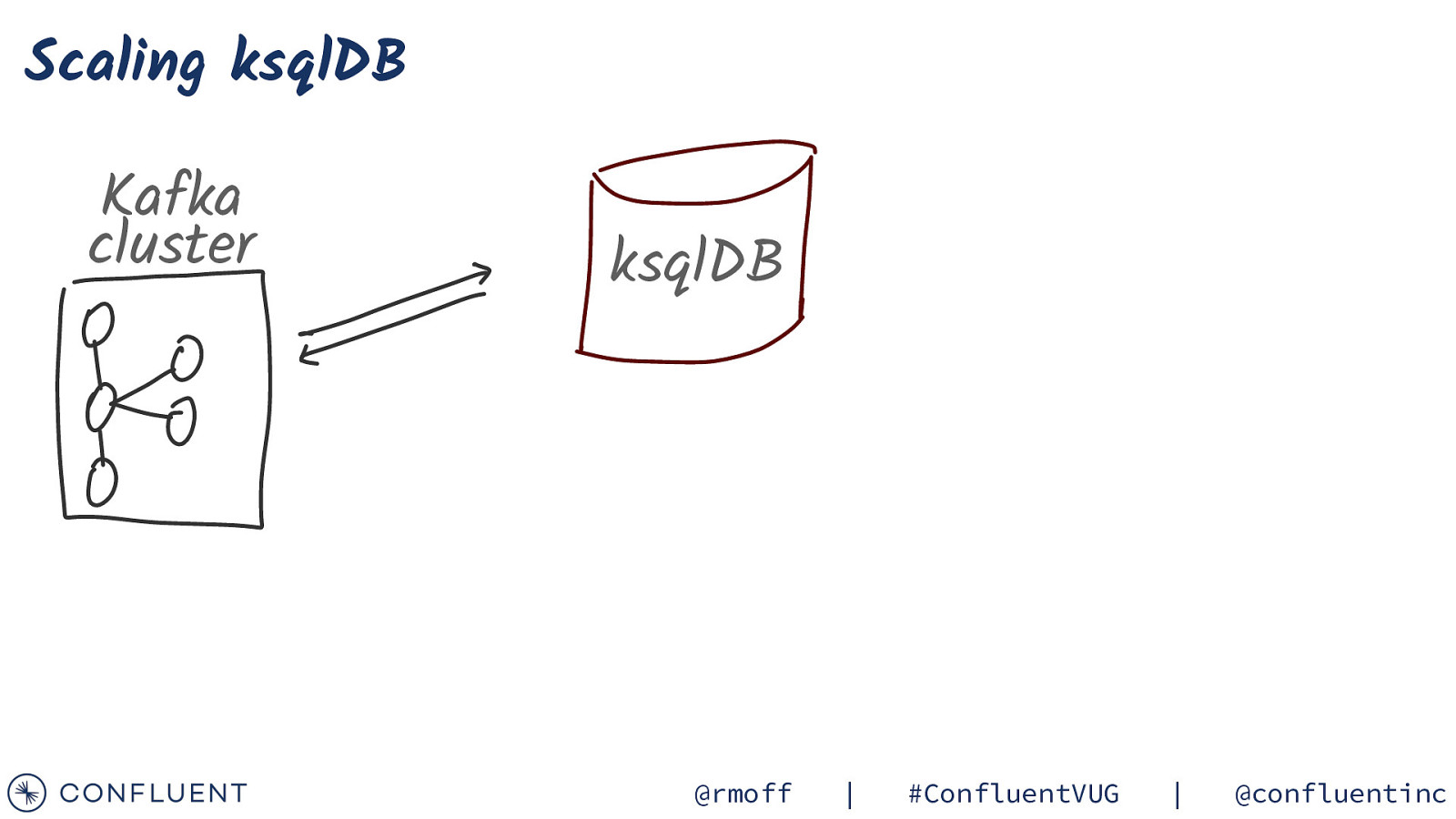

Scaling ksqlDB

[Diagram: a Kafka cluster and a single ksqlDB instance]

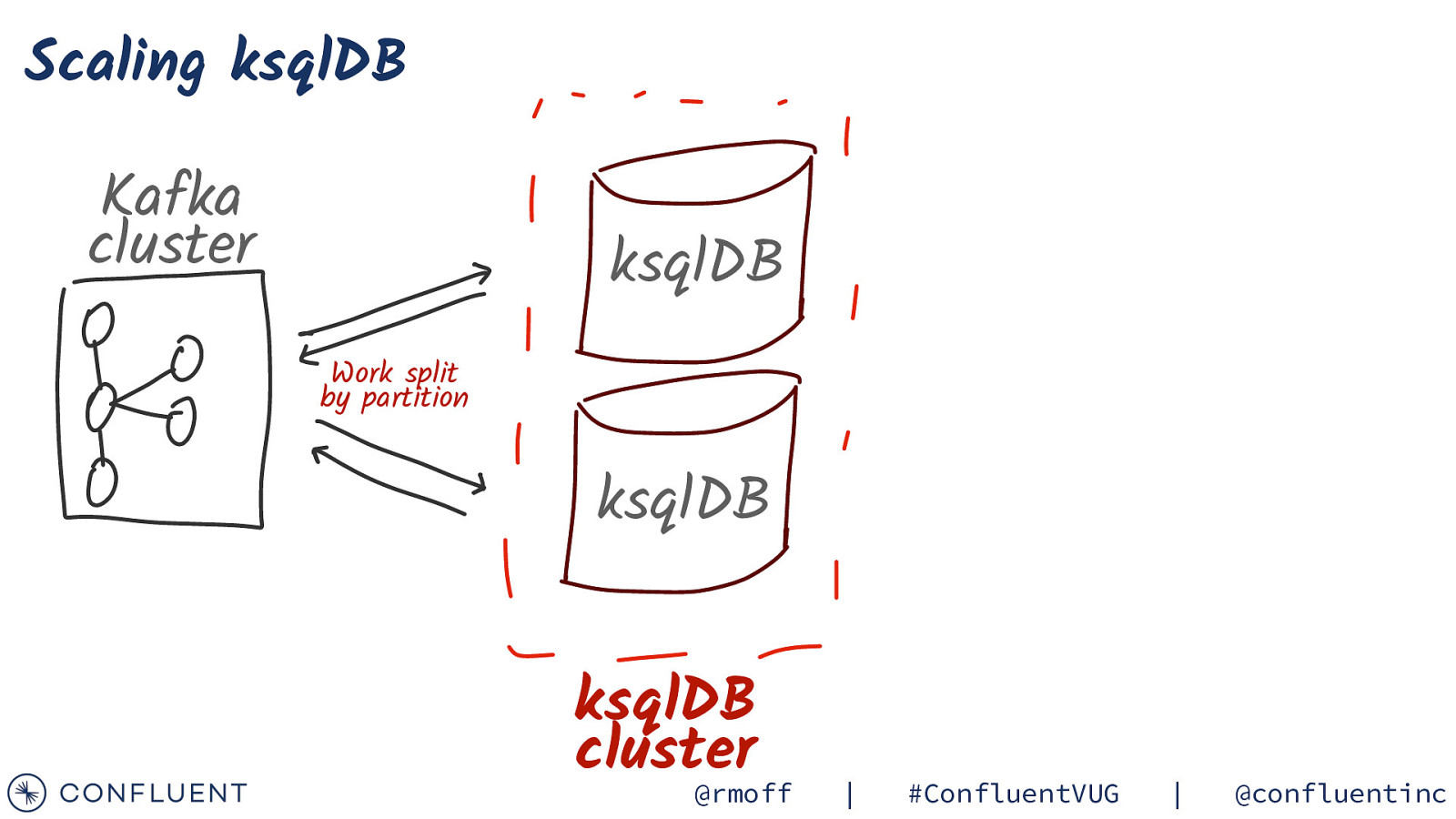

Scaling ksqlDB

[Diagram: a Kafka cluster and a ksqlDB cluster of several ksqlDB instances]

Work split by partition
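A simplified illustration of "work split by partition": each topic partition is handled by exactly one instance in the cluster, so adding instances spreads the load until there are more instances than partitions. The round-robin rule and instance names below are assumptions for the sketch; the real assignment is negotiated through Kafka's consumer group protocol.

```python
def assign_partitions(num_partitions, instances):
    """Spread partition numbers across instances round-robin (illustrative only)."""
    assignment = {name: [] for name in instances}
    for partition in range(num_partitions):
        owner = instances[partition % len(instances)]
        assignment[owner].append(partition)
    return assignment


# One instance does all the work...
print(assign_partitions(6, ["ksqldb-1"]))
# ...three instances share it...
print(assign_partitions(6, ["ksqldb-1", "ksqldb-2", "ksqldb-3"]))
# ...and instances beyond the partition count sit idle.
print(assign_partitions(2, ["ksqldb-1", "ksqldb-2", "ksqldb-3"]))
```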

Think Applications, not database instances

[Diagram: one Kafka cluster serving three separate ksqlDB clusters: Fraud, Inventory and Orders]

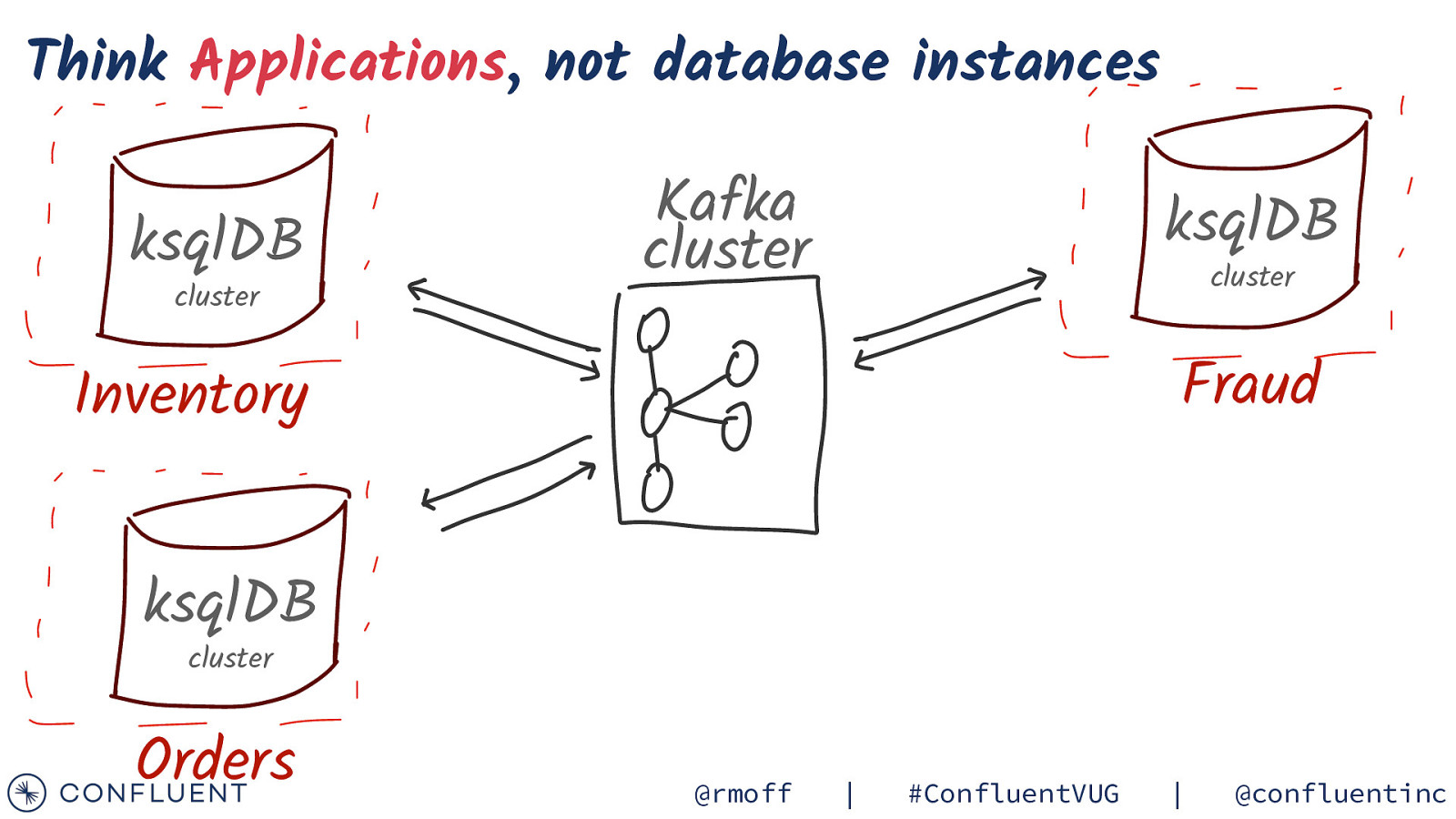

Let’s Build It!

[Diagram: App → Producer API → Rating events]

    {
      "rating_id": 5313,
      "user_id": 3,
      "stars": 4,
      "route_id": 6975,
      "rating_time": 1519304105213,
      "channel": "web",
      "message": "worst. flight. ever. #neveragain"
    }

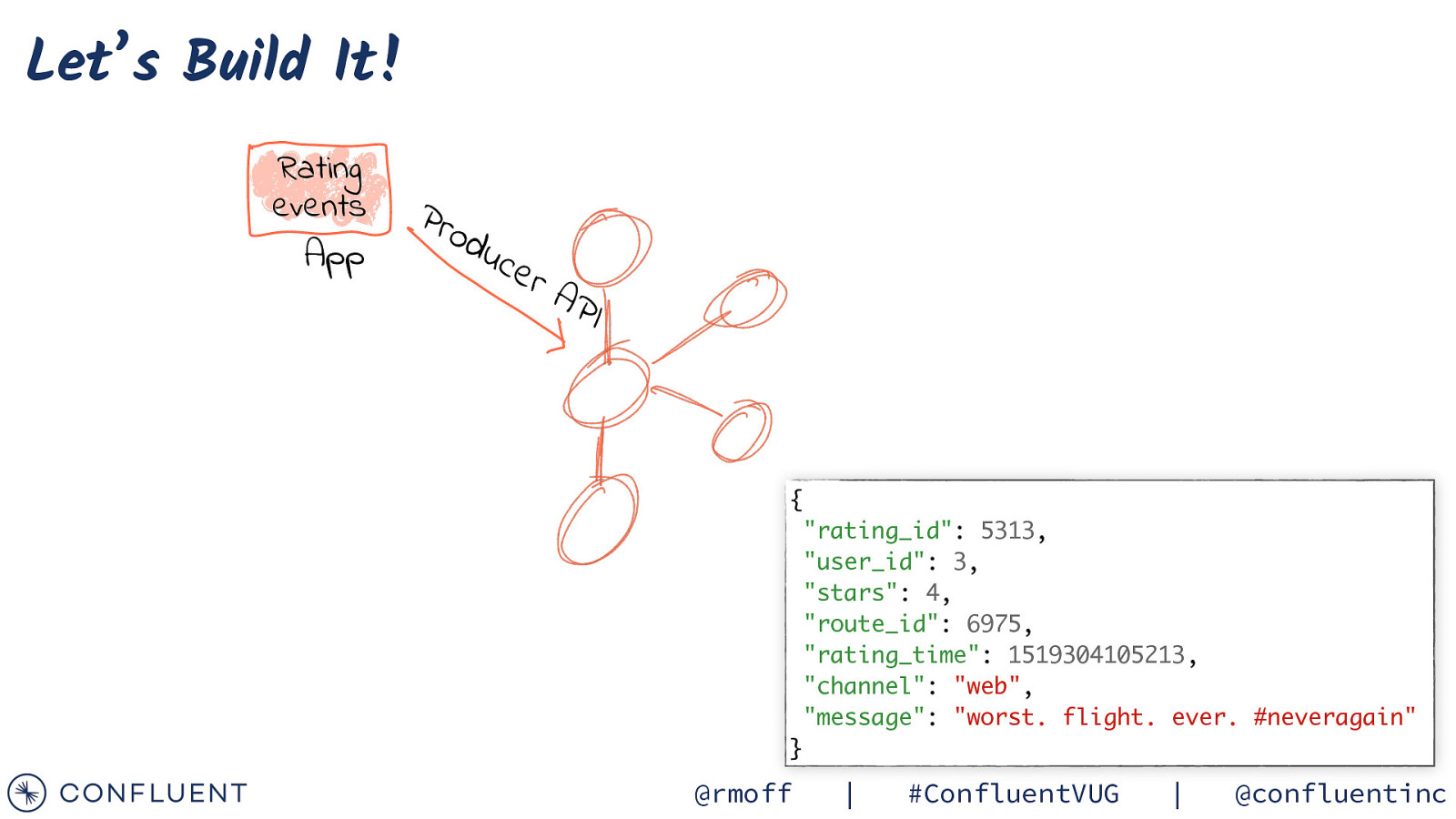

Let’s Build It!

[Diagram: App → Producer API → Rating events; RDBMS → Kafka Connect → User data]

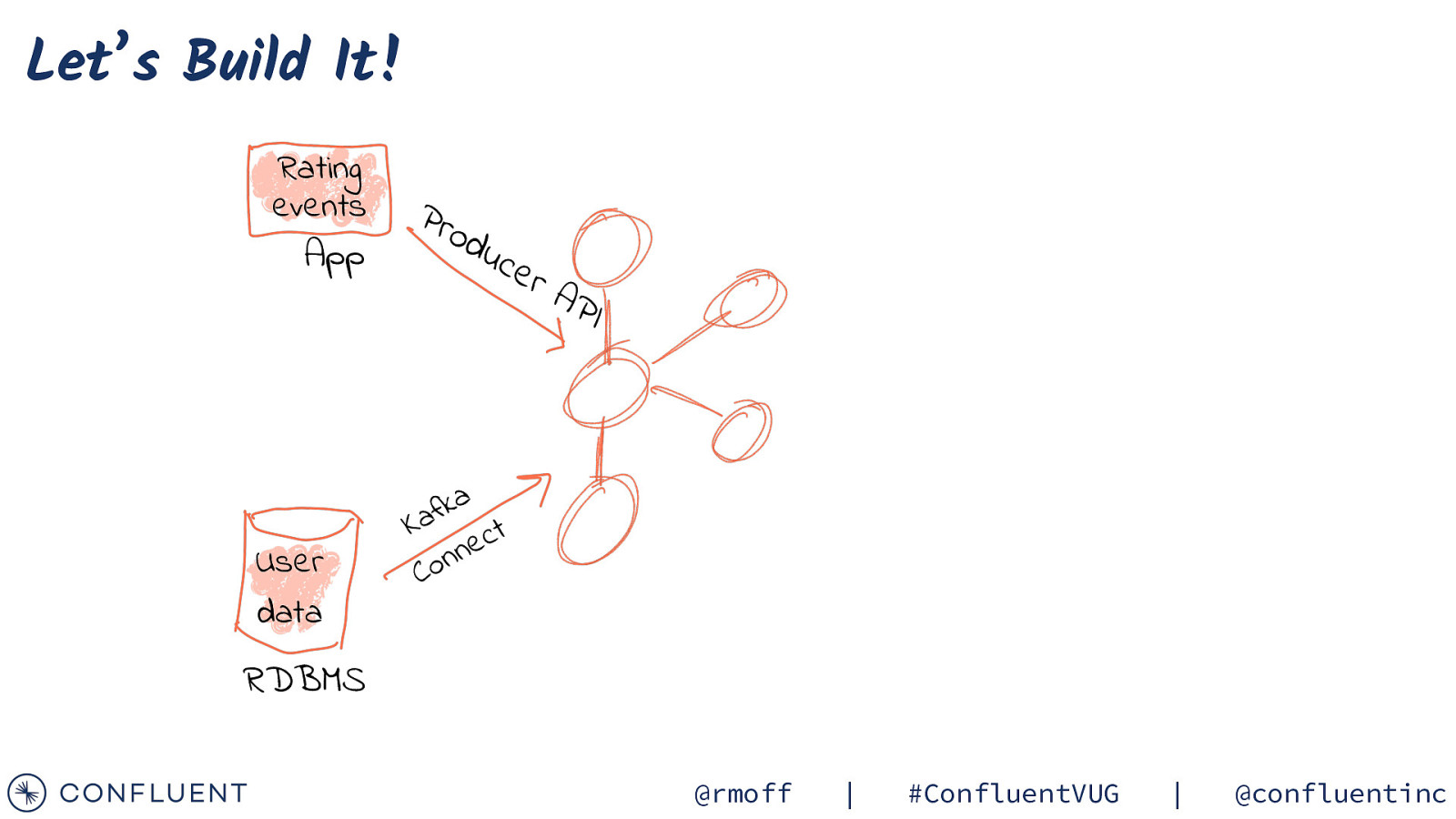

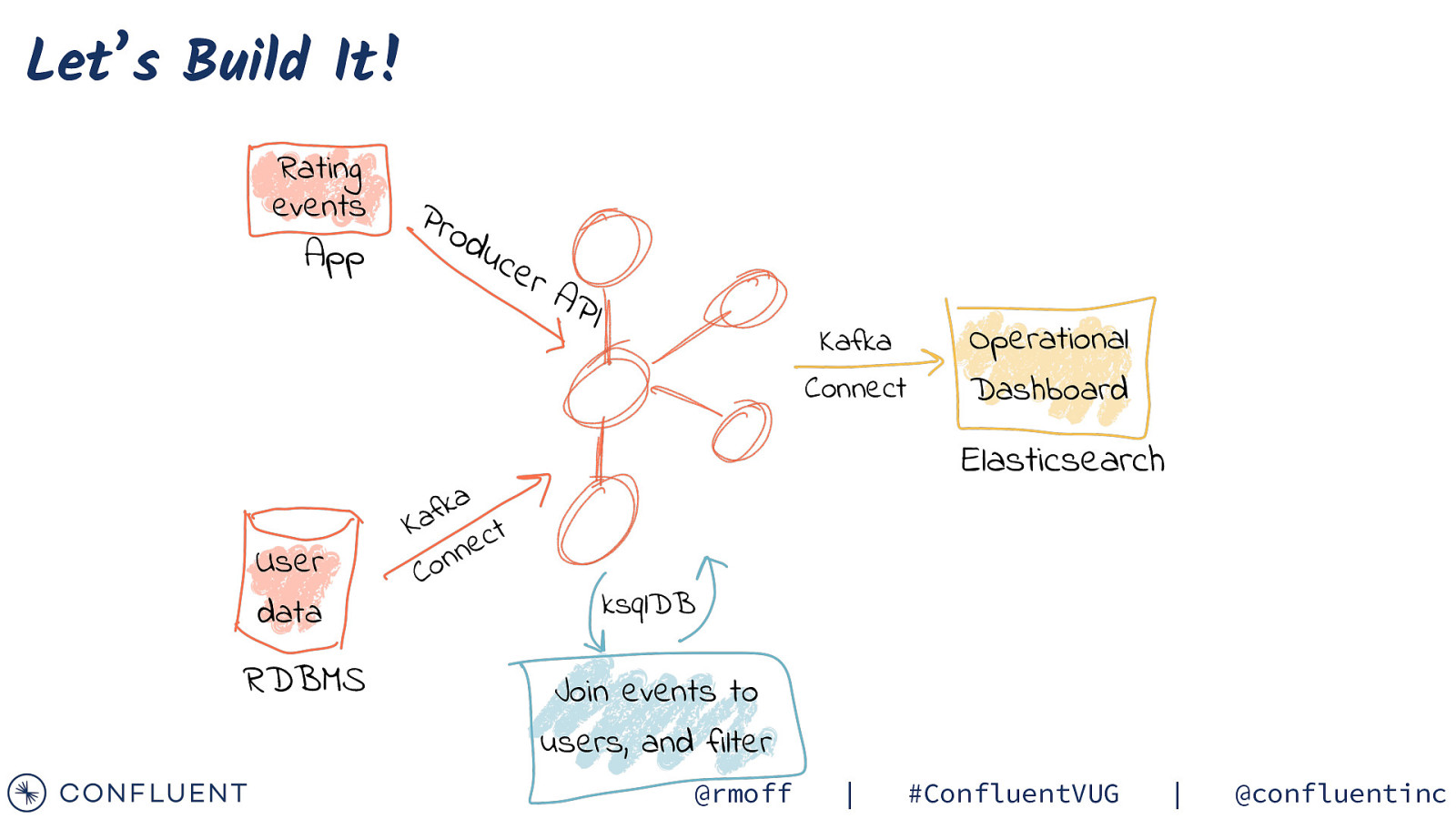

Let’s Build It!

[Diagram: App → Producer API → Rating events; RDBMS → Kafka Connect → User data; ksqlDB: Join events to users, and filter; Kafka Connect → Elasticsearch → Operational Dashboard]

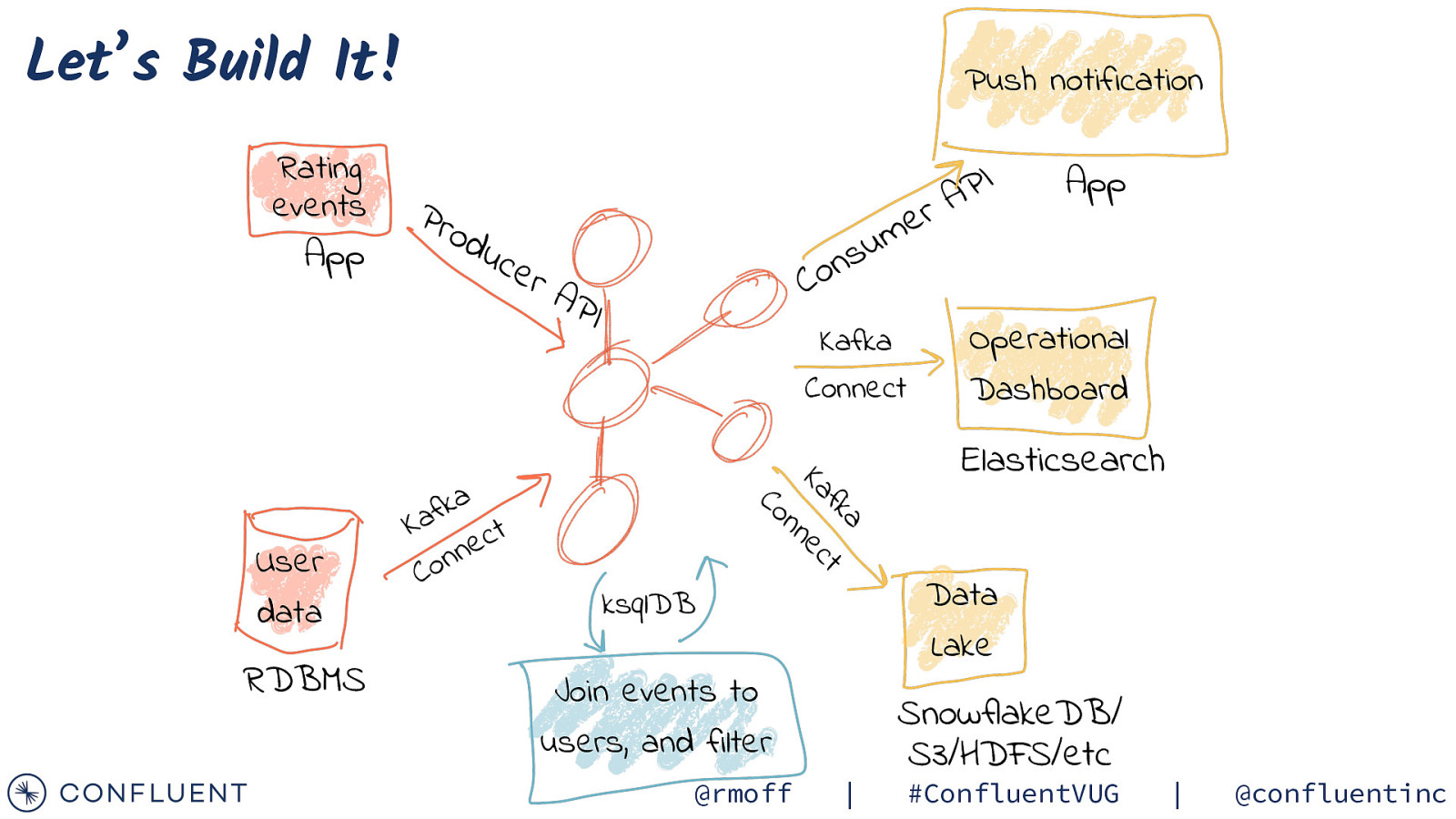

Let’s Build It!

[Diagram: App → Producer API → Rating events; RDBMS → Kafka Connect → User data; ksqlDB: Join events to users, and filter; Kafka Connect → Elasticsearch → Operational Dashboard; Kafka Connect → Data Lake (SnowflakeDB/S3/HDFS/etc); Consumer API → App → Push notification]

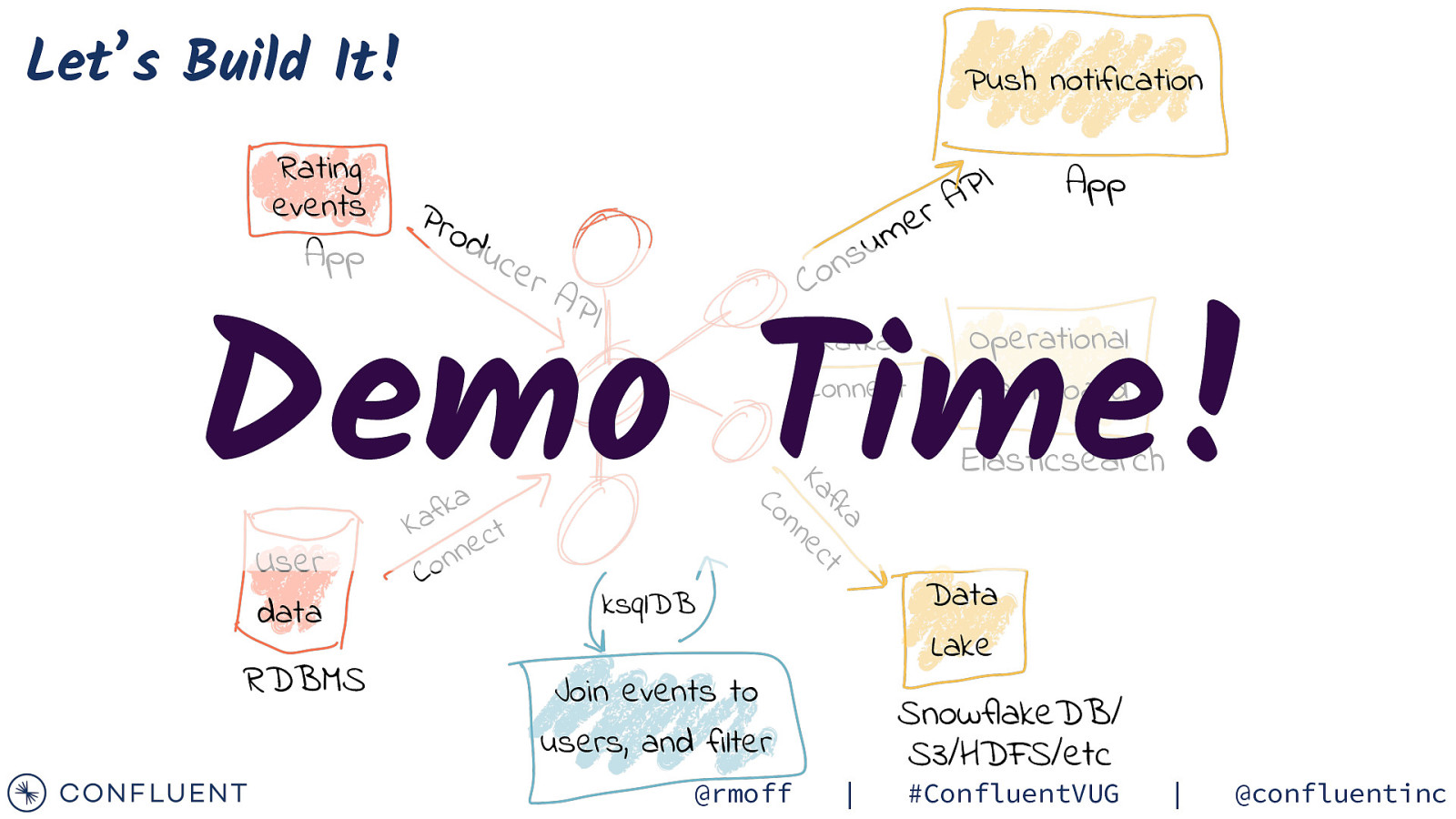

Let’s Build It!

Demo Time!

[Diagram: App → Producer API → Rating events; RDBMS → Kafka Connect → User data; ksqlDB: Join events to users, and filter; Kafka Connect → Elasticsearch → Operational Dashboard; Kafka Connect → Data Lake (SnowflakeDB/S3/HDFS/etc); Consumer API → App → Push notification]

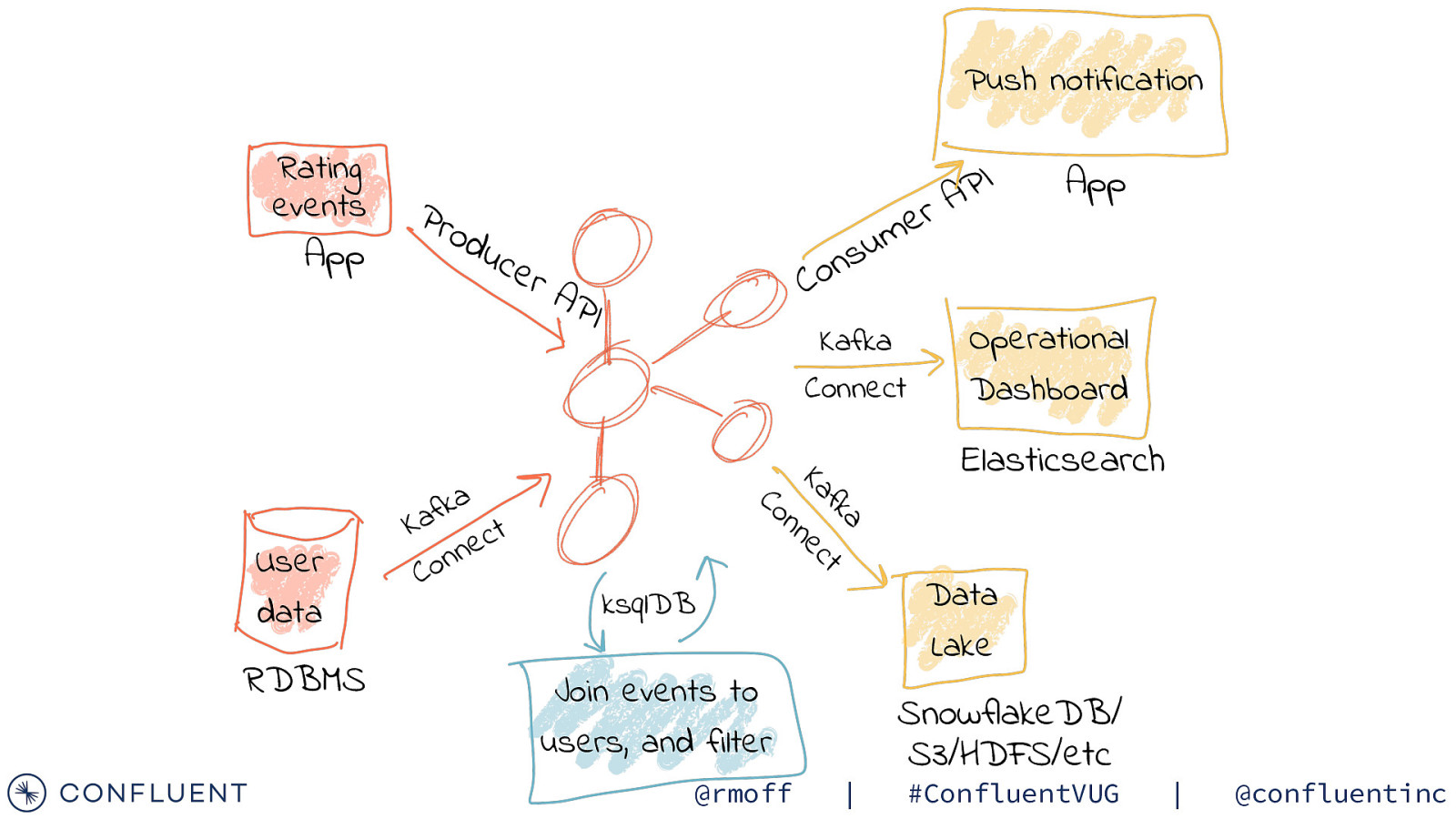

[Diagram: App → Producer API → Rating events; RDBMS → Kafka Connect → User data; ksqlDB: Join events to users, and filter; Kafka Connect → Elasticsearch → Operational Dashboard; Kafka Connect → Data Lake (SnowflakeDB/S3/HDFS/etc); Consumer API → App → Push notification]

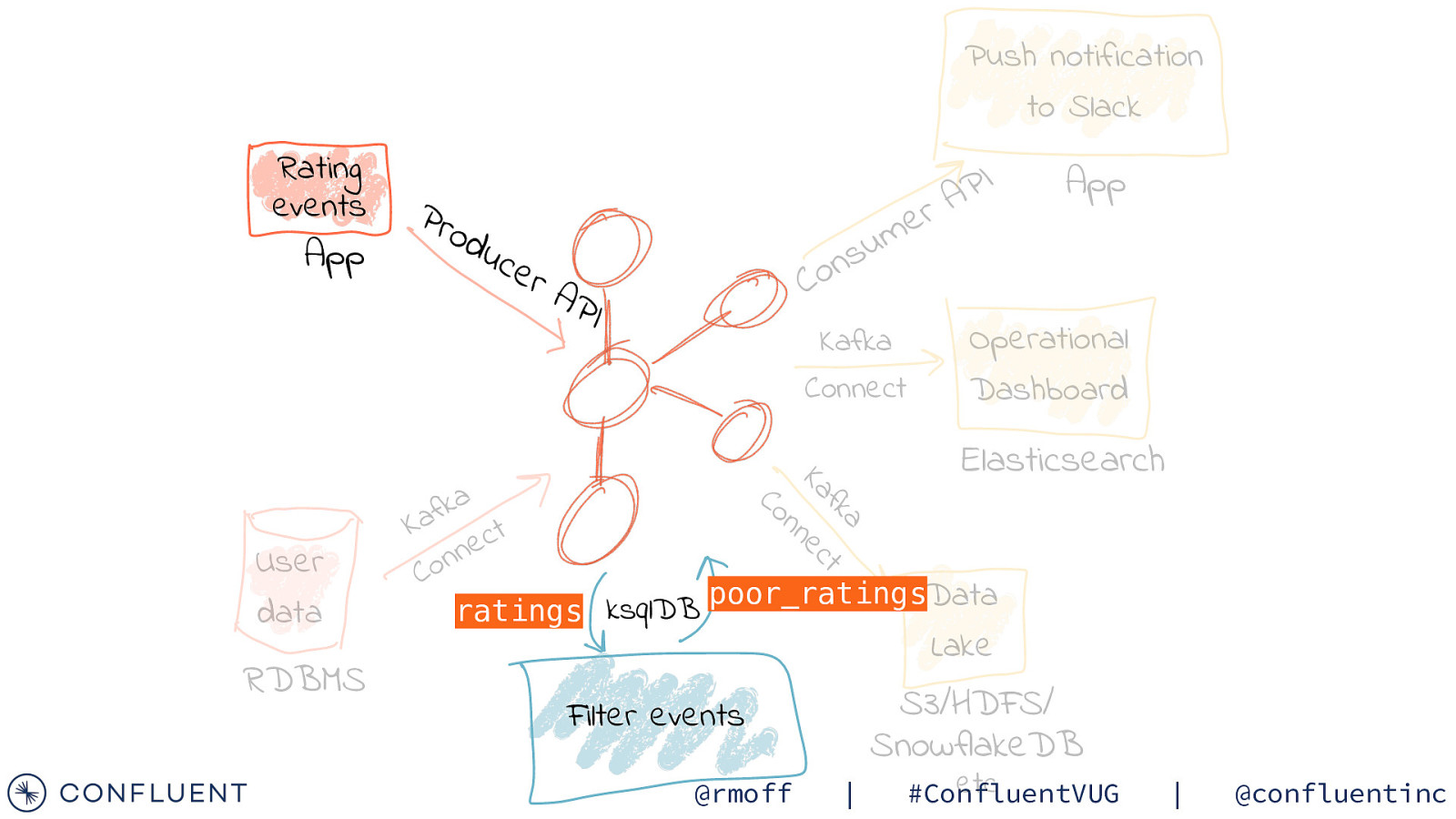

[Diagram: App → Producer API → Rating events (ratings); ksqlDB: Filter events → poor_ratings; RDBMS → Kafka Connect → User data; Kafka Connect → Elasticsearch → Operational Dashboard; Kafka Connect → Data Lake (S3/HDFS/SnowflakeDB etc); Consumer API → App → Push notification to Slack]

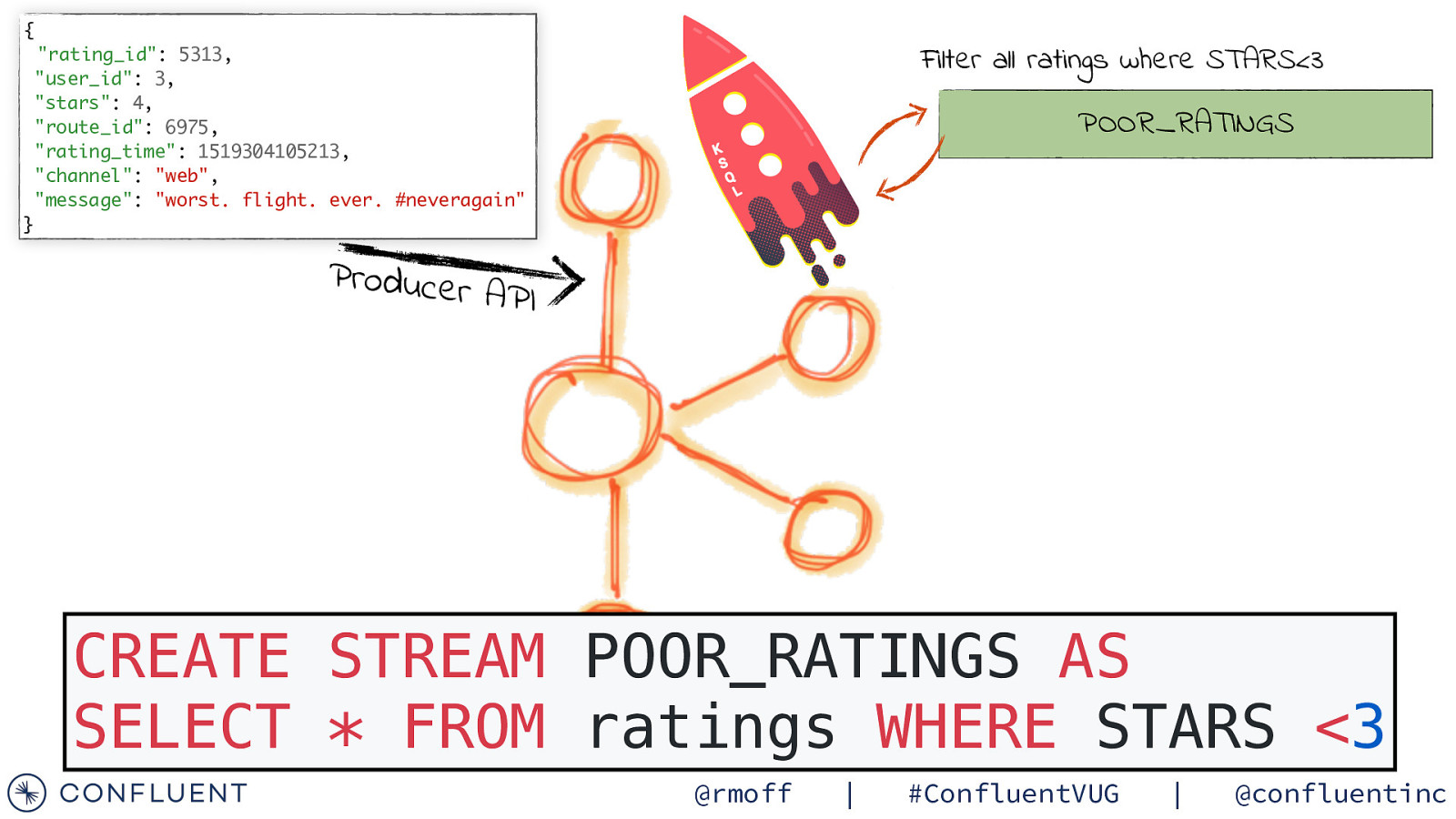

Filter all ratings where STARS<3

[Diagram: Producer API → ratings → POOR_RATINGS]

    {
      "rating_id": 5313,
      "user_id": 3,
      "stars": 4,
      "route_id": 6975,
      "rating_time": 1519304105213,
      "channel": "web",
      "message": "worst. flight. ever. #neveragain"
    }

    CREATE STREAM POOR_RATINGS AS
      SELECT * FROM ratings WHERE STARS <3
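A minimal Python sketch of what the `POOR_RATINGS` filter does: every incoming rating is checked once, and only those with fewer than three stars are passed on. The first event is the sample from the slide (which, with four stars, is not a poor rating); the second is a made-up event added so the filter has something to emit.

```python
def poor_ratings(ratings, max_stars=3):
    """Yield each rating with fewer than max_stars stars, as it arrives."""
    for rating in ratings:
        if rating["stars"] < max_stars:
            yield rating


ratings = [
    # Sample event from the slide.
    {"rating_id": 5313, "user_id": 3, "stars": 4, "channel": "web",
     "message": "worst. flight. ever. #neveragain"},
    # Hypothetical event for illustration.
    {"rating_id": 5314, "user_id": 7, "stars": 1, "channel": "ios",
     "message": "lost my bag"},
]

for rating in poor_ratings(ratings):
    print(rating["rating_id"], rating["stars"])
```

Using a generator mirrors the streaming behaviour: the filter holds no state and emits results one event at a time rather than waiting for the input to end.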

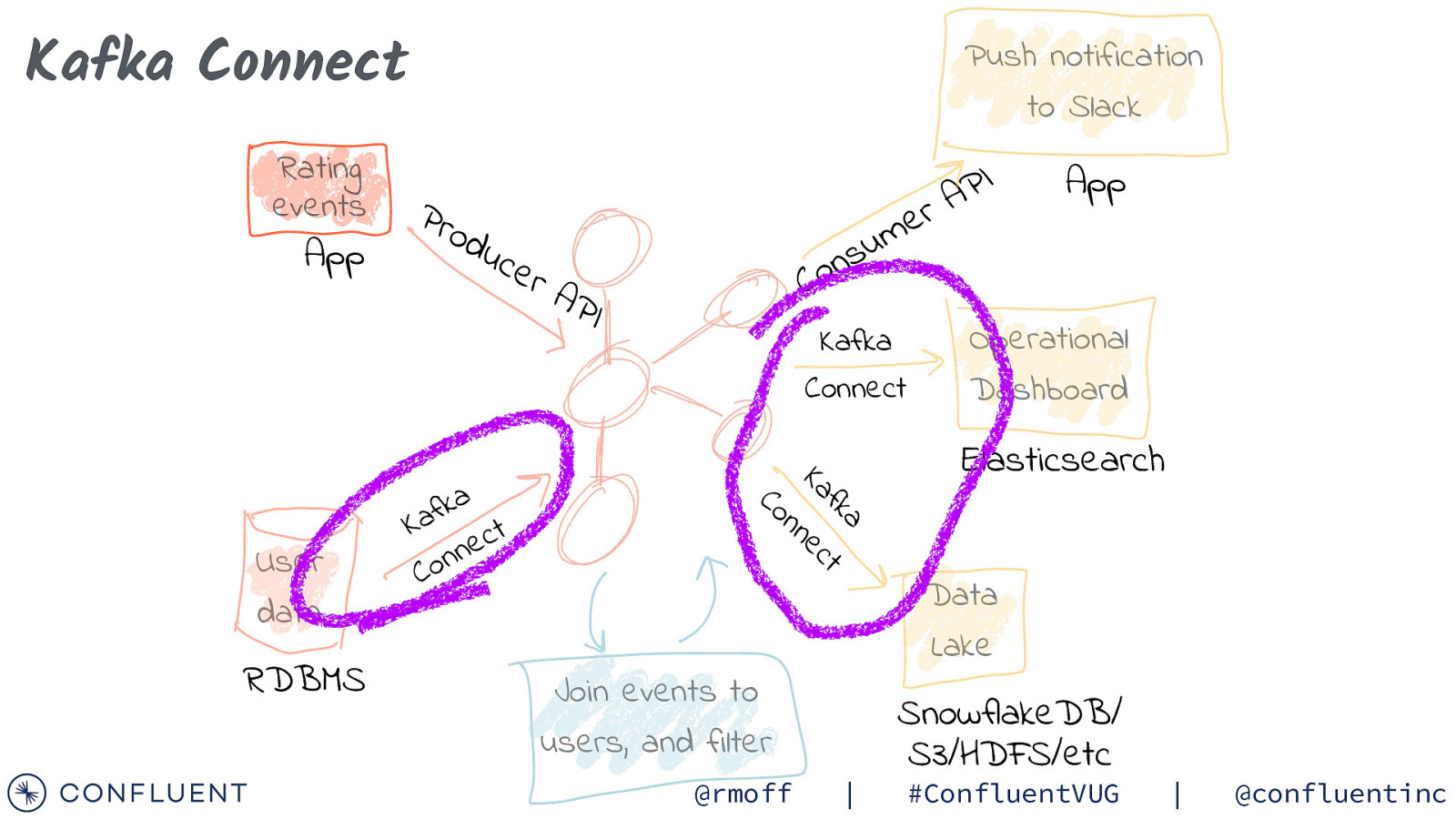

Kafka Connect

[Diagram: App → Producer API → Rating events; RDBMS → Kafka Connect → User data; ksqlDB: Join events to users, and filter; Kafka Connect → Elasticsearch → Operational Dashboard; Kafka Connect → Data Lake (SnowflakeDB/S3/HDFS/etc); Consumer API → App → Push notification to Slack]

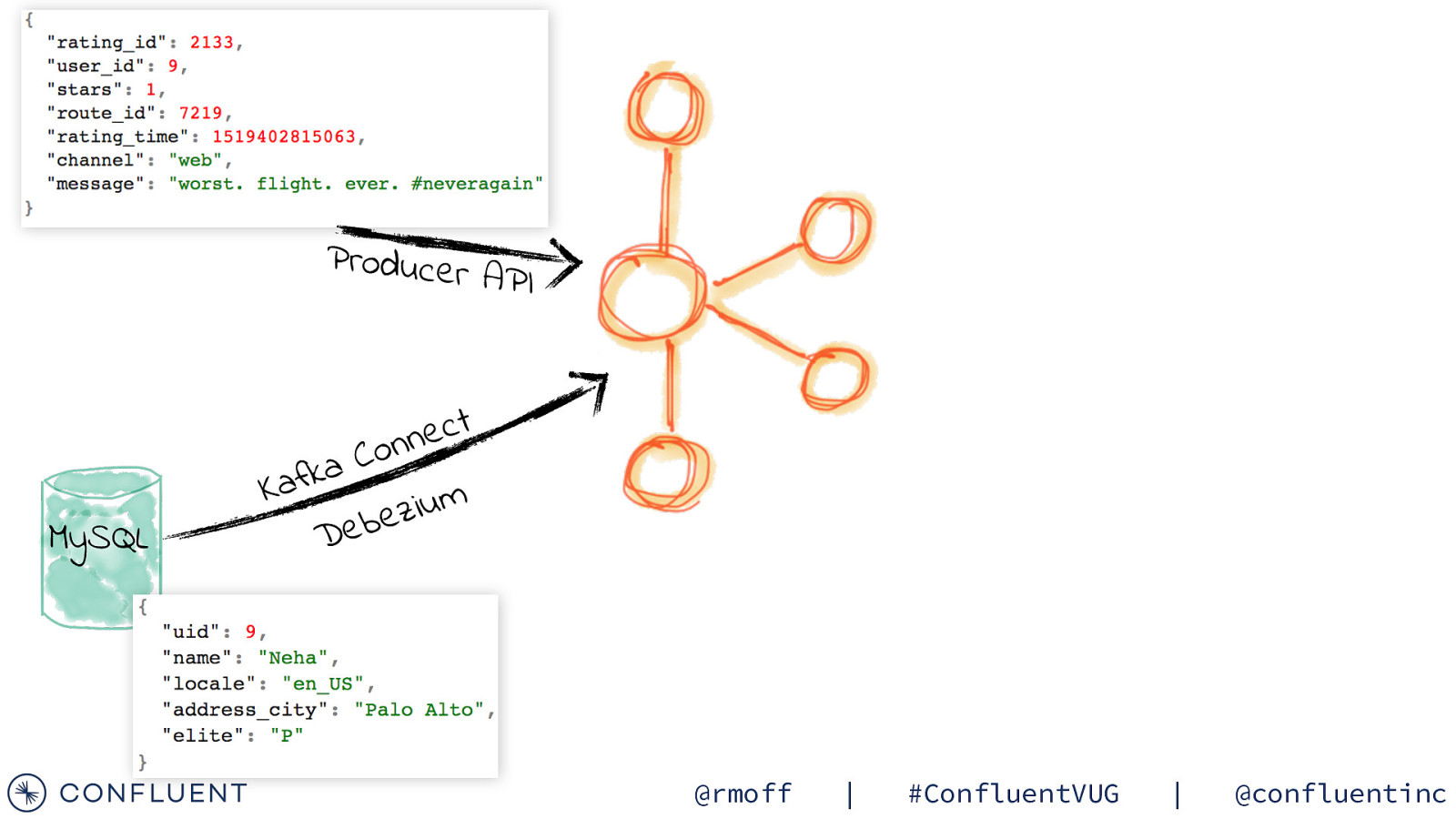

[Diagram: Producer API; MySQL → Kafka Connect (Debezium)]

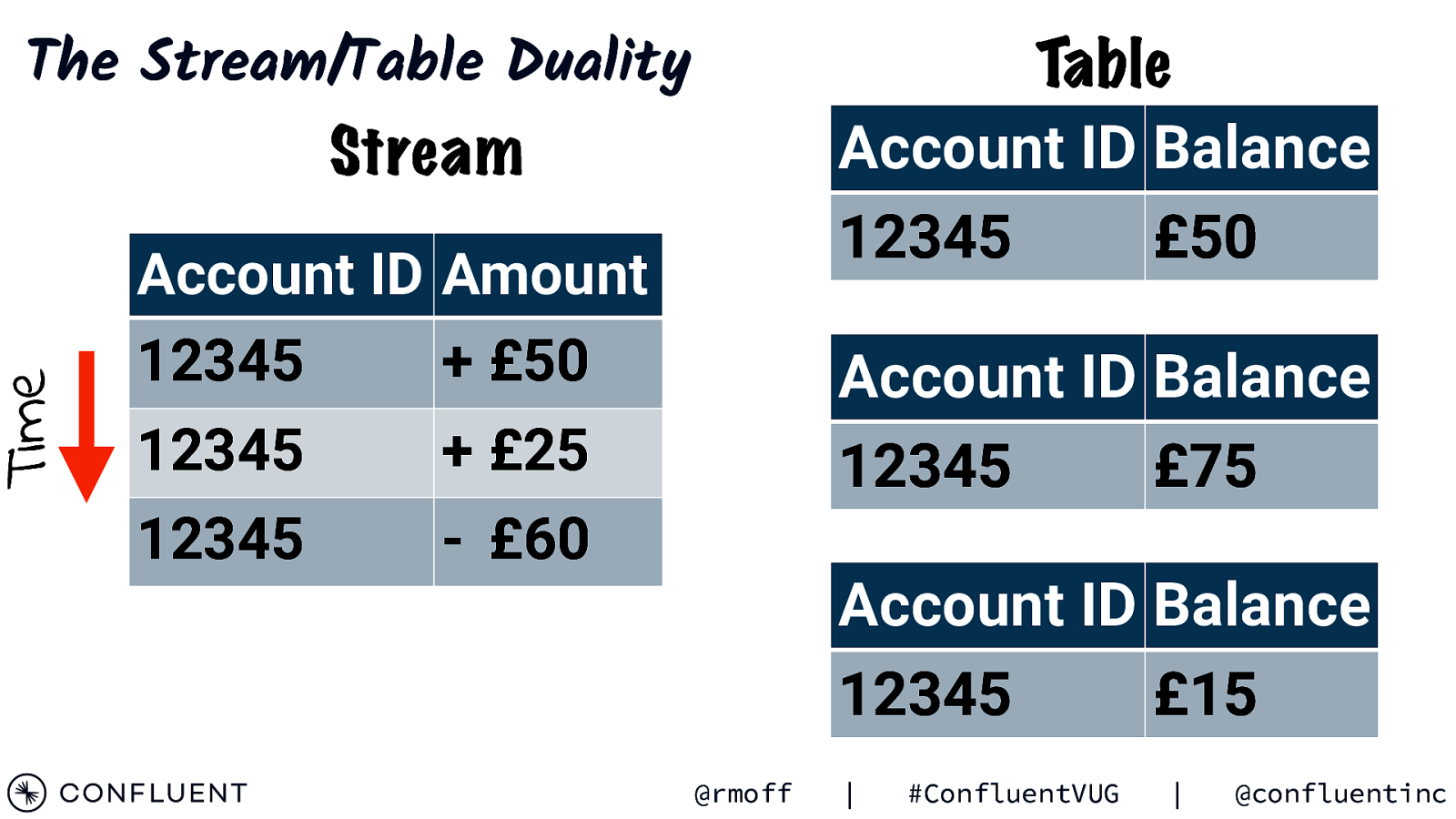

The Stream/Table Duality

Table (state at a point in time):
    Account ID   Balance
    12345        £50

Stream (events over time):
    Account ID   Amount
    12345        + £50
    12345
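One way to read the duality: the table is what you get by replaying the stream up to a chosen moment. The sketch below rebuilds the balance table as of any timestamp from a list of events. Only the first event (+£50 on account 12345) comes from the slide; the timestamps and later amounts are made up, and the stream is assumed to be ordered by time.

```python
def table_at(stream, as_of):
    """Replay (timestamp, account_id, amount) events up to as_of into a table."""
    balances = {}
    for ts, account_id, amount in stream:
        if ts > as_of:
            break  # relies on the stream being time-ordered
        balances[account_id] = balances.get(account_id, 0) + amount
    return balances


stream = [
    (1, "12345", 50),   # from the slide: + £50
    (2, "12345", 25),   # hypothetical
    (3, "12345", -60),  # hypothetical
]

for t in range(4):
    print(t, table_at(stream, t))
```

The stream keeps every change, so the table at any earlier time can always be recomputed; the table alone only holds the latest value.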

"The truth is the log. The database is a cache of a subset of the log."
Pat Helland, Immutability Changes Everything
http://cidrdb.org/cidr2015/Papers/CIDR15_Paper16.pdf

Photo by Bobby Burch on Unsplash

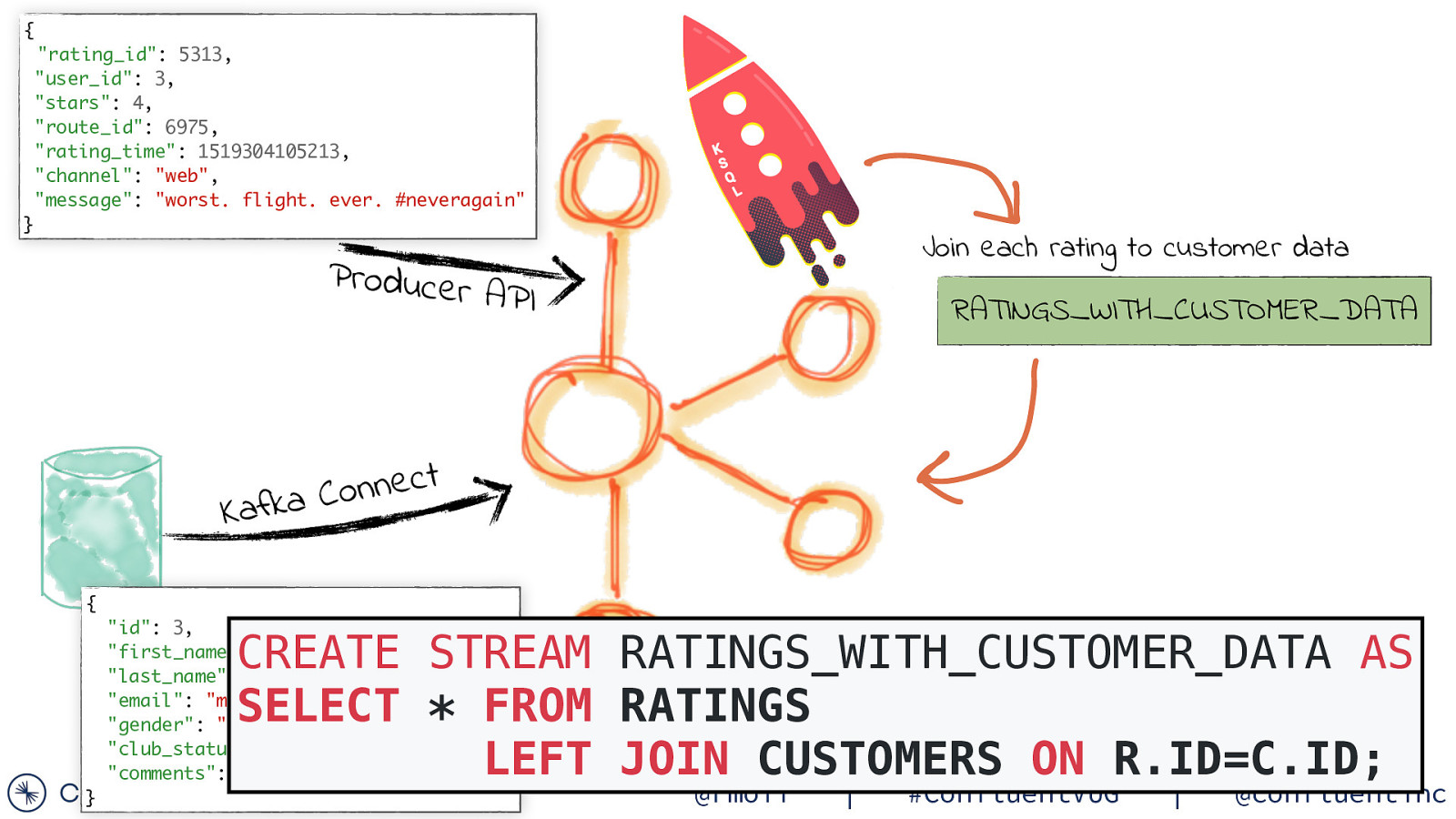

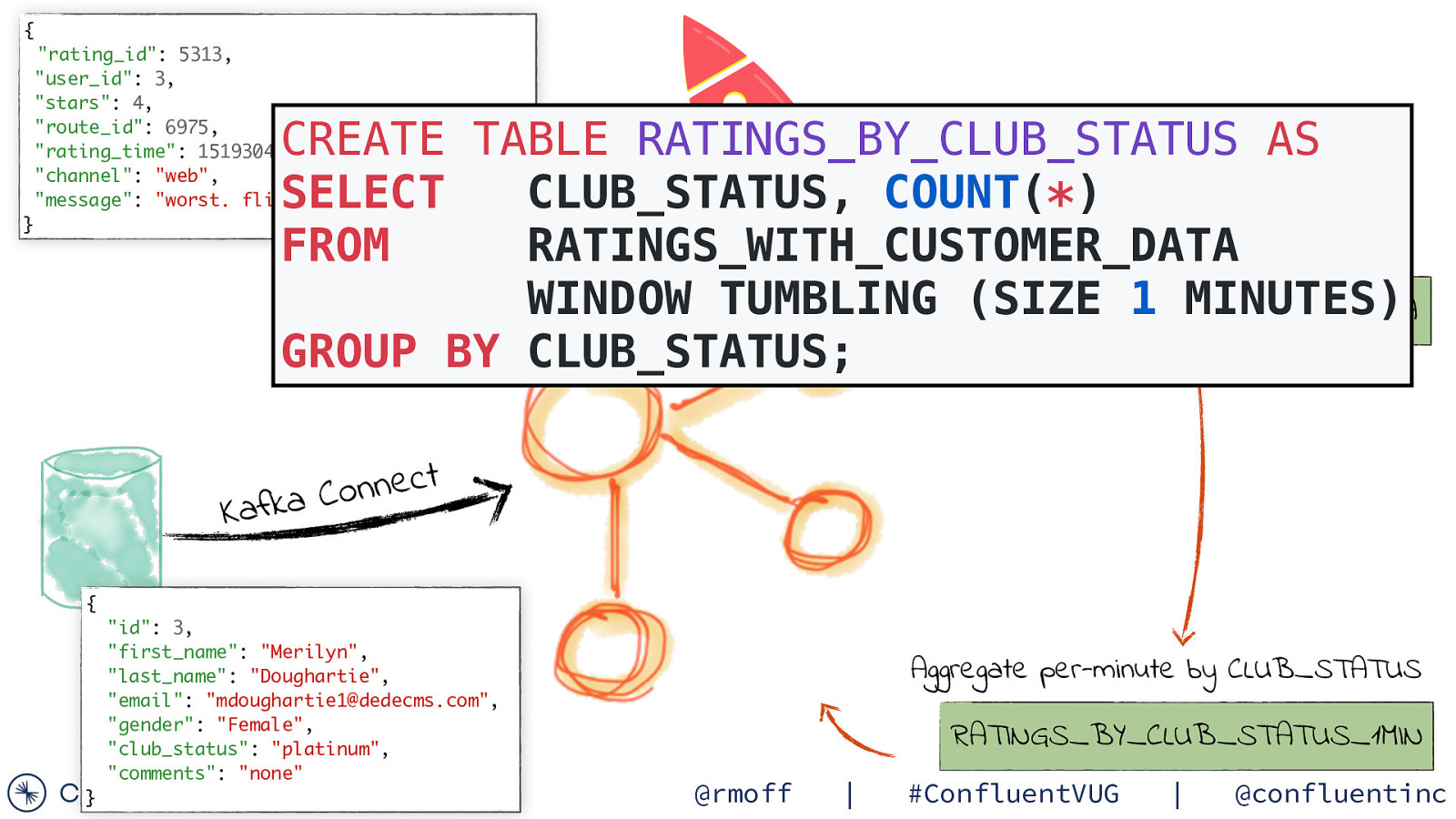

Join each rating to customer data

[Diagram: ratings (Producer API) joined with customers (Kafka Connect) → RATINGS_WITH_CUSTOMER_DATA]

    {
      "rating_id": 5313,
      "user_id": 3,
      "stars": 4,
      "route_id": 6975,
      "rating_time": 1519304105213,
      "channel": "web",
      "message": "worst. flight. ever. #neveragain"
    }

    {
      "id": 3,
      "first_name": "Merilyn",
      "last_name": "Doughartie",
      "email": "mdoughartie1@dedecms.com",
      "gender": "Female",
      "club_status": "platinum",
      "comments": "none"
    }

    CREATE STREAM RATINGS_WITH_CUSTOMER_DATA AS
      SELECT *
      FROM RATINGS R
           LEFT JOIN CUSTOMERS C
           ON R.USER_ID = C.ID;
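The join above can be pictured as a lookup: each rating event is enriched with the current row for its customer, and a rating with no matching customer still passes through (a left join) with empty customer fields. This Python sketch assumes the rating's `user_id` matches the customer's `id`, which is inferred from the sample records; the second rating is made up to show the no-match case.

```python
CUSTOMER_FIELDS = ("first_name", "last_name", "email", "club_status")


def join_ratings_to_customers(ratings, customers):
    """Left-join each rating to the customer table, keyed by customer id."""
    for rating in ratings:
        customer = customers.get(rating["user_id"])
        enriched = dict(rating)
        for field in CUSTOMER_FIELDS:
            enriched[field] = customer.get(field) if customer else None
        yield enriched


# Customer table keyed by id (record from the slide).
customers = {
    3: {"id": 3, "first_name": "Merilyn", "last_name": "Doughartie",
        "email": "mdoughartie1@dedecms.com", "club_status": "platinum"},
}

ratings = [
    {"rating_id": 5313, "user_id": 3, "stars": 4},   # from the slide
    {"rating_id": 5314, "user_id": 99, "stars": 2},  # hypothetical, no such customer
]

for row in join_ratings_to_customers(ratings, customers):
    print(row["rating_id"], row["first_name"], row["club_status"])
```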

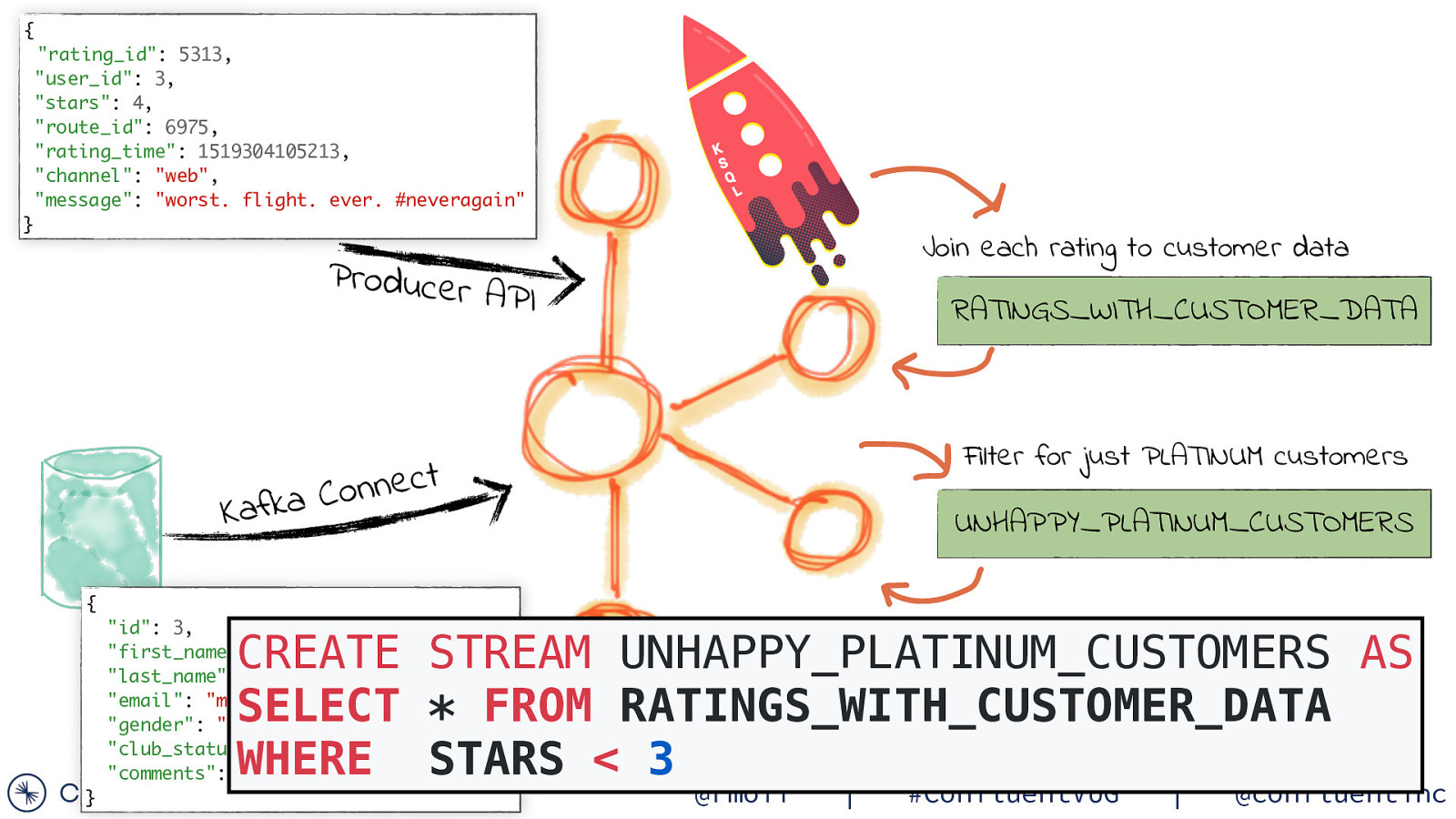

Filter for just PLATINUM customers

[Diagram: ratings (Producer API) joined with customers (Kafka Connect) → RATINGS_WITH_CUSTOMER_DATA → UNHAPPY_PLATINUM_CUSTOMERS]

    CREATE STREAM UNHAPPY_PLATINUM_CUSTOMERS AS
      SELECT *
      FROM RATINGS_WITH_CUSTOMER_DATA
      WHERE STARS < 3
        AND CLUB_STATUS = 'platinum';

Aggregate per-minute by CLUB_STATUS

[Diagram: ratings (Producer API) joined with customers (Kafka Connect) → RATINGS_WITH_CUSTOMER_DATA → RATINGS_BY_CLUB_STATUS_1MIN]

    CREATE TABLE RATINGS_BY_CLUB_STATUS AS
      SELECT CLUB_STATUS, COUNT(*)
      FROM RATINGS_WITH_CUSTOMER_DATA
      WINDOW TUMBLING (SIZE 1 MINUTES)
      GROUP BY CLUB_STATUS;
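A tumbling window chops time into fixed, non-overlapping buckets, and each event is counted in exactly one of them. The sketch below shows the arithmetic for one-minute windows over `rating_time` (epoch milliseconds). The first timestamp is the one from the sample rating; the other events are made up, and late or out-of-order data is ignored here.

```python
from collections import Counter

WINDOW_MS = 60_000  # one minute


def ratings_by_club_status(events, window_ms=WINDOW_MS):
    """Count events per (window start, club_status) using tumbling windows."""
    counts = Counter()
    for event in events:
        # Round the timestamp down to the start of its window.
        window_start = event["rating_time"] - event["rating_time"] % window_ms
        counts[(window_start, event["club_status"])] += 1
    return dict(counts)


events = [
    {"rating_time": 1519304105213, "club_status": "platinum"},  # slide timestamp
    {"rating_time": 1519304130000, "club_status": "platinum"},  # hypothetical
    {"rating_time": 1519304159999, "club_status": "gold"},      # hypothetical
    {"rating_time": 1519304160000, "club_status": "platinum"},  # next window
]

for (window_start, status), count in sorted(ratings_by_club_status(events).items()):
    print(window_start, status, count)
```

The last event falls exactly on a window boundary, so it starts a new bucket rather than being counted with the previous minute.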

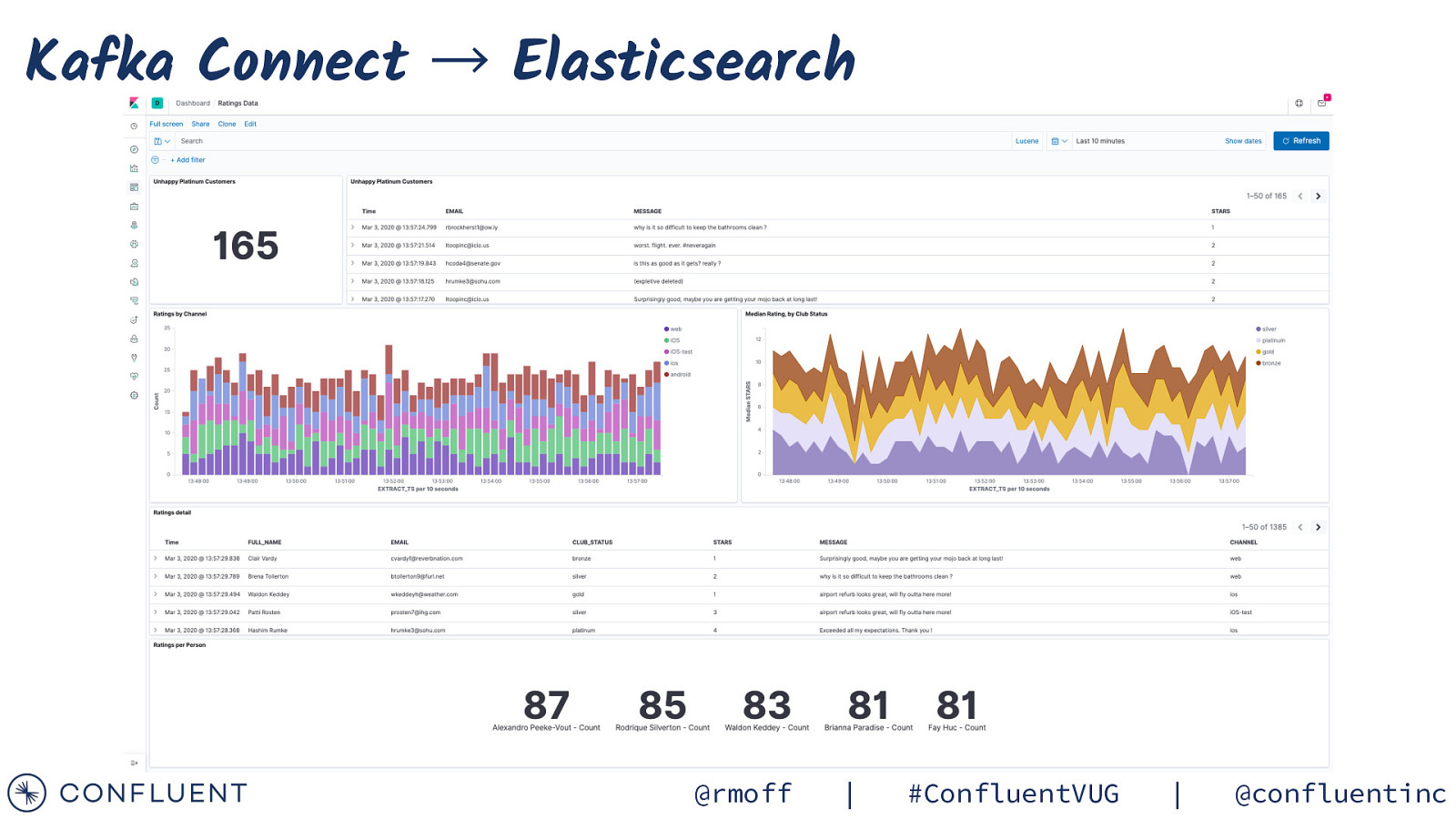

Kafka Connect → Elasticsearch