Accessibility Maturity × Continuous Accessibility

Andrew Hedges, Assistiv Labs A11yNYC, November 19, 2024

A presentation at A11yNYC - Accessibility New York City in November 2024 in New York, NY, USA by Andrew Hedges


If you find it helpful to follow along this way, my slides are available on Notist.

I’ve been building the web professionally since 1998, which coincidentally was the year the Section 508 amendments were passed and 1 year before version 1.0 of the World Wide Web Consortium’s Web Content Accessibility Guidelines was published.

I first learned about Section 508 soon after moving to Washington, DC at the end of 1998, and it struck a chord with me by reinforcing my idealism about the early web, which many of us very earnestly believed was going to democratize access to information.

Fast forward 2½ decades and I’m now a co-founder at Assistiv Labs after an eventful career as a developer and engineering leader at Disney, Apple, & Zapier.

Working at Zapier helped me fall in love with the power of automation.

It’s also where I contributed to an accessibility guild doing grassroots work to improve the accessibility of our core product, and it’s where I met Weston Thayer, the other founder of Assistiv Labs.

I joined Assistiv full-time in the summer of 2022 to work alongside Weston and the team there to fill what we see as gaps in the tooling available for improving accessibility on the web.


In terms of what we’re going to cover tonight, my agenda is pretty simple:

– Talk about accessibility maturity models – Talk about the concept of Continuous Accessibility – Talk about how Continuous Accessibility can contribute to accessibility maturity

But in a larger sense, my agenda centers on advocating for the application of automation to make accessibility testing more efficient.

As I mentioned before, I’m a big believer in automation from my time at Zapier, a company with the mission of automating all the things.

When I was there, I saw Zapier help small- and medium-sized businesses punch above their weight. Their systems enabled small teams to do some pretty impressive things.

At the same time, I’m a firm believer that the gold standard for how we validate whether a system works for humans is to have humans validate the system.

That starts with the actual users of the software, including and especially disabled people, but in the realm of digital accessibility that should almost always include skilled auditors as well.

That said, it’s not enough to get an audit—no matter how thorough or high-quality—on a yearly or even quarterly cadence and rely on that alone to validate whether your product is accessible.

So, I believe both of the following are true:

– One line of JavaScript will not fix 100% of your product’s accessibility problems within 24 hours. I think we can all agree on that. – And, there is a ton of work we can offload to the machines to enable us to make more efficient use of the precious time we humans have to make sure that what we build is as inclusive as possible.

From The Accessibility Operations Guidebook

I bought and have started reading Devon Persing’s book, The Accessibility Operations Guidebook, her guide to making accessibility work more sustainable.

It’s pretty comprehensive and I highly recommend it for anyone looking for ways to approach accessibility more systematically.

In her chapter on organizations, Devon explains that accessibility maturity models help us do the following…

– Identify the organization’s current stage of maturity – Identify gaps between the current stage and the next stage – Develop a plan to move the organization from the current stage to the next stage

Capability Maturity Models in general (of which accessibility maturity models are one of several specialized types) tend to be process-oriented.

And I think process gets a bad rap.

Sure, done poorly, it can inhibit innovation by institutionalizing restrictive practices. But done well, process can increase the repeatability of behaviors that lead to better outcomes.

In the late 20th century, I managed university residence halls. Each year, we’d hire dozens, if not hundreds, of undergrads to serve as resident advisors. These folks tended to be bright and well-intentioned, but came to the role with widely varying experiences and levels of competence with the skills they needed to be effective.

Process is one of the tools we used to ensure a solid baseline of outcomes. For example, if there was a roommate dispute and the RA for the students followed our processes, we could be pretty confident we’d end up with a good outcome. Even if they only mostly followed our processes, we usually got better outcomes than if they’d just winged it.

I’m not rigid about it, but solid processes, in my opinion, are a great starting point.

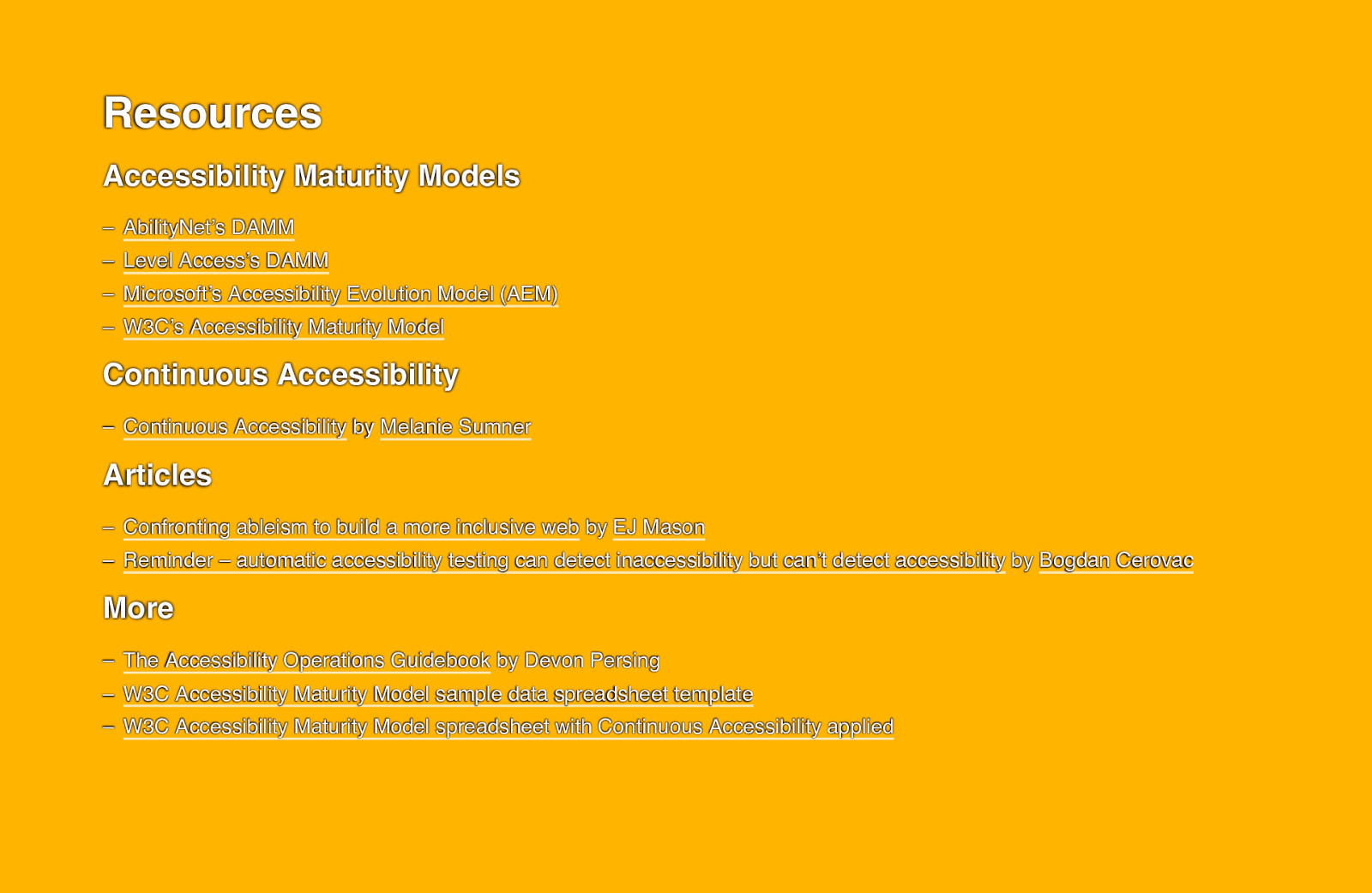

– AbilityNet’s DAMM – Level Access’s DAMM – Microsoft’s Accessibility Evolution Model (AEM) – W3C’s Accessibility Maturity Model

Here are a few of the prominent accessibility maturity models:

– AbilityNet in the UK has their Digital Accessibility Maturity Model, or DAMM (this is the one I have the most direct experience with, from my time at Zapier) – Level Access has a DAMM as well – Microsoft came up with what they call their Accessibility Evolution Model – W3C has a working group that is pretty far along in developing an Accessibility Maturity Model

– Dimensions – Stages – Proof points

The models all tend to be structured similarly. For the most part, they define “dimensions” for which the current “stage” is assessed by collecting “proof points” as evidence.

There are several maturity models devoted to accessibility. They all have pluses and minuses, unsurprisingly. For the purposes of this talk, I’m going to focus on the W3C’s model.

The W3C’s maturity model outlines the following 7 dimensions:

– Communications – Knowledge and Skills – Support – ICT (Information & Communication Technology) Development Life Cycle – Personnel – Procurement – Culture

So, you can see, it covers more than just technology. That’s true of all of the models: they include broader issues around how the whole organization approaches accessibility.

In my analysis, the proof points related to Continuous Accessibility—which we’ll talk about next—all fall under the Information & Communication Technology Development Life Cycle dimension.

The W3C model defines the following stages of maturity:

– Inactive – No awareness and recognition of need. – Launch – Recognized need organization-wide. Planning initiated, but activities not well organized. – Integrate – Roadmap in place, overall organizational approach defined and well organized. – Optimize – Incorporated into the whole organization, consistently evaluated, and actions taken on assessment outcomes.

Across the various dimensions, your organization (hopefully) will progress through these 4 stages.

Something to note is that, according to people more expert than me in the topic, you can’t skip ahead. You must go from Inactive to Launch to Integrate to Optimize in that order. Each stage sort of sets the table for the next.

– Dimension: ICT Development Lifecycle – Category: User Research – Proof point: User research includes disabilities

Let’s talk through a specific example. Under the ICT Development Lifecycle dimension, the W3C model includes a category called “User Research” for which one of the proof points is that “user research includes disabilities.”

If your organization does not consider disability at all in their user research, that’s unfortunate, but no judgement, ya gotta start somewhere. In that case, you’d rate this proof point as “no activity.”

If you’ve made it as far as making a plan to include people with disabilities in future research, you might rate this proof point as “started.”

If you usually, but inconsistently, consider some subset of possible disabilities in your research (for example, screen reader users only), you might rate this one “partially implemented.”

And if you’re consistently considering a broad range of disabilities in your user research, you can mark this proof point as “complete.”

To sum up, the point of the maturity models is to give us a framework we can use to better understand whether we’re getting better or worse at accessibility.

Let’s shift gears and talk about Continuous Accessibility.

– Have greater confidence in the quality of your code – More easily deliver accessible experiences at scale – Responsibly reduce your organization’s risk

From ContinuousAccessibility.com

Continuous Accessibility is an approach—widely advocated for by Melanie Sumner—for making the most of automation as a way to accomplish the following:

– Having greater confidence in the quality of our code – More easily delivering accessible experiences at scale – Responsibly reducing our organizations’ risk around accessibility

One of the ways Melanie says we can achieve these outcomes is by having the “computers do the repetitive tasks so that humans can solve the hard problems.”

Before we continue, let’s go on a little side quest and talk about the technologies that enable all this continuousness.

Continuous Integration / Continuous Deployment (usually referred to as “CI/CD”) has accelerated modern software development by enabling the automation of processes that used to be manual.

For real, back in the bad old days (like, 15 years ago) we used to push changes to a staging server, manually QA them, then FTP the files to a production server.

Not gonna lie, it was terrifying.

Continuous Integration (CI) refers to systems that bundle software into known, working versions.

On the web, Continuous Deployment (CD) is what updates your servers to use the new version of the software.

So, again, CI creates the new version of your software. CD deploys it to production.

The “C” in CI and CD stands for “continuous,” which here signals that these are automated processes that run on every change. In other words, there is software doing the work of bundling up the new version of the application and deploying it to a production environment.

CI/CD enables a bunch of things, including using packages to isolate pieces of the overall system and deploying to production with high confidence. That confidence comes specifically from the process being automated rather than relying on a systems administrator to remember the specific sequence for updating the application and persistence layers of the site.

And, yes, in fact, I have personally seen the manual way of doing this go sideways multiple times in my career, sometimes in spectacular fashion. Thanks for asking.

CI/CD is also what enables us to run automated tests against our software prior to deployment.

The earliest of these tests were limited to ensuring the correct inputs and outputs for parts of the system.

But eventually, tooling appeared that could test the UI as well.

The problem was that the vast majority of these tools were built in a culture dominated by ableism, so they didn’t (and in some cases still don’t) take disability into account.

EJ Mason wrote an amazing article on the Assistiv Labs site about how ableism becomes the default for our work unless we actively swim against the dominant culture’s tide.

In that article, EJ argues that our tools in many cases reinforce ableism.

But how exactly?

By assuming all users are using a mouse, or by implementing simulated keyboard events instead of faithfully emulating user input, as just 2 examples.

Thankfully, automation-friendly tools specifically designed to address accessibility have emerged over the last several years, including axe-core by Deque and Site Scanner from Evinced, among others.

In a recent article reminding us why we can’t just have nice things, Bogdan Cerovac cautions us to be aware of the limitations of the tools we use to validate accessibility.

Specifically, he contends that “automatic accessibility testing can detect inaccessibility but can’t detect accessibility.”

I agree with that. In fact, I really like that he uses the word automatic instead of automated.

There are automated tools that can take design intent into account, which automatic tools cannot do.

As an industry, we put a lot of faith into automatic tools including scanners and linters that apply rulesets to websites to report on accessibility errors.

This is helpful to an extent, but we need to understand what the tools can and can’t do.

The primary thing scanners and linters can’t do that humans can is understand the intent behind a design. In a specific UI flow, what’s the path through it for an assistive technology user to accomplish the task? Irrespective of any WCAG failures, how good is the experience of attempting to accomplish the task using, for example, a screen reader or voice control?
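To make Bogdan’s distinction concrete, here is a minimal sketch of the kind of rule an automatic checker applies. It uses a deliberately simplified element model and a hypothetical `checkImageAlt` function (the `image-alt` rule ID is illustrative), not any real tool’s API. The rule reliably flags an image with no alt text at all, but an image with `alt="image"` passes, even though that text tells a screen reader user nothing. The tool detects inaccessibility; it can’t confirm accessibility.

```typescript
// Deliberately simplified element model (hypothetical). Real tools such as
// axe-core inspect the live DOM and accessibility tree instead.
interface SimpleElement {
  tag: string;
  attrs: Record<string, string>;
}

interface Finding {
  rule: string;
  element: SimpleElement;
}

// Hypothetical rule: every <img> needs an alt attribute (alt="" is allowed
// for decorative images). It checks presence, not meaning.
function checkImageAlt(elements: SimpleElement[]): Finding[] {
  return elements
    .filter((el) => el.tag === "img" && !("alt" in el.attrs))
    .map((el) => ({ rule: "image-alt", element: el }));
}

const page: SimpleElement[] = [
  { tag: "img", attrs: { src: "chart.png" } }, // no alt: flagged
  { tag: "img", attrs: { src: "logo.png", alt: "image" } }, // useless alt: passes
  { tag: "img", attrs: { src: "q3-revenue.png", alt: "Q3 revenue rose 12%" } },
];

const findings = checkImageAlt(page);
console.log(findings.map((f) => f.element.attrs.src)); // [ 'chart.png' ]
```

Judging whether “image” is an adequate description, or whether the chart even needs one, is the part that still takes a human.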

End-to-end tests (sometimes called “E2E” tests) are another category of automatable tests. They validate flows through a user interface (for example, logging in or creating a project or task). This is a way of encoding human judgment about the design into a test that can be automated.
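As a rough illustration of what “encoding human judgment” can mean, the sketch below expresses a login flow as an ordered list of controls (by role and accessible name) that a keyboard user must reach, then checks it against a simplified page snapshot where array order stands in for tab order. Everything here (`AxNode`, `FlowStep`, `checkKeyboardFlow`) is hypothetical and far simpler than a real E2E framework, which would drive an actual browser and assistive technology.

```typescript
// Hypothetical, simplified page snapshot. Array order stands in for keyboard
// tab order; a real E2E framework would drive an actual browser instead.
interface AxNode {
  role: string;
  name: string; // accessible name
  focusable: boolean;
}

// One step of a task: the control the user has to reach next.
interface FlowStep {
  role: string;
  name: string;
}

function indexOfNode(page: AxNode[], step: FlowStep): number {
  for (let i = 0; i < page.length; i++) {
    if (page[i].role === step.role && page[i].name === step.name) return i;
  }
  return -1;
}

// Returns a list of problems; an empty list means every step can be reached,
// in order, by tabbing forward through the page.
function checkKeyboardFlow(page: AxNode[], flow: FlowStep[]): string[] {
  const problems: string[] = [];
  let lastIndex = -1;
  for (const step of flow) {
    const index = indexOfNode(page, step);
    if (index === -1) {
      problems.push(`no ${step.role} named "${step.name}"`);
      continue;
    }
    if (!page[index].focusable) {
      problems.push(`${step.role} "${step.name}" is not keyboard focusable`);
    }
    if (index < lastIndex) {
      problems.push(`${step.role} "${step.name}" comes before the previous step in tab order`);
    }
    lastIndex = Math.max(lastIndex, index);
  }
  return problems;
}

const loginPage: AxNode[] = [
  { role: "textbox", name: "Email", focusable: true },
  { role: "textbox", name: "Password", focusable: true },
  // e.g. a <div role="button"> with no tabindex: named, but unreachable by Tab
  { role: "button", name: "Log in", focusable: false },
];

// The human judgment: which controls, in which order, complete the task.
const loginFlow: FlowStep[] = [
  { role: "textbox", name: "Email" },
  { role: "textbox", name: "Password" },
  { role: "button", name: "Log in" },
];

const problems = checkKeyboardFlow(loginPage, loginFlow);
console.log(problems);
```

Here every control has an accessible name, so a rule-based scan of names alone could pass this page, but the flow check reports that the “Log in” button can’t be reached by keyboard.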

Unfortunately, most E2E testing frameworks suffer from the ableist traits I mentioned earlier and offer only limited support for accessibility out of the box.

I will refrain here from making a product pitch except to say that at Assistiv Labs we’ve created an end-to-end testing framework built to treat accessibility as a first-class concern, so I know it can be done!

– Manual QA – Periodic audits

Before we continue, I want to acknowledge that manual QA and periodic, human audits are both valuable tools essential to ensuring the accessibility of your digital products.

But they’re not what we’re talking about tonight. Tonight, we’re talking about what can be responsibly offloaded to the machines.

– Attention decay

Next, something I call attention decay. Attention decay is the idea that the sooner an engineer finds out that a change they made broke something, the more likely they are to fix it.

Say you’re a developer and you find out something you shipped 6 months ago is broken. You’ve moved on. Users have been living with the bug for months. At best, you’ll probably add the bug to the backlog and maybe ship a fix at some point in the future.

But imagine you find out you shipped a bug earlier that day. The context of the change is still fresh in your mind and you have the opportunity to fix the bug before it affects very many end users. You’re likely to jump on it and ship a fix right away.

This dynamic actually applies to any type of bug and helps explain why tests in CI/CD are so popular and valuable: they catch problems before they impact your users.

Automatic

– Linters – Scanners – Flow-based tests with axe integrated

Automated

– Accessibility-first end-to-end tests

Let’s talk next about the types of automatable tools available. In the automatic category, we have…

– Linters such as axe Accessibility Linter by Deque – Scanners including Evinced and WAVE Testing Engine – Flow-based tests with axe integrated, as offered by Cypress and Deque

Additionally, automated tools are starting to emerge that extend what we can expect of the machines, including…

– Accessibility-first end-to-end tests like what we write at Assistiv Labs

Both automatic and automated tools are useful as long as you understand the differences and how they apply to your use case.

Caveats aside, automated solutions for validating the accessibility of digital products can contribute significantly to improving our accessibility maturity.

Earlier, I mentioned Devon Persing’s book, The Accessibility Operations Guidebook.

On the website for Devon’s book, she links to a handy spreadsheet for calculating scores for the different dimensions of the W3C accessibility maturity model.

We’ll use that to explore the difference automated tools can make.

Again, we’ll use the W3C’s model, not because it’s objectively the best, but because, by virtue of originating from a standards body, it’s the one that is the least proprietary.

As I covered earlier, the W3C model defines 7 dimensions and dozens of individual proof points. Let’s narrow that list down to what’s relevant to Continuous Accessibility.

Relevant proof points for our purposes fall under the ICT Development Lifecycle dimension and include the following:

Development

– Developer’s accessibility checklists – Consistent approach to implementing accessibility features across products

Quality Review Through Release

– Consistent approach to testing and releasing products – Testing process includes automated accessibility testing – Accessibility identified as product release gate

Next, let’s talk about how Continuous Accessibility applies to each of the proof points.

Before we go on, I just want to explain my methodology.

First, I created a copy of Devon’s spreadsheet.

I then duplicated the ICT Dev Lifecycle tab to create a version with all of the proof points reset to “no activity” so I could isolate the effect of implementing Continuous Accessibility.

Next, for each of our relevant proof points, I set the evidence dropdown for each stage to the minimum state that must be true if Continuous Accessibility is applied.

– Developer’s accessibility checklists

You can think of linters as a kind of checklist of heuristics that are automatically applied to code within the context of an Integrated Development Environment (or IDE) such as VS Code.

With a linter installed, a developer will see warnings right where they’re coding about certain types of accessibility issues.

Ideally, these same heuristics will be enforced within CI/CD. In other words, if you use axe DevTools Linter, it probably makes sense to use axe in your CI/CD as well to make sure you’re getting consistent results.

– Developer’s accessibility checklists

Partially implemented

There are other, more manual checklists you can and should implement that cover areas that axe and similar tools can’t.

For example, you may want your team to evaluate new UI using Microsoft Accessibility Insights’ self-guided audit feature, to encourage applying human judgment to things like tab order.

So, while linters partially satisfy this proof point, they’re not enough by themselves to get us to 100%. But, “partially implemented” across all 4 stages is good for 8 points. I’ll take it!

The next proof point under Development is “Consistent approach to implementing accessibility features across products.”

Automated tools can validate code before it’s released, so they can be used to enforce a consistent baseline of functionality across a company’s products.

But, meeting this proof point should really involve shifting well left of the development phase into UX research and UI design.

– Consistent approach to implementing accessibility features across products

Partially implemented

So, again, Continuous Accessibility gets us part of the way there, but not to 100% complete. That’s another 8 points. For those scoring at home, we’re up to 16 out of 300 possible points.

– Consistent approach to testing and releasing products

The first proof point under Quality Review Through Release is “Consistent approach to testing and releasing products.”

Implementing Continuous Accessibility tooling should be considered essential, but not sufficient for meeting this proof point because, while automated testing can help us find certain classes of bugs basically in real time, manual validation by humans is still necessary for areas the machines can’t test or where they don’t give us 100% confidence.

– Consistent approach to testing and releasing products

Partially implemented

Another example where Continuous Accessibility partially satisfies the proof point. So, 24 out of 300.

Before I continue, I want to go a little deeper on this last proof point.

Cam Cundiff of Asana first turned me on to the analogy of layers of testing being like a stack of Swiss cheese slices.

Any one slice has holes that let light, water, or whatever else leak through. But by stacking the slices, you put at least some cheese between the top and bottom of the stack.

I bring this up because, unlike the other proof points, “consistent approach to testing and releasing products” is not explicitly tied to accessibility.

That’s because you need to test for accessibility whether or not the change being made was related to accessibility.

It happens all the time that “unrelated” changes end up breaking things for accessibility.

And that’s why Continuous Accessibility is the only realistic way to stay on top of the usability of a complex, rapidly changing application.

Any mature software organization is already going to have other types of testing in place in CI/CD. Adding testing specific to accessibility is like adding another layer of cheese to the stack.
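The Swiss cheese analogy has a simple arithmetic form. In this sketch, each testing layer is described by the fraction of accessibility bugs it misses, and, assuming the layers miss bugs independently, a bug escapes only if it slips through every layer, so the escape probability is the product of the miss rates. The miss rates below are invented for illustration, not measurements, and `escapeProbability` is a hypothetical helper.

```typescript
// Each layer is described by the share of accessibility bugs it misses.
// These rates are invented for illustration; they are not measurements.
interface TestLayer {
  name: string;
  missRate: number; // 0 = catches everything, 1 = catches nothing
}

// Assumes layers miss bugs independently: a bug escapes only if it slips
// through every layer, so the escape chance is the product of miss rates.
function escapeProbability(layers: TestLayer[]): number {
  return layers.reduce((p, layer) => p * layer.missRate, 1);
}

const existingLayers: TestLayer[] = [
  { name: "unit tests", missRate: 0.9 },
  { name: "E2E tests", missRate: 0.8 },
  { name: "manual QA", missRate: 0.5 },
];

const withAccessibilityLayer: TestLayer[] = [
  ...existingLayers,
  { name: "automated accessibility tests", missRate: 0.6 },
];

console.log(escapeProbability(existingLayers).toFixed(3)); // 0.360
console.log(escapeProbability(withAccessibilityLayer).toFixed(3)); // 0.216
```

In practice the holes often line up (for example, when no layer exercises a screen reader at all), so independence is an optimistic assumption. The useful takeaway is only the direction: any added layer that catches some bugs the others miss lowers the escape rate.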

Continuing with the Quality Review Through Release category, we have the proof point: Testing process includes automated accessibility testing.

– Testing process includes automated accessibility testing

Complete

This proof point is worded as a binary, so we’ll say that Continuous Accessibility satisfies it, but I wouldn’t be surprised to see some nuance added to it in future iterations of the model as the tools get more mature.

For now, we’ll bank a sweet 12 points, bringing our total to 36 so far.

The next proof point under Quality Review Through Release is: Accessibility identified as product release gate.

In case that term is unfamiliar, in software, a product release gate is anything in the process that might detect a problem severe enough to stop the line and prevent the software from going out.

Product release gates can be either manual (such as a QA person finding a bug) or automated.

Some of our customers at Assistiv Labs are very sophisticated in how they approach digital accessibility.

And, I don’t know of a single one that would fail a build due to an automated accessibility test not passing.

Yet.

But by applying Continuous Accessibility to your product development efforts, you set yourself up to be able to implement a true product release gate at whatever point you determine the tools are reliable enough to allow you to do so.
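One way to “set yourself up” for a future gate is to run automated checks in a report-only mode first and flip to blocking later. The sketch below assumes a hypothetical `GatePolicy` with a `mode` and an impact threshold; the impact levels are borrowed from axe-core’s result format, and the rule IDs are just examples. In report mode the build always passes, but the list of would-be blockers is still computed, so a team can see how noisy the gate would be before trusting it to stop the line.

```typescript
// Impact levels borrowed from axe-core's result format.
type Impact = "minor" | "moderate" | "serious" | "critical";

interface Violation {
  ruleId: string;
  impact: Impact;
}

// Hypothetical gate policy. "report" never fails the build; "block" fails it
// when any violation is at or above the failAt impact level.
interface GatePolicy {
  mode: "report" | "block";
  failAt: Impact;
}

const SEVERITY: Impact[] = ["minor", "moderate", "serious", "critical"];

interface GateResult {
  pass: boolean;
  blocking: Violation[]; // violations that would (or do) stop the line
}

function evaluateGate(violations: Violation[], policy: GatePolicy): GateResult {
  const threshold = SEVERITY.indexOf(policy.failAt);
  const blocking = violations.filter(
    (v) => SEVERITY.indexOf(v.impact) >= threshold,
  );
  // In report mode the would-be blockers are still returned, so a team can
  // watch how noisy the gate would be before turning it on.
  return { pass: policy.mode === "report" || blocking.length === 0, blocking };
}

const results: Violation[] = [
  { ruleId: "color-contrast", impact: "serious" },
  { ruleId: "region", impact: "moderate" },
];

const today = evaluateGate(results, { mode: "report", failAt: "serious" });
const later = evaluateGate(results, { mode: "block", failAt: "serious" });
console.log(today.pass, later.pass, later.blocking.length); // true false 1
```

A CI job would then exit with a non-zero status whenever `pass` is false; the switch from “started” to a true release gate becomes a one-line policy change rather than new tooling.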

– Accessibility identified as product release gate

Started

So, we’ll call this one “started,” one step up from “no activity” in our spreadsheet.

That’s good for another 4 points, bringing us to…

…40 out of 300 or 13.3%.

Continuous Accessibility alone was enough to get to “complete” for 1 of the 5 proof points.

It was sufficient to justify “partially implemented” for another 3 of them, and “started” for 1 more, in total fulfilling 40 of the possible 300 points (13.3%) in the ICT Development Lifecycle dimension of the W3C’s accessibility maturity model.

I’m sure some of you have seen that automatic tools such as linters and scanners tend to identify a high volume of issues.

When implementing tools that scan for problems, it’s a good idea to consider in advance how to integrate those results into your existing prioritization processes.

The following are related proof points: Under the Development category…

– Documented way to triage and prioritize fixing accessibility issues and address customer-reported feedback on accessibility

Under Quality Review Through Release…

– Prioritization and refinement system for accessibility defects – Bug tracking system includes an accessibility category

I didn’t include these in my base calculations because, remember, my methodology was only to include the proof points that must be affected if you implement Continuous Accessibility, but I think they’re worth talking through.

You could theoretically throw a bunch of tooling into your CI/CD and hope for the best, but I wouldn’t recommend it.

So, while they didn’t make the initial cut, you’ll want to consider these proof points to make sure you don’t get swamped with noise in the form of duplicate issues or issues that your organization might consider low-priority.
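As an example of what a triage step between a scanner and a bug tracker might look like, here is a minimal sketch. It assumes a simplified finding shape (rule ID, impact, CSS selector, page) loosely modeled on scanner output; `triage` and the sample data are hypothetical. It collapses findings for the same rule on the same element (say, a shared header that appears on every page) into one issue, drops anything below a team-chosen impact level, and sorts the rest so the most severe come first.

```typescript
type Impact = "minor" | "moderate" | "serious" | "critical";

// Simplified scanner finding: which rule fired, how severe it is, which
// element it hit (as a CSS selector), and on which page.
interface Finding {
  ruleId: string;
  impact: Impact;
  selector: string;
  page: string;
}

// One deduplicated issue, ready to file in a bug tracker.
interface Issue {
  ruleId: string;
  impact: Impact;
  selector: string;
  pages: string[];
}

const RANK: Record<Impact, number> = { minor: 0, moderate: 1, serious: 2, critical: 3 };

function triage(findings: Finding[], minImpact: Impact): Issue[] {
  const issues: Record<string, Issue> = {};
  for (const f of findings) {
    // Drop anything the team has agreed is below its priority bar.
    if (RANK[f.impact] < RANK[minImpact]) continue;
    // Same rule on the same element is one issue, however many pages show it.
    const key = `${f.ruleId}|${f.selector}`;
    const existing = issues[key];
    if (existing === undefined) {
      issues[key] = { ruleId: f.ruleId, impact: f.impact, selector: f.selector, pages: [f.page] };
    } else if (existing.pages.indexOf(f.page) === -1) {
      existing.pages.push(f.page);
    }
  }
  // Most severe first.
  return Object.keys(issues)
    .map((k) => issues[k])
    .sort((a, b) => RANK[b.impact] - RANK[a.impact]);
}

const scan: Finding[] = [
  { ruleId: "button-name", impact: "critical", selector: "header .menu-toggle", page: "/" },
  { ruleId: "button-name", impact: "critical", selector: "header .menu-toggle", page: "/pricing" },
  { ruleId: "button-name", impact: "critical", selector: "header .menu-toggle", page: "/docs" },
  { ruleId: "color-contrast", impact: "serious", selector: ".footer a", page: "/" },
  { ruleId: "region", impact: "moderate", selector: ".promo", page: "/blog" },
];

const backlog = triage(scan, "serious");
console.log(backlog.map((i) => `${i.impact}: ${i.ruleId} on ${i.pages.length} page(s)`));
```

Five raw findings become two tracked issues. Where to draw the impact line, and what counts as a duplicate, are exactly the decisions the triage and prioritization proof points ask you to document.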

If you get to “partially implemented” across all 4 stages for the first 2 of these proof points and “complete” for the binary one about having an accessibility category at all, that brings our total up to 68 out of 300, or 22.7%.
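For anyone who wants to check the running totals, this sketch reproduces the arithmetic. The points-per-stage values are inferred from the numbers quoted in this talk (“partially implemented” across 4 stages = 8 points, “complete” = 12, “started” = 4), and it assumes each proof point gets the same rating in every stage; Devon Persing’s spreadsheet may compute scores differently. The `score` helper is hypothetical.

```typescript
// Points per stage, inferred from the talk's arithmetic (an assumption,
// not taken from the spreadsheet itself).
type Rating = "no activity" | "started" | "partially implemented" | "complete";

const pointsPerStage: Record<Rating, number> = {
  "no activity": 0,
  started: 1,
  "partially implemented": 2,
  complete: 3,
};

const STAGES = 4; // Inactive, Launch, Integrate, Optimize
const MAX_POINTS = 300; // ICT Development Lifecycle dimension, per the talk

// Simplification: each proof point gets the same rating in every stage.
function score(ratings: Rating[]): number {
  return ratings.reduce((sum, r) => sum + pointsPerStage[r] * STAGES, 0);
}

const continuousAccessibility: Rating[] = [
  "partially implemented", // Developer's accessibility checklists
  "partially implemented", // Consistent approach to implementing accessibility features
  "partially implemented", // Consistent approach to testing and releasing products
  "complete", // Testing process includes automated accessibility testing
  "started", // Accessibility identified as product release gate
];

const withTriage: Rating[] = [
  ...continuousAccessibility,
  "partially implemented", // Documented way to triage and prioritize issues
  "partially implemented", // Prioritization and refinement system for defects
  "complete", // Bug tracking system includes an accessibility category
];

for (const ratings of [continuousAccessibility, withTriage]) {
  const total = score(ratings);
  console.log(`${total} / ${MAX_POINTS} = ${((total / MAX_POINTS) * 100).toFixed(1)}%`);
}
// 40 / 300 = 13.3%
// 68 / 300 = 22.7%
```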

Design

– Accessible design review process with templates, checklists, and output – Consistent approach to designing accessibility features across products

Quality Review Through Release

– Documented testing steps and cadence for agile delivery of changes that do not go through a full release cycle.

There are a ton of advancements being made around automation at the moment, including shifting automated accessibility checks left into design.

I haven’t played with it directly, so I didn’t include it in my calculations, but as one example, Figma supports triggering a type of CI/CD for what they’re calling “Continuous Design.”

Stark, which makes a popular Figma plug-in for checking accessibility, has automatable tools.

Does that combination help us get to at least partially implemented on these additional proof points? I’m not actually sure!

And I’m including the additional proof point from the quality section here because it’s a less common use case, but theoretically you could use automatic checkers to look for certain types of accessibility problems on things like draft blog posts.

If you were to hit all the points on the previous 2 slides, you could get to 108 out of 300 (36%) with a pretty reasonable level of effort. Now we’re talkin’!

Accessibility maturity models are the best way we have to track improvements in our organizations’ accessibility programs.

Continuous Accessibility enables us to deliver accessible experiences at scale, helping us improve our accessibility maturity.

But it’s not enough just to throw tools at the problem. Understand their capabilities and limitations and back them up with adjustments to your existing processes to make the most of them.
