Uncorking Analytics with Apache Pulsar, Apache Flink, and Apache Pinot

Viktor Gamov, Confluent | @gamussa
San Francisco, CA, October 2024

Slide 1

Slide 2

Before We Proceed… https://gamov.dev/uncorking-analytics @gamussa | @confluentinc | #DataStreamingSummit

Slide 3

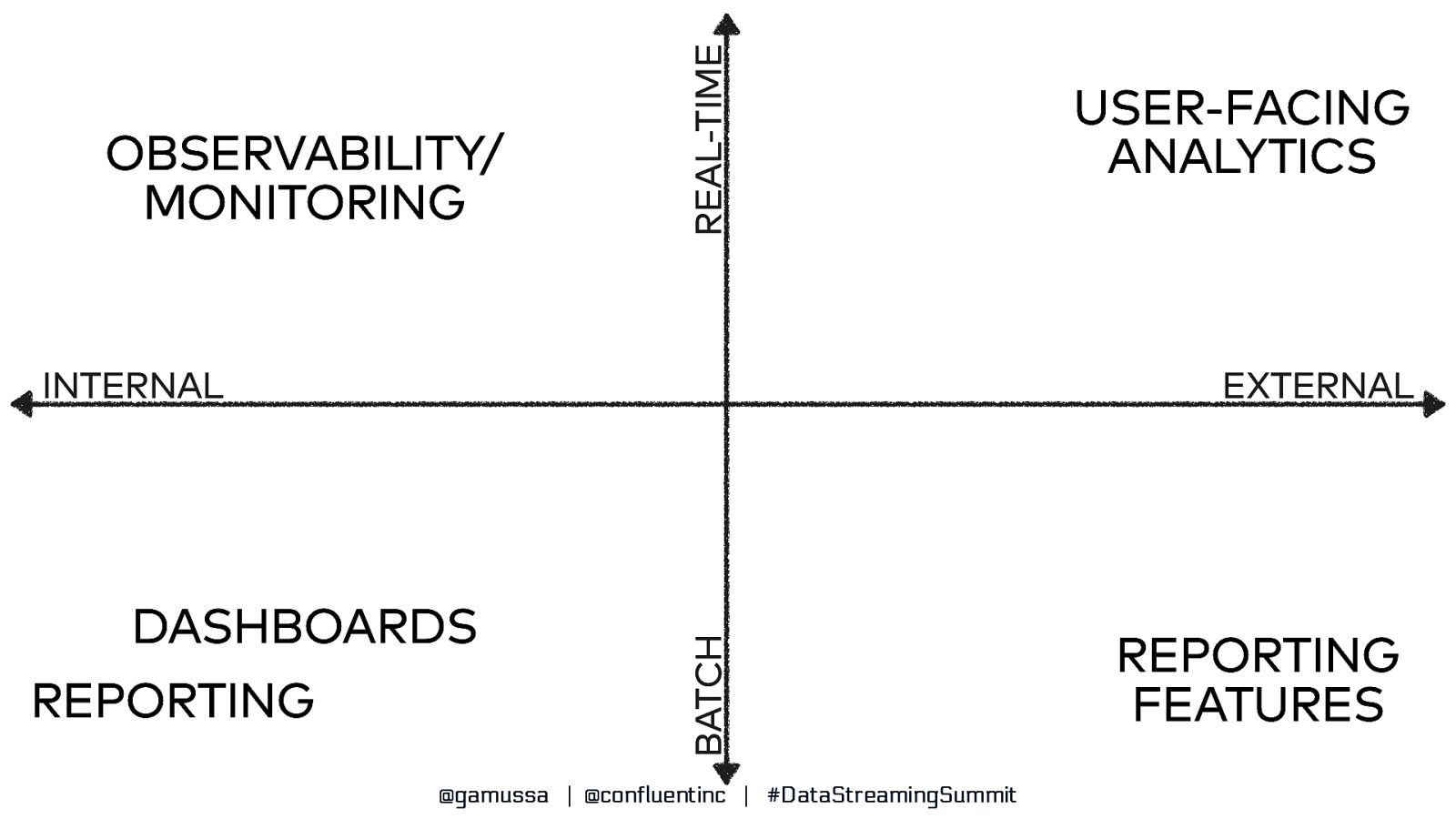

A Taxonomy of Analytics

Slide 4

Diagram: analytics use cases placed on two axes, REAL-TIME vs. BATCH and INTERNAL vs. EXTERNAL. Labels: Observability/Monitoring; Dashboards; Reporting; User-Facing Analytics; Reporting Features.

Slide 5

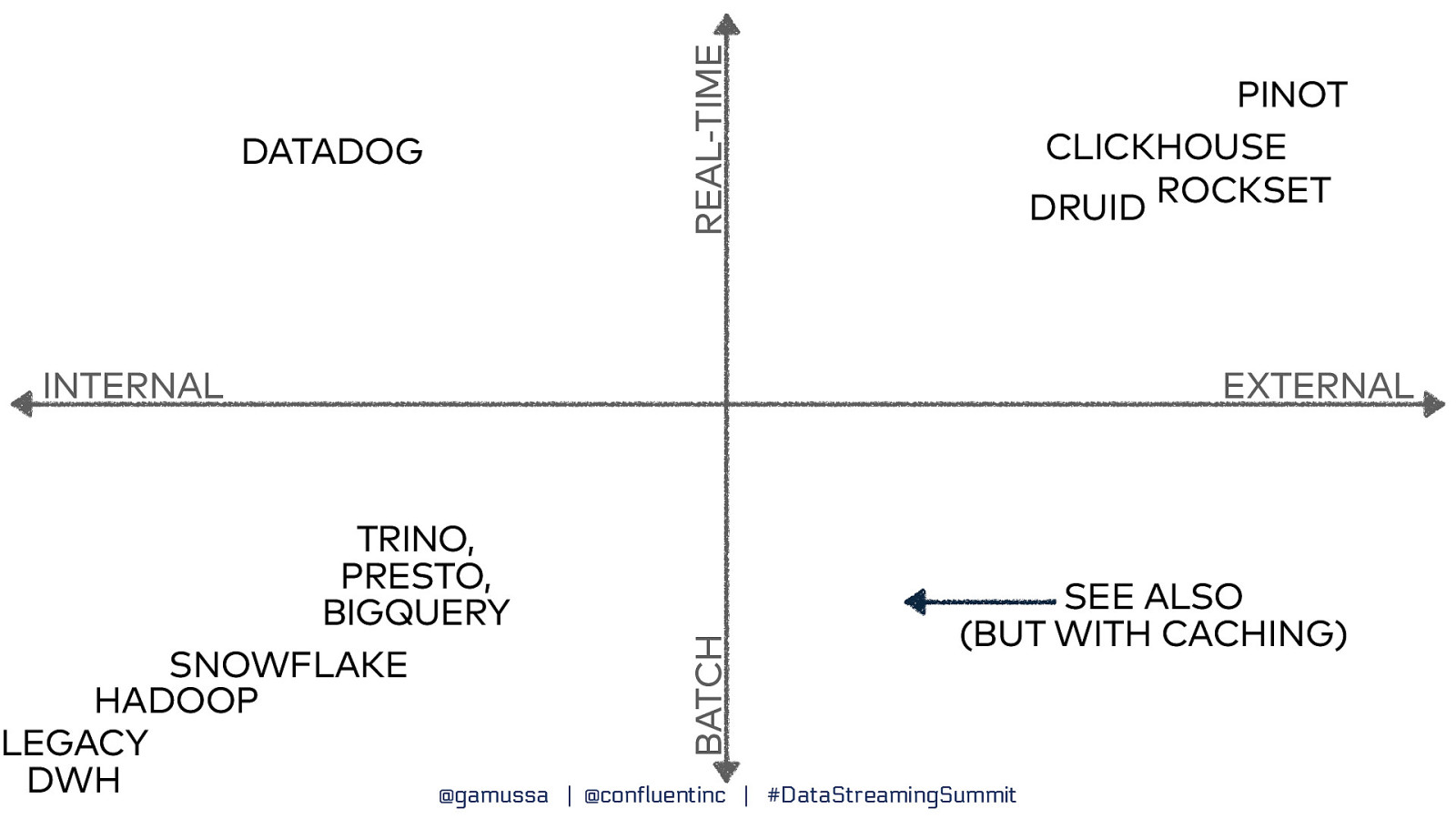

Diagram: the same axes (REAL-TIME vs. BATCH, INTERNAL vs. EXTERNAL) populated with example systems: Datadog, Pinot, ClickHouse, Rockset, Druid, Trino, Presto, BigQuery, Snowflake, Hadoop, legacy DWH. One entry is annotated "see also (but with caching)".

Slide 6

Who Does Real-Time Analytics?

Slide 7

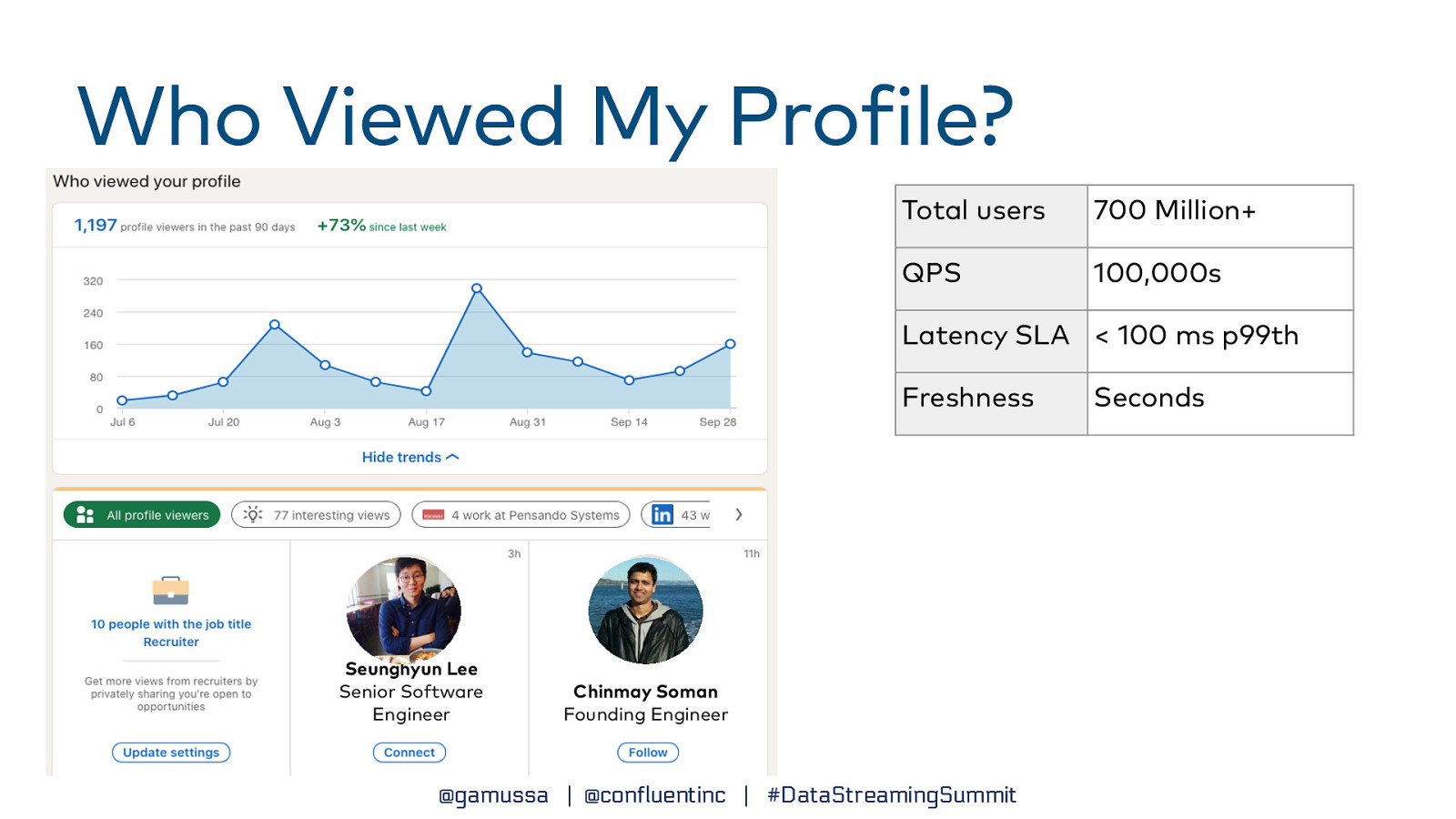

Who Viewed My Profile?
● Total users: 700 million+
● QPS: 100,000s
● Latency SLA: < 100 ms (p99)
● Freshness: seconds

Seunghyun Lee, Senior Software Engineer
Chinmay Soman, Founding Engineer

Slide 8

Viktor GAMOV
● Principal Developer Advocate | Confluent
● Java Champion
● O’Reilly and Manning Author
● X (formerly Twitter): @gamussa

Slide 9


Slide 10

What is Apache Pinot™?

Slide 11

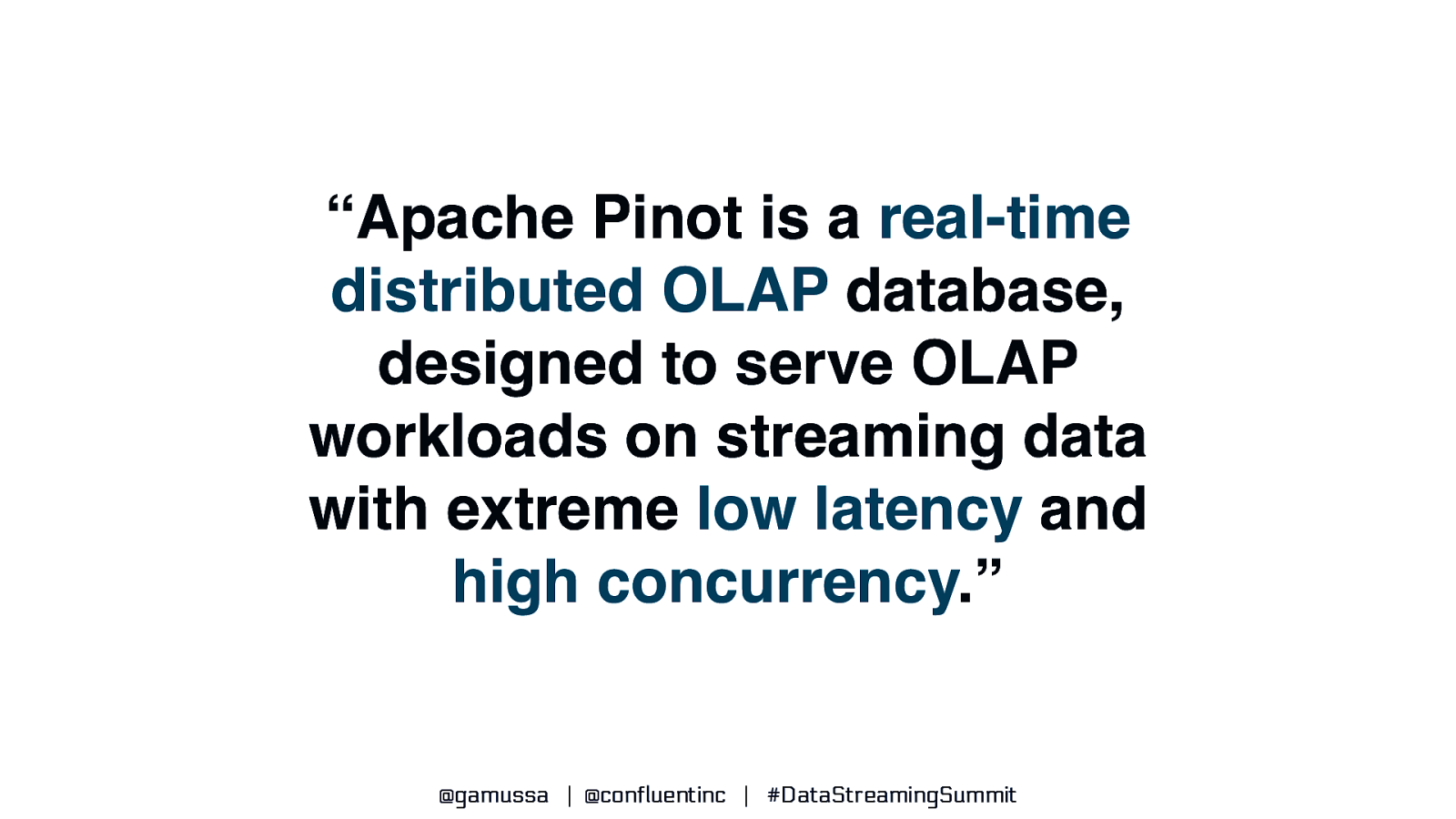

“Apache Pinot is a real-time distributed OLAP database, designed to serve OLAP workloads on streaming data with extreme low latency and high concurrency.”

Slide 12

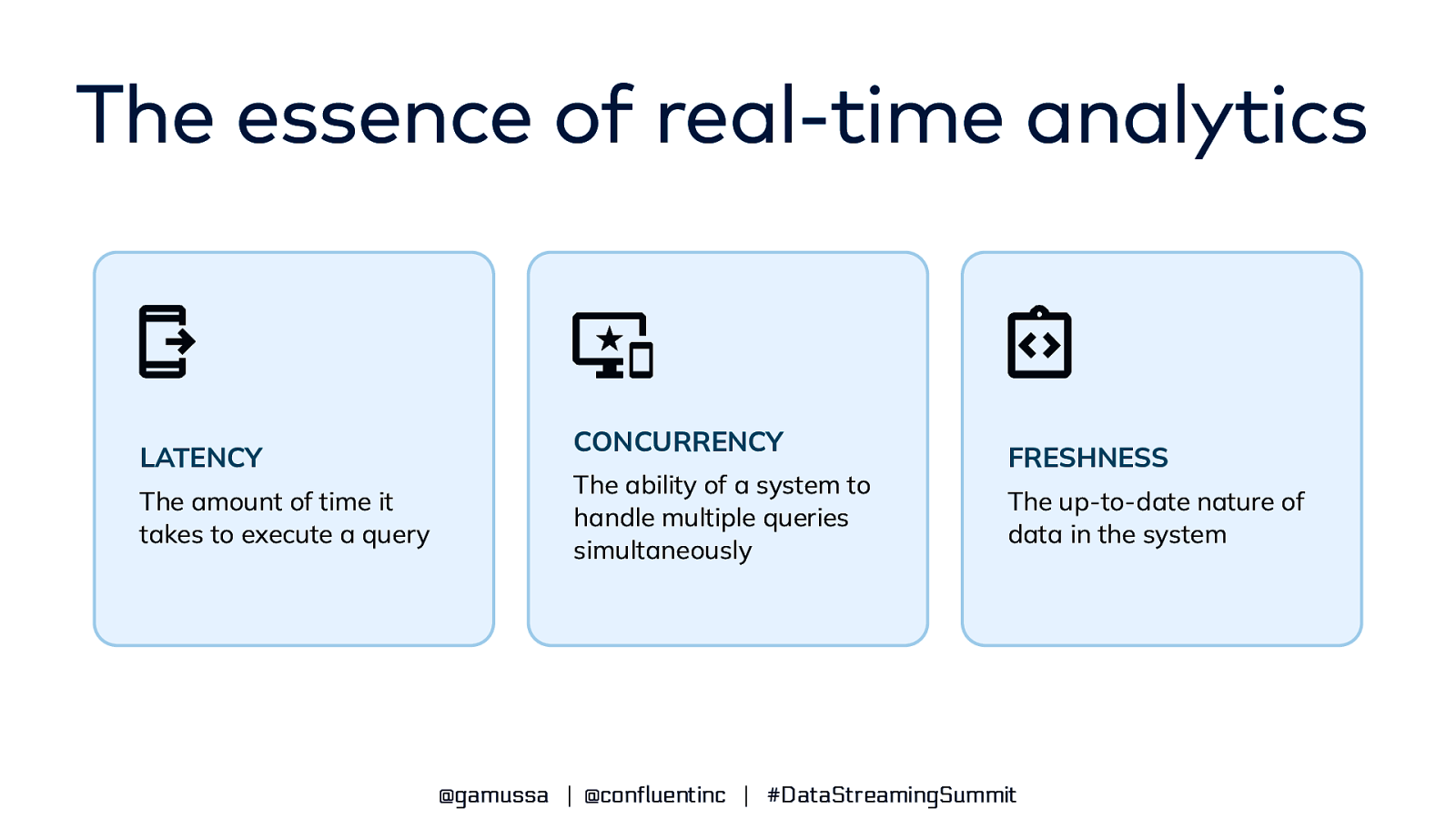

The essence of real-time analytics
● LATENCY: The amount of time it takes to execute a query
● CONCURRENCY: The ability of a system to handle multiple queries simultaneously
● FRESHNESS: The up-to-date nature of data in the system

Slide 13

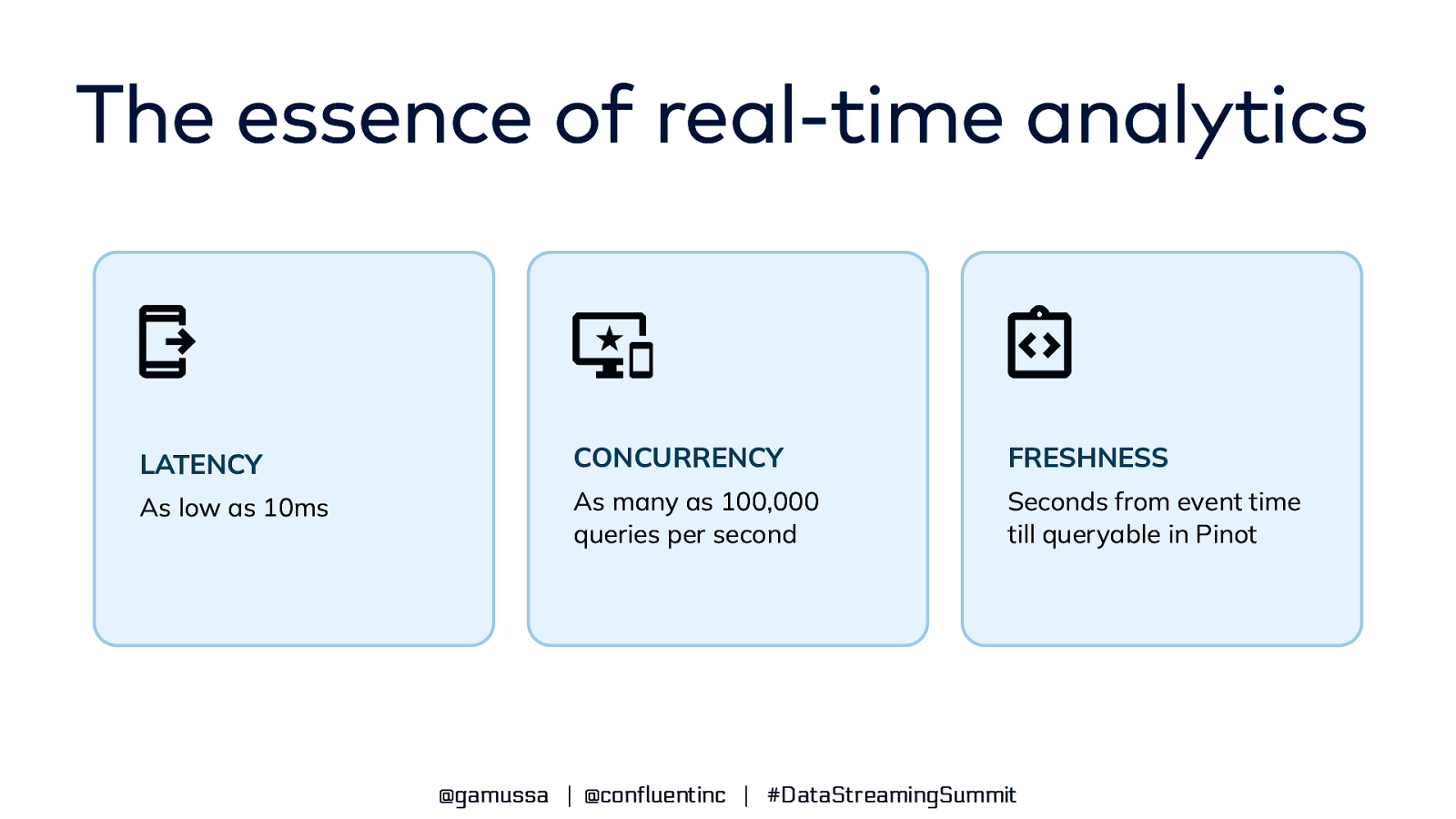

The essence of real-time analytics
● LATENCY: As low as 10 ms
● CONCURRENCY: As many as 100,000 queries per second
● FRESHNESS: Seconds from event time until queryable in Pinot

Slide 14

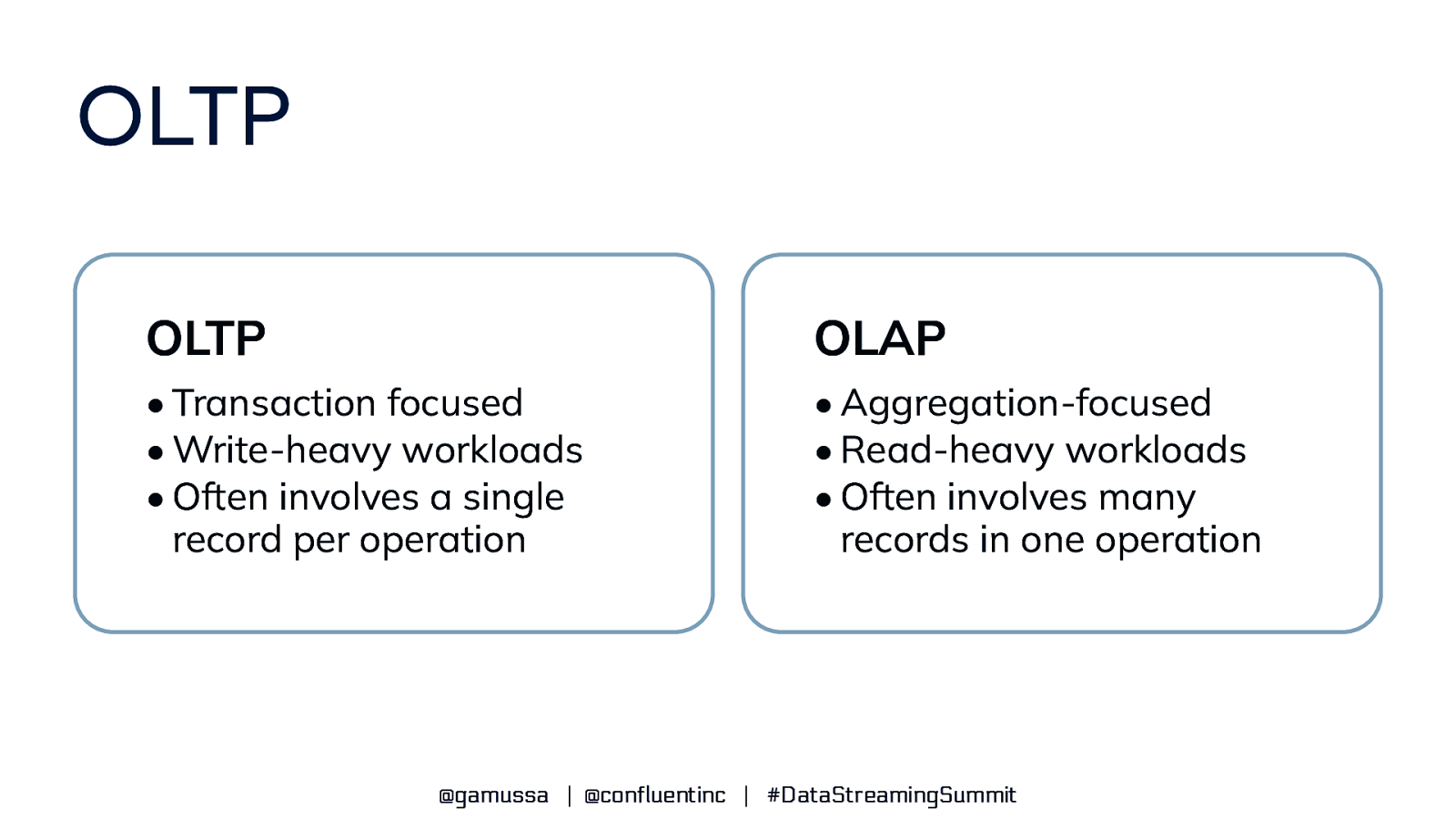

OLTP
• Transaction-focused
• Write-heavy workloads
• Often involves a single record per operation

OLAP
• Aggregation-focused
• Read-heavy workloads
• Often involves many records in one operation
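The contrast between the two workload styles can be sketched in a few lines. This is only an illustration: it uses Python's built-in `sqlite3` as a stand-in for any SQL store, and the `orders` table and its rows are made up for the example.

```python
import sqlite3

# In-memory SQLite stands in for any SQL store; the table and rows are invented.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, country TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (id, country, amount) VALUES (?, ?, ?)",
    [(1, "US", 10.0), (2, "US", 25.0), (3, "DE", 40.0), (4, "DE", 5.0)],
)

# OLTP-style operation: a write that touches a single record, located by key.
conn.execute("UPDATE orders SET amount = ? WHERE id = ?", (12.5, 1))

# OLAP-style operation: a read that aggregates over many records at once.
totals = conn.execute(
    "SELECT country, COUNT(*), SUM(amount) FROM orders "
    "GROUP BY country ORDER BY country"
).fetchall()
print(totals)
```

The update reads and writes one row, while the aggregate has to scan every row in the table, which is the access pattern an OLAP store is built around.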

Slide 15

Data Model
● Pinot uses the completely familiar tabular data model
● Table creation and schema definition expressed in JSON
● Queries expressed in SQL

Slide 16

Architecture: Tables and Segments

Slide 17

Tables
● The basic unit of data storage in Pinot
● Composed of rows and columns
● Expected to scale to arbitrarily large row counts
● Defined using a schema and tableConfig JSON file
● Three varieties: offline, real-time, and hybrid
● Every column is either a metric, dimension, or date/time
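As a rough illustration of the schema side of that definition, the sketch below builds a schema as a Python dictionary and serializes it to JSON. The `pageviews` table and its column names are hypothetical. The top-level keys (`dimensionFieldSpecs`, `metricFieldSpecs`, `dateTimeFieldSpecs`) follow the Pinot schema layout as I understand it, so check the Pinot documentation for your version before relying on them. The `column_kinds` helper is not part of Pinot; it only shows that each column falls into exactly one of the three kinds.

```python
import json

# Hypothetical schema for a "pageviews" table; column names are invented.
schema = {
    "schemaName": "pageviews",
    "dimensionFieldSpecs": [
        {"name": "ip", "dataType": "STRING"},
        {"name": "status", "dataType": "INT"},
    ],
    "metricFieldSpecs": [
        {"name": "bytes", "dataType": "LONG"},
    ],
    "dateTimeFieldSpecs": [
        {
            "name": "ts",
            "dataType": "LONG",
            "format": "1:MILLISECONDS:EPOCH",
            "granularity": "1:MILLISECONDS",
        }
    ],
}


def column_kinds(schema):
    """Map each column name to its kind: dimension, metric, or dateTime."""
    kinds = {}
    for key, kind in [
        ("dimensionFieldSpecs", "dimension"),
        ("metricFieldSpecs", "metric"),
        ("dateTimeFieldSpecs", "dateTime"),
    ]:
        for spec in schema.get(key, []):
            kinds[spec["name"]] = kind
    return kinds


schema_json = json.dumps(schema, indent=2)
print(schema_json)
print(column_kinds(schema))
```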

Slide 18

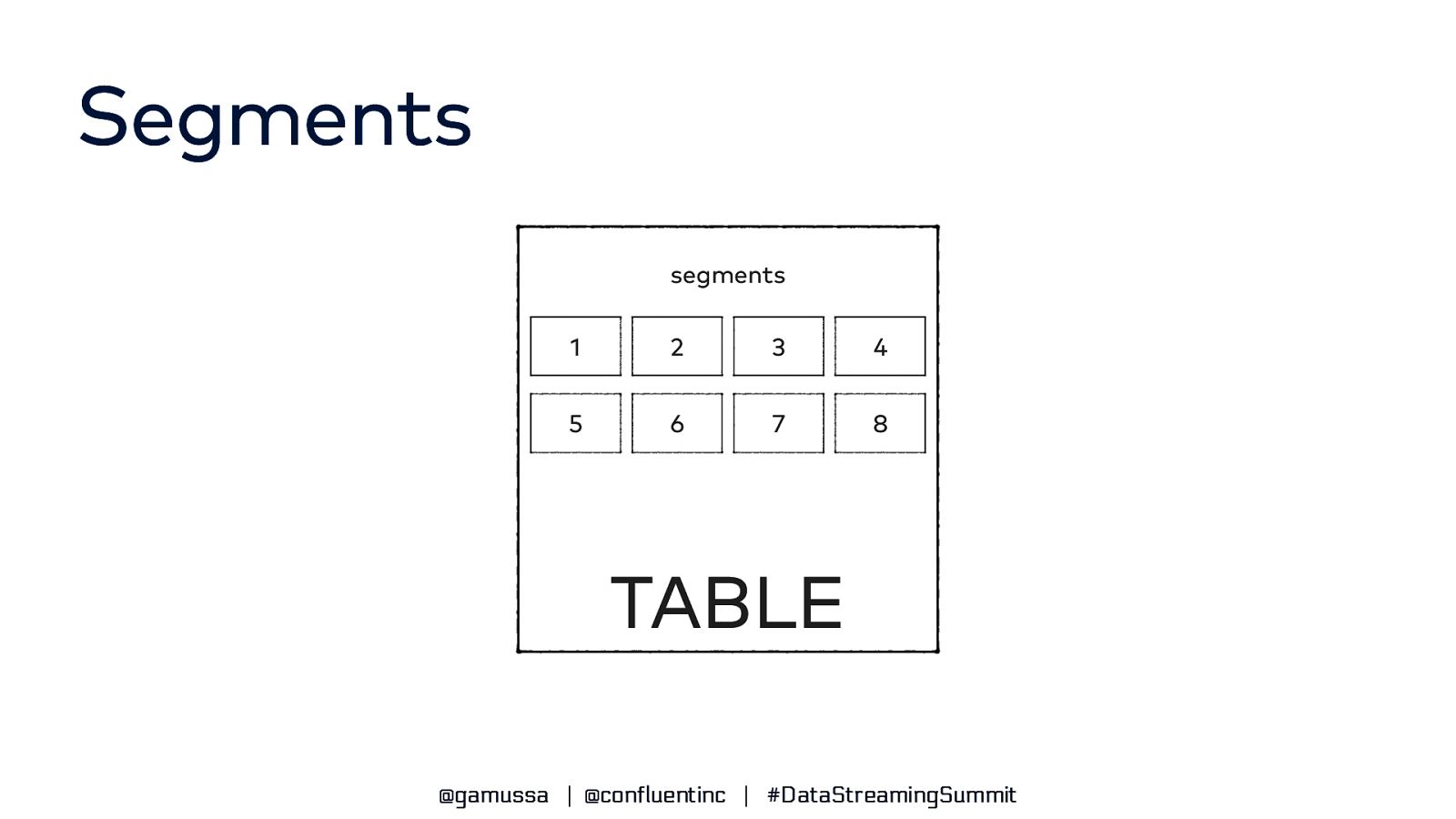

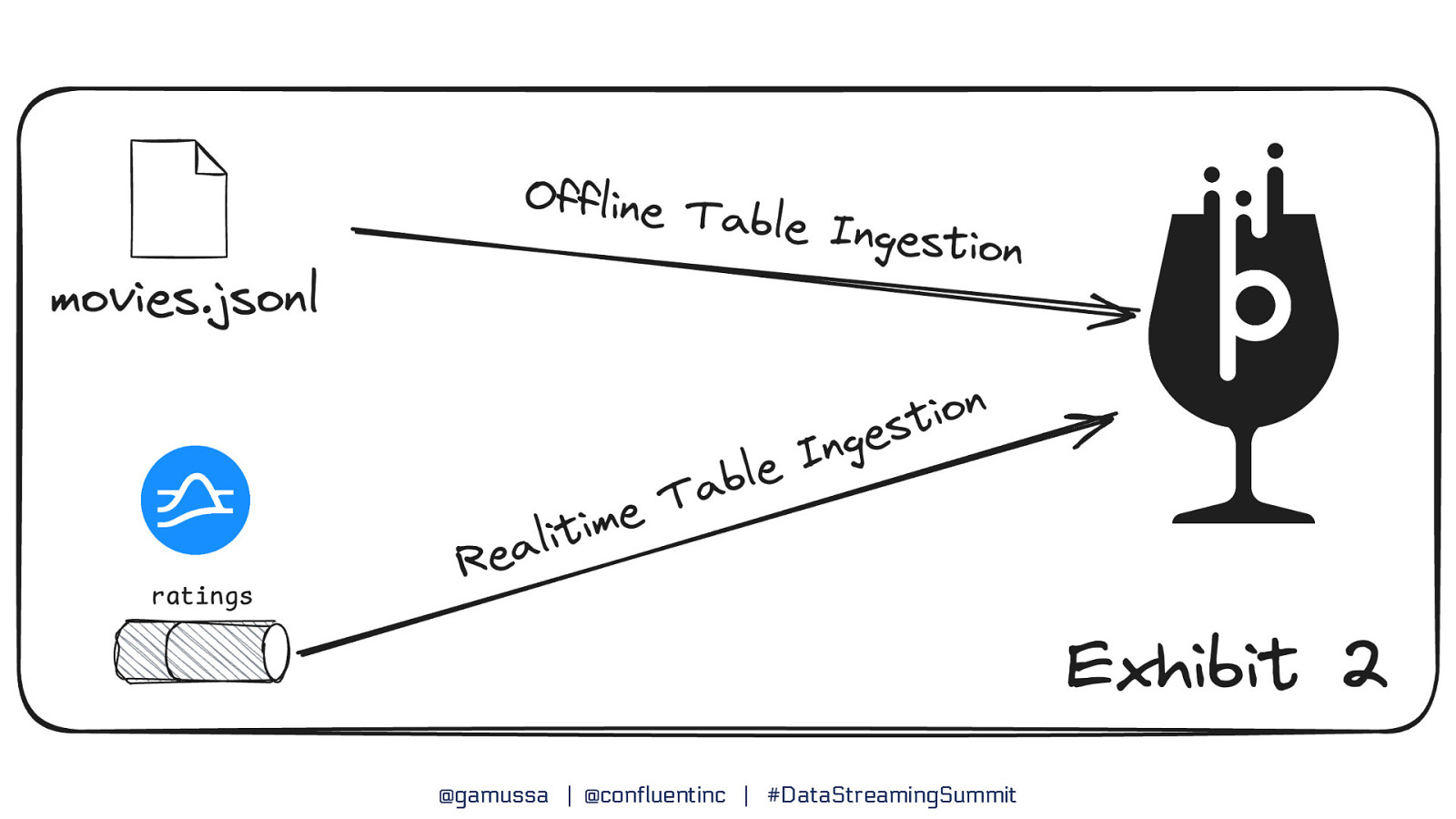

Segments
● Tables are split into units of storage called segments
● Similar to shards or partitions but transparent to you, the user
● For offline tables, segments are created outside of Pinot and pushed into the cluster using a REST API
● For real-time tables, segments are created automatically from events sourced by the event streaming system (e.g., Pulsar, Kafka)
● Standard utilities support batch ingest from standard file types (Avro, JSON, CSV)
● APIs are available to create segments from Spark, Flink, and Hadoop

Slide 19

Segments

Diagram: a TABLE divided into segments 1 through 8.

Slide 20

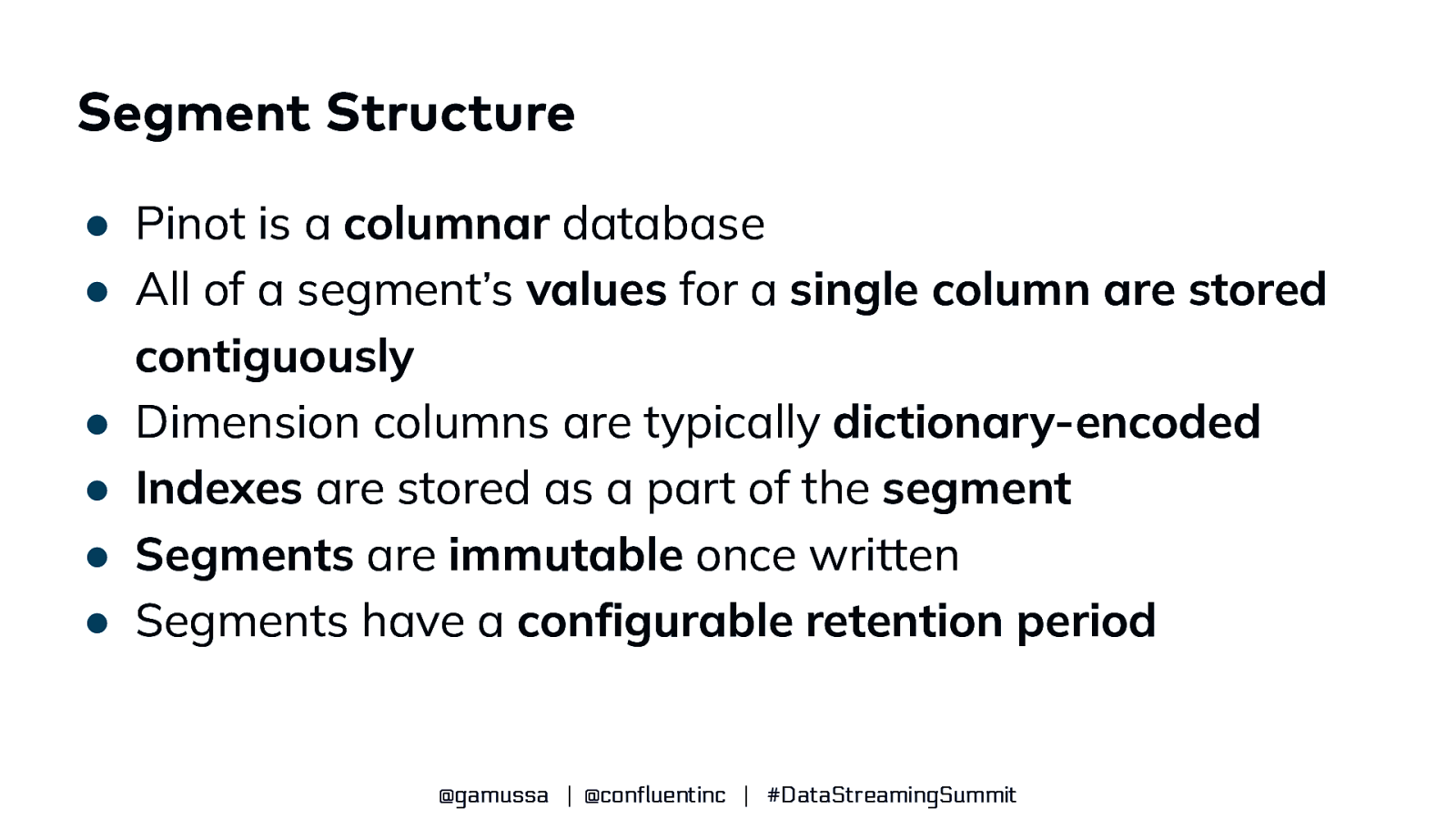

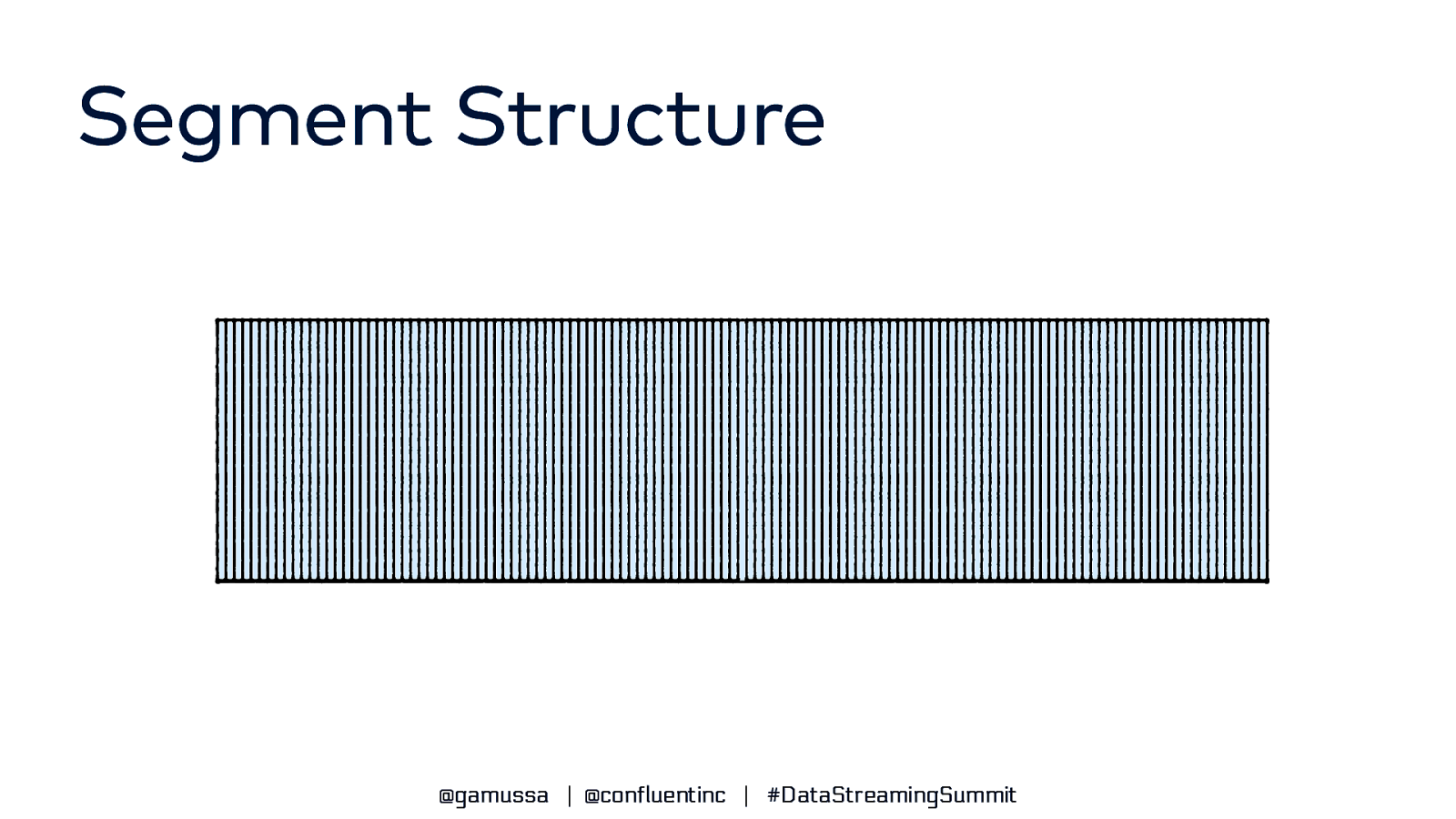

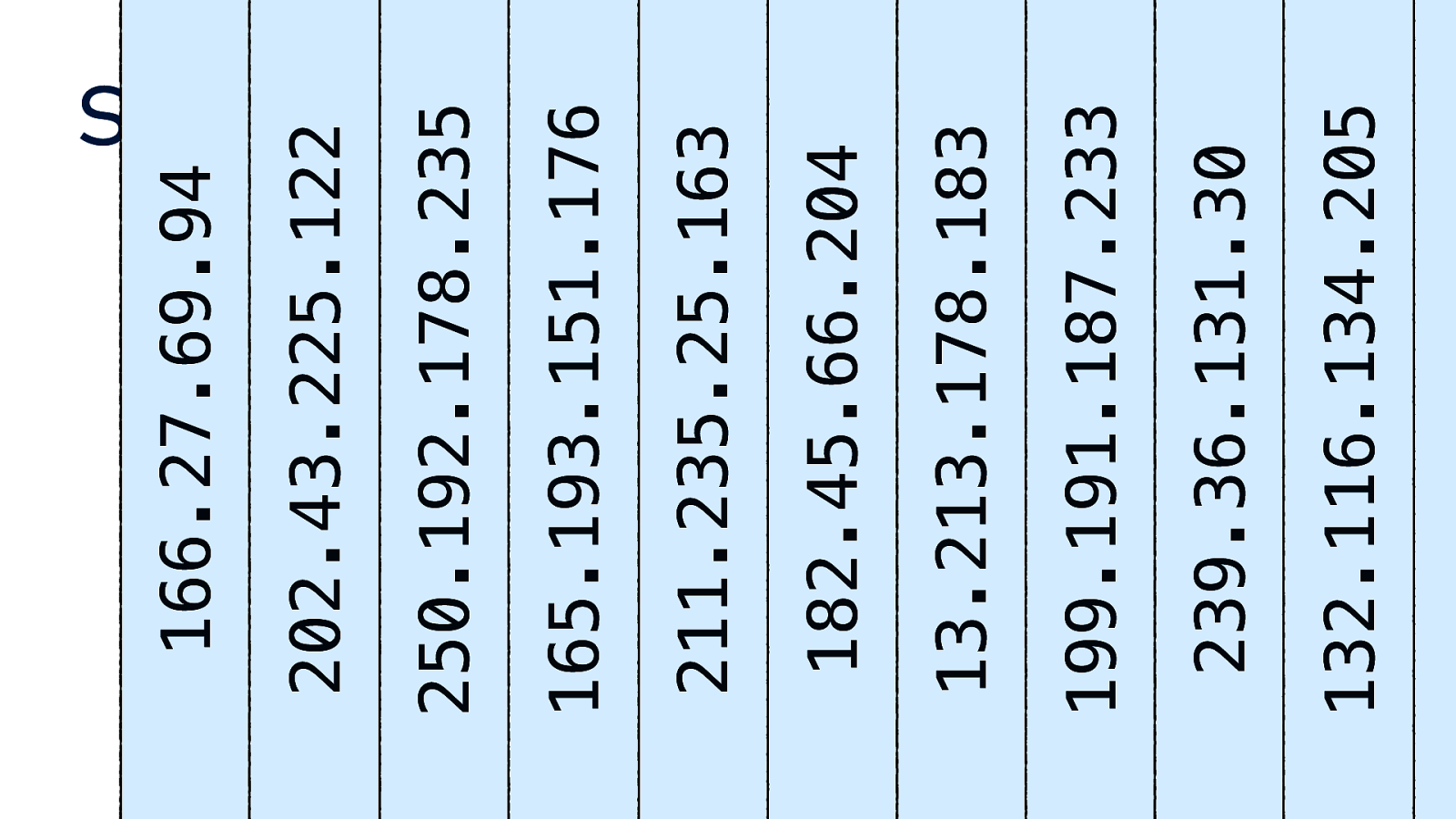

Segment Structure
● Pinot is a columnar database
● All of a segment’s values for a single column are stored contiguously
● Dimension columns are typically dictionary-encoded
● Indexes are stored as a part of the segment
● Segments are immutable once written
● Segments have a configurable retention period
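Dictionary encoding, mentioned above for dimension columns, can be sketched as follows. This is a toy illustration of the general technique, not Pinot's on-disk format: the function names and the sample `request` values are made up, and the dictionary is sorted here mainly so the output is deterministic.

```python
def dictionary_encode(values):
    """Return (dictionary, ids): the sorted distinct values and one integer id per row."""
    dictionary = sorted(set(values))
    index = {value: i for i, value in enumerate(dictionary)}
    ids = [index[value] for value in values]
    return dictionary, ids


def dictionary_decode(dictionary, ids):
    """Rebuild the original column from the dictionary and the per-row ids."""
    return [dictionary[i] for i in ids]


# A low-cardinality column: many rows, few distinct values.
request_column = ["GET", "POST", "GET", "GET", "PUT", "POST"]
dictionary, ids = dictionary_encode(request_column)
print(dictionary)
print(ids)
```

Each distinct string is stored once, and the column itself shrinks to a list of small integers, which is why the technique suits columns with few distinct values.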

Slide 21

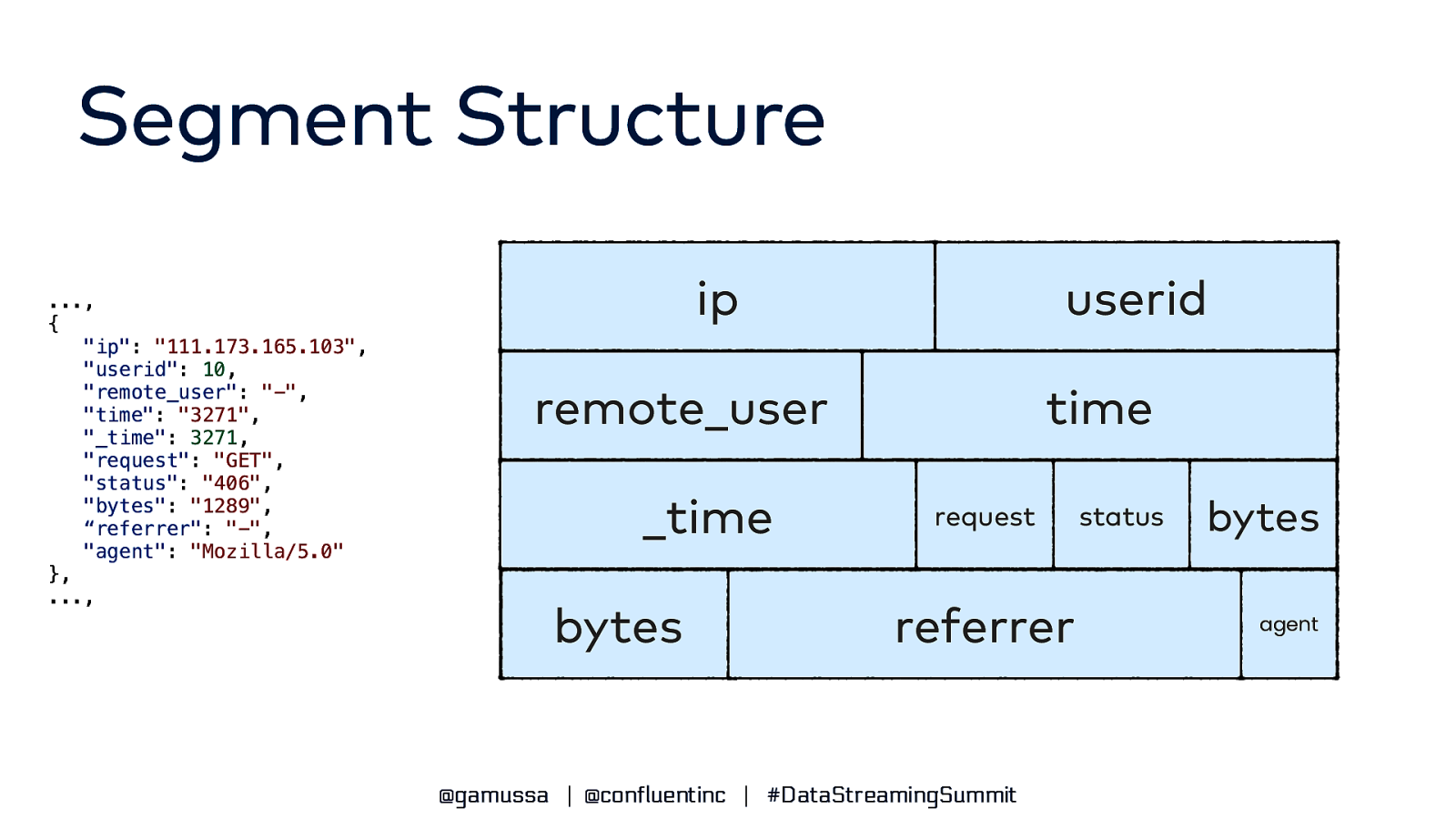

Segment Structure

    …,
    {
      "ip": "111.173.165.103",
      "userid": 10,
      "remote_user": "-",
      "time": "3271",
      "_time": 3271,
      "request": "GET",
      "status": "406",
      "bytes": "1289",
      "referrer": "-",
      "agent": "Mozilla/5.0"
    },
    …,

Diagram: the record's fields laid out as columns (ip, userid, remote_user, time, _time, request, status, bytes, referrer, agent).
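The move from row-shaped records to column-shaped storage can be sketched like this. It is a simplified illustration under two assumptions: every record has the same fields, and only a few of the fields from the record above are kept. The second row is made up for the example, and `to_columns` is a hypothetical helper, not a Pinot API.

```python
rows = [
    {"ip": "111.173.165.103", "userid": 10, "request": "GET", "status": "406", "bytes": "1289"},
    # Made-up second record so each column holds more than one value.
    {"ip": "132.116.134.205", "userid": 11, "request": "GET", "status": "200", "bytes": "512"},
]


def to_columns(rows):
    """Pivot a list of row dicts into one contiguous list of values per column.

    Assumes every row has the same set of fields.
    """
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


columns = to_columns(rows)
print(columns["ip"])
print(columns["userid"])
```

A query that only needs `ip` can now read that one list and skip the other columns entirely.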

Slide 22

Segment Structure: the ip column

Slide 23

Segment Structure

Slide 24

Segment Structure

ip column values:
132.116.134.205
239.36.131.30
199.191.187.233
13.213.178.183
182.45.66.204
211.235.25.163
165.193.151.176
250.192.178.235
202.43.225.122
166.27.69.94

Slide 25

Part 1: Batch Ingestion in Pinot

Slide 26


Slide 27

Part 2: Streaming Ingestion with Kafka

Slide 28


Slide 29

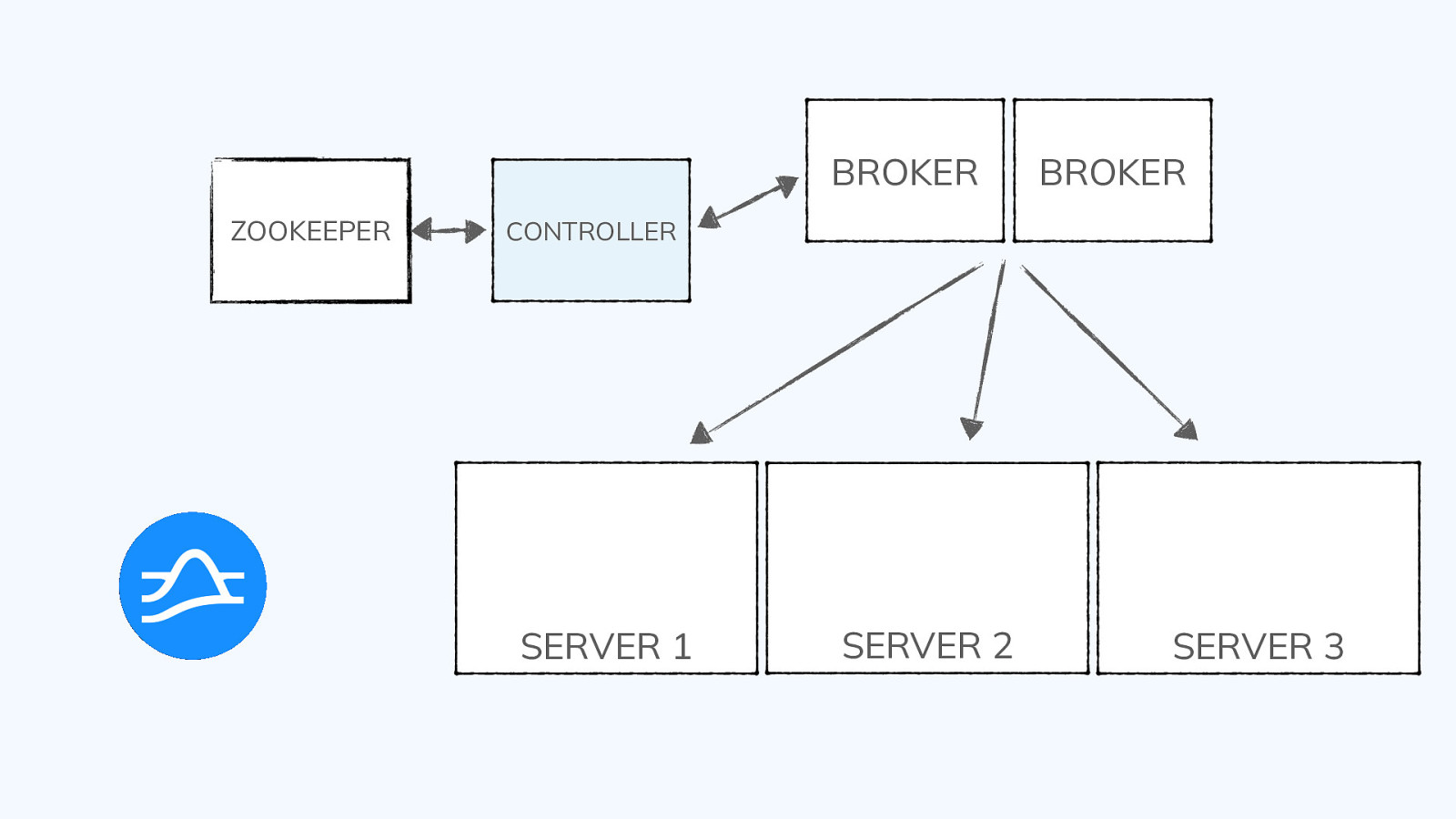

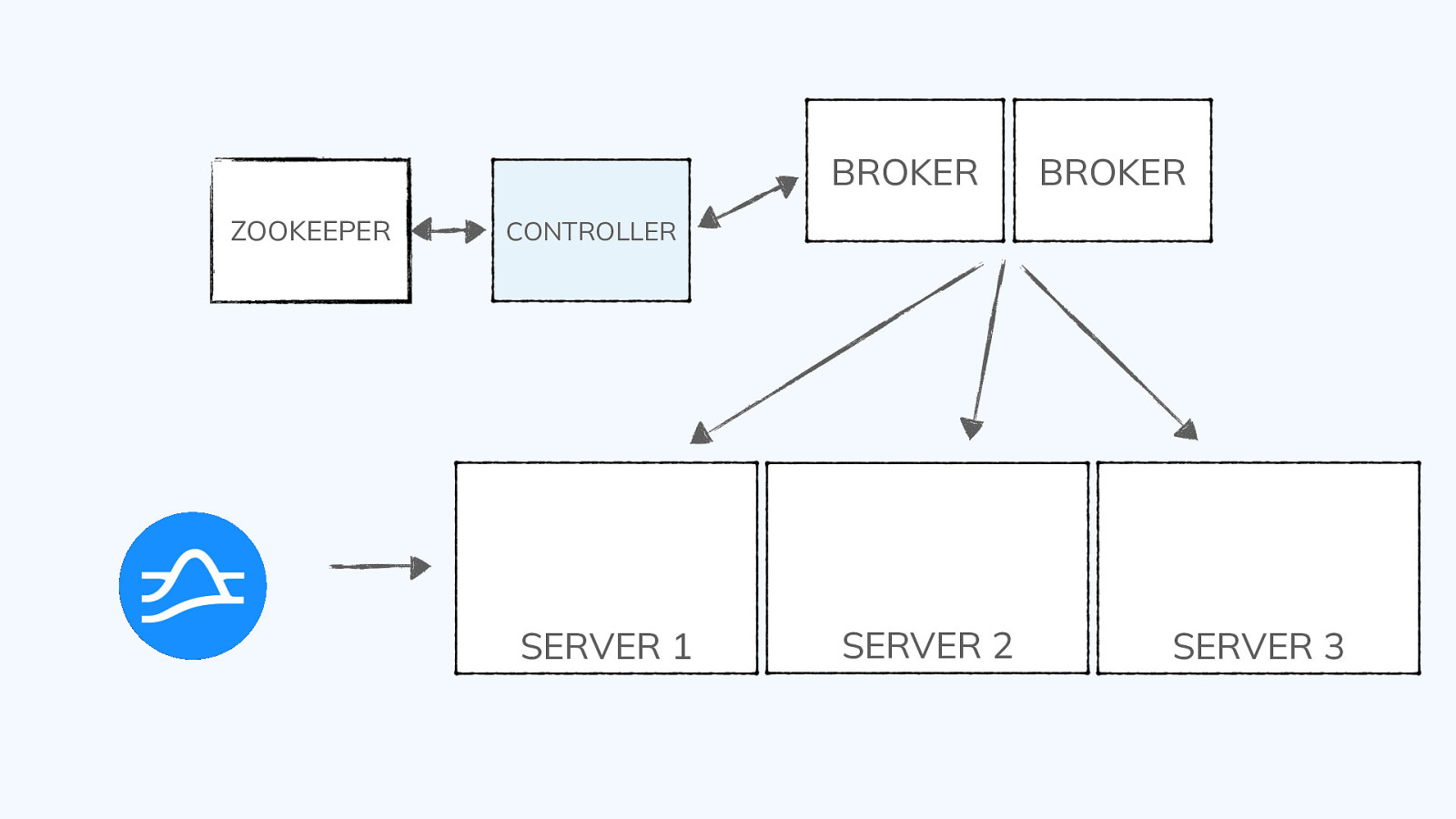

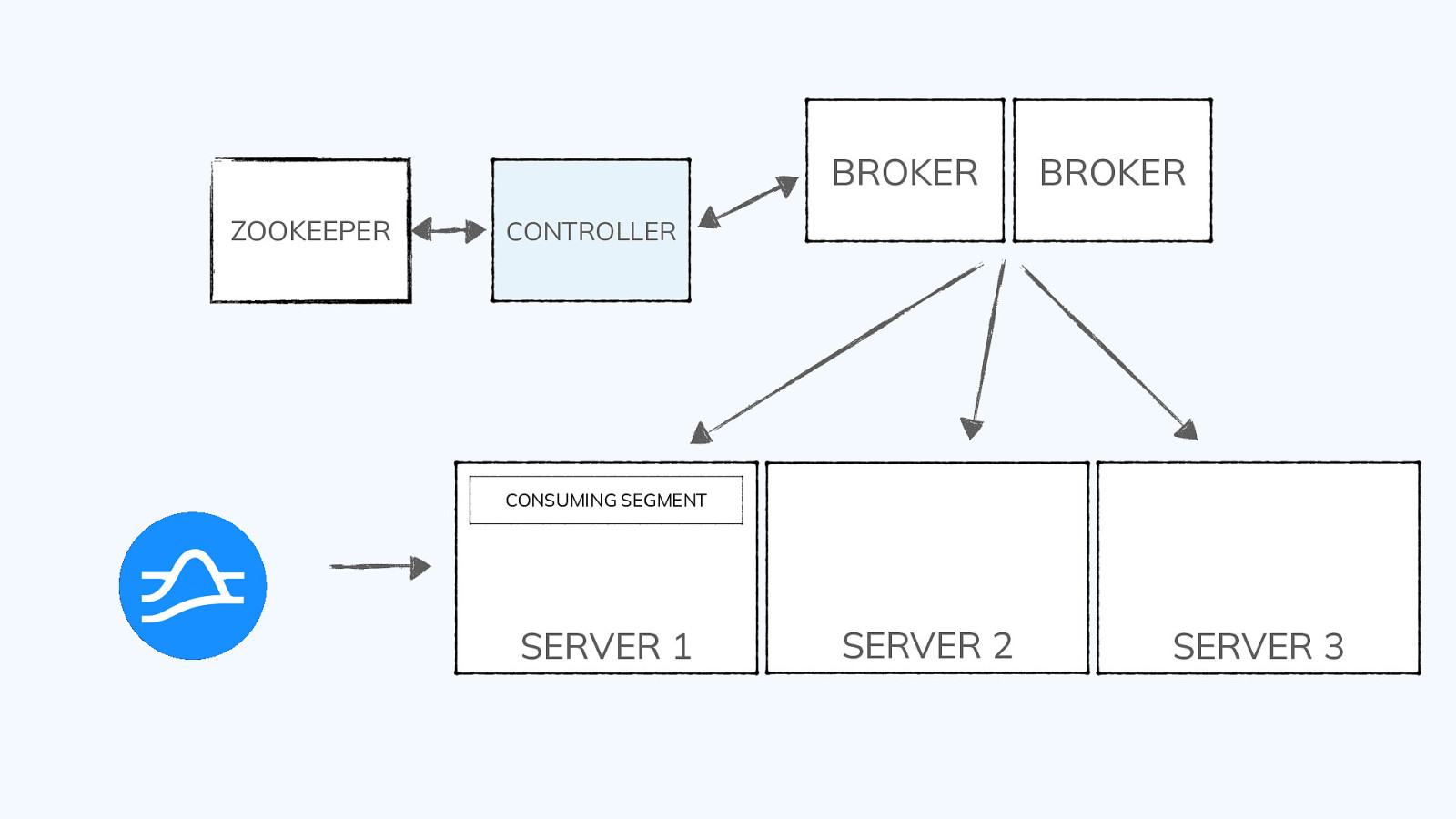

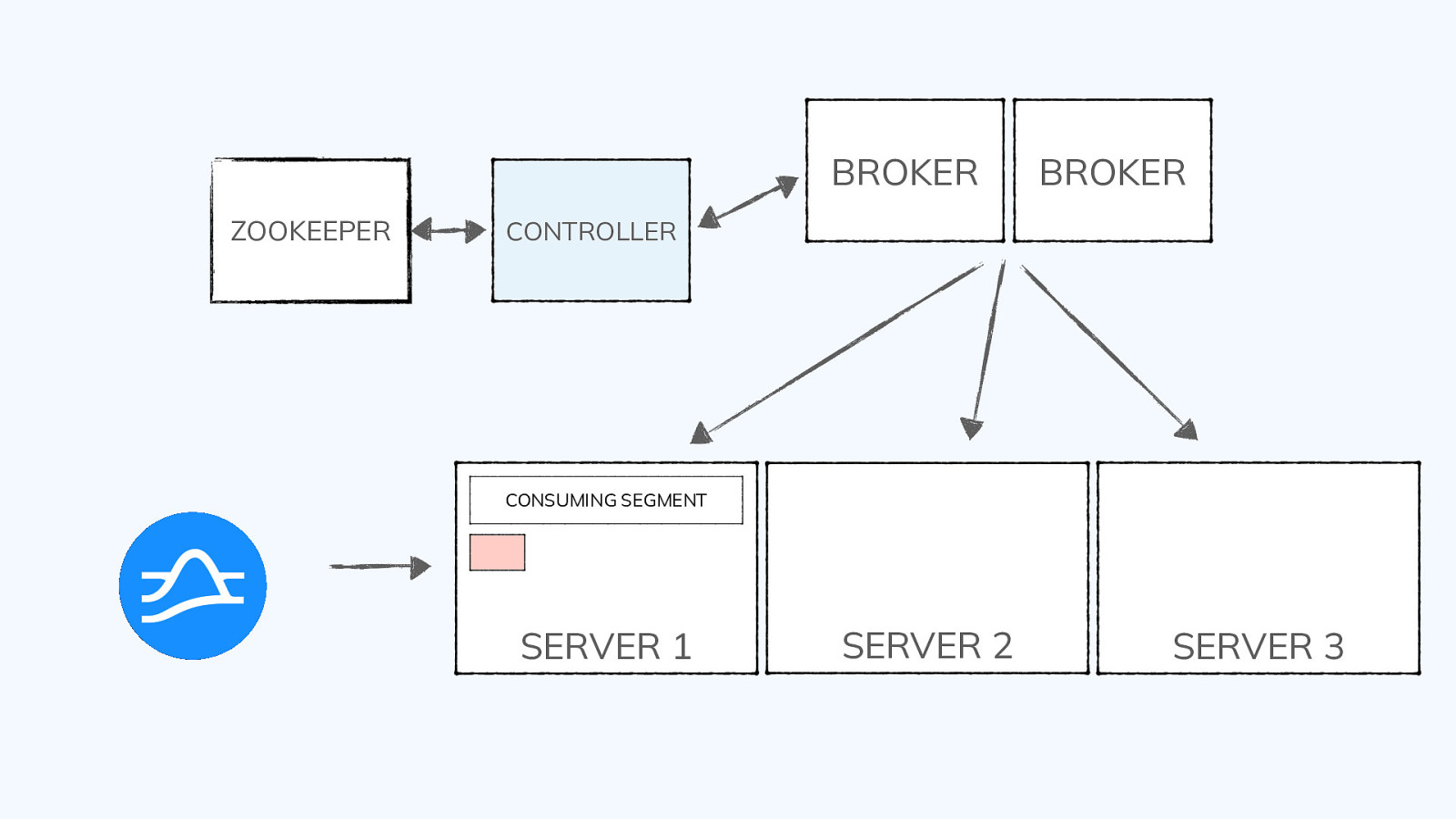

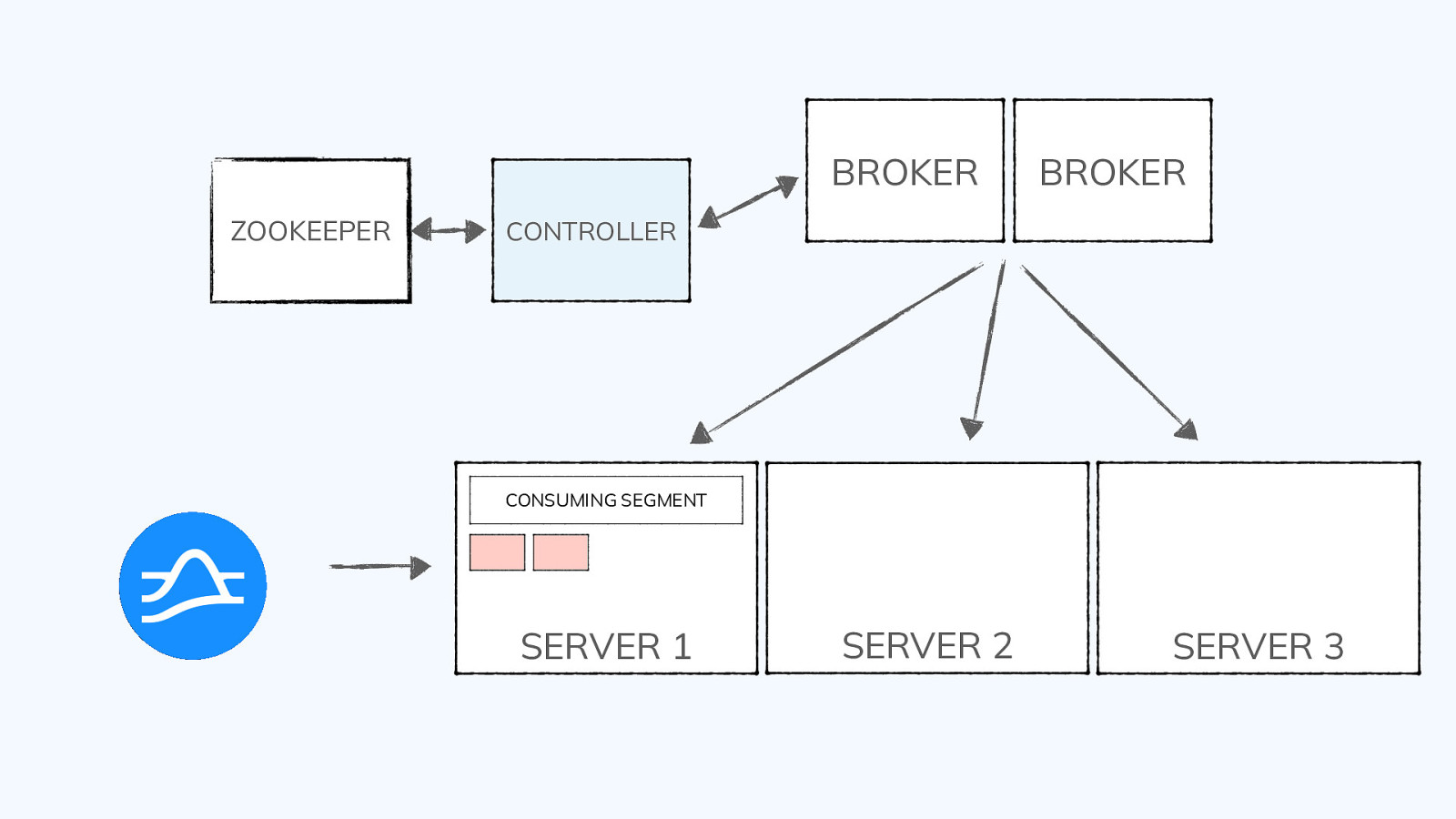

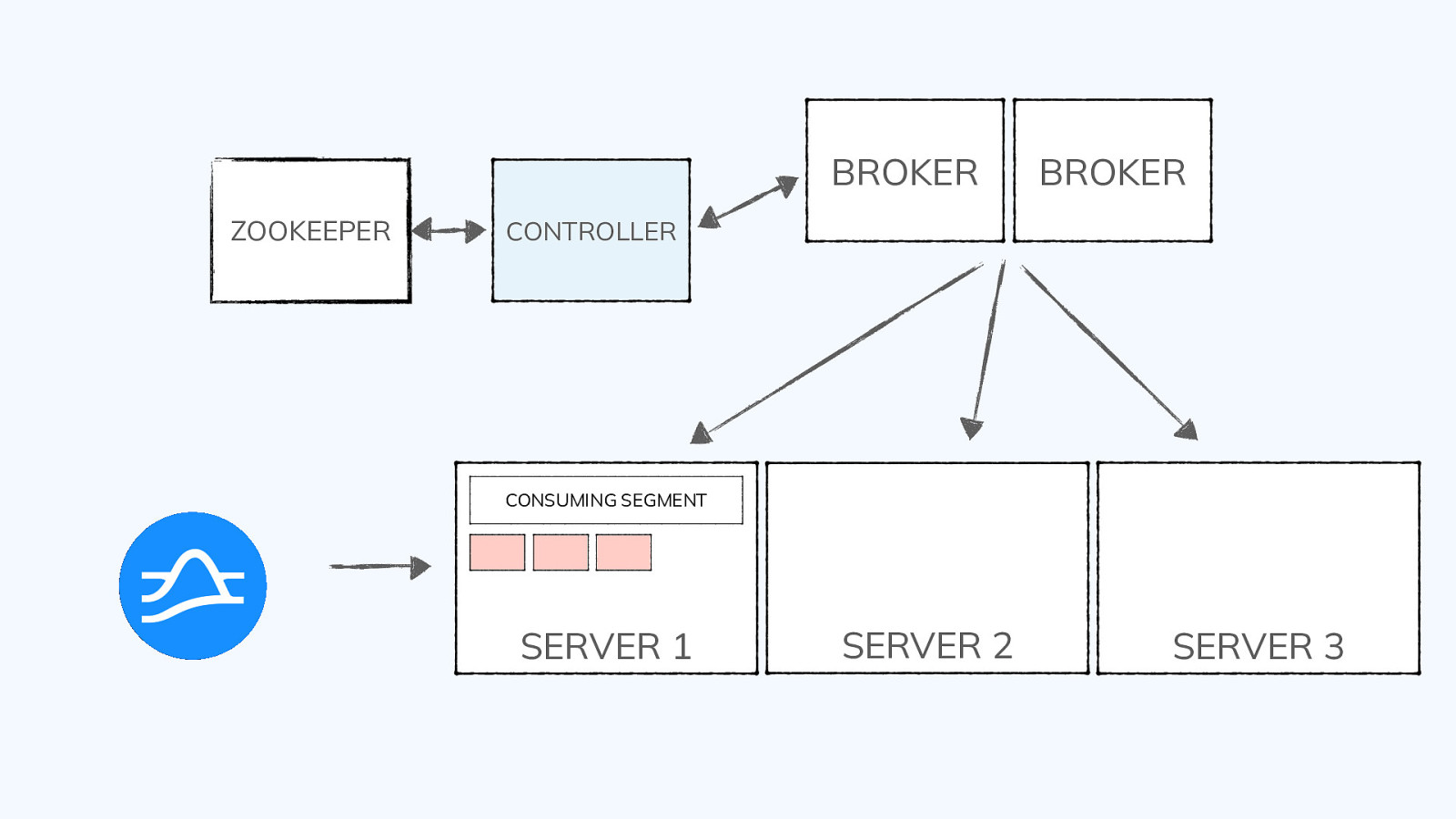

Diagram components: BROKER, ZOOKEEPER, BROKER, CONTROLLER, SERVER 1, SERVER 2, SERVER 3

Slide 30


Slide 31

Diagram components: BROKER, ZOOKEEPER, BROKER, CONTROLLER, CONSUMING SEGMENT, SERVER 1, SERVER 2, SERVER 3

Slide 32


Slide 33


Slide 34


Slide 35

Part 3: Stream Join in Flink

Slide 36

Flink 101

Slide 37

«Apache Flink is a framework and distributed processing engine for stateful computations over unbounded and bounded data streams.»

Slide 38

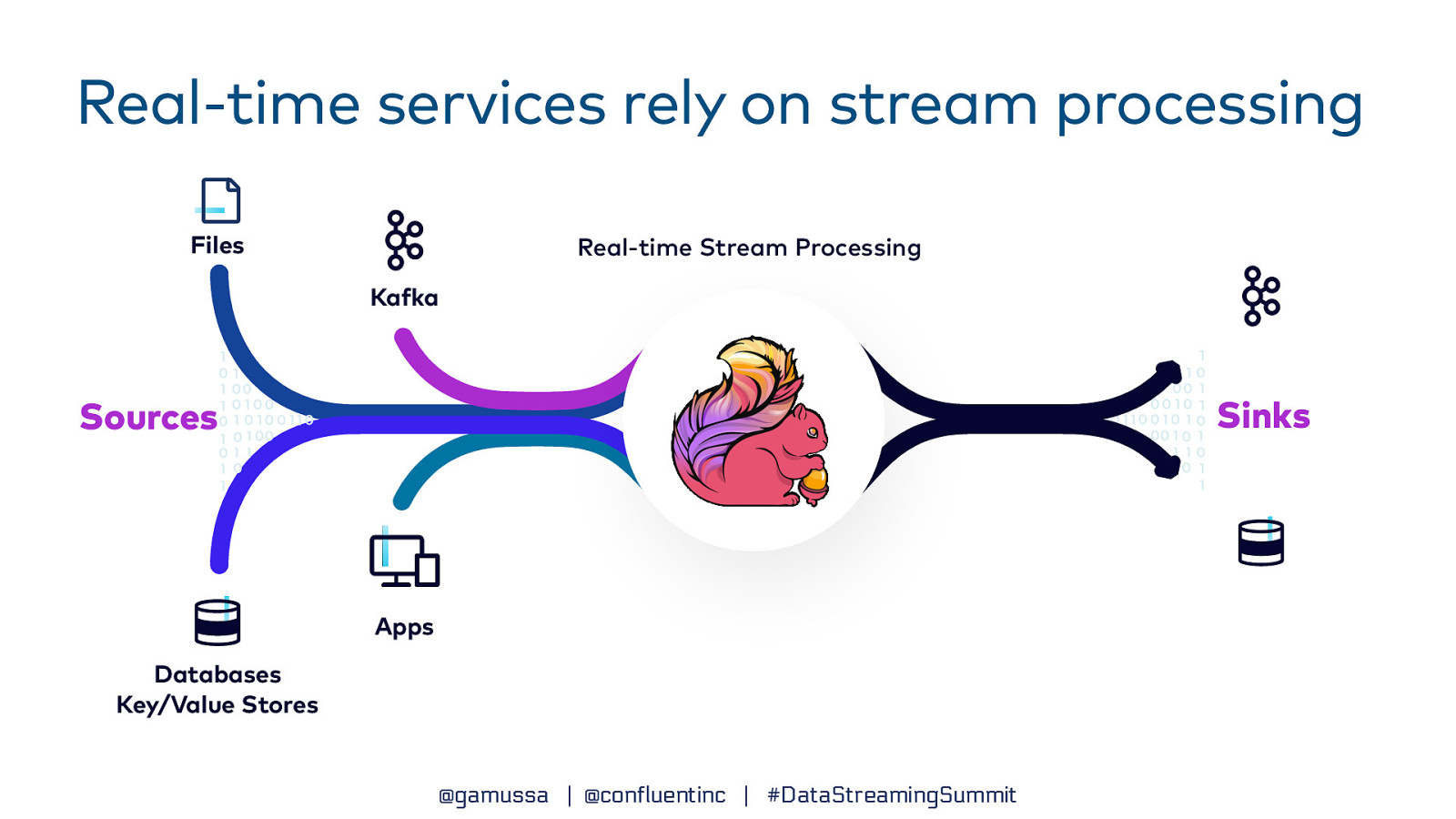

Real-time services rely on stream processing

Diagram: sources and sinks (files, Kafka, apps, databases, key/value stores) connected through real-time stream processing.

Slide 39

What is Flink SQL?

Slide 40

A standards-compliant SQL engine for processing both batch and streaming data with the scalability, performance, and consistency of Apache Flink

Slide 41

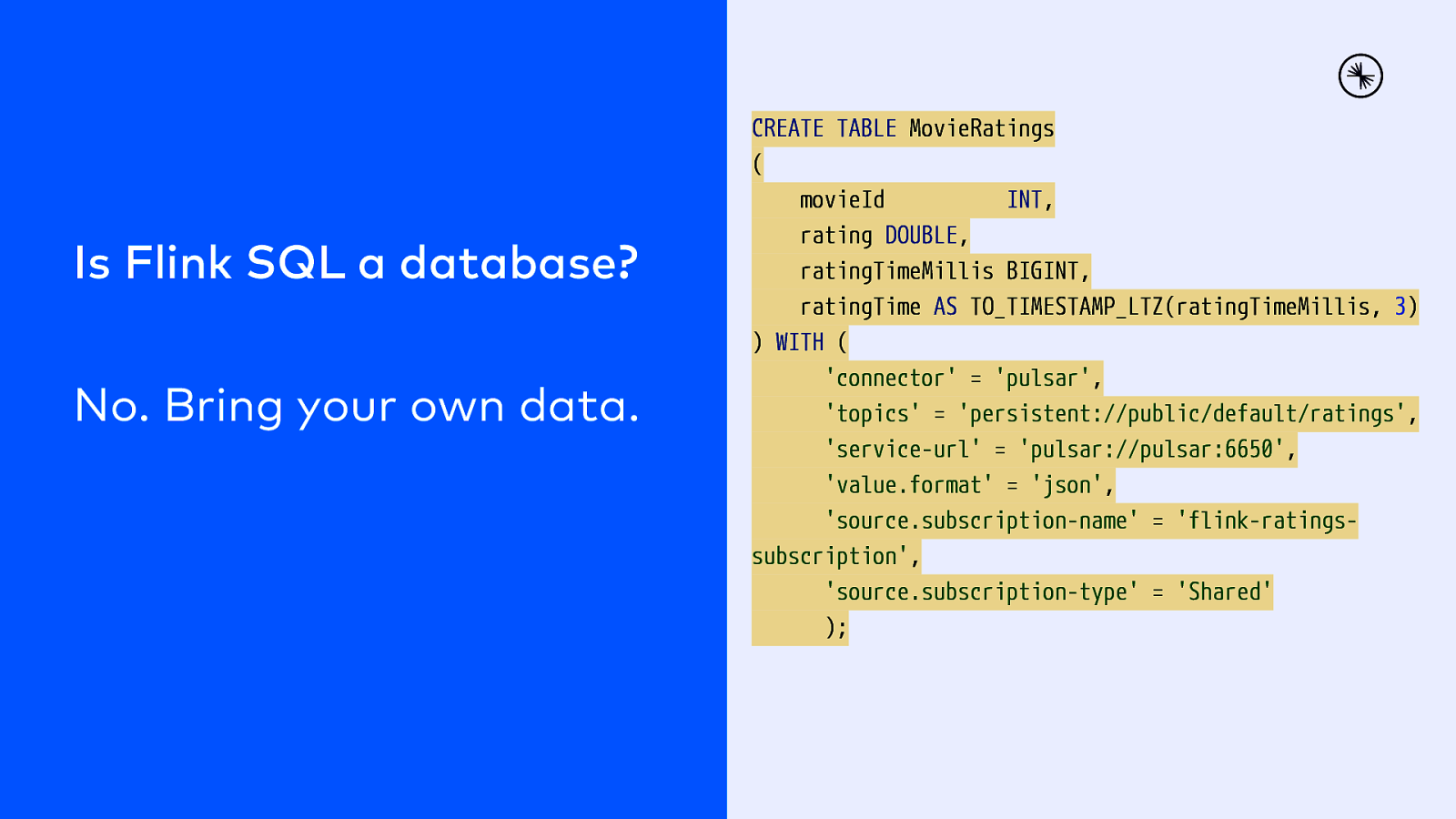

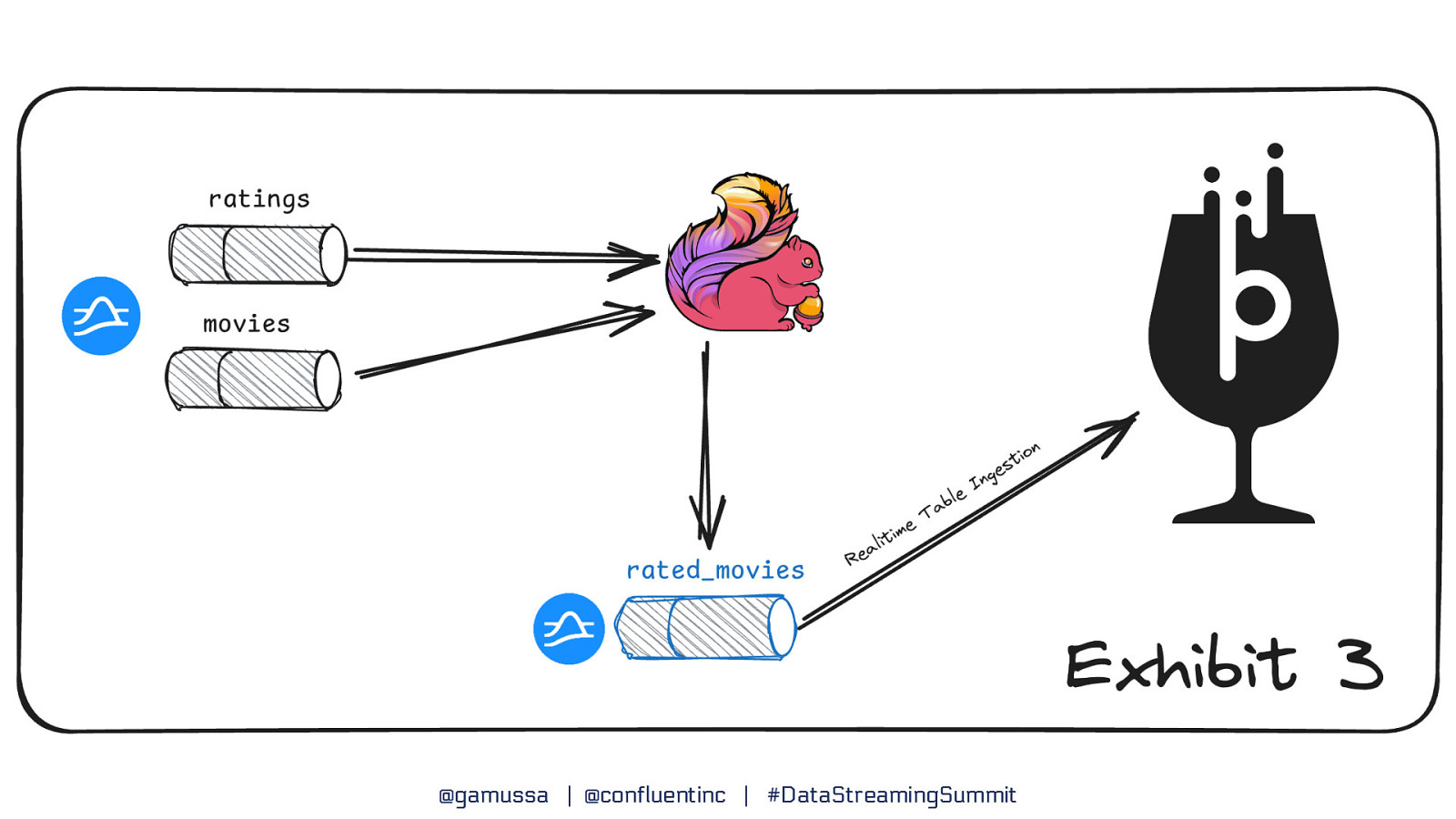

Is Flink SQL a database? No. Bring your own data.

    CREATE TABLE MovieRatings (
      movieId INT,
      rating DOUBLE,
      ratingTimeMillis BIGINT,
      ratingTime AS TO_TIMESTAMP_LTZ(ratingTimeMillis, 3)
    ) WITH (
      'connector' = 'pulsar',
      'topics' = 'persistent://public/default/ratings',
      'service-url' = 'pulsar://pulsar:6650',
      'value.format' = 'json',
      'source.subscription-name' = 'flink-ratingssubscription',
      'source.subscription-type' = 'Shared'
    );

Slide 42

How does Flink work with Pulsar?

Slide 43


Slide 44

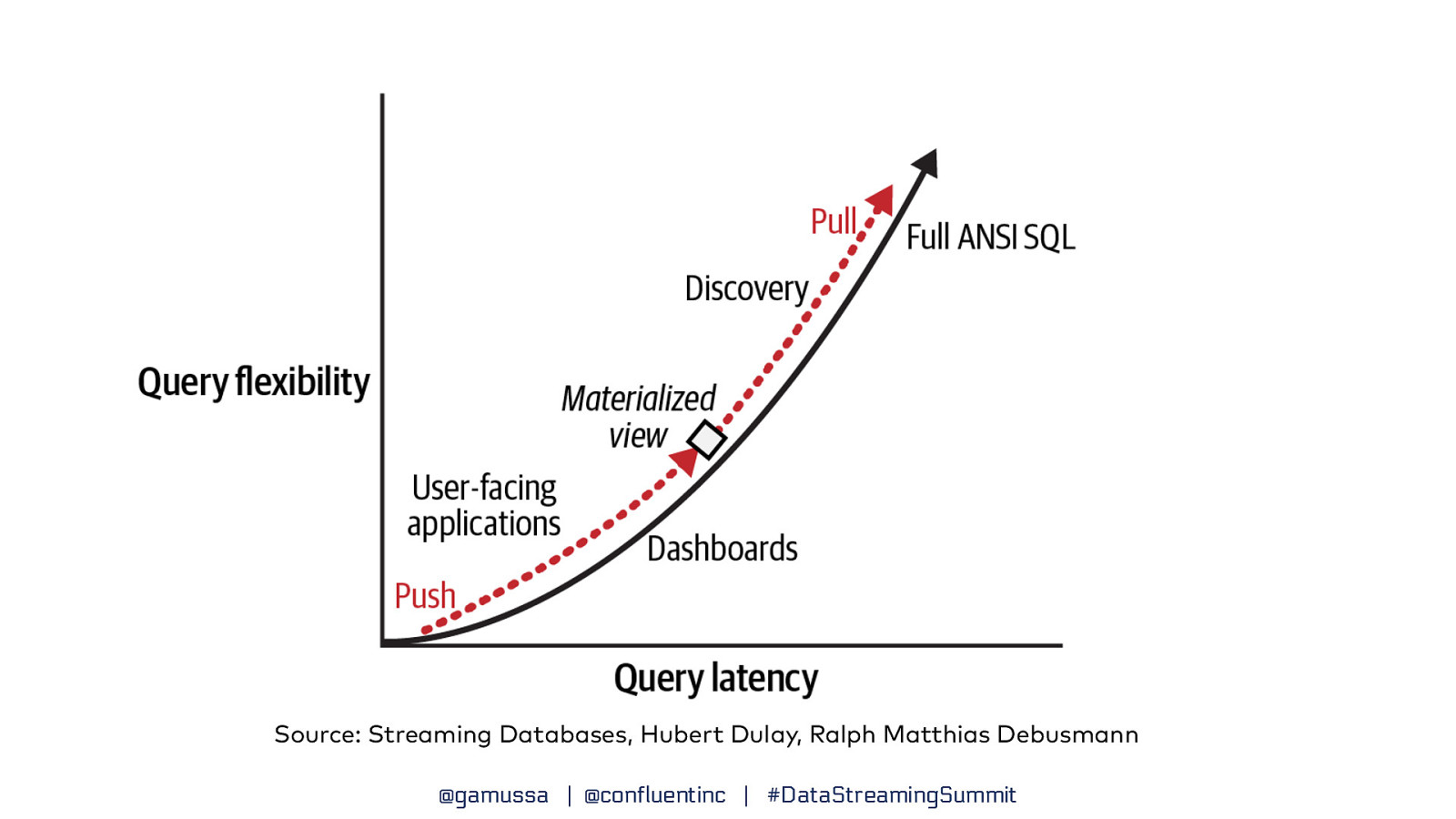

Source: Streaming Databases, Hubert Dulay, Ralph Matthias Debusmann

Slide 45

Find the code of the demo 👉 https://gamov.dev/uncorking-analytics