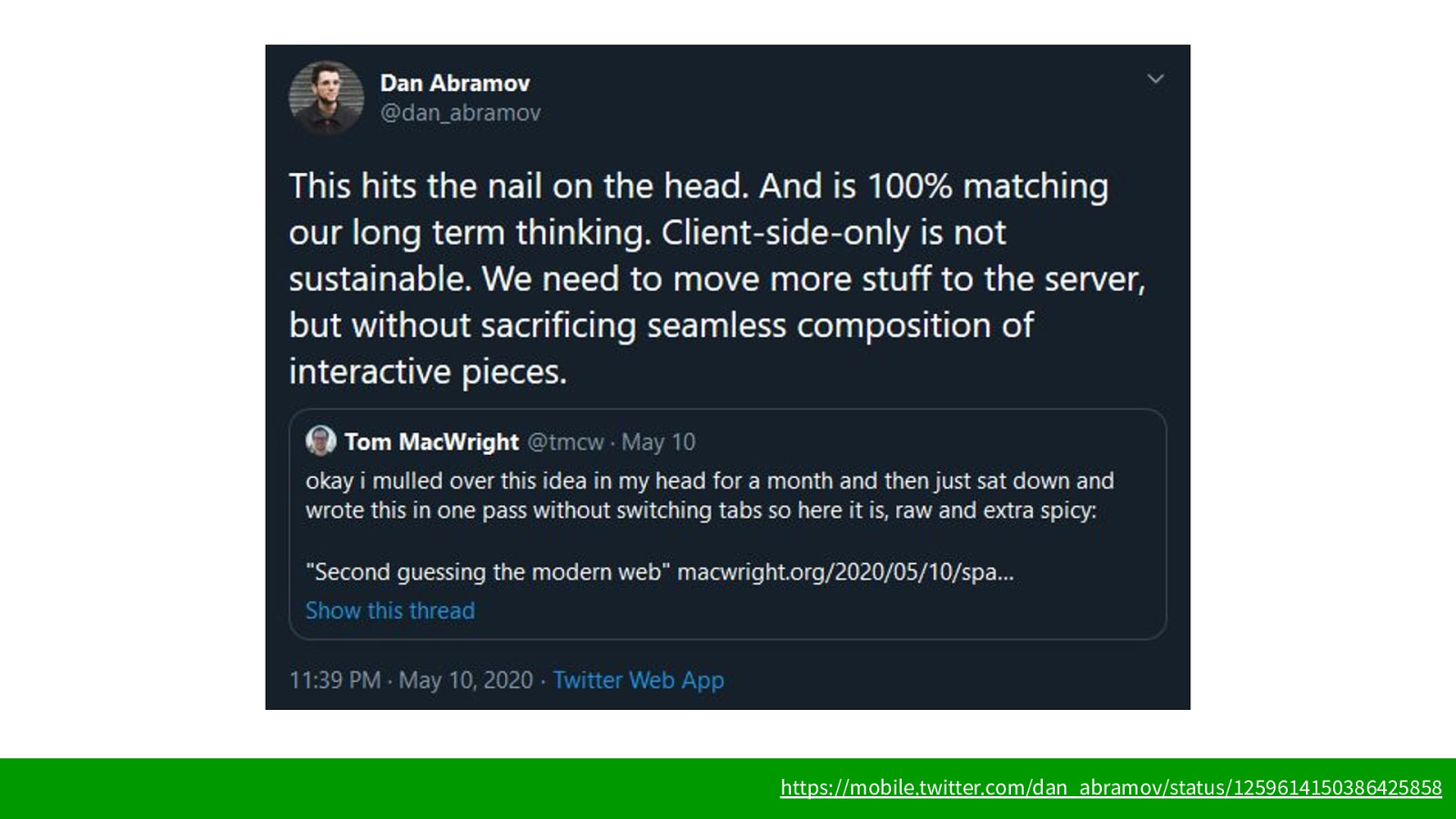

I guess most people here are involved professionally in software development. Most of the time that means software which runs on, or uses, the Internet, particularly the web. But we all know, just as well as the average punter, that the web isn’t the nirvana of user experience it’s cracked up to be. Why? How can we make it better? That’s what we’re going to explore in this talk.