The Death of Disk: Panel Session. Erik Riedel, EMC. HEC FSIO Workshop, August 2011. Top picture “floppy disks for breakfast” by Blude via flickr/cc; right picture by Austin Marshall via flickr/cc

A presentation by Erik Riedel at the HEC FSIO Workshop, August 2011, Arlington, VA, USA


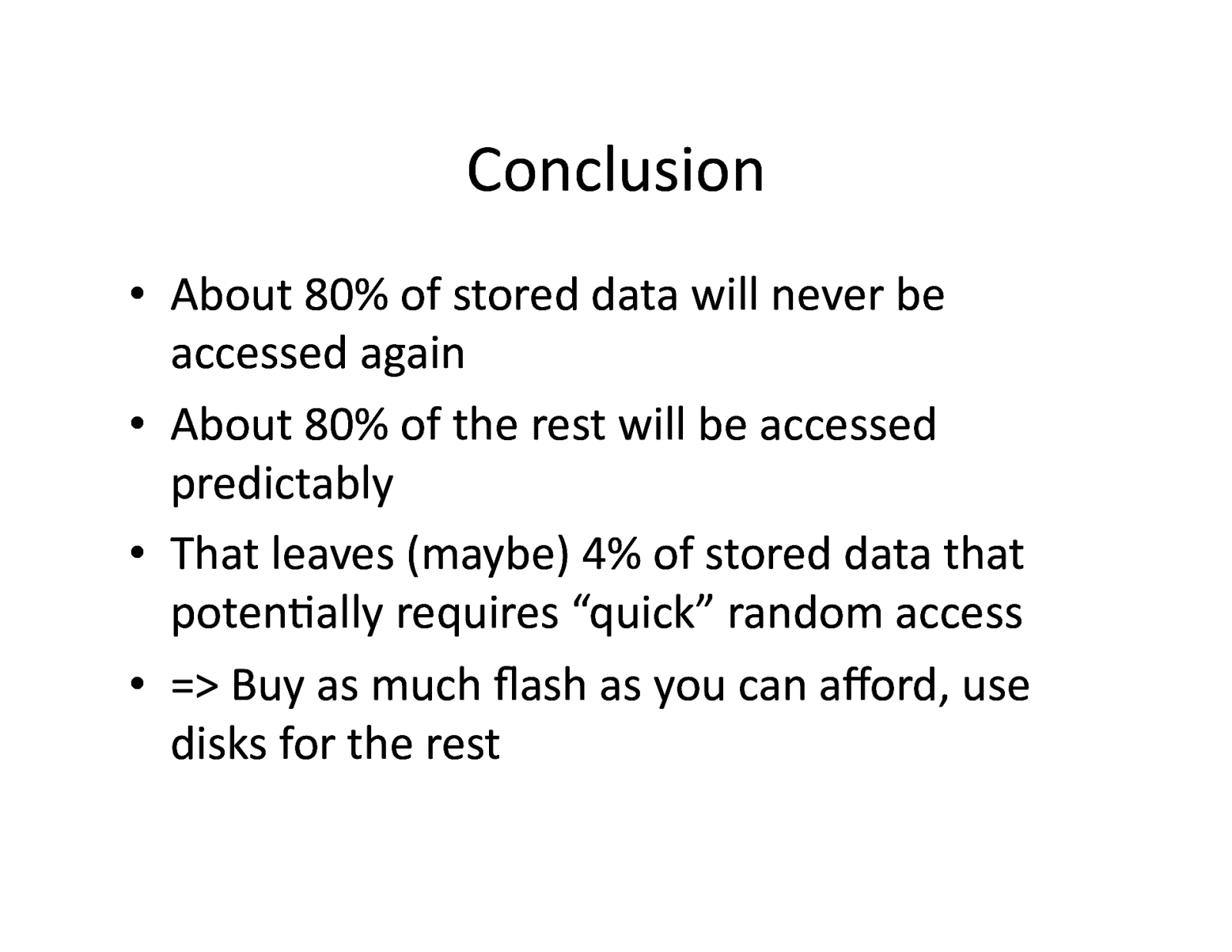

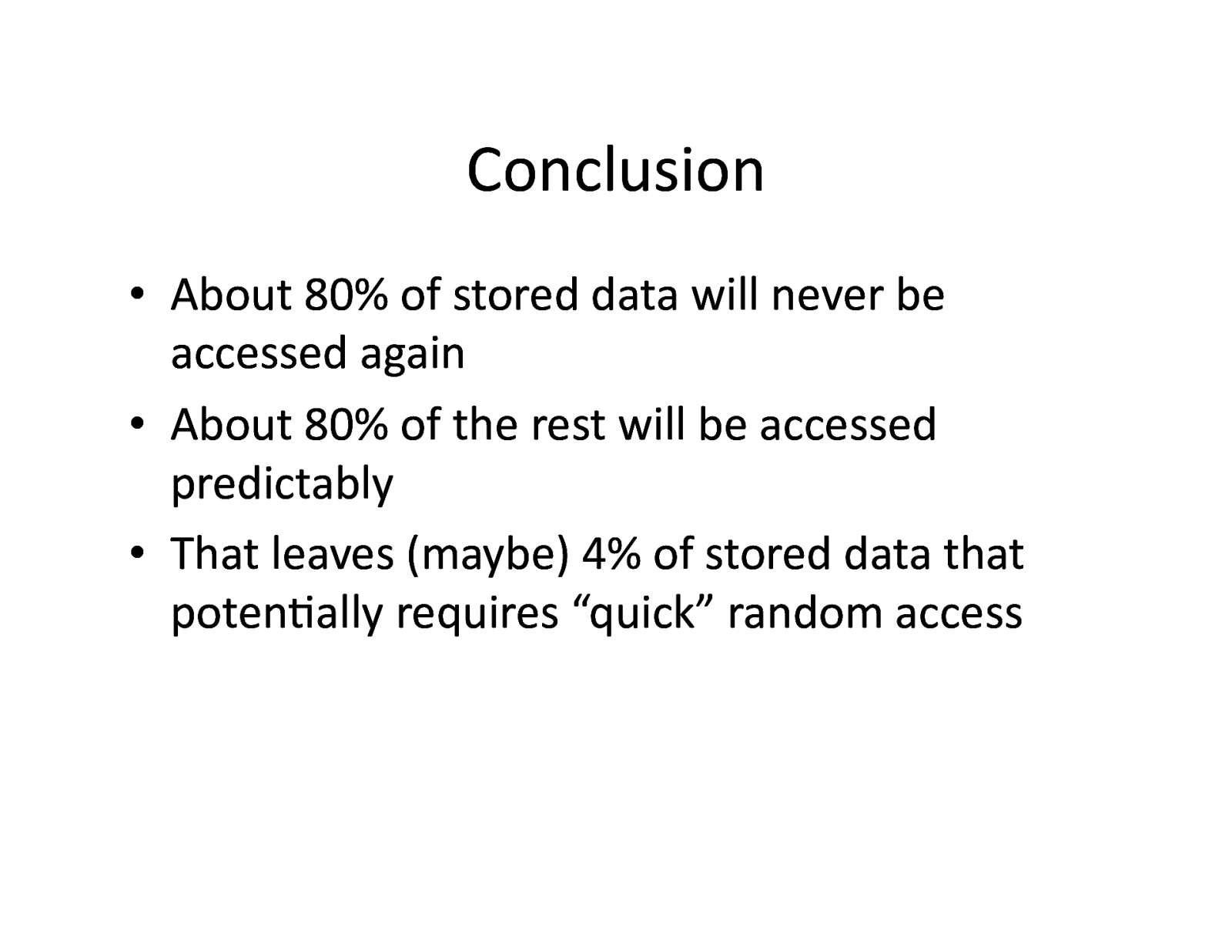

Conclusion • About 80% of stored data will never be accessed again • About 80% of the rest will be accessed predictably • That leaves (maybe) 4% of stored data that potentially requires “quick” random access • => Buy as much flash as you can afford, use disks for the rest
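The 4% figure is simply the two 80% filters applied one after the other, assuming (as the slide does) that the "predictable" share is measured against the 20% of data that does get accessed:

$$
f_{\text{quick random}} = (1 - 0.80) \times (1 - 0.80) = 0.20 \times 0.20 = 0.04 = 4\%
$$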

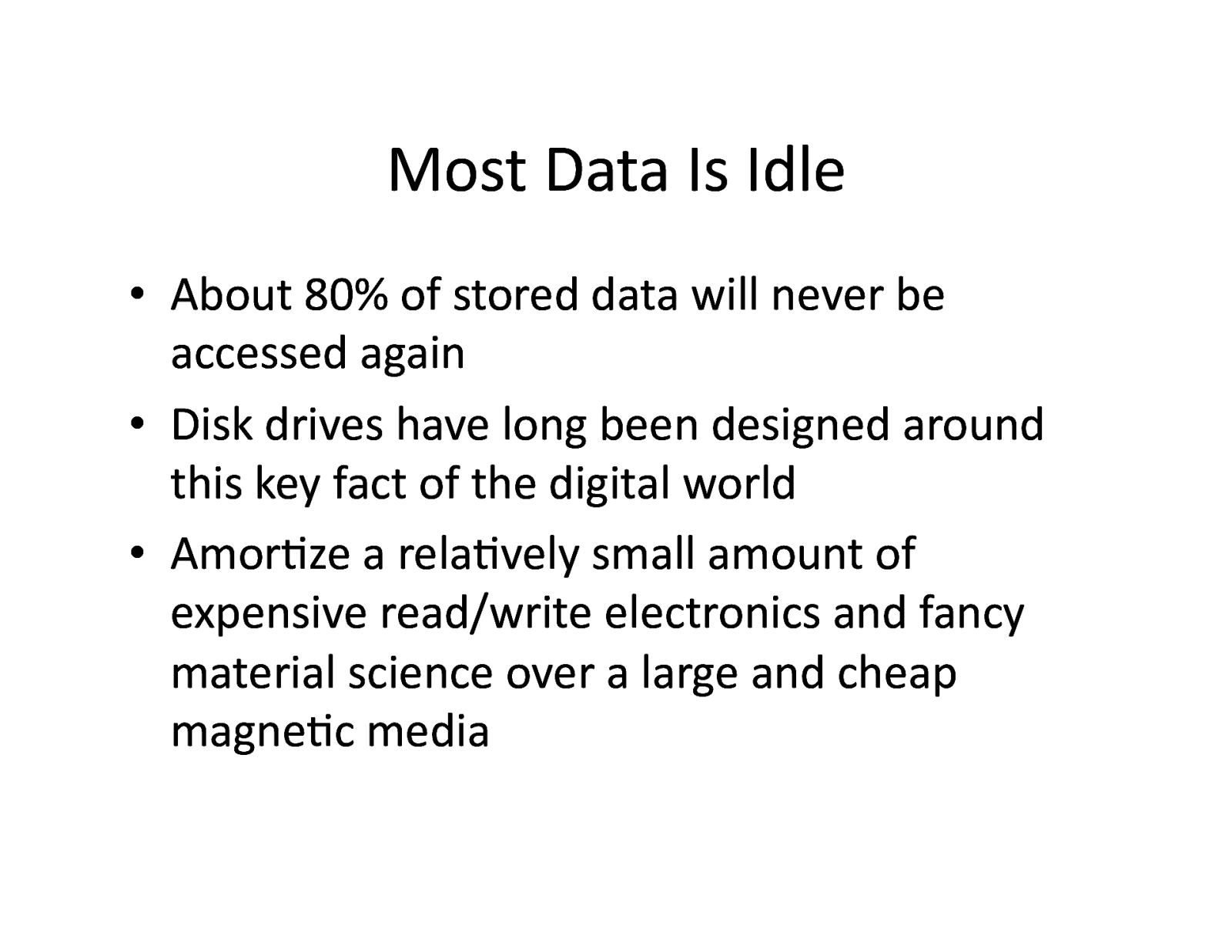

Most Data Is Idle • About 80% of stored data will never be accessed again • Disk drives have long been designed around this key fact of the digital world • Amortize a relatively small amount of expensive read/write electronics and fancy materials science over a large expanse of cheap magnetic media
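One way to write down the amortization argument, using notation introduced here only for illustration ($C_e$ for the fixed cost of heads, actuator, and electronics in one drive, $c_m$ for media cost per GB, $S$ for drive capacity in GB):

$$
\text{cost per GB} = \frac{C_e + c_m S}{S} = \frac{C_e}{S} + c_m
$$

As $S$ grows, the fixed term $C_e / S$ shrinks and the cost per GB approaches the media cost $c_m$. The trade works because most of those bytes are idle and rarely compete for the single set of heads.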

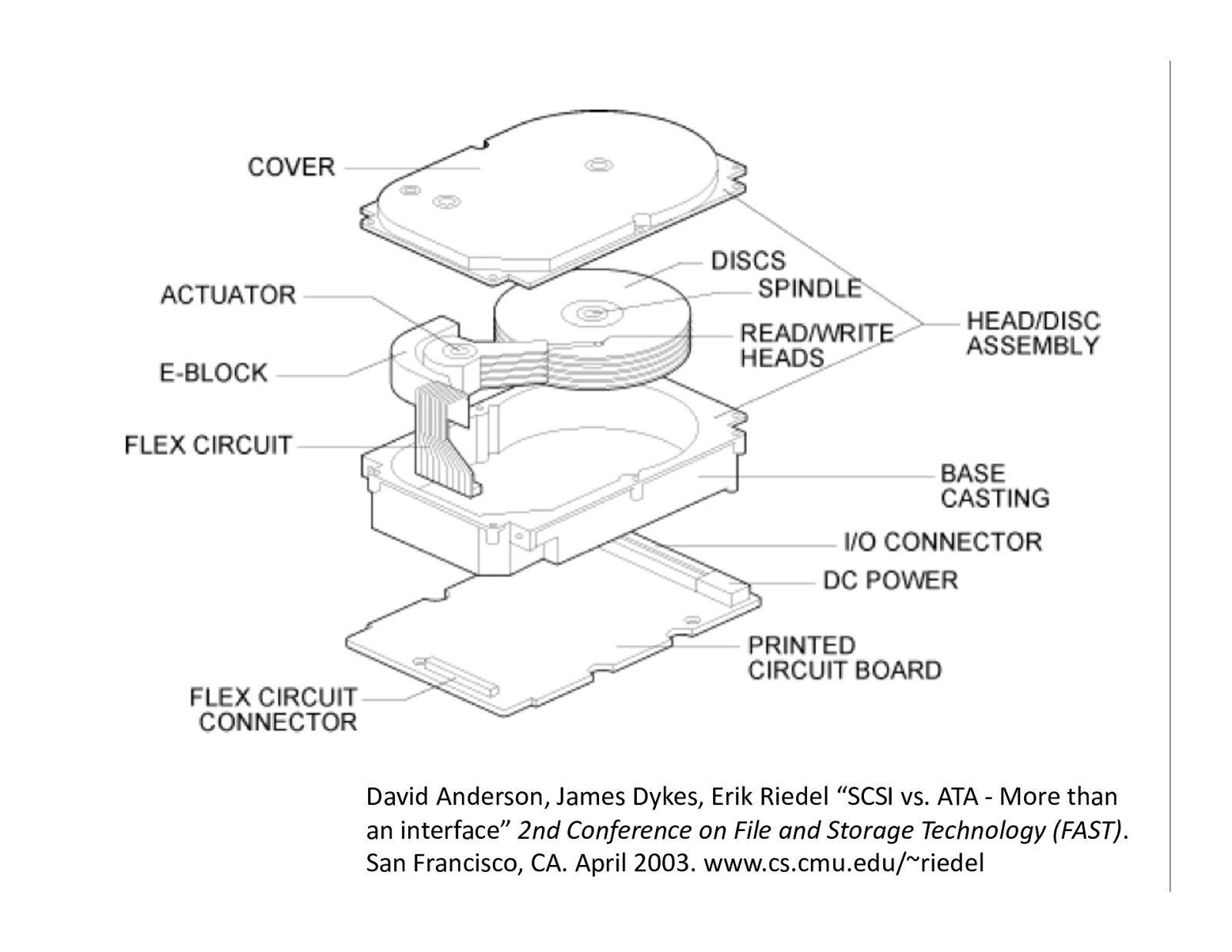

David Anderson, James Dykes, Erik Riedel, “SCSI vs. ATA - More than an Interface,” 2nd Conference on File and Storage Technologies (FAST), San Francisco, CA, April 2003. www.cs.cmu.edu/~riedel

Consumer Example (At My House) • Sid The Science Kid • Super Why! • Meet the Press • Dinosaur Train • Nova • Steelers Games • Baby Einstein

Most Data Access Is Predictable • Caching • Prefetching • Tiering • Staging • Hierarchical Storage Mgmt • All these tools have been known for years • We just need to open our toolbox and sharpen some of them to apply to today’s infrastructure/apps
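As a reminder of how small these classic tools can be, here is a minimal least-recently-used (LRU) cache in Python. The class name `LRUCache`, its `capacity` parameter, and the `load` callback standing in for a slow disk read are all illustrative assumptions, not taken from any particular storage product.

```python
from collections import OrderedDict


class LRUCache:
    """Minimal least-recently-used cache holding at most `capacity` entries."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._entries: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key, load):
        """Return the cached value for `key`, calling `load(key)` on a miss."""
        if key in self._entries:
            self.hits += 1
            self._entries.move_to_end(key)  # mark as most recently used
            return self._entries[key]
        self.misses += 1
        value = load(key)  # hypothetical slow path, e.g. a disk read
        self._entries[key] = value
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict least recently used
        return value


if __name__ == "__main__":
    cache = LRUCache(capacity=2)
    for block in [1, 2, 1, 3, 1, 2]:
        cache.get(block, lambda k: f"data-{k}")
    print(cache.hits, cache.misses)  # 2 4
```

An LRU cache only exploits recency; the point of the slide is that prefetching, tiering, and staging can go further when the access pattern is known in advance.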

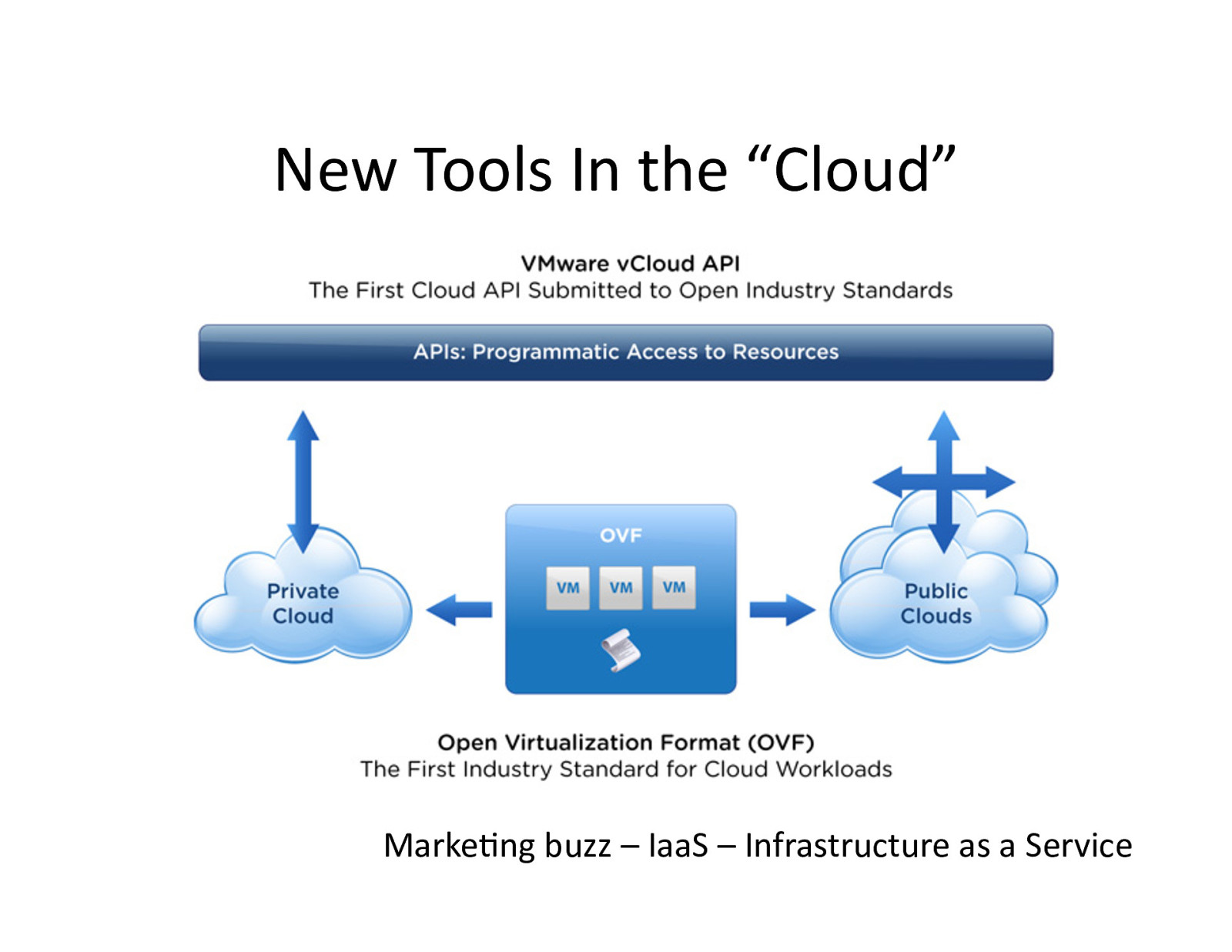

New Tools In the “Cloud” • Marketing buzz – IaaS – Infrastructure as a Service

New Tools In the “Cloud” (2) • Marketing buzz – PaaS – Platform as a Service

New Tools in the “Cloud” (3) • Key takeaways – both IaaS and PaaS are “closed loop” infrastructures – apps cannot be deployed except at the “direction” of the system – logging and monitoring are constant • need to get high utilization rates ($$) • need to send out bills ($$) • want high rates of “multi-tenancy” to be efficient ($$) – this leads to a significant level of “predictability”
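Because logging is constant in these closed-loop systems, even a crude model over the access log can say when data will be needed next. The sketch below is a hypothetical illustration, assuming roughly periodic access (like the weekly programs in the consumer example); the function name and the median-gap heuristic are choices made here, not part of any cloud platform.

```python
from statistics import median
from typing import Optional, Sequence


def predict_next_access(timestamps: Sequence[float]) -> Optional[float]:
    """Guess the next access time from past access times.

    Assumes accesses are roughly periodic (e.g. a weekly show or a nightly
    batch job). Returns None when there is too little history to guess.
    """
    if len(timestamps) < 3:
        return None
    ordered = sorted(timestamps)
    # Gaps between consecutive accesses; the median resists one-off outliers.
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    return ordered[-1] + median(gaps)


# Accesses on days 0, 7, 14, and 21.5 suggest the next one around day 28.5,
# so the data could be staged to flash (or its disk spun up) shortly before.
print(predict_next_access([0, 7, 14, 21.5]))
```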

Get Predictability Into Storage • Key challenge is how to translate what “the system” knows about apps and behaviors and “SLAs” into guidance for our system-level tools (caching, prefetching, tiering, etc.) • Secondary challenge is avoiding “surprises” – where performance or availability or durability don’t meet the SLAs (“quality of service”) • Good news is that the new infrastructures have some powerful new ways to help us
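A toy version of that translation step might map a per-application hint into tiering and prefetching guidance. Everything here is hypothetical: the `SlaHint` and `StorageGuidance` types, the three access-pattern labels, and the 5 ms flash threshold are assumptions chosen for illustration, not a real platform API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SlaHint:
    """Hypothetical per-application hint that a cloud platform might know."""
    max_latency_ms: float  # latency target promised in the SLA
    access_pattern: str    # "random", "sequential", or "scheduled"


@dataclass(frozen=True)
class StorageGuidance:
    tier: str       # "flash" or "disk"
    prefetch: bool  # stage data ahead of expected reads


def guidance_for(hint: SlaHint, flash_threshold_ms: float = 5.0) -> StorageGuidance:
    """Map an SLA hint to tiering/prefetch guidance (illustrative policy only)."""
    if hint.access_pattern == "random" and hint.max_latency_ms < flash_threshold_ms:
        # Tight latency on unpredictable reads: disk seeks take milliseconds,
        # so only flash can meet the target.
        return StorageGuidance(tier="flash", prefetch=False)
    if hint.access_pattern in ("sequential", "scheduled"):
        # Predictable access: keep it on disk and stage it ahead of time.
        return StorageGuidance(tier="disk", prefetch=True)
    # Loose latency target: cheap disk without special treatment.
    return StorageGuidance(tier="disk", prefetch=False)
```

The design choice mirrors the conclusion: flash is reserved for the unpredictable, latency-sensitive remainder, and everything predictable is served from disk with help from the existing tools.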

One Example New Tool – Stunning • “The amount of time the virtual machine is stunned is dependent on the amount of memory to be written to disk for such an operation, and the speed and responsiveness of the datastore’s backing storage.” – VMware KnowledgeBase http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1013163 picture by Yamashita Yohei via flickr/cc
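A first-order reading of the quoted KB text is that stun time scales with memory size divided by the datastore's write bandwidth. The sketch below assumes exactly that and ignores the "responsiveness" (latency) part; the 16 GiB VM and the 100 MB/s and 500 MB/s bandwidths are hypothetical numbers for illustration only.

```python
GIB = 1024 ** 3  # bytes in a gibibyte
MB = 1000 ** 2   # bytes in a (decimal) megabyte


def estimate_stun_seconds(memory_bytes: int, write_bytes_per_second: float) -> float:
    """Rough stun time: memory to write divided by sustained write bandwidth.

    Ignores latency and queueing effects that the KB article also mentions.
    """
    if write_bytes_per_second <= 0:
        raise ValueError("write bandwidth must be positive")
    return memory_bytes / write_bytes_per_second


# Hypothetical datastores: the same 16 GiB VM on slower vs. faster storage.
for label, bandwidth in [("disk-like", 100 * MB), ("flash-like", 500 * MB)]:
    print(label, round(estimate_stun_seconds(16 * GIB, bandwidth), 1))
# disk-like 171.8
# flash-like 34.4
```

The useful property for storage is that the size of the write is known before the operation starts, so the burst is predictable even if its timing is not.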

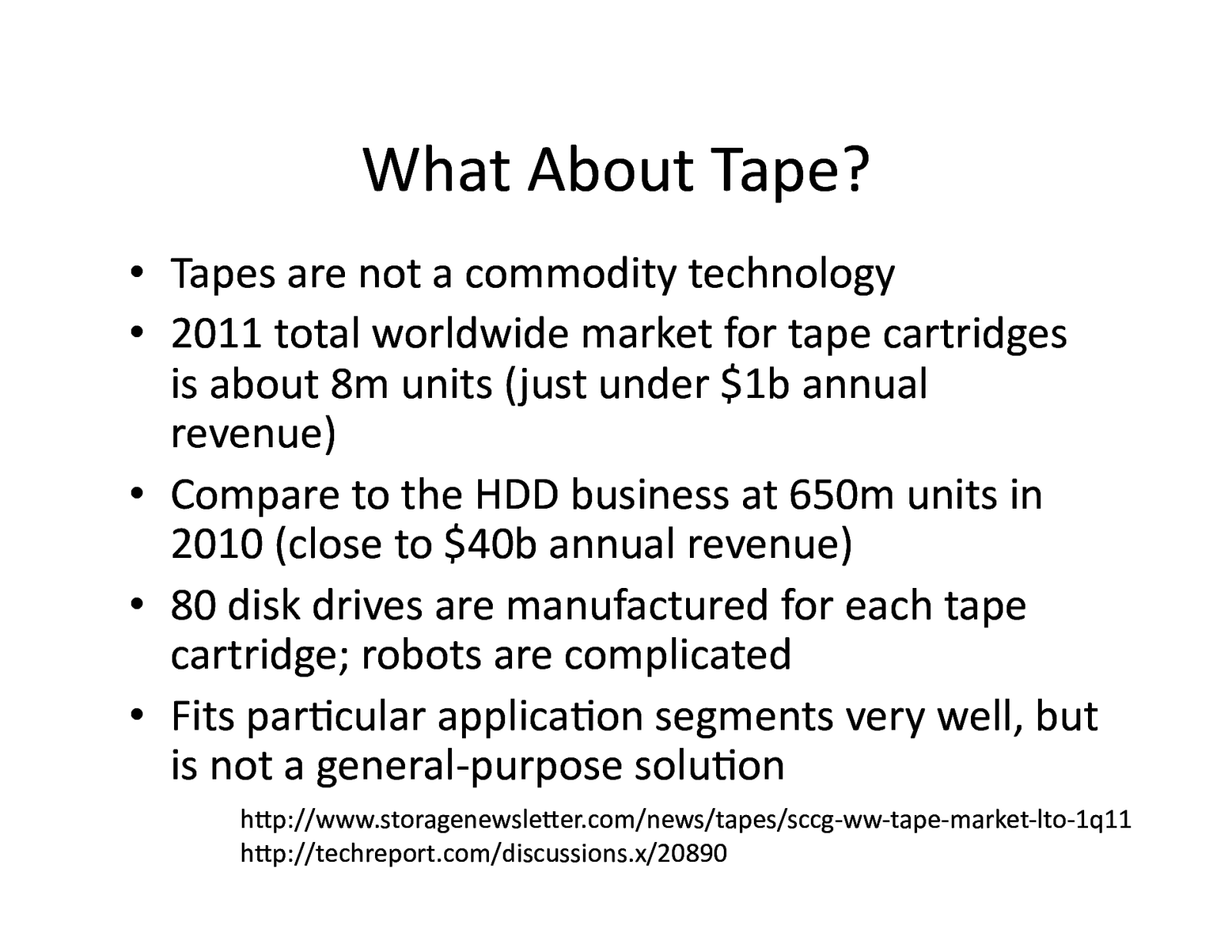

What About Tape? pictures by Gill Wildman via flickr/cc

What About Tape? • Tapes are not a commodity technology • 2011 total worldwide market for tape cartridges is about 8m units (just under $1b annual revenue) • Compare to the HDD business at 650m units in 2010 (close to $40b annual revenue) • 80 disk drives are manufactured for each tape cartridge; robots are complicated • Fits particular application segments very well, but is not a general-purpose solution http://www.storagenewsletter.com/news/tapes/sccg-ww-tape-market-lto-1q11 http://techreport.com/discussions.x/20890
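The drives-per-cartridge figure follows directly from the two unit counts on this slide (note that, like the slide, it divides a 2010 HDD total by a 2011 tape estimate):

$$
\frac{650\,\text{M drives}}{8\,\text{M cartridges}} = 81.25 \approx 80 \ \text{drives per cartridge}
$$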

Conclusion • About 80% of stored data will never be accessed again • About 80% of the rest will be accessed predictably • That leaves (maybe) 4% of stored data that potentially requires “quick” random access

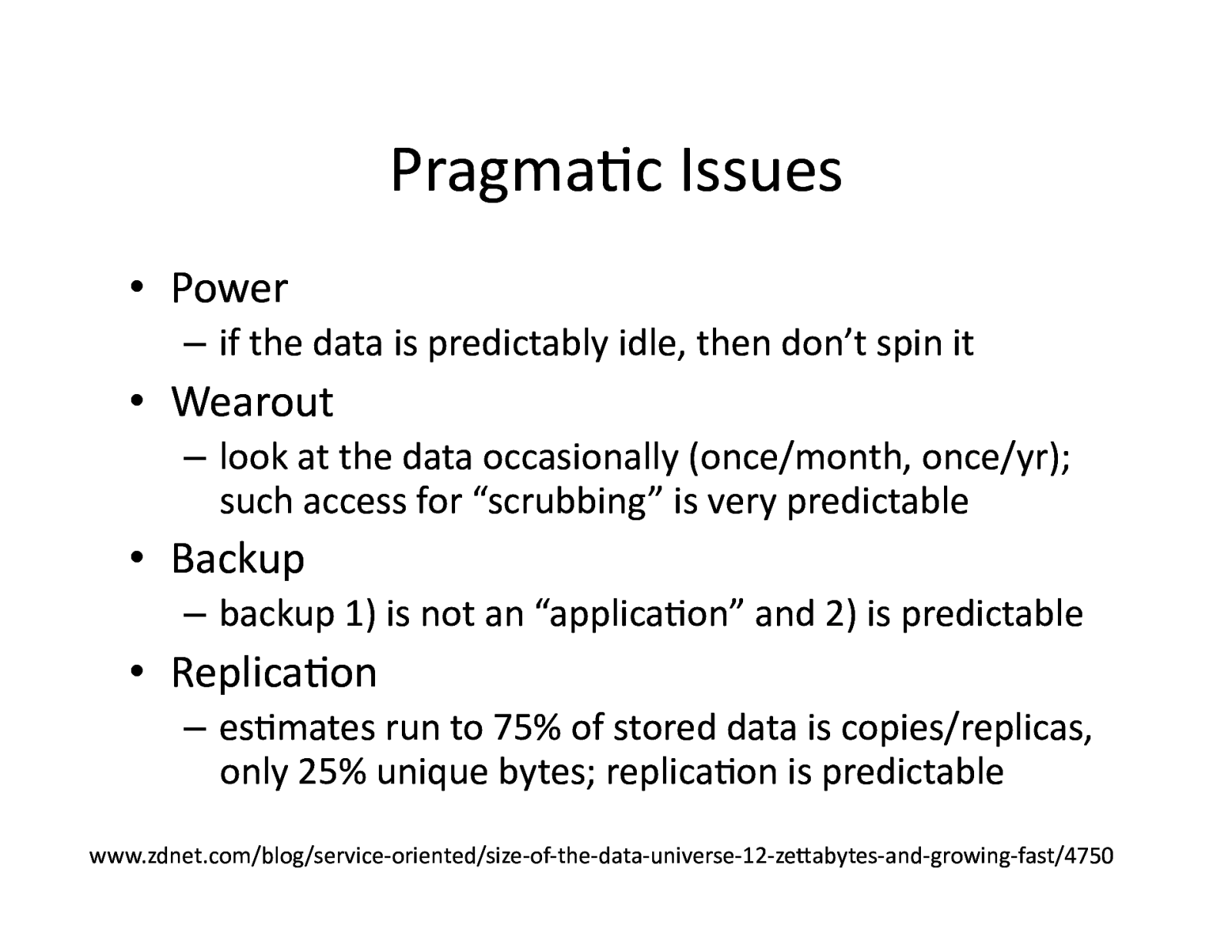

Pragmatic Issues • Power – if the data is predictably idle, then don’t spin it • Wearout – look at the data occasionally (once/month, once/yr); such access for “scrubbing” is very predictable • Backup – backup 1) is not an “application” and 2) is predictable • Replication – estimates suggest up to 75% of stored data is copies/replicas, with only 25% unique bytes; replication is predictable www.zdnet.com/blog/service-oriented/size-of-the-data-universe-12-zettabytes-and-growing-fast/4750
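To make the scrubbing point concrete: if each volume gets a fixed slot in a monthly cycle, the set of drives that must spin up on any given day is known in advance, and every other drive can stay spun down. This is a minimal round-robin sketch; the 30-day period, the integer volume IDs, and the function names are assumptions made here for illustration.

```python
from typing import Iterable, List


def scrub_day(volume_id: int, period_days: int = 30) -> int:
    """Fixed slot for a volume in the scrub cycle (0 .. period_days - 1)."""
    return volume_id % period_days


def volumes_due(day: int, volume_ids: Iterable[int], period_days: int = 30) -> List[int]:
    """Volumes to scrub (and therefore spin up) on a given day.

    The same list recurs every `period_days`, so the load is predictable.
    """
    slot = day % period_days
    return [v for v in volume_ids if scrub_day(v, period_days) == slot]


# With 90 volumes and a 30-day cycle, exactly 3 volumes are scrubbed per day.
print(volumes_due(0, range(90)))   # [0, 30, 60]
print(volumes_due(31, range(90)))  # [1, 31, 61]
```

Spreading the slots evenly keeps the daily scrub load flat; a real system would also weigh volume sizes and drive health, which this sketch ignores.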

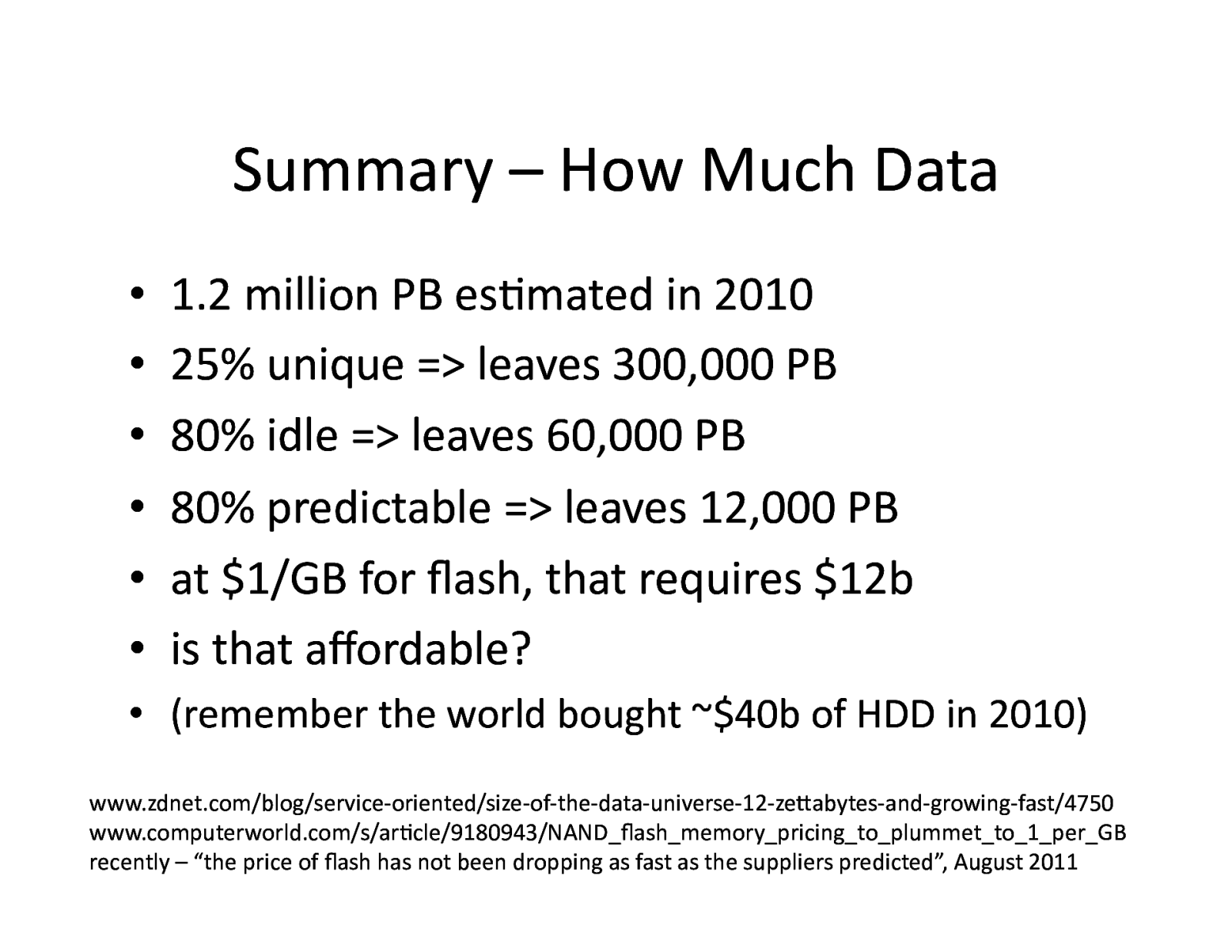

Summary – How Much Data • 1.2 million PB estimated in 2010 • 25% unique => leaves 300,000 PB • 80% idle => leaves 60,000 PB • 80% predictable => leaves 12,000 PB • at $1/GB for flash, that requires $12b • is that affordable? • (remember the world bought ~$40b of HDD in 2010) www.zdnet.com/blog/service-oriented/size-of-the-data-universe-12-zettabytes-and-growing-fast/4750 www.computerworld.com/s/article/9180943/NAND_flash_memory_pricing_to_plummet_to_1_per_GB recently – “the price of flash has not been dropping as fast as the suppliers predicted”, August 2011
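The summary is a chain of multiplications, so it is easy to rerun with your own numbers. This sketch reproduces the slide's funnel; the function name and parameter names are made up here, the defaults are the slide's estimates, and it assumes decimal units (1 PB = 10^6 GB).

```python
from typing import Tuple


def flash_budget(total_pb: float,
                 unique_fraction: float = 0.25,
                 idle_fraction: float = 0.80,
                 predictable_fraction: float = 0.80,
                 dollars_per_gb: float = 1.0) -> Tuple[float, float]:
    """Return (PB needing quick random access, cost in dollars to hold it on flash)."""
    unique_pb = total_pb * unique_fraction            # drop copies/replicas
    active_pb = unique_pb * (1 - idle_fraction)       # drop never-accessed data
    random_pb = active_pb * (1 - predictable_fraction)  # drop predictable access
    cost = random_pb * 1_000_000 * dollars_per_gb     # 1 PB = 10^6 GB (decimal)
    return random_pb, cost


pb, cost = flash_budget(1_200_000)
print(f"{pb:,.0f} PB of flash, about ${cost / 1e9:.1f}b")
# 12,000 PB of flash, about $12.0b
```

Changing a single default, for example `dollars_per_gb=0.5`, shows how sensitive the answer is to flash pricing, which the slide's August 2011 note flags as uncertain.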

Conclusion • About 80% of stored data will never be accessed again • About 80% of the rest will be accessed predictably • That leaves (maybe) 4% of stored data that potentially requires “quick” random access • => Buy as much flash as you can afford, use disks for the rest www.businessinsider.com/736-of-all-statistics-are-made-up-2010-2 n.b. 73.6% of all statistics are made up; do the calculations for your own environments, as your mileage may vary

• In the battle of rust vs. silicon, both will survive (rust picture by Jos Faber via flickr/cc; silicon picture from “Chip bug vs chip bug” by Windell Oskay via flickr/cc)

A brief word from my sponsors: http://www.emc.com/storage/atmos/atmos.htm