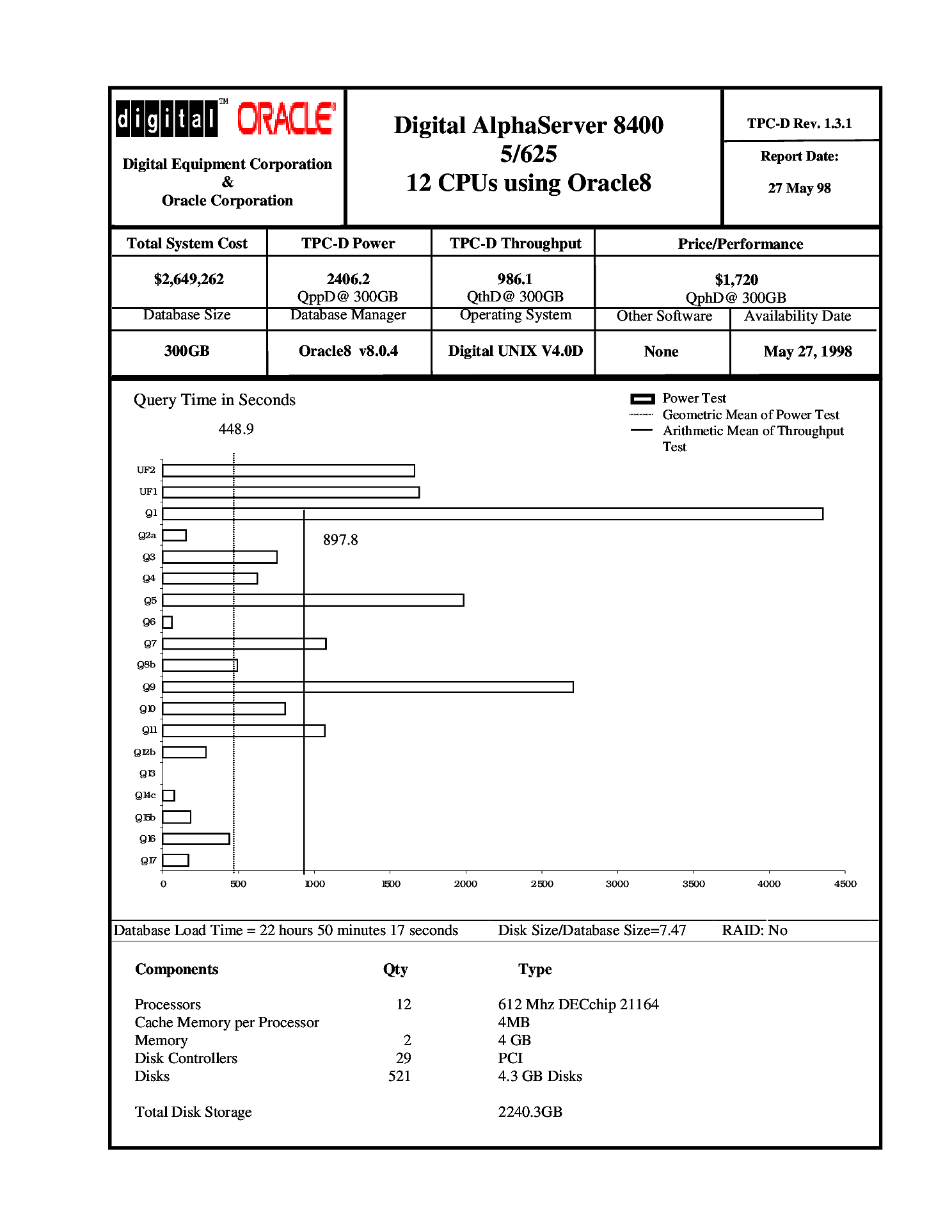

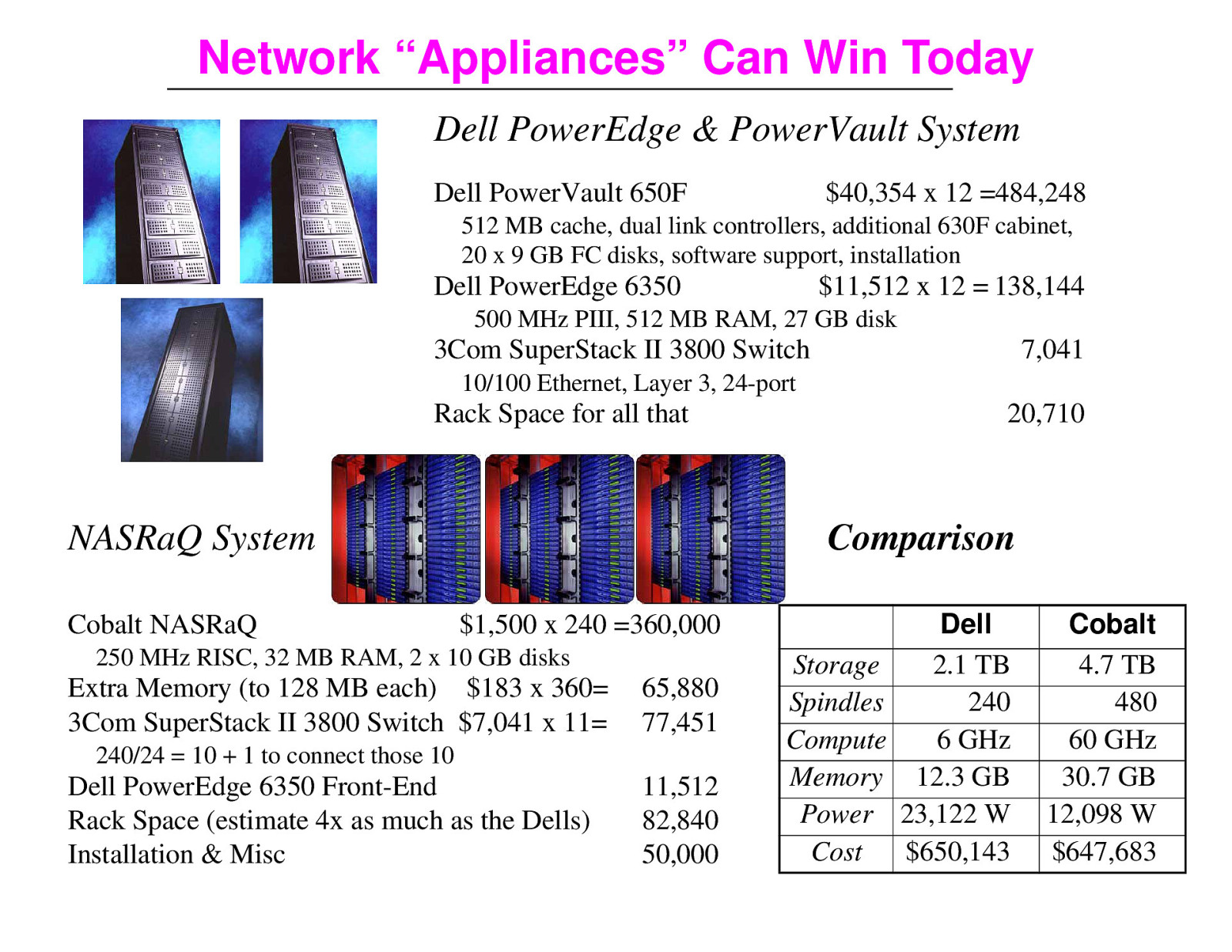

Network “Appliances” Can Win Today

Dell PowerEdge & PowerVault System

| Item | Configuration | Unit price x quantity | Cost |
|---|---|---|---|
| Dell PowerVault 650F | 512 MB cache, dual link controllers, additional 630F cabinet, 20 x 9 GB FC disks, software support, installation | $40,354 x 12 | $484,248 |
| Dell PowerEdge 6350 | 500 MHz PIII, 512 MB RAM, 27 GB disk | $11,512 x 12 | $138,144 |
| 3Com SuperStack II 3800 Switch | 10/100 Ethernet, Layer 3, 24-port | | $7,041 |
| Rack Space for all that | | | $20,710 |

Cobalt NASRaQ System

| Item | Configuration | Unit price x quantity | Cost |
|---|---|---|---|
| Cobalt NASRaQ | 250 MHz RISC, 32 MB RAM, 2 x 10 GB disks | $1,500 x 240 | $360,000 |
| Extra Memory (to 128 MB each) | | $183 x 360 | $65,880 |
| 3Com SuperStack II 3800 Switch | 240/24 = 10 + 1 to connect those 10 | $7,041 x 11 | $77,451 |
| Dell PowerEdge 6350 Front-End | | | $11,512 |
| Rack Space (estimate 4x as much as the Dells) | | | $82,840 |
| Installation & Misc | | | $50,000 |

Comparison

| System | Storage | Spindles | Compute | Memory | Power | Cost |
|---|---|---|---|---|---|---|
| Dell | 2.1 TB | 240 | 6 GHz | 12.3 GB | 23,122 W | $650,143 |
| Cobalt | 4.7 TB | 480 | 60 GHz | 30.7 GB | 12,098 W | $647,683 |
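
The arithmetic behind these figures can be re-checked with a short script. The following is a minimal sketch in Python, not part of the original slide: the line items are transcribed from the slide, while the helper names (`total_cost`, `switches_needed`) are hypothetical. The memory totals assume the Dell figure counts both PowerEdge RAM and PowerVault cache, a reading that reproduces the slide's 12.3 GB but is not stated on it.

```python
# Line items transcribed from the slide: (description, unit price in USD, quantity).
dell_items = [
    ("Dell PowerVault 650F", 40_354, 12),
    ("Dell PowerEdge 6350", 11_512, 12),
    ("3Com SuperStack II 3800 Switch", 7_041, 1),
    ("Rack space", 20_710, 1),
]
cobalt_items = [
    ("Cobalt NASRaQ", 1_500, 240),
    ("Extra memory (to 128 MB each)", 183, 360),
    ("3Com SuperStack II 3800 Switch", 7_041, 11),
    ("Dell PowerEdge 6350 front-end", 11_512, 1),
    ("Rack space (4x the Dell estimate)", 82_840, 1),
    ("Installation & misc", 50_000, 1),
]


def total_cost(items):
    """Sum unit price times quantity over all line items."""
    return sum(price * qty for _, price, qty in items)


def switches_needed(nodes, ports_per_switch=24):
    """Edge switches to give every node a port, plus one switch to connect them.

    This mirrors the slide's "240/24 = 10 + 1" note and, like the slide,
    ignores the ports consumed by uplinks.
    """
    edge = -(-nodes // ports_per_switch)  # ceiling division
    return edge + 1


dell_total = total_cost(dell_items)
cobalt_total = total_cost(cobalt_items)

# Raw capacity figures derived from the per-unit configurations.
dell_storage_gb = 12 * 20 * 9        # 12 PowerVaults x 20 disks x 9 GB
cobalt_storage_gb = 240 * 2 * 10     # 240 NASRaQs x 2 disks x 10 GB
dell_compute_mhz = 12 * 500          # 12 PowerEdges x 500 MHz
cobalt_compute_mhz = 240 * 250       # 240 NASRaQs x 250 MHz
dell_memory_mb = 12 * (512 + 512)    # assumption: server RAM plus PowerVault cache
cobalt_memory_mb = 240 * 128         # after the upgrade to 128 MB each

print(f"Dell total:   ${dell_total:,}")
print(f"Cobalt total: ${cobalt_total:,}")
print(f"Switches for 240 nodes: {switches_needed(240)}")
print(f"Storage (GB): Dell {dell_storage_gb}, Cobalt {cobalt_storage_gb}")
print(f"Compute (MHz): Dell {dell_compute_mhz}, Cobalt {cobalt_compute_mhz}")
print(f"Memory (MB): Dell {dell_memory_mb}, Cobalt {cobalt_memory_mb}")
```

The two totals agree with the Cost column, and the storage figures match the table when gigabytes are divided by 1,024 (2,160 GB is about 2.1 TB; 4,800 GB is about 4.7 TB). The Power column cannot be derived from anything on the slide, so it is left out of the sketch.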