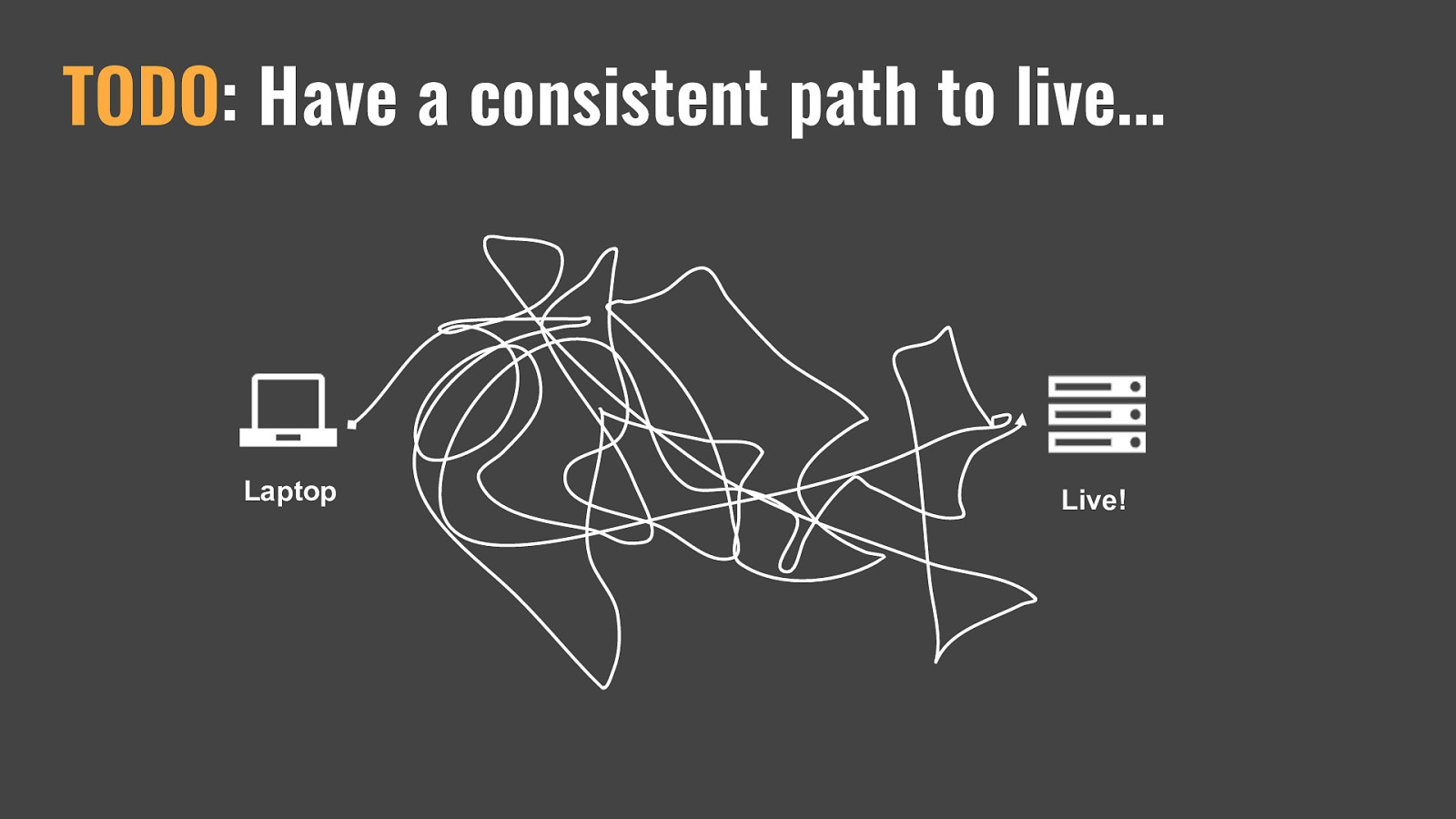

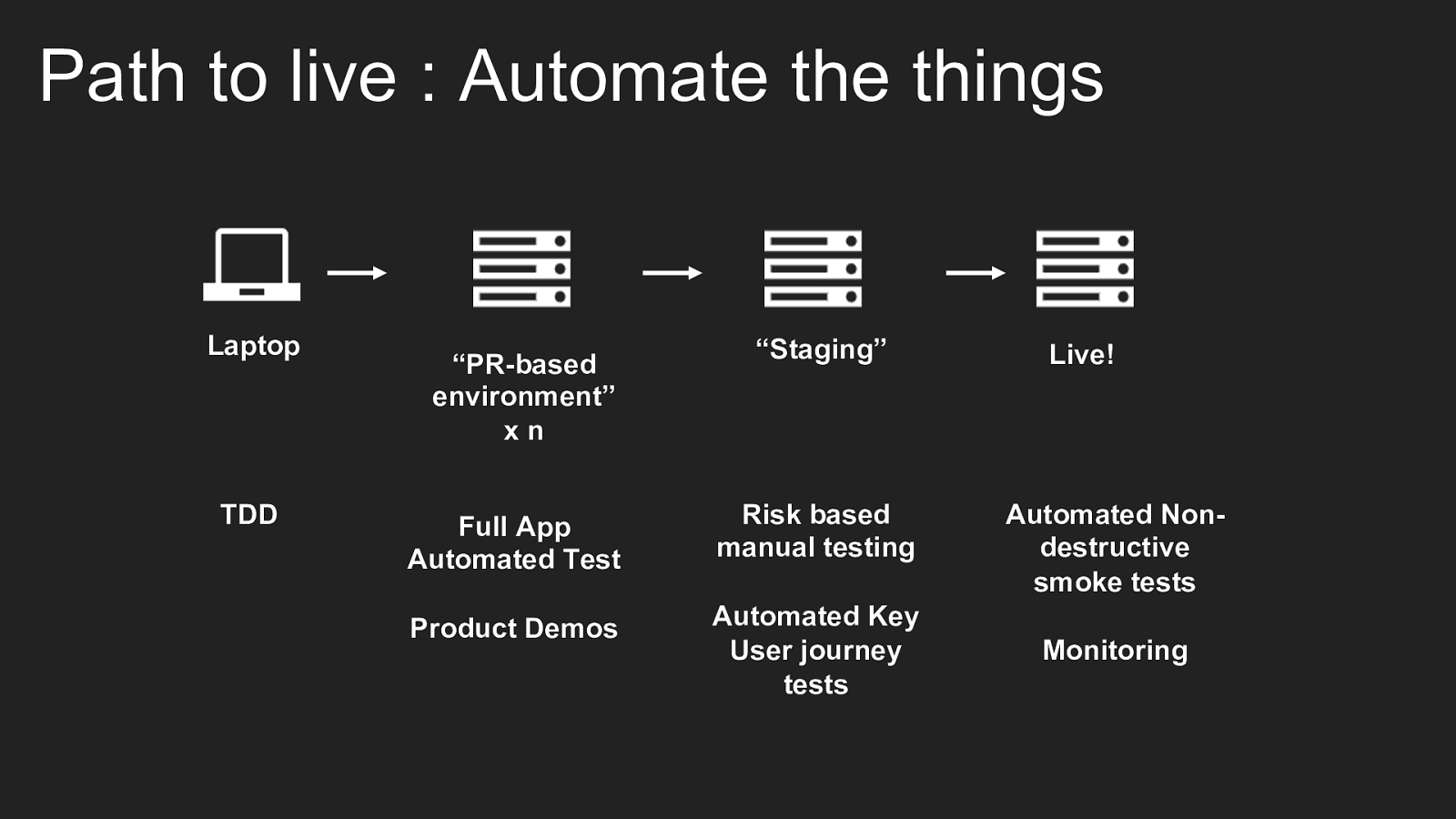

This is, incidentally, where we were about four years ago. And, because the digital gods love to laugh at us, around that point core components we had chosen at the very start of the work were reaching end of life. For us, that meant a reliance on Ubuntu 14.04, which was losing security support. We also had a deployment system built on hand-rolled Docker orchestration with Salt, Ansible, Fabric, duct tape, string and optimism. How do you (or rather, how did we) speed up delivery and get out of a situation like this? Well, never underestimate the power of a good crisis to instigate change.