Scaling Down While Scaling Up – Design Choices That Increase Efficiency & Performance

A presentation at the OCP Regional Summit in April 2024 in Lisbon, Portugal, by Erik Riedel


EMEA · Deployments · Sustainability
Erik Riedel, PhD, Chief Engineering Officer, Flax Computing
Hesham Ghoneim, PhD, Strategic Product Development, Sims Lifecycle Services

Abstract
This talk presents a detailed design study of several OCP hyperscale systems vs. previous server & rack designs, including the trade-offs & choices that reduce complexity, reduce costs, increase performance, and increase scalability. We will also quantify a clear side effect of these choices: a reduced carbon footprint for data center deployments & operations. These carbon savings arise from both operational & scope 3 benefits, and they continue to accrue the longer systems are productively utilized. Collaborative, “out of the box” design approaches that cross traditional industry silos (across hardware, software, & operations) have enabled innovative designs that have proven their value and influence across the industry. Because these designs were pursued with simplicity and efficiency in mind, they also inherently consume fewer resources in energy, materials, and operational effort, paying dividends in both the short run and the long run.

Expected Learnings
Attendees will learn the details of several OCP server, storage & GPU designs that rethink the traditional data center server to provide clear benefits: reduced complexity, reduced costs, increased performance, & increased efficiency at scale. By reviewing a small subset of open hardware designs from the 10-year history of the Open Compute Project, we will outline the engineering trade-offs that were made and the resulting gains in efficiency & scalable deployment. Attendees will also learn how these optimizations directly reduce carbon footprints (both energy & materials consumption) when combined with modern data center infrastructure, deployment, & software architectures. Creative hardware designs, software architectures, & operational approaches, developed in wide industry collaborations where traditionally siloed teams and companies worked together, have turned these individual evolutions into a true revolution.

Social (280 characters)
Scaling Down While Scaling Up – learn about OCP designs that reduce complexity, costs & carbon footprints, while increasing performance & efficiency. By innovating across industry silos, these collaborations have turned a series of design evolutions into a true revolution.

Outline
• Intro – carbon footprint of computing
• Design Footprints – compare OCP to traditional server designs
• Materials Footprints – material + carbon impacts
• Materials Reuse / Recycle – extend life, reduce carbon impacts
• Server Density – can we improve further?
• Workload / Carbon – example: memory density
• Conclusions + Call To Action

natural resources • carbon footprint • demand growth

design footprints

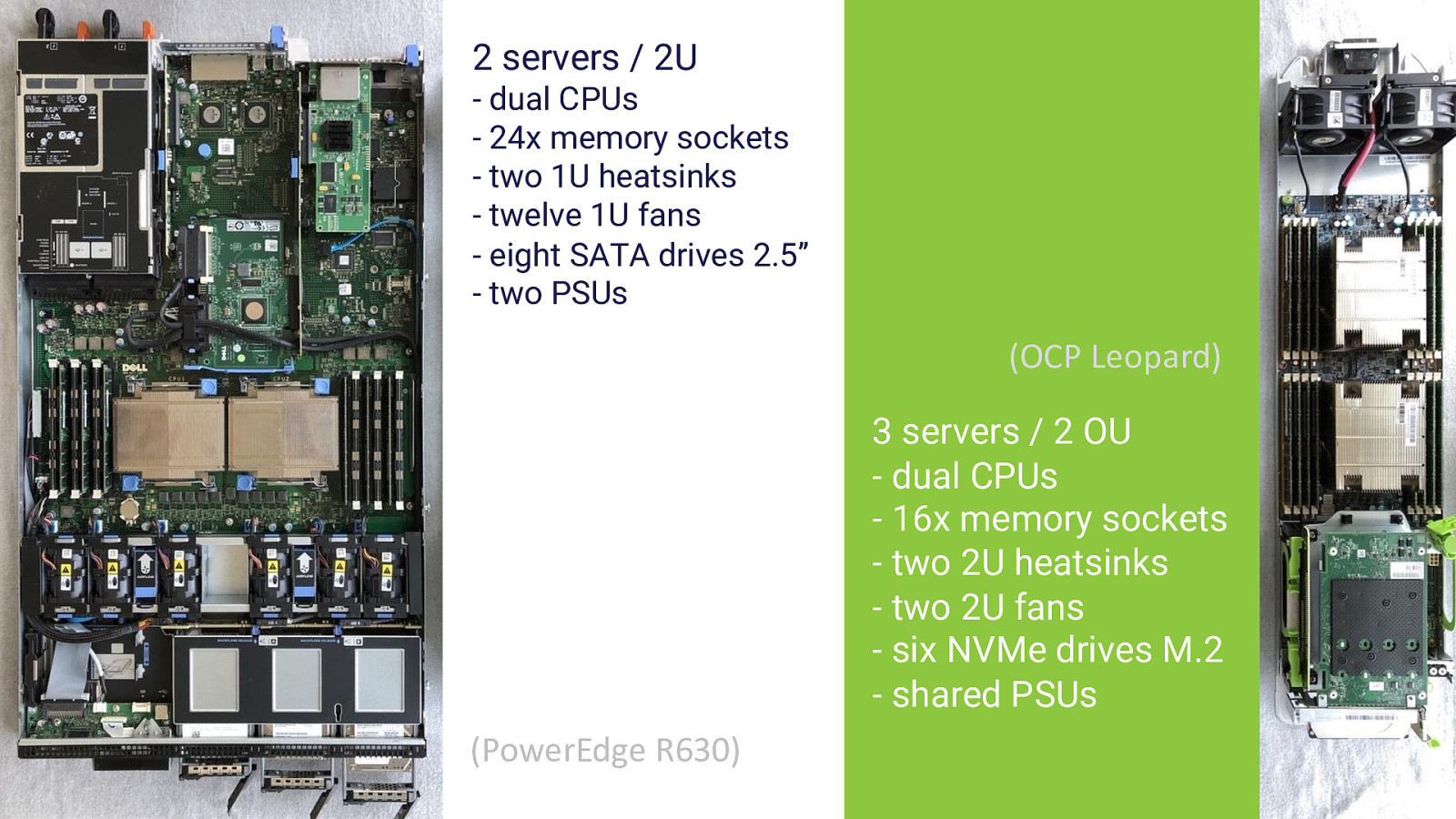

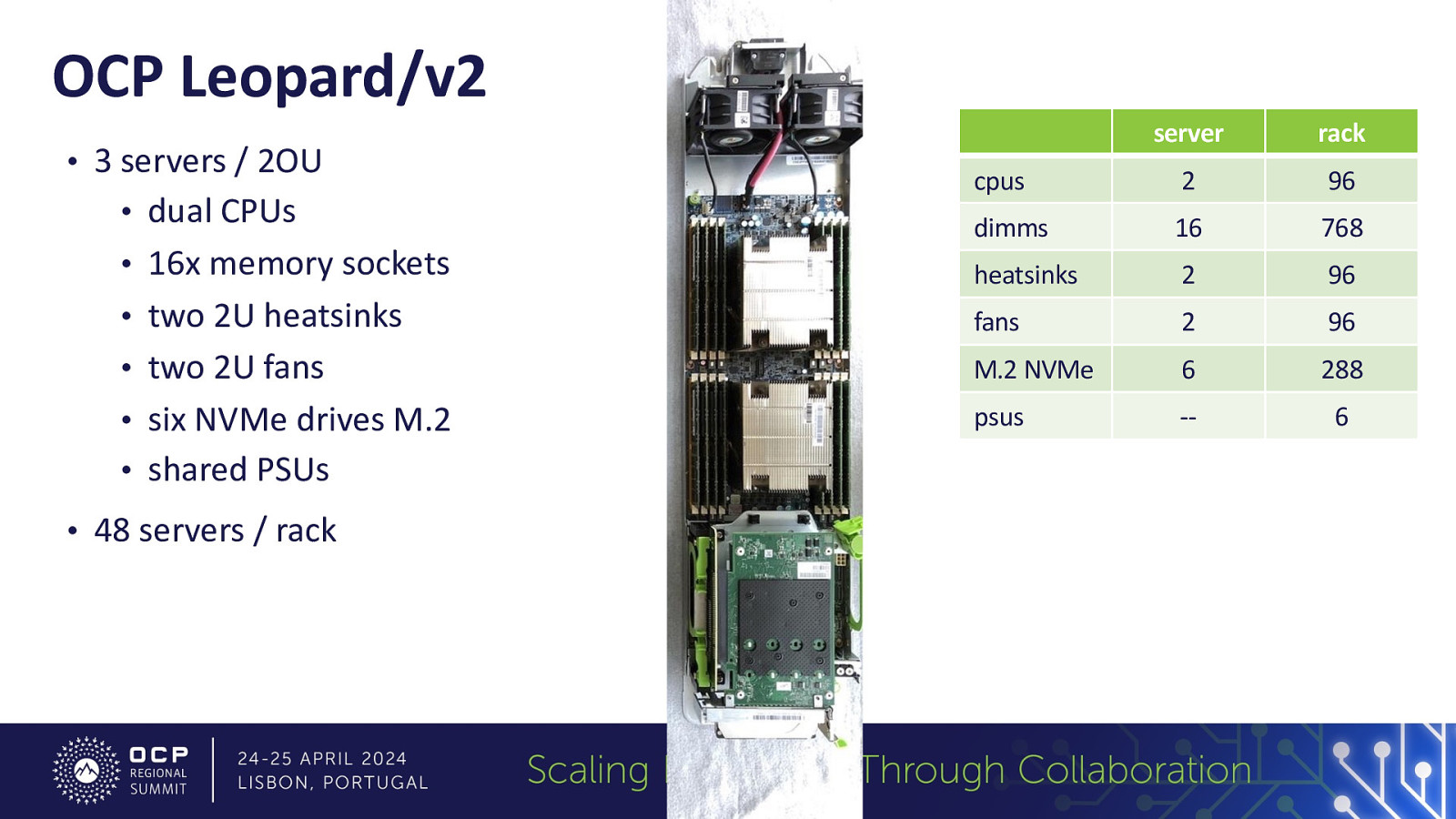

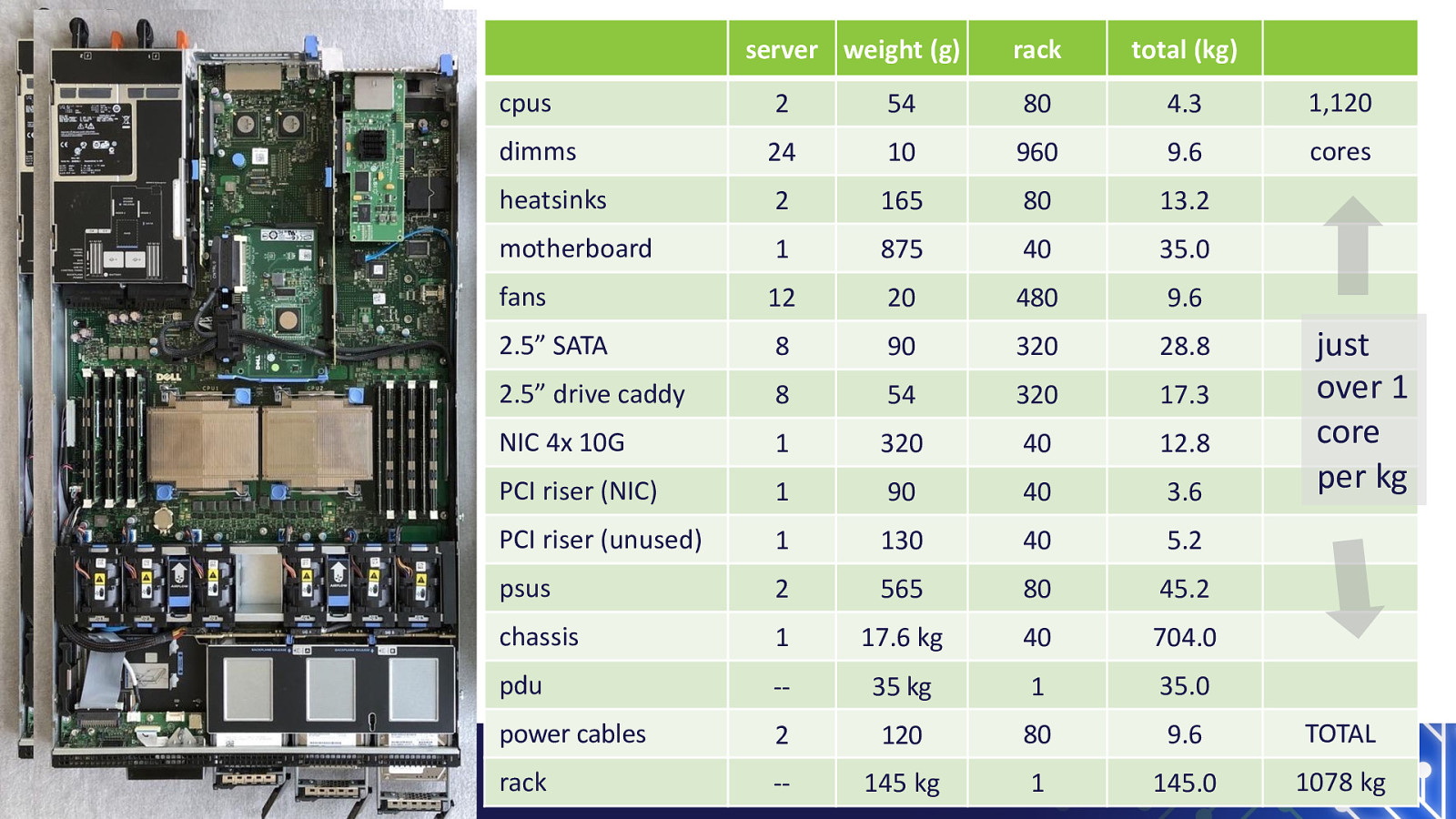

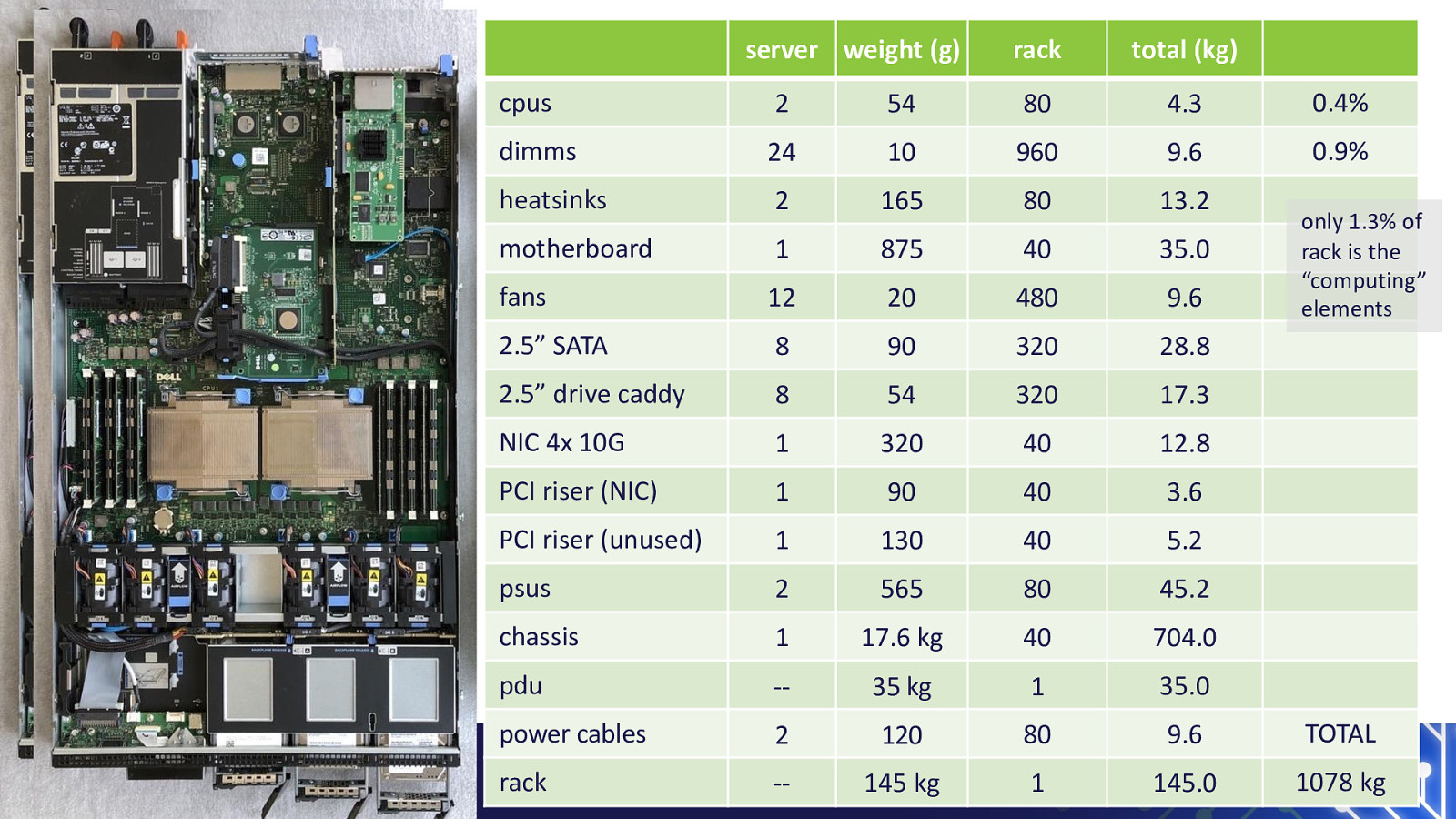

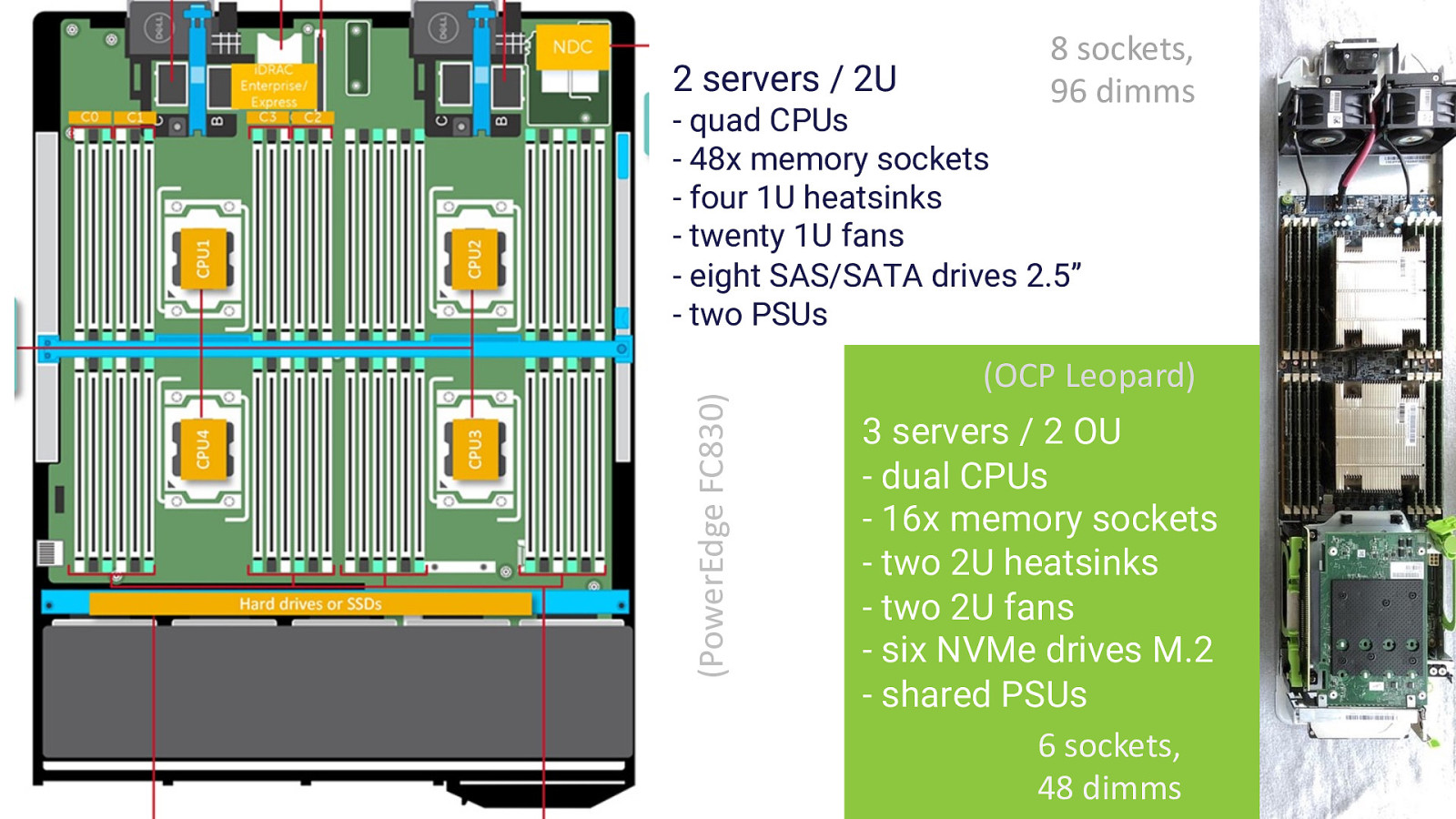

Dell PowerEdge R630
• 2 servers / 2U
• dual CPUs
• 24x memory sockets
• two 1U heatsinks
• twelve 1U fans
• eight 2.5” SATA drives
• two PSUs

OCP Leopard
• 3 servers / 2OU
• dual CPUs
• 16x memory sockets
• two 2U heatsinks
• two 2U fans
• six M.2 NVMe drives
• shared PSUs

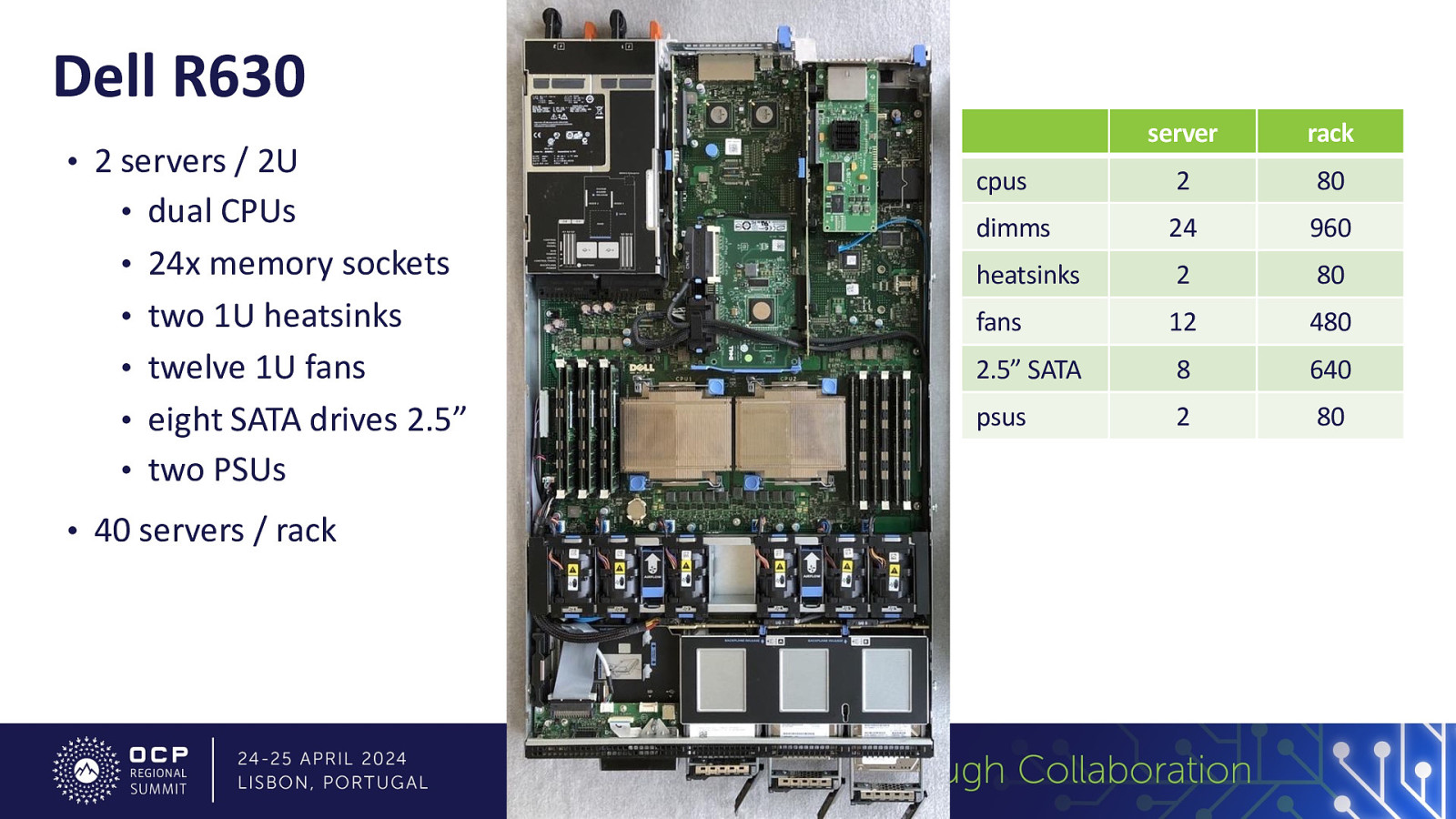

Dell R630

| Component | Per server | Per rack |
|---|---|---|
| CPUs | 2 | 80 |
| DIMMs | 24 | 960 |
| Heatsinks (1U) | 2 | 80 |
| Fans (1U) | 12 | 480 |
| 2.5” SATA drives | 8 | 640 |
| PSUs | 2 | 80 |

• 2 servers / 2U
• dual CPUs
• 24x memory sockets
• two 1U heatsinks
• twelve 1U fans
• eight 2.5” SATA drives
• two PSUs
• 40 servers / rack

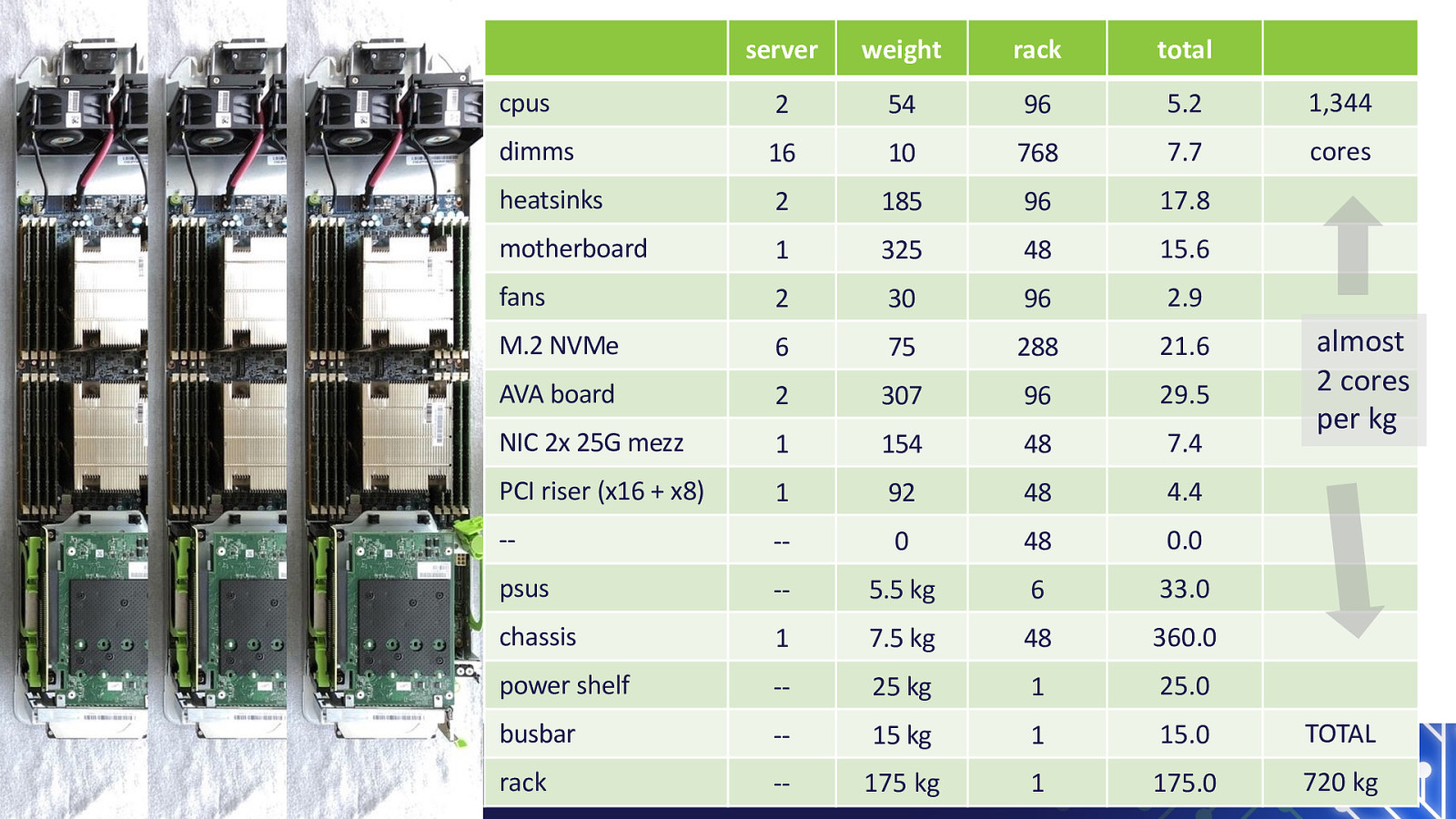

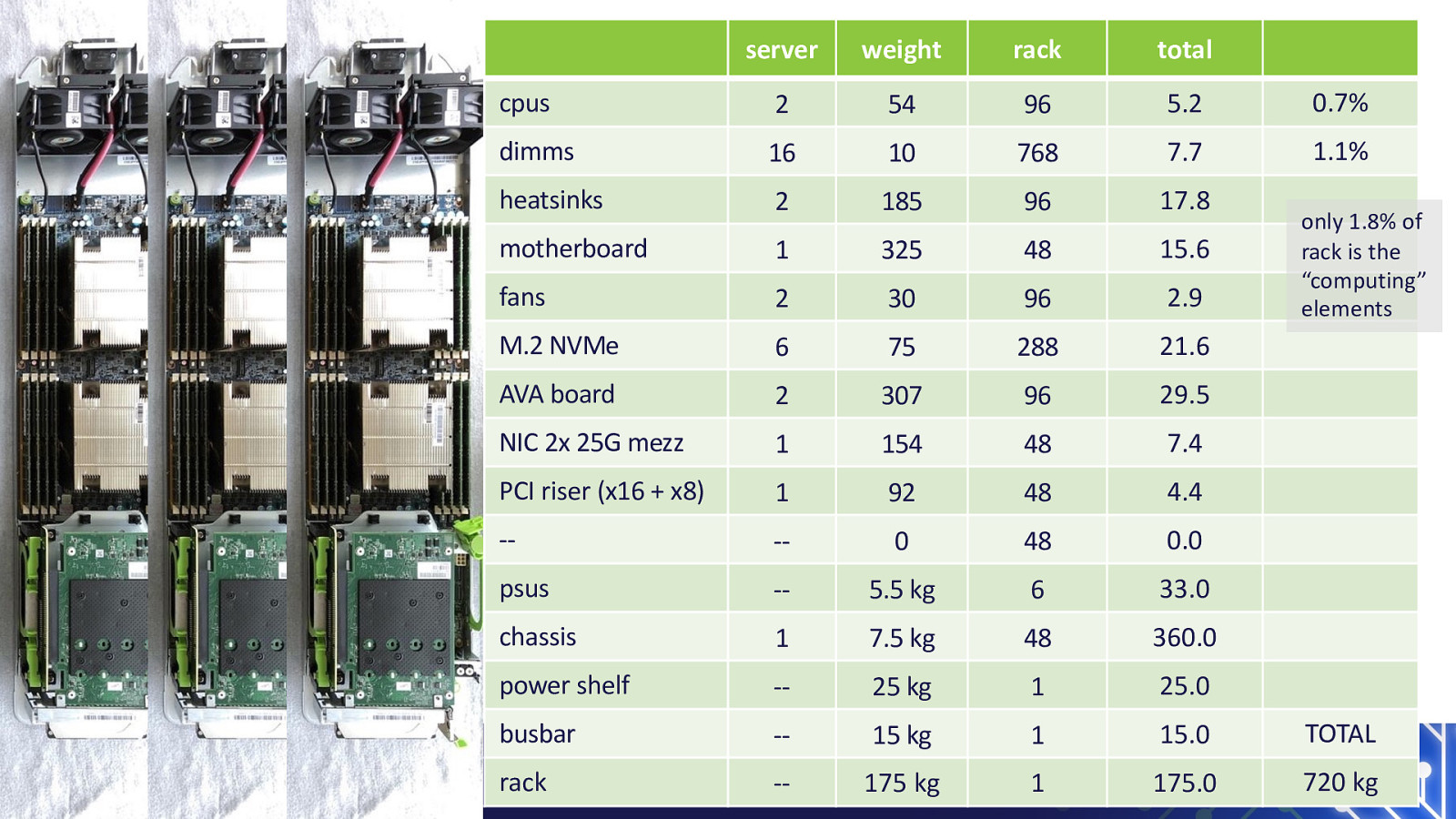

OCP Leopard
• 3 servers / 2OU
• dual CPUs
• 16x memory sockets
• shared PSUs
• 48 servers / rack
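The per-rack counts follow from multiplying each per-server count by the number of servers in a rack. A minimal Python sketch for the CPUs, memory sockets, and heatsinks listed for both designs, using the servers-per-rack figures from these slides (`ServerDesign` and `per_rack` are hypothetical names for illustration, not from any tool):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerDesign:
    """Hypothetical record of one server design and its per-server component counts."""
    name: str
    servers_per_rack: int
    per_server: dict  # component name -> count per server

    def per_rack(self) -> dict:
        """Multiply each per-server count by the number of servers in one rack."""
        return {part: n * self.servers_per_rack for part, n in self.per_server.items()}


# Counts as listed on the design-footprint slides.
R630 = ServerDesign("Dell R630", 40, {"cpus": 2, "memory_sockets": 24, "heatsinks": 2})
LEOPARD = ServerDesign("OCP Leopard", 48, {"cpus": 2, "memory_sockets": 16, "heatsinks": 2})

if __name__ == "__main__":
    r630, leopard = R630.per_rack(), LEOPARD.per_rack()
    print(f"{'component':15s} {R630.name:>10s} {LEOPARD.name:>12s}")
    for part in r630:
        print(f"{part:15s} {r630[part]:10d} {leopard[part]:12d}")
```

With these inputs the Leopard rack holds more CPUs (96 vs. 80) with fewer memory sockets (768 vs. 960).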

materials footprints

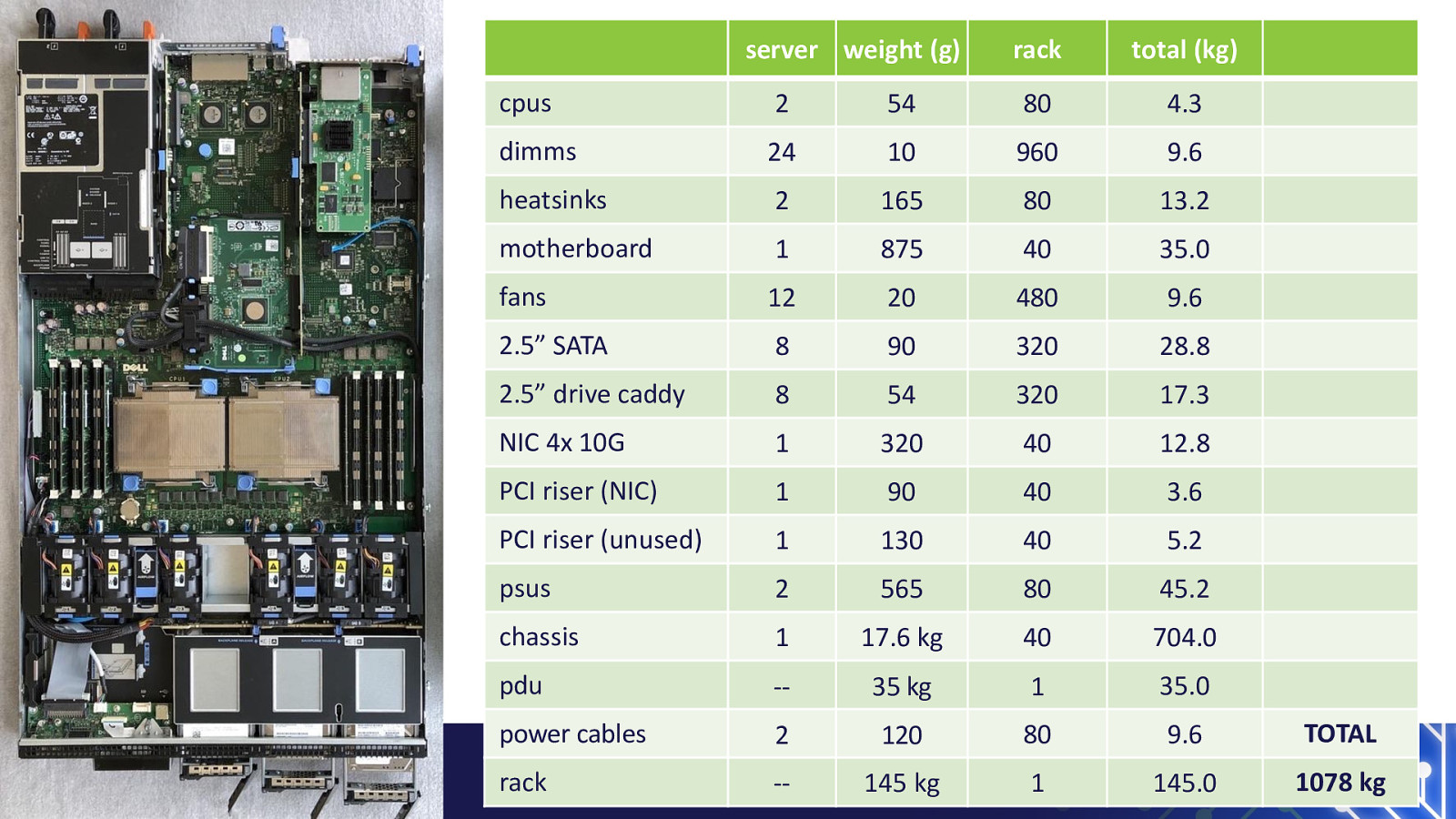

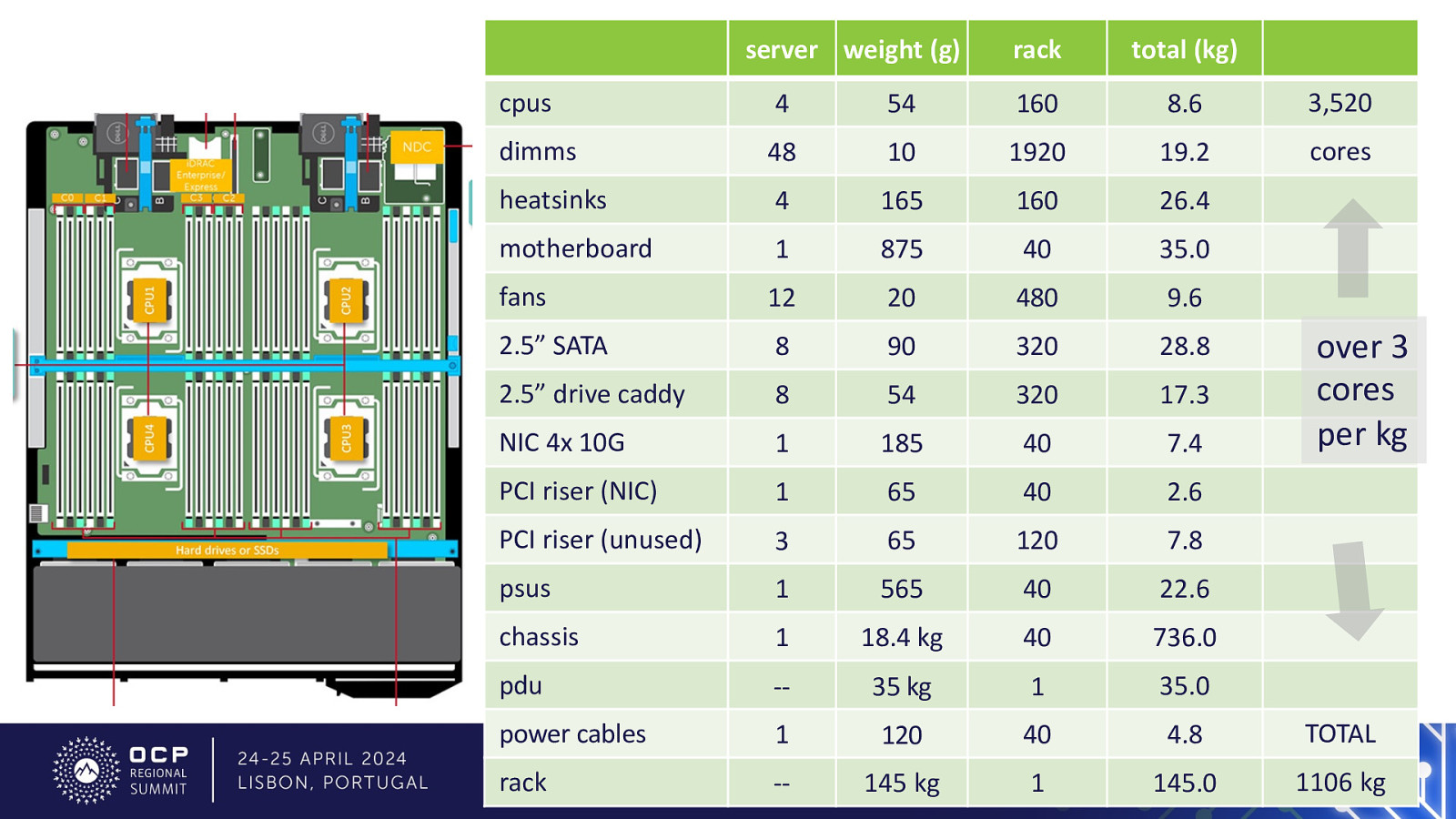

Dell R630 rack: rack frame 145 kg (1 × 145.0 kg); fully loaded rack 1078 kg

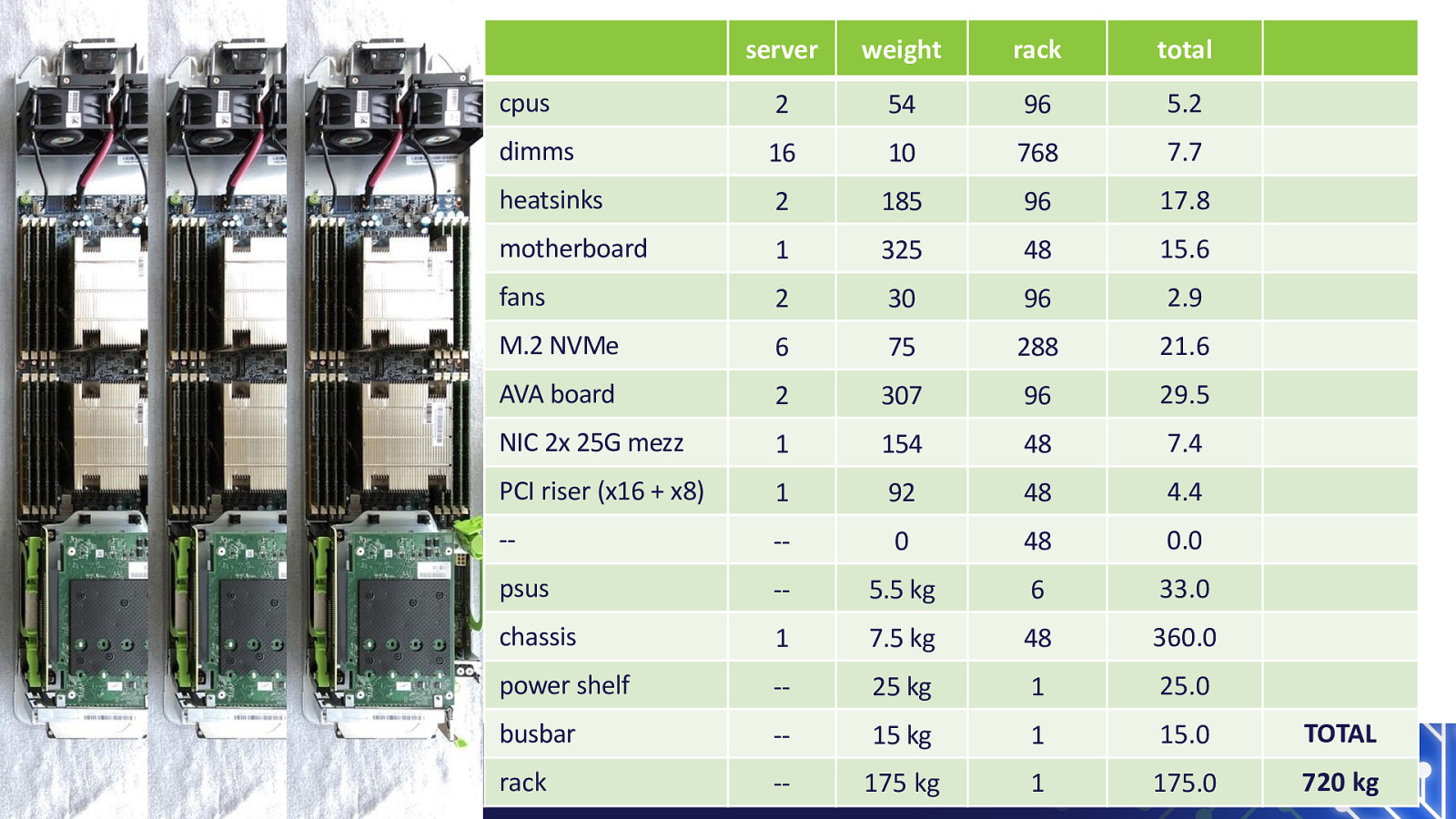

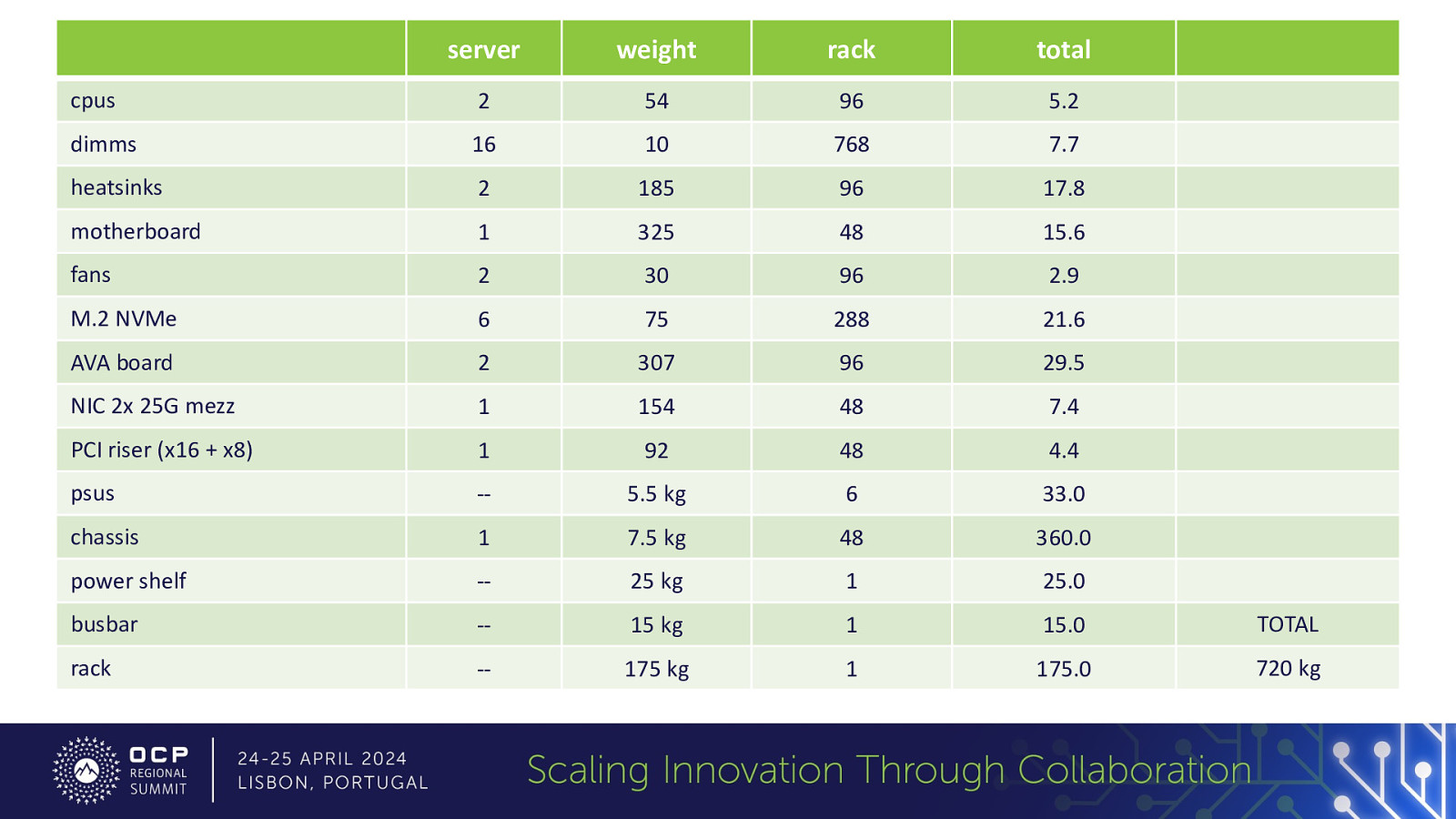

OCP Leopard rack: rack frame 175 kg (1 × 175.0 kg); fully loaded rack 720 kg

Dell R630 rack (1078 kg total): just over 1 core per kg; only 1.3% of the rack's weight is the “computing” elements

175 kg 1 175.0 720 kg almost 2 cores per kg

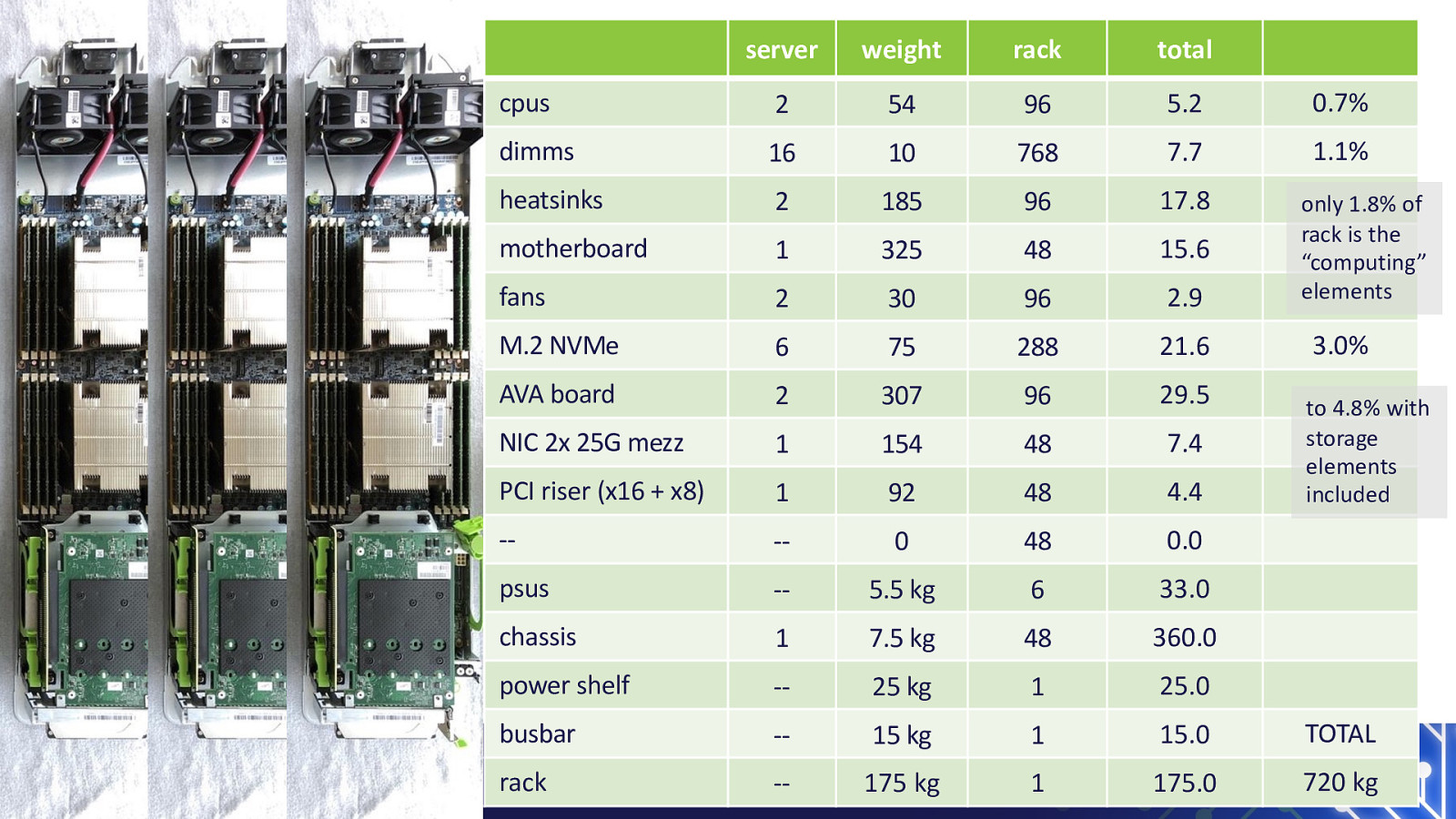

175 kg 1 175.0 720 kg only 1.8% of rack is the “computing” elements

175 kg 1 175.0 720 kg only 1.8% of rack is the “computing” elements 3.0% to 4.8% with storage elements included
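The “cores per kg” and “computing share” figures can be re-derived from the per-unit weights on the reuse slides (CPU 54 g, DIMM 10 g, M.2 drive 75 g). A rough Python sketch, assuming the 1078 kg rack is the Dell R630 rack (80 CPUs, 960 DIMMs) and the 720 kg rack is the OCP Leopard rack (96 CPUs, 768 DIMMs, 288 M.2 drives), and assuming 14 cores per CPU in both (1,344 cores / 96 CPUs, as listed for the Leopard rack). The R630 part weights and core count are assumptions, not slide data; the function names are hypothetical:

```python
# Per-unit weights in kg, from the reuse slides (CPU 54 g, DIMM 10 g, M.2 75 g).
CPU_KG = 0.054
DIMM_KG = 0.010
M2_KG = 0.075

# Assumption: 14 cores per CPU (1,344 cores / 96 CPUs in the Leopard rack),
# applied to the R630 rack as well.
CORES_PER_CPU = 14


def computing_share_pct(cpus: int, dimms: int, rack_kg: float, m2_drives: int = 0) -> float:
    """Percent of total rack weight made up of CPUs + DIMMs (+ optional M.2 drives)."""
    computing_kg = cpus * CPU_KG + dimms * DIMM_KG + m2_drives * M2_KG
    return 100.0 * computing_kg / rack_kg


def cores_per_kg(cpus: int, rack_kg: float) -> float:
    """Total CPU cores divided by total loaded rack weight."""
    return cpus * CORES_PER_CPU / rack_kg


if __name__ == "__main__":
    # Assumed mapping: 1078 kg rack = Dell R630, 720 kg rack = OCP Leopard.
    print(f"R630:    {cores_per_kg(80, 1078):.2f} cores/kg, "
          f"{computing_share_pct(80, 960, 1078):.1f}% computing")
    print(f"Leopard: {cores_per_kg(96, 720):.2f} cores/kg, "
          f"{computing_share_pct(96, 768, 720):.1f}% computing, "
          f"{computing_share_pct(96, 768, 720, m2_drives=288):.1f}% with M.2")
```

Under these assumptions the sketch gives about 1.3% and 1.8% for the two racks, and about 4.8% for the Leopard rack with its M.2 drives, in line with the slide figures.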

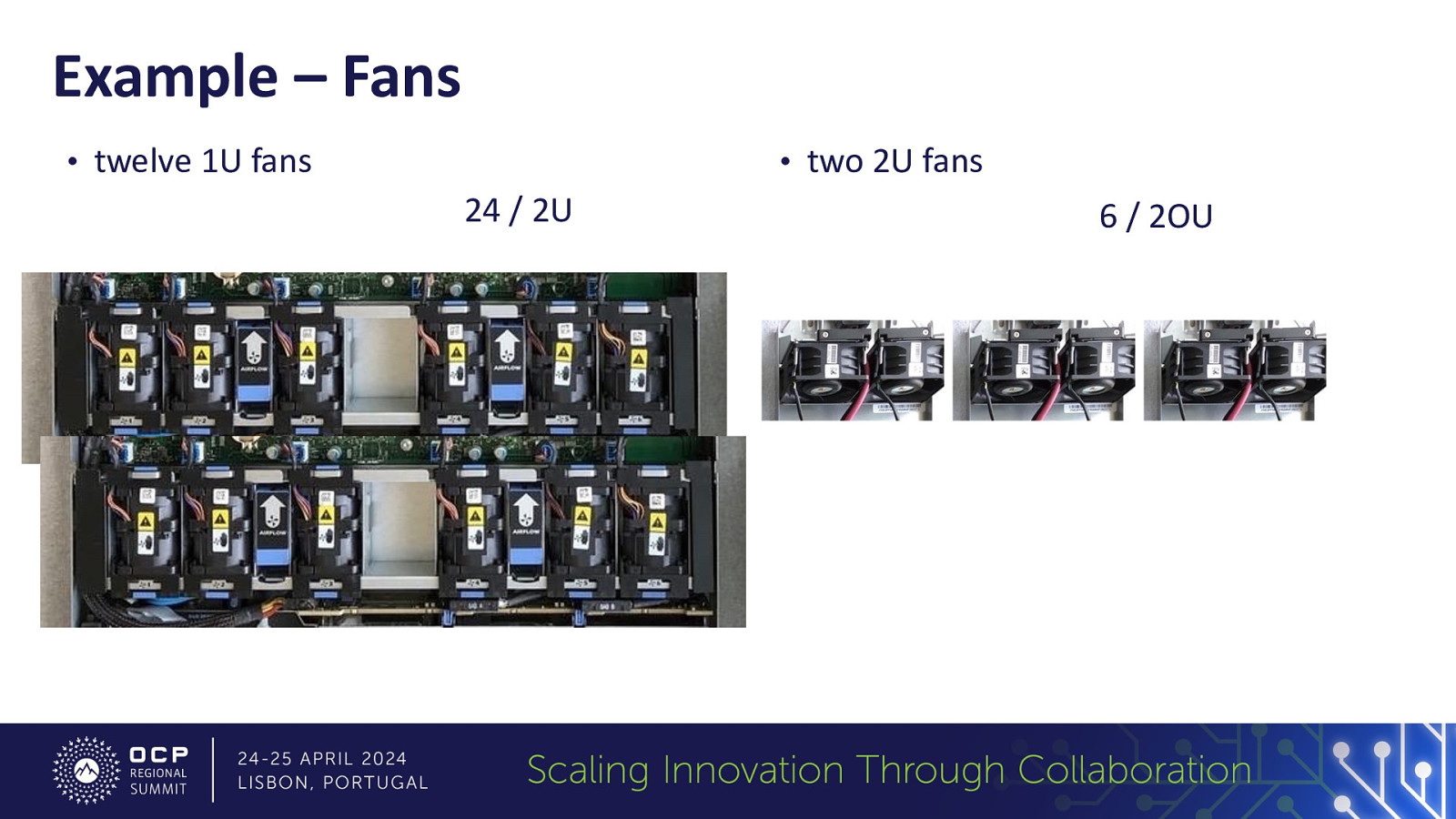

Example – Fans
• Dell R630: twelve 1U fans per server, 24 / 2U
• OCP Leopard: two 2U fans per server, 6 / 2OU
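The fan counts scale the same way, per enclosure and per rack. A small Python sketch using the per-server fan counts and the servers-per-rack figures from the design slides (`fan_count` is a hypothetical helper):

```python
def fan_count(fans_per_server: int, servers: int) -> int:
    """Total fans for a group of servers (one enclosure or a whole rack)."""
    return fans_per_server * servers


# Per enclosure: Dell R630 = 2 servers per 2U, OCP Leopard = 3 servers per 2OU.
r630_2u = fan_count(12, 2)
leopard_2ou = fan_count(2, 3)

# Per rack: 40 R630 servers vs. 48 Leopard servers.
r630_rack = fan_count(12, 40)
leopard_rack = fan_count(2, 48)

print(r630_2u, leopard_2ou)        # 24 6
print(r630_rack, leopard_rack)     # 480 96
print(r630_rack / leopard_rack)    # 5.0
```

Fewer, larger fans means 5x fewer fans per rack, even though the Leopard rack holds more servers.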

…but wait, there’s more… (even denser server options)

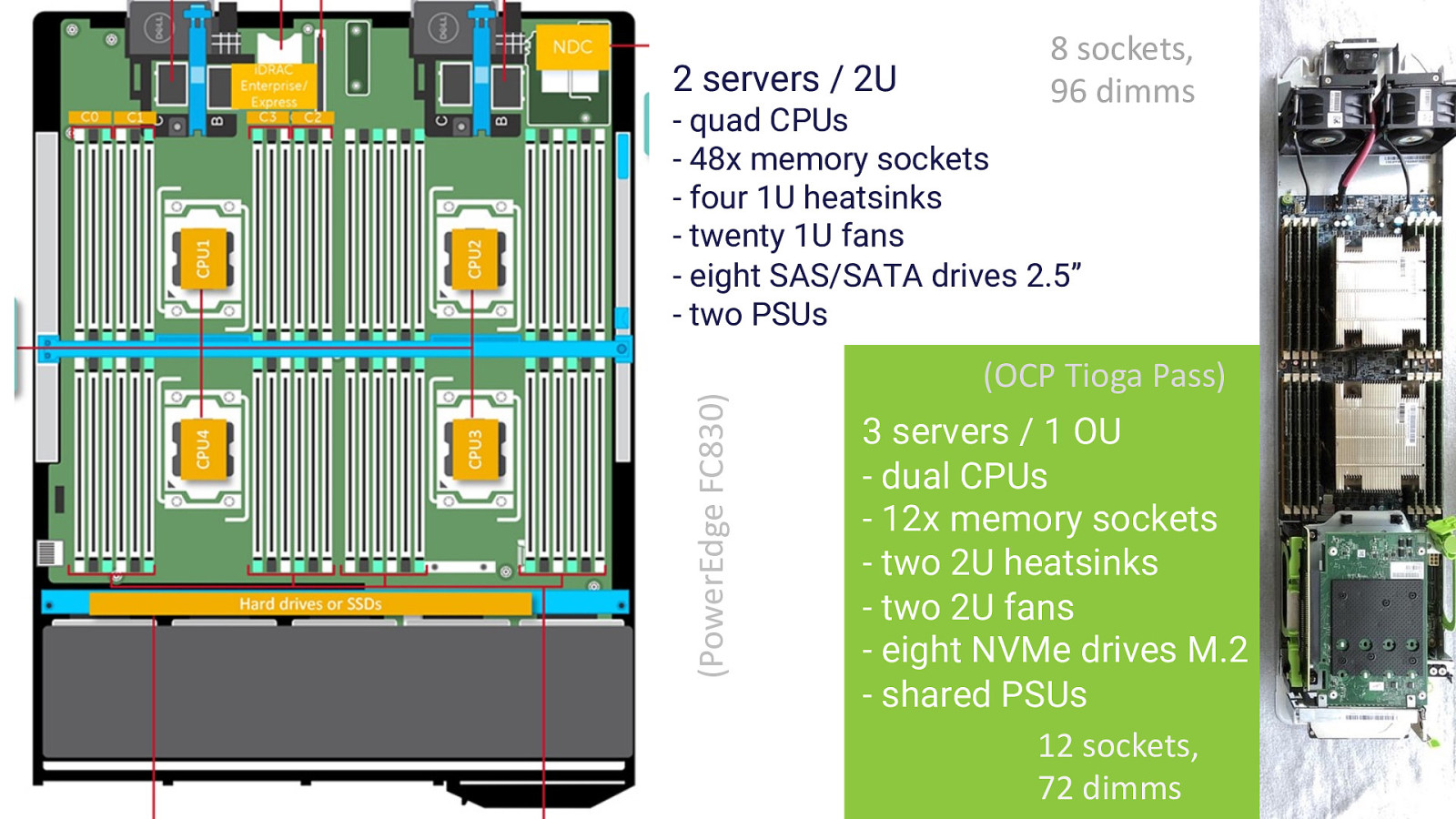

PowerEdge FC830: 2 servers / 2U, 8 sockets, 96 DIMMs

PowerEdge FC830 rack: rack frame 145 kg (1 × 145.0 kg); fully loaded rack 1106 kg; over 3 cores per kg

materials reuse


REUSE (MEMORY COMPONENTS)

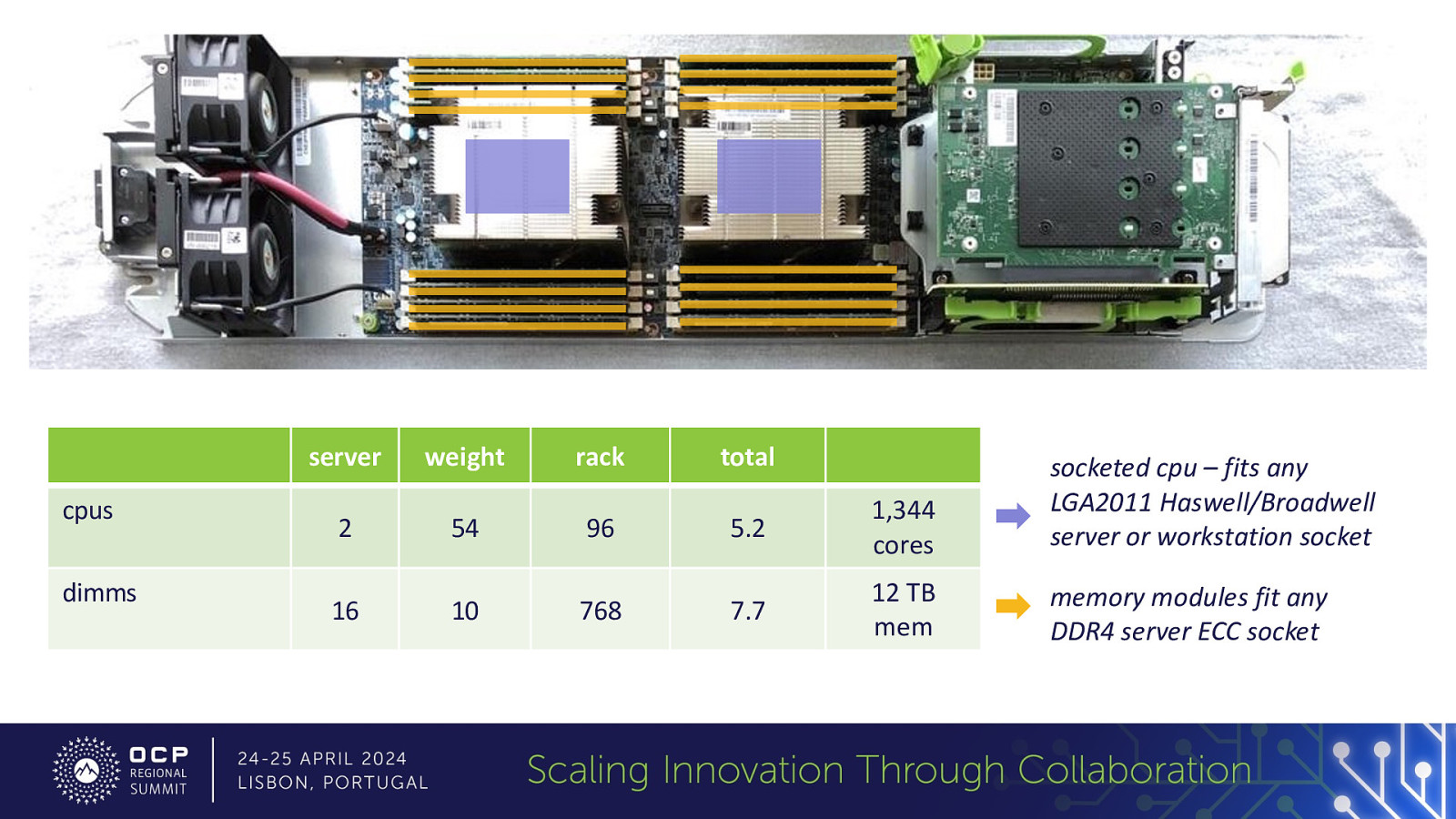

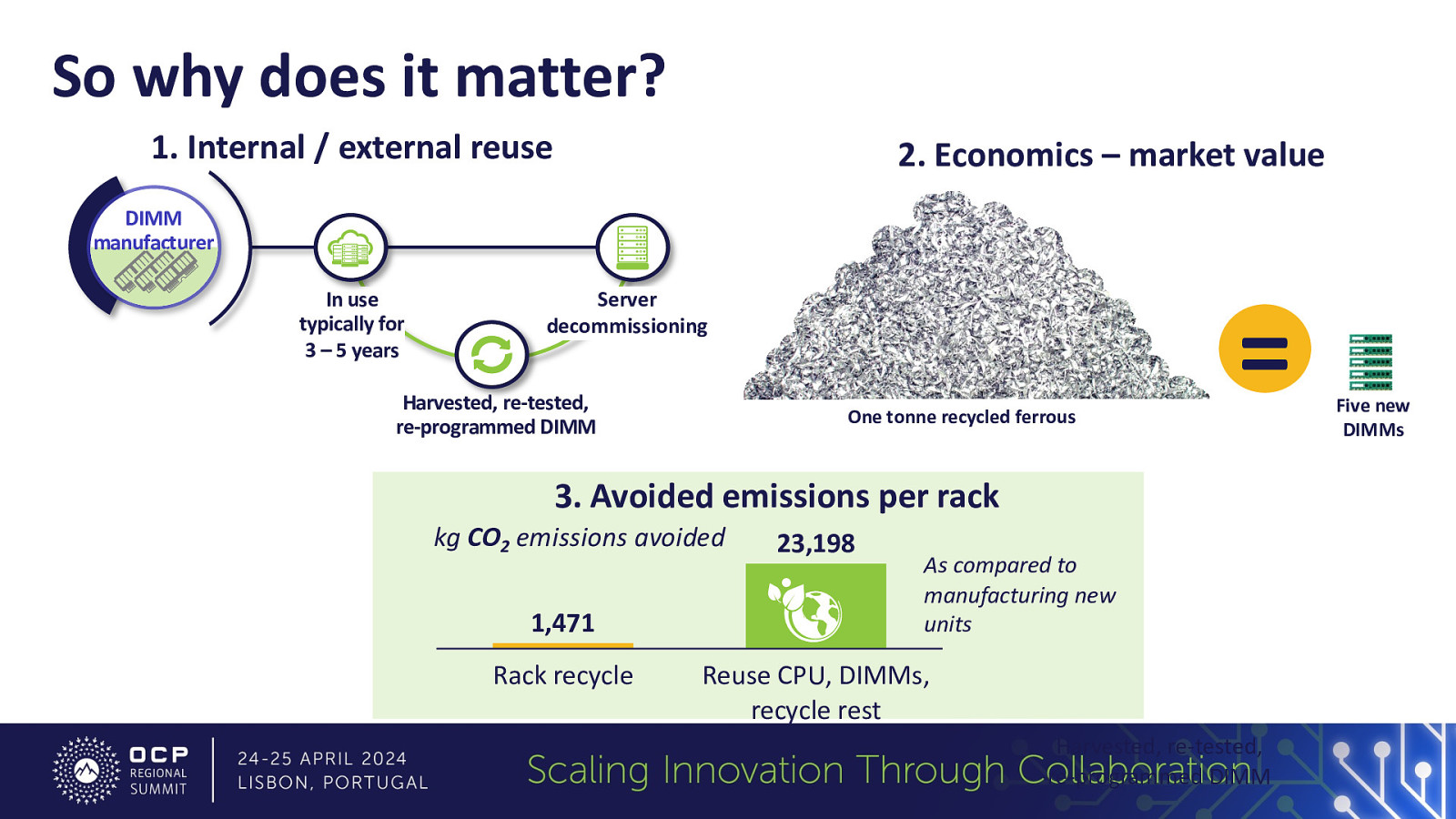

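| Component | Per server | Weight each (g) | Rack total | Rack weight (kg) | Notes |
|---|---|---|---|---|---|
| CPUs | 2 | 54 | 96 | 5.2 | 1,344 cores; socketed CPU fits any LGA2011 Haswell/Broadwell server or workstation socket |
| DIMMs | 16 | 10 | 768 | 7.7 | 12 TB memory; modules fit any DDR4 ECC server socket |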

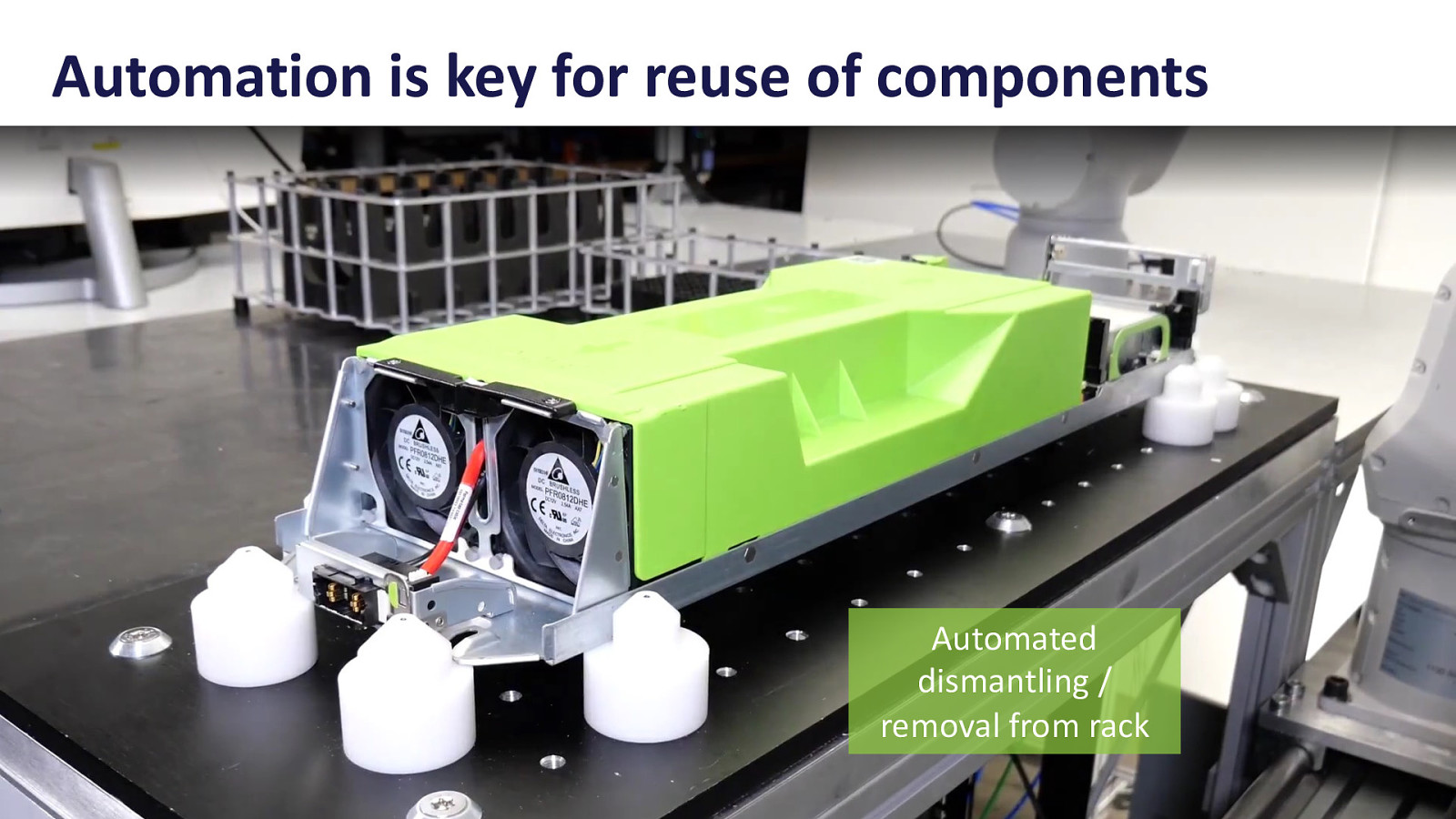

Automation is key for reuse of components: automated dismantling / removal from rack

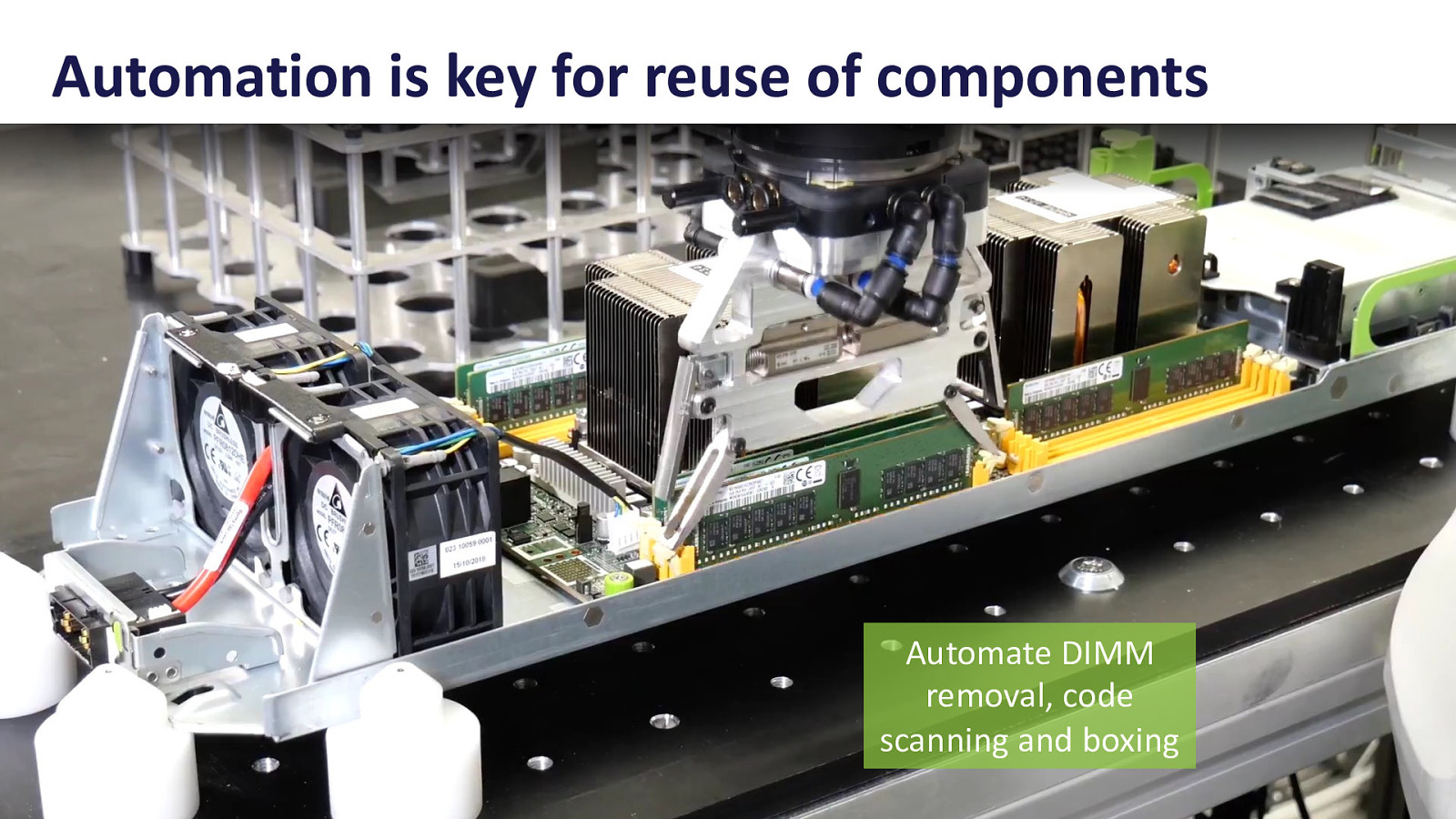

Automated DIMM removal, code scanning, and boxing

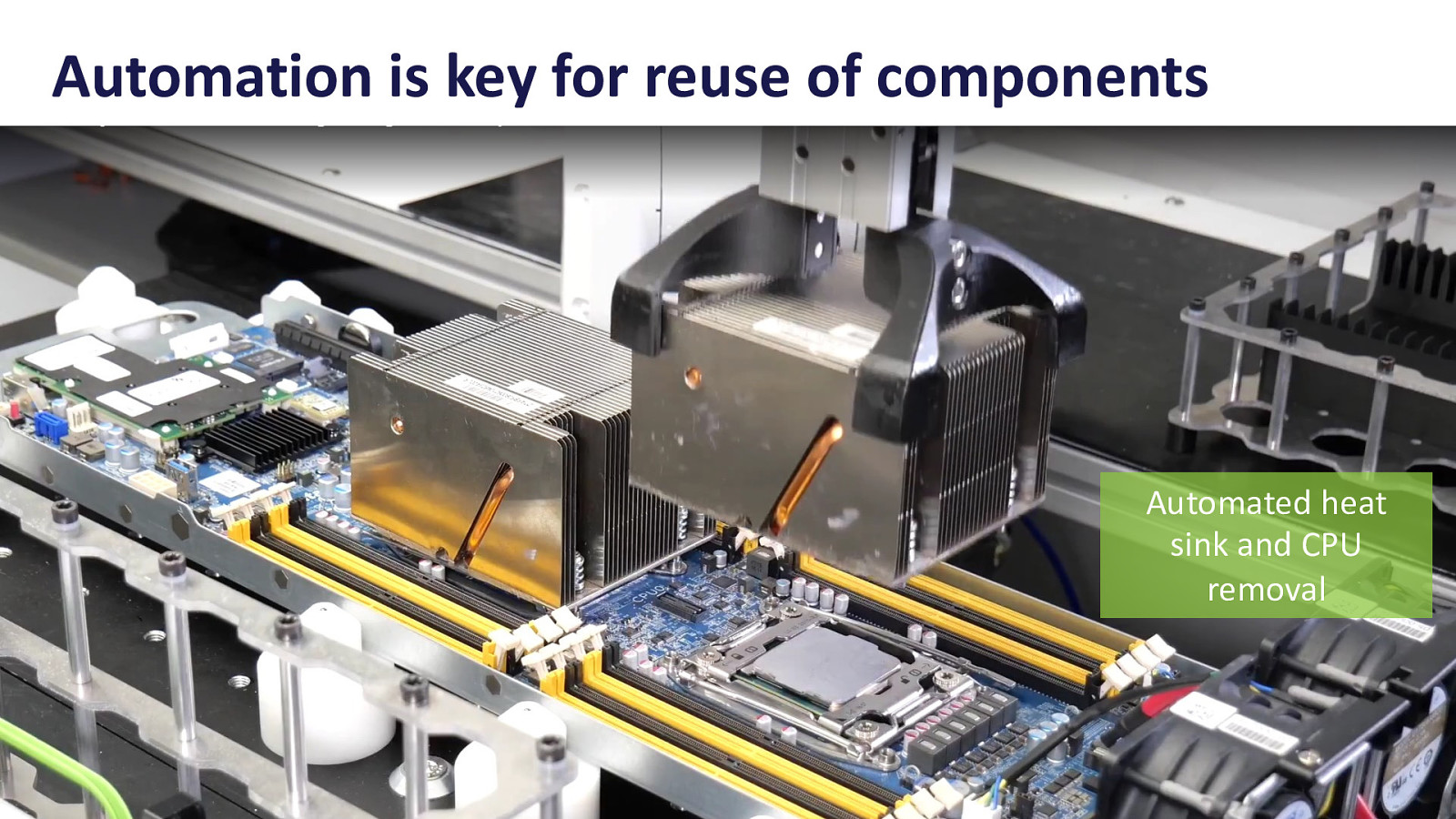

Automated heat sink and CPU removal

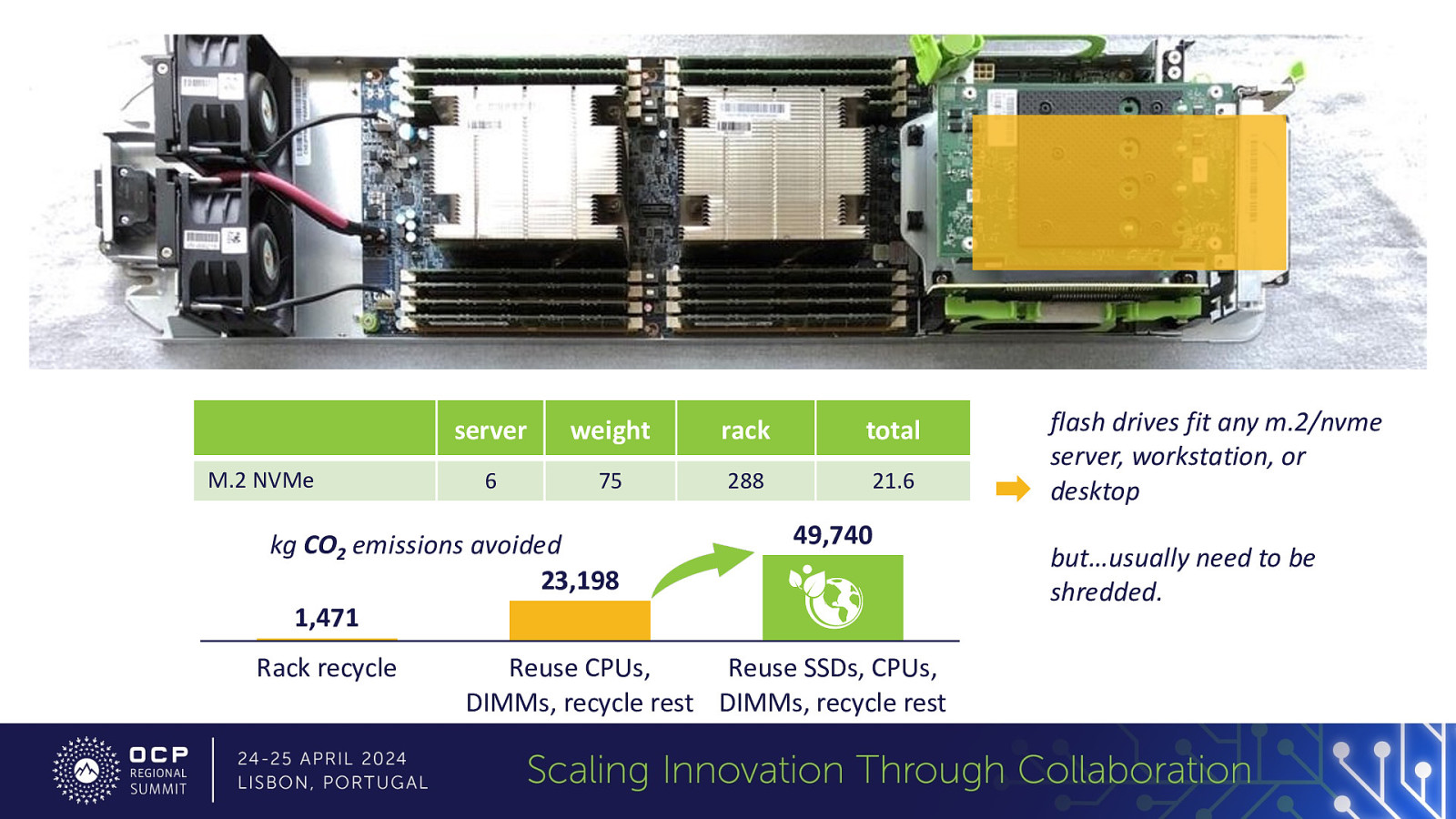

REUSE / SHRED (STORAGE COMPONENTS)

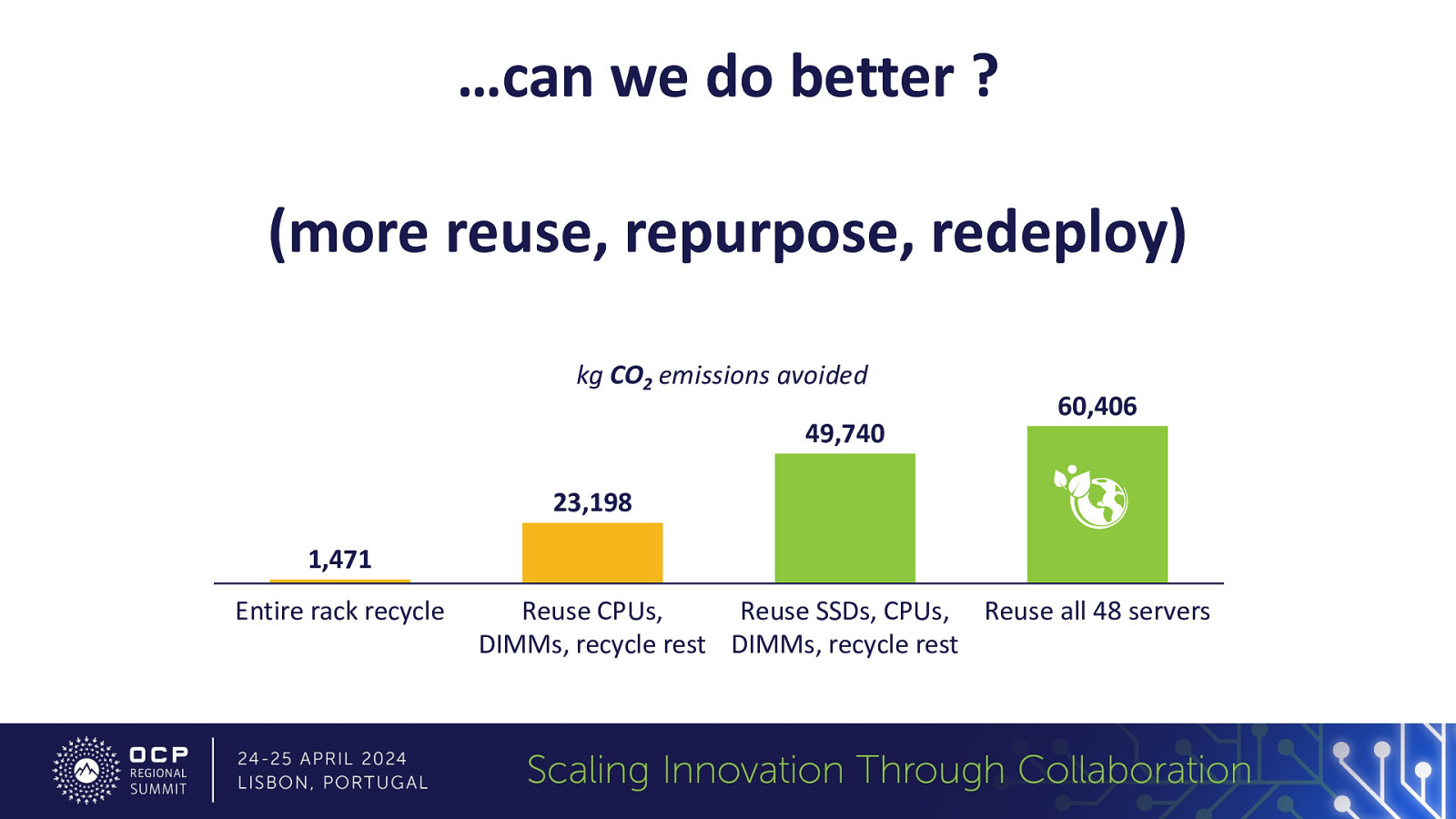

| Component | Per server | Weight each (g) | Rack total | Rack weight (kg) | Notes |
|---|---|---|---|---|---|
| M.2 NVMe | 6 | 75 | 288 | 21.6 | flash drives fit any M.2/NVMe server, workstation, or desktop, but… usually need to be shredded |

kg CO2 emissions avoided:
• Rack recycle: 1,471
• Reuse CPUs, DIMMs, recycle rest: 23,198
• Reuse SSDs, CPUs, DIMMs, recycle rest: 49,740

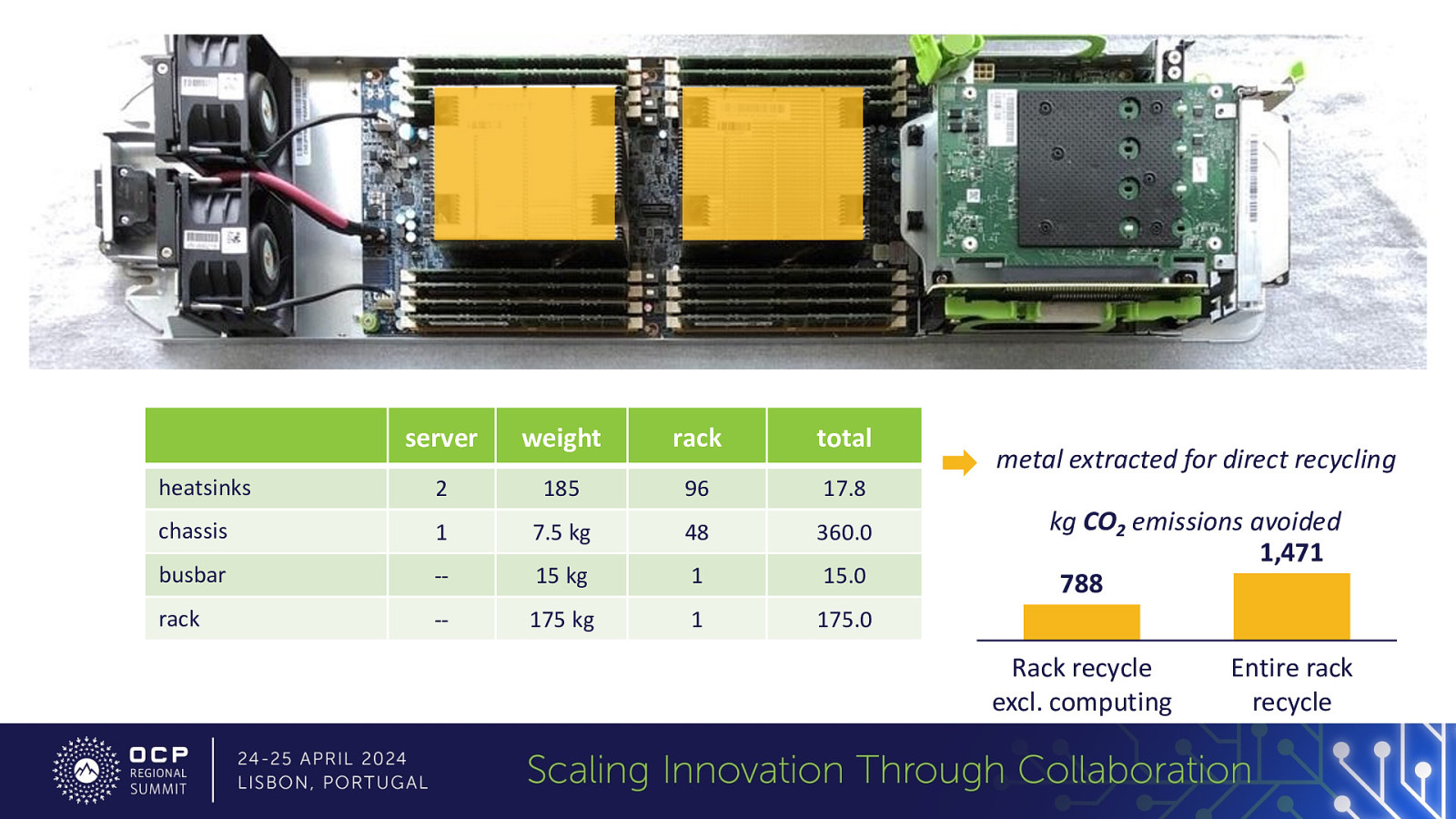

RECYCLE (METAL COMPONENTS)

Rack frame: 175 kg (1 × 175.0 kg) of metal extracted for direct recycling

kg CO2 emissions avoided:
• Rack recycle, excl. computing: 788
• Entire rack recycle: 1,471

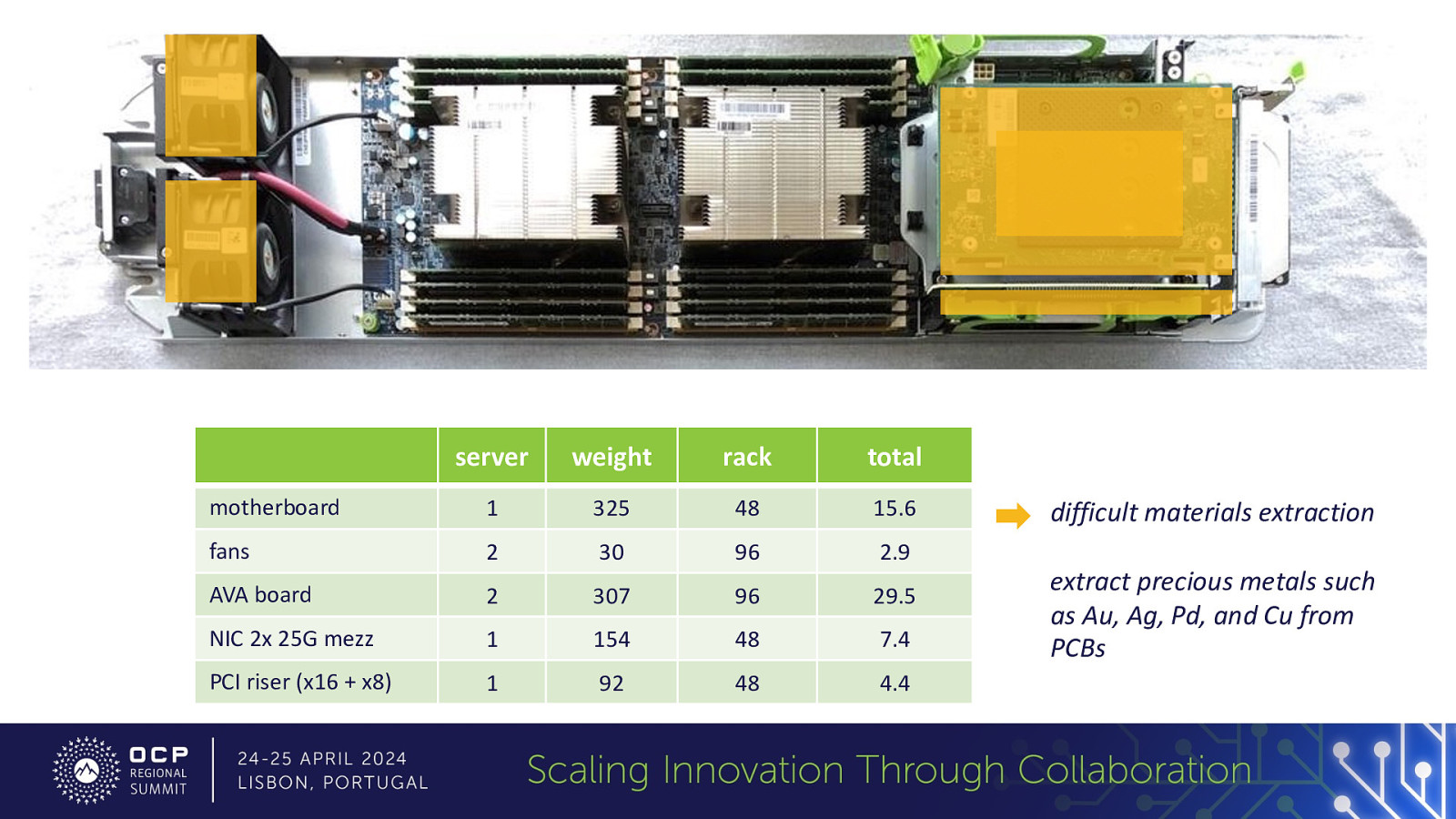

RECYCLE / REUSE (SYSTEMS COMPONENTS)

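| Component | Per server | Weight each (g) | Rack total | Rack weight (kg) |
|---|---|---|---|---|
| Motherboard | 1 | 325 | 48 | 15.6 |
| Fans | 2 | 30 | 96 | 2.9 |
| AVA board | 2 | 307 | 96 | 29.5 |
| NIC 2x 25G mezz | 1 | 154 | 48 | 7.4 |
| PCI riser (x16 + x8) | 1 | 92 | 48 | 4.4 |

Difficult materials extraction: extract precious metals such as Au, Ag, Pd, and Cu from PCBs.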
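Each rack total in the reuse and recycle tables is the per-server count × servers per rack, and each mass is that count × the per-unit weight. A minimal Python sketch over the 48-server rack, assuming the per-unit weights are in grams (the slides give kg only for the totals); `Part` and `rack_totals` are hypothetical names:

```python
from typing import NamedTuple


class Part(NamedTuple):
    name: str
    per_server: int
    grams_each: float


SERVERS_PER_RACK = 48

# Rows from the reuse / recycle tables above.
PARTS = [
    Part("CPU", 2, 54),
    Part("DIMM", 16, 10),
    Part("M.2 NVMe", 6, 75),
    Part("motherboard", 1, 325),
    Part("fan", 2, 30),
    Part("AVA board", 2, 307),
    Part("NIC 2x 25G mezz", 1, 154),
    Part("PCI riser (x16 + x8)", 1, 92),
]


def rack_totals(parts, servers):
    """Return {name: (count per rack, mass per rack in kg)} for each component."""
    return {
        p.name: (p.per_server * servers, p.per_server * servers * p.grams_each / 1000)
        for p in parts
    }


if __name__ == "__main__":
    totals = rack_totals(PARTS, SERVERS_PER_RACK)
    for name, (count, kg) in totals.items():
        print(f"{name:22s} {count:4d} {kg:6.1f} kg")
    print(f"{'total':22s} {sum(kg for _, kg in totals.values()):11.1f} kg")
```

Summing the rows gives roughly 94 kg of listed components per rack, with the AVA boards and M.2 drives as the heaviest items.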

…can we do better? (more reuse, repurpose, redeploy)

kg CO2 emissions avoided:
• Entire rack recycle: 1,471
• Reuse CPUs, DIMMs, recycle rest: 23,198
• Reuse SSDs, CPUs, DIMMs, recycle rest: 49,740
• Reuse all 48 servers: 60,406
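One way to read the chart is relative to the recycle-only baseline and per server. A Python sketch, assuming each value pairs with its label in order of increasing reuse (our reading of the flattened chart); `compare` is a hypothetical helper:

```python
SERVERS_PER_RACK = 48

# kg CO2 emissions avoided per rack; the label/value pairing is our reading of the chart.
SCENARIOS = {
    "Entire rack recycle": 1_471,
    "Reuse CPUs, DIMMs, recycle rest": 23_198,
    "Reuse SSDs, CPUs, DIMMs, recycle rest": 49_740,
    "Reuse all 48 servers": 60_406,
}


def compare(scenarios: dict, baseline: str, servers: int) -> dict:
    """Return {scenario: (multiple of the baseline, kg CO2 avoided per server)}."""
    base = scenarios[baseline]
    return {name: (kg / base, kg / servers) for name, kg in scenarios.items()}


if __name__ == "__main__":
    results = compare(SCENARIOS, "Entire rack recycle", SERVERS_PER_RACK)
    for name, (ratio, per_server) in results.items():
        print(f"{name:40s} {ratio:5.1f}x {per_server:8.1f} kg/server")
```

Under that reading, reusing all 48 servers avoids about 41x the CO2 of recycling the entire rack, or roughly 1,258 kg per server.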

…additional details… (higher density design optimization)

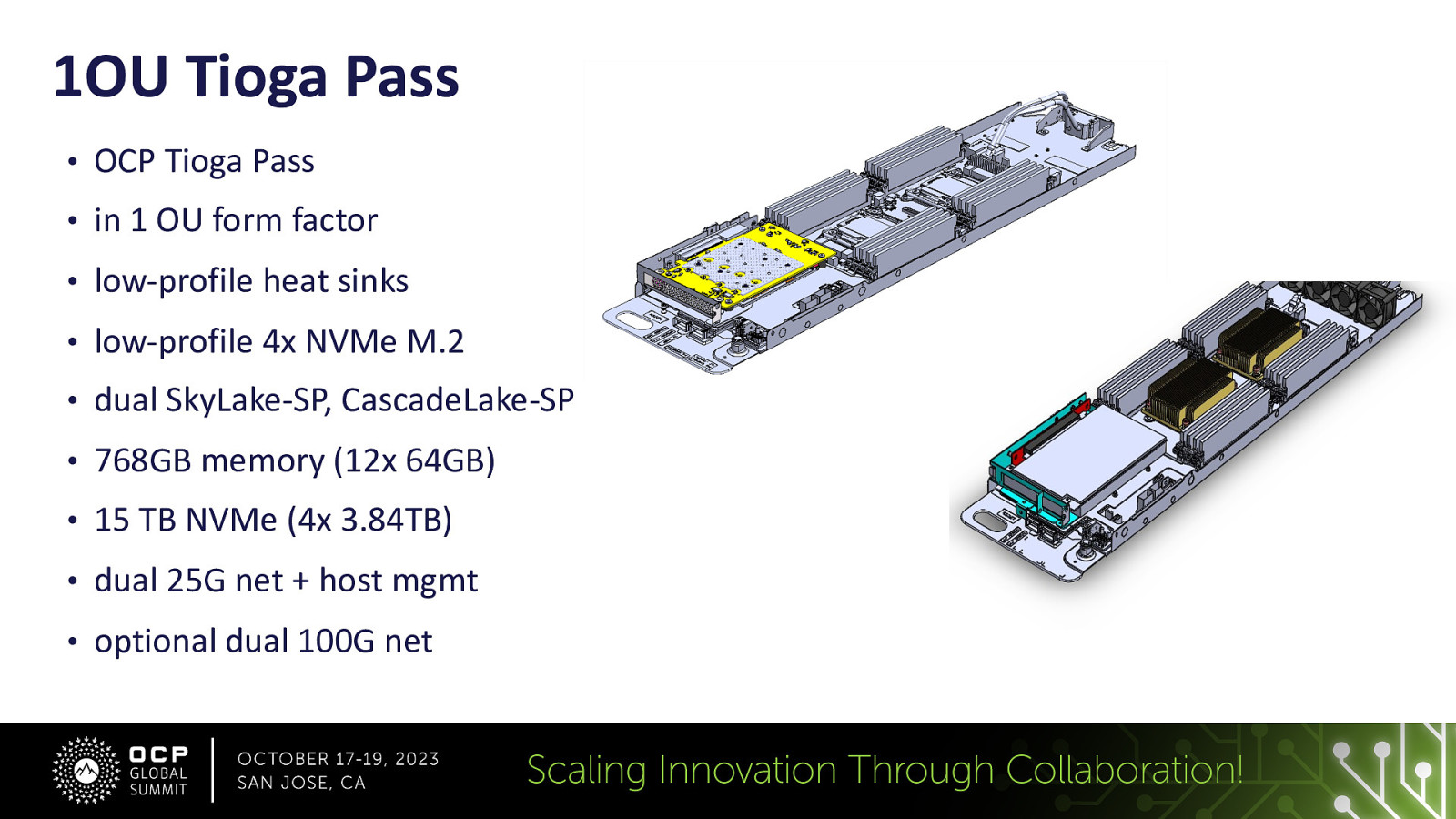

1OU Tioga Pass
• OCP Tioga Pass in 1OU form factor
• low-profile heat sinks
• low-profile 4x NVMe M.2
• dual Skylake-SP, Cascade Lake-SP
• 768 GB memory (12x 64 GB)
• 15 TB NVMe (4x 3.84 TB)
• dual 25G net + host mgmt
• optional dual 100G net


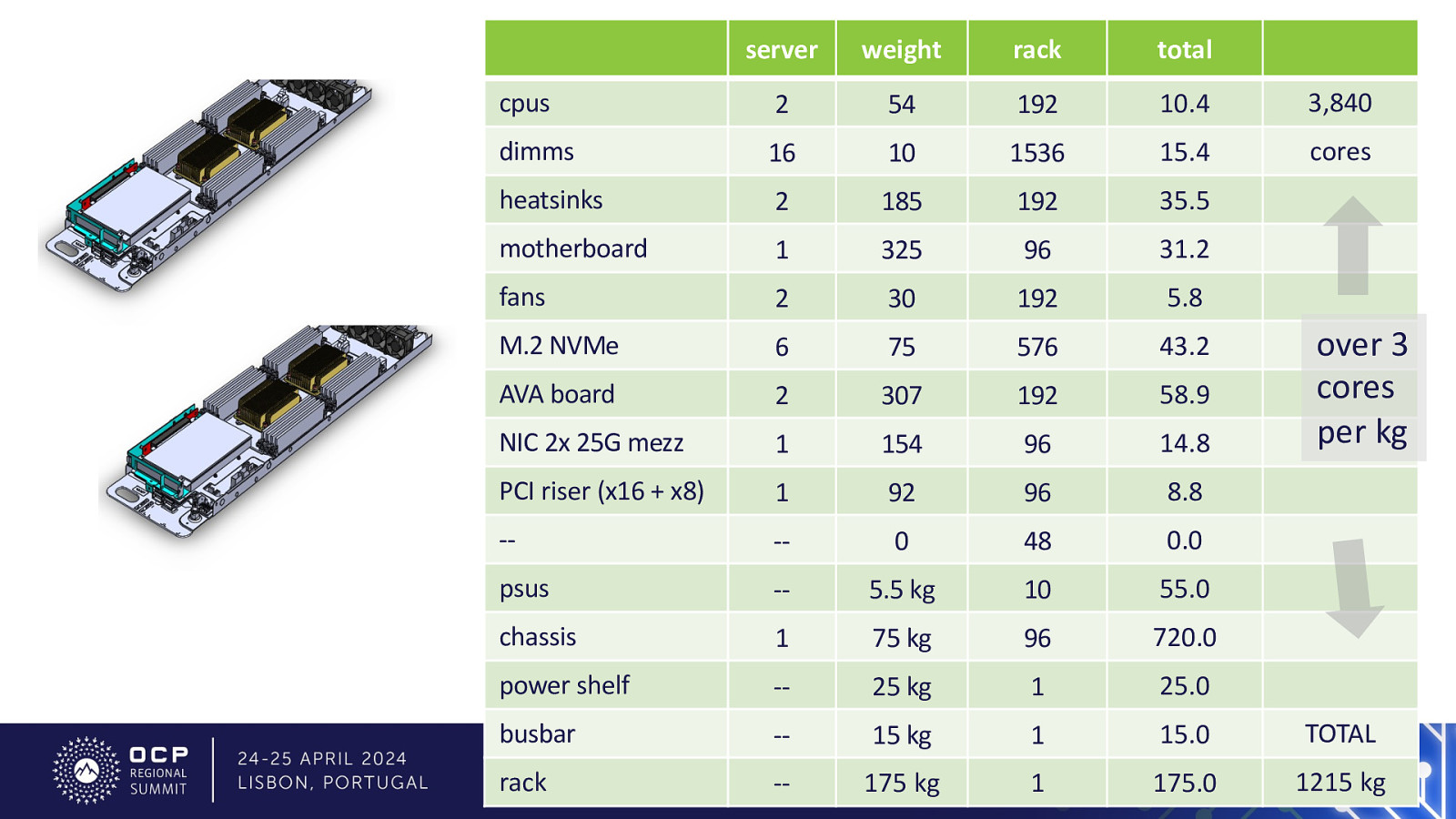

OCP Tioga Pass (1OU) rack: rack frame 175 kg (1 × 175.0 kg); fully loaded rack 1215 kg; over 3 cores per kg

workload / carbon

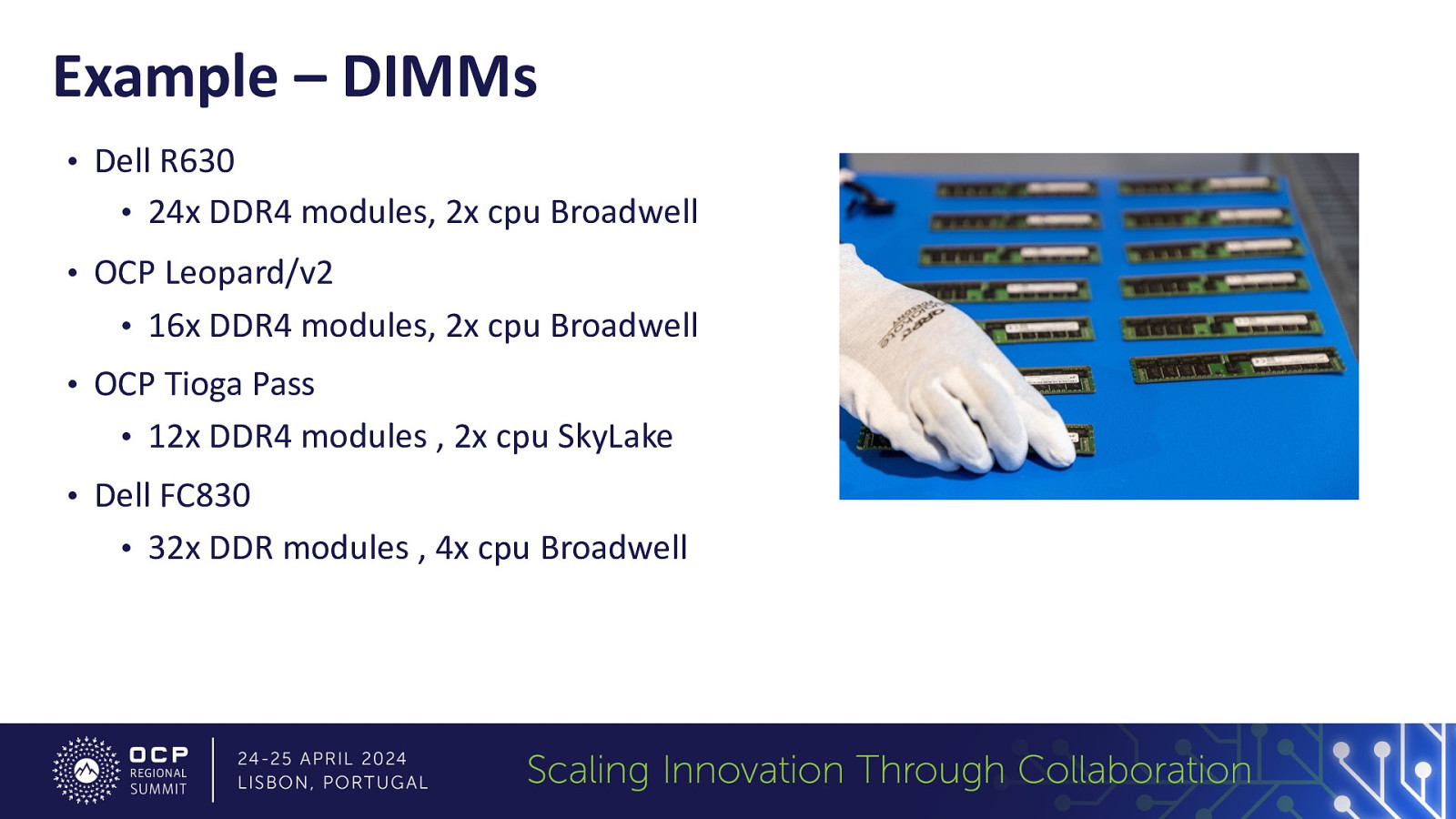

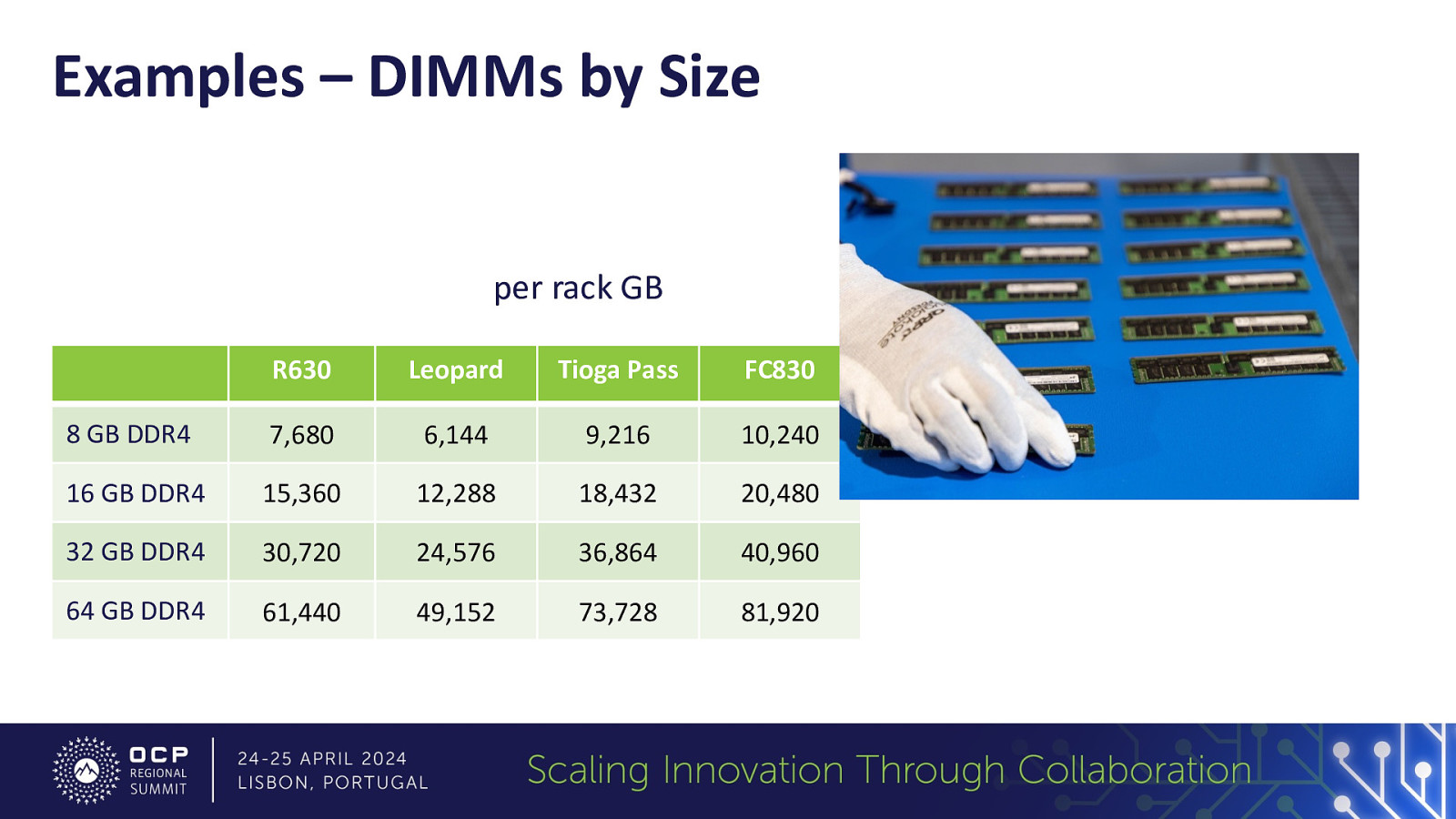

Example – DIMMs
• Dell R630: 24x DDR4 modules, 2x Broadwell CPUs
• OCP Leopard/v2: 16x DDR4 modules, 2x Broadwell CPUs
• OCP Tioga Pass: 12x DDR4 modules, 2x Skylake CPUs
• Dell FC830: 32x DDR4 modules, 4x Broadwell CPUs

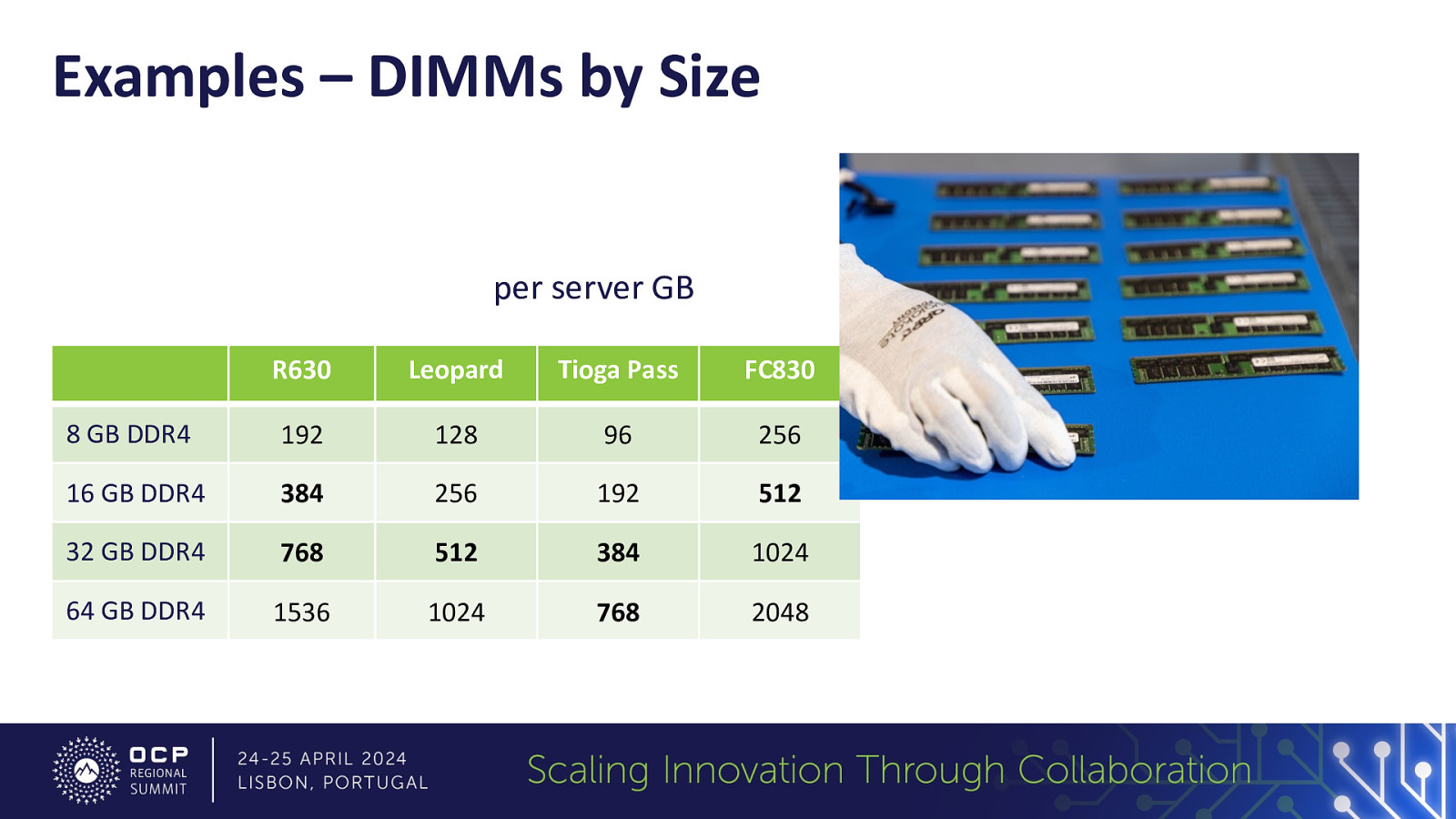

Examples – DIMMs by Size, per server (GB)

| DIMM size | R630 | Leopard | Tioga Pass | FC830 |
|---|---|---|---|---|
| 8 GB DDR4 | 192 | 128 | 96 | 256 |
| 16 GB DDR4 | 384 | 256 | 192 | 512 |
| 32 GB DDR4 | 768 | 512 | 384 | 1024 |
| 64 GB DDR4 | 1536 | 1024 | 768 | 2048 |

Examples – DIMMs by Size, per rack (GB)

| DIMM size | R630 | Leopard | Tioga Pass | FC830 |
|---|---|---|---|---|
| 8 GB DDR4 | 7,680 | 6,144 | 9,216 | 10,240 |
| 16 GB DDR4 | 15,360 | 12,288 | 18,432 | 20,480 |
| 32 GB DDR4 | 30,720 | 24,576 | 36,864 | 40,960 |
| 64 GB DDR4 | 61,440 | 49,152 | 73,728 | 81,920 |
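Both tables follow from modules per server × DIMM size × servers per rack. A Python sketch; the modules-per-server counts come from the DIMM example, while the servers-per-rack values (40, 48, 96, 40) are inferred by dividing each per-rack column by the matching per-server column. `memory_gb` is a hypothetical helper:

```python
# Modules per server from the DIMM example; servers per rack inferred from the two tables.
MODULES_PER_SERVER = {"R630": 24, "Leopard": 16, "Tioga Pass": 12, "FC830": 32}
SERVERS_PER_RACK = {"R630": 40, "Leopard": 48, "Tioga Pass": 96, "FC830": 40}
DIMM_SIZES_GB = (8, 16, 32, 64)


def memory_gb(system: str, dimm_gb: int) -> tuple:
    """Return (GB per server, GB per rack) when every listed module is dimm_gb in size."""
    per_server = MODULES_PER_SERVER[system] * dimm_gb
    return per_server, per_server * SERVERS_PER_RACK[system]


if __name__ == "__main__":
    systems = list(MODULES_PER_SERVER)
    print("per rack (GB)" + "".join(f"{s:>12s}" for s in systems))
    for size in DIMM_SIZES_GB:
        cells = "".join(f"{memory_gb(s, size)[1]:>12,d}" for s in systems)
        print(f"{size:2d} GB DDR4   {cells}")
```

The comparison makes the density trade-off visible: Tioga Pass has the fewest modules per server, yet its 96 servers per rack give more memory per rack than the R630 or Leopard at any DIMM size.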

See It For Yourself

Call to Action
• Reach out to us to get involved
• Engage us to evaluate / quantify your server carbon footprints: www.flaxcomputing.com • www.simslifecycle.com
• Evaluate your own servers and share the results with us: report@flaxcomputing.com
• Contribute measurements and component details (reuse stories, workload information, etc.) and tell us which components you would be most interested in: data@flaxcomputing.com • sls.sustainability@simsmm.com

Thank you!